Assessing student performance is challenging as educators must measure understanding, maintain fairness, and ensure academic integrity amid growing class sizes and diverse learning needs. Traditional tests often fall short, causing inconsistencies and heavy manual workloads. An ExamSoft AI-like assessment tool bridges these gaps by combining automation, analytics, and secure evaluation into a single, reliable system for accurate and efficient student assessment.

AI-powered assessment platforms show how technology can transform the entire testing lifecycle, from creating questions and detecting patterns to preventing misconduct and generating deep performance insights. By combining machine learning, proctoring automation, and real-time analytics, these tools help educators make faster, more accurate decisions while giving institutions stronger oversight and transparency.

In this guide, we’ll break down what it takes to develop an AI-first assessment system: the core features, the technical foundations, and the development roadmap that shapes a scalable solution. It covers the essentials behind creating a smarter, more secure testing experience.

What Is ExamSoft, the AI Assessment Tool?

ExamSoft is a secure platform for educators, institutions, and certification bodies to create, deliver, and evaluate exams efficiently. It supports offline delivery, device lockdown, customizable questions, and analytics that reveal learning outcomes and curriculum strengths. As part of Turnitin, ExamSoft provides secure testing and academic integrity tools for high-stakes and routine assessments.

It transforms assessments into data-driven insights that enhance learning outcomes and decision-making. With secure exam enforcement, performance analytics, and AI-assisted proctoring to detect unusual behavior, ExamSoft maintains integrity and supports education, certification, and licensure worldwide.

- AI-Assisted Remote Proctoring: ExamMonitor analyzes video, audio, and movement patterns to flag unusual behavior while allowing human review for integrity.

- Enhanced Integrity via Turnitin AI Reports: Integration with Turnitin provides AI writing reports to detect AI-generated or paraphrased content for deeper authenticity analysis.

- Offline Secure Delivery at Scale: Exams can be securely delivered offline with device lockdown and encryption, reducing reliance on live proctoring and ensuring integrity in low-connectivity settings.

- Flexible Modality with AI Reinforcement: Supports on-campus, remote, or hybrid exams with consistent security and data-driven insights across any instructional setting.

A. Business Model: How it Operates

ExamSoft is a secure digital assessment platform that consolidates exam creation, delivery, scoring, and analytics. It replaces fragmented workflows with a centralized system, ensuring academic integrity and providing learning insights.

- Delivers a cloud-based assessment platform that allows institutions to author, administer and analyze high-stakes exams with full control and data visibility.

- Ensures exam integrity using secure device lockdown, offline exam delivery, restricted navigation and encrypted test files to prevent cheating during assessments.

- Provides institutions with performance analytics dashboards that measure student outcomes, question reliability, curriculum alignment and accreditation readiness.

- Integrates with Turnitin’s ecosystem to support similarity checking, AI-writing detection, and authenticity verification for written assessments.

- Supports multiple testing modalities including on-campus, remote, offline and hybrid exam environments, ensuring consistent security regardless of location.

- Utilizes a centralized item bank and tagging system to help educators map questions to competencies and track longitudinal student improvement.

B. Funding & Investment Context of ExamSoft

ExamSoft’s financial and ownership journey reflects its strategic position in the education technology and assessment market rather than a typical venture-scale funding trajectory.

- ExamSoft raised approximately $7.7 million in early funding rounds, with investors including Gemini Investors, before becoming part of a larger edtech ecosystem.

- In October 2020, ExamSoft was acquired by Turnitin, integrating the platform into Turnitin’s global assessment and academic integrity portfolio. Acquisition terms were not publicly disclosed.

- The acquisition aligns with Turnitin’s goal to create a comprehensive assessment and integrity platform, which also includes Gradescope and Ouriginal.

- Turnitin was acquired by Advance Publications in 2019 for around $1.75 billion, highlighting the significance of assessment and integrity technologies, with ExamSoft playing a central role in this ecosystem.

How Does an AI Assessment Tool Work?

An AI assessment tool like ExamSoft uses advanced algorithms to simplify exam creation, grading, and analytics, ensuring accurate, secure evaluation. It combines efficiency with data insights to help institutions improve learning and maintain assessment integrity.

1. Secure Exam Creation and Configuration

Educators build assessments using an item bank with mapped outcomes and metadata. The system applies structured content tagging to ensure reliable exam assembly while maintaining alignment with competencies, difficulty levels and institutional standards.
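As a rough illustration of tag-driven exam assembly, the sketch below picks items from a bank according to a blueprint of outcome and difficulty counts. The `Item` fields, the `assemble_exam` helper, and the sample tags are hypothetical names chosen for this example; they are not ExamSoft's data model.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    item_id: str
    outcome: str      # competency or learning-outcome tag (assumed field)
    difficulty: str   # e.g. "easy", "medium", "hard" (assumed scale)


def assemble_exam(bank, blueprint, seed=0):
    """Pick items for each (outcome, difficulty) cell of a blueprint.

    blueprint maps (outcome, difficulty) -> number of items required.
    Raises ValueError if the bank cannot satisfy a cell.
    """
    rng = random.Random(seed)  # seeded so the same form can be regenerated
    exam = []
    for (outcome, difficulty), count in blueprint.items():
        pool = [i for i in bank
                if i.outcome == outcome and i.difficulty == difficulty]
        if len(pool) < count:
            raise ValueError(f"not enough items for {outcome}/{difficulty}")
        exam.extend(rng.sample(pool, count))
    return exam


bank = [
    Item("Q1", "pharmacology", "easy"),
    Item("Q2", "pharmacology", "easy"),
    Item("Q3", "pharmacology", "hard"),
    Item("Q4", "anatomy", "medium"),
    Item("Q5", "anatomy", "medium"),
]
blueprint = {
    ("pharmacology", "easy"): 1,
    ("pharmacology", "hard"): 1,
    ("anatomy", "medium"): 2,
}
exam = assemble_exam(bank, blueprint)
print([item.item_id for item in exam])
```

Failing loudly when a blueprint cell cannot be filled is a deliberate choice here: a silently short exam would no longer match the competency coverage the blueprint promises.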

2. AI-Assisted Exam Integrity & Delivery

Before delivery, the platform enforces secure testing conditions through file encryption, restricted navigation and identity validation. AI-assisted checks ensure exam sessions follow controlled protocols across online, offline and hybrid environments.

3. Intelligent Remote Proctoring & Monitoring

During remote exams, integrated AI models analyze behavioral patterns, audio cues and visual activity to detect irregularities. These integrity indicators help exam administrators focus only on events that require review, reducing manual proctoring load.

4. Offline Exam Execution with Fail-Safe Syncing

Test takers can complete assessments without internet access using tamper-resistant local storage. Once reconnected, encrypted responses sync automatically, preserving exam continuity and preventing data loss in low-connectivity settings.
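One way to approximate tamper-resistant local storage with fail-safe syncing is to sign each stored response and make the upload idempotent. The sketch below is a simplified stand-in: it uses an HMAC tag for tamper evidence only and leaves out encryption and key provisioning entirely. The record fields, the `sync` function, and the in-memory `server_store` are assumptions made for illustration.

```python
import hashlib
import hmac
import json


def sign_response(key: bytes, record: dict) -> dict:
    """Attach an HMAC-SHA256 tag so later edits to the record are detectable."""
    payload = json.dumps(record, sort_keys=True).encode()
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"record": record, "tag": tag}


def verify_response(key: bytes, signed: dict) -> bool:
    """Recompute the tag and compare it in constant time."""
    payload = json.dumps(signed["record"], sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signed["tag"])


def sync(key: bytes, local_queue: list, server_store: dict) -> list:
    """Upload verified records and return the ones rejected as tampered.

    server_store is keyed by (student, item, seq), so retrying after a
    dropped connection overwrites the same entry instead of duplicating it.
    """
    rejected = []
    for signed in local_queue:
        if not verify_response(key, signed):
            rejected.append(signed)
            continue
        r = signed["record"]
        server_store[(r["student"], r["item"], r["seq"])] = r["answer"]
    return rejected


key = b"per-session-secret"  # hypothetical; real keys need secure provisioning
queue = [
    sign_response(key, {"student": "s1", "item": "Q1", "seq": 1, "answer": "B"}),
    sign_response(key, {"student": "s1", "item": "Q2", "seq": 2, "answer": "D"}),
]
queue[1]["record"]["answer"] = "A"  # simulate tampering with a stored answer
store = {}
rejected = sync(key, queue, store)
print(len(store), "accepted,", len(rejected), "rejected")
```

Keying the server store by student, item, and sequence number means a retry after a failed upload is harmless, which is the property that makes the sync step safe to repeat.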

5. Automated Scoring & Structured Feedback

The system evaluates objective items instantly and applies rubric-based scoring for subjective responses. Its intelligent scoring engine accelerates grading cycles and produces targeted feedback that supports clearer learning pathways.
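The two scoring paths can be sketched in a few lines. Objective items are compared against an answer key, while rubric scoring aggregates criterion levels into a weighted percentage. In this sketch the rubric levels are assumed to come from a human grader or an upstream model; the function names, weights, and criteria are illustrative only.

```python
def score_objective(answer_key: dict, responses: dict) -> int:
    """One point for each item whose response matches the key exactly."""
    return sum(1 for item, correct in answer_key.items()
               if responses.get(item) == correct)


def score_rubric(rubric: dict, ratings: dict) -> float:
    """Weighted rubric score as a percentage.

    rubric maps criterion -> (weight, max_level);
    ratings maps criterion -> level awarded by the grader.
    """
    total_weight = sum(weight for weight, _ in rubric.values())
    earned = sum(weight * ratings[criterion] / max_level
                 for criterion, (weight, max_level) in rubric.items())
    return round(100 * earned / total_weight, 1)


answer_key = {"Q1": "B", "Q2": "D", "Q3": "A"}
responses = {"Q1": "B", "Q2": "C", "Q3": "A"}
objective_points = score_objective(answer_key, responses)

rubric = {"accuracy": (5, 4), "reasoning": (3, 4), "clarity": (2, 4)}
ratings = {"accuracy": 3, "reasoning": 4, "clarity": 2}
essay_percent = score_rubric(rubric, ratings)

print(objective_points, essay_percent)  # 2 77.5
```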

6. Performance Analytics & Outcome Insights

After the exam, the tool generates multi-dimensional analytics showing competency gaps, item reliability, cohort trends and curriculum alignment. These insights guide faculty decisions, accreditation reporting and long-term program improvement.
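Item reliability is usually examined with classical item statistics. The sketch below computes two common ones, item difficulty (proportion correct) and item discrimination (correlation between an item and the rest of the test), from a small made-up score matrix. It illustrates the general technique and is not a description of ExamSoft's analytics.

```python
from statistics import mean, pstdev


def item_difficulty(matrix, item):
    """Proportion of students who answered the item correctly."""
    return mean(row[item] for row in matrix)


def item_discrimination(matrix, item):
    """Pearson correlation between the item score and the rest-of-test score."""
    x = [row[item] for row in matrix]
    y = [sum(row) - row[item] for row in matrix]
    sx, sy = pstdev(x), pstdev(y)
    if sx == 0 or sy == 0:
        return 0.0  # no variance: correlation is undefined, report 0
    mx, my = mean(x), mean(y)
    cov = mean((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / (sx * sy)


# Rows are students, columns are items; 1 = correct, 0 = incorrect (sample data).
matrix = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 0, 1],
]
for item in range(4):
    print(item, item_difficulty(matrix, item),
          round(item_discrimination(matrix, item), 2))
```

In this sample the last item has a negative discrimination value: students who did well on the rest of the test tended to miss it, which is the kind of signal that typically prompts a review of the question or its answer key.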

Types of AI Used in an AI Assessment Tool

AI assessment tools apply techniques such as machine learning, computer vision, and natural language processing to evaluate student performance and protect exam integrity. Each technique improves accuracy, efficiency, or insight at a different stage of the assessment process.

| AI Type | What It Does | Why It Matters |
| --- | --- | --- |
| Computer Vision Models | Monitor facial behavior, gaze patterns & environmental cues during remote exams. | Delivers reliable exam integrity by detecting impersonation and suspicious activity without constant human supervision. |
| Behavioral Analytics AI | Analyzes response timing, interaction patterns & decision latency to detect anomalies. | Catches subtle cheating behaviors that visual proctoring and rule-based systems cannot detect. |
| Natural Language Processing (NLP) | Uses semantic understanding to evaluate written responses and automate subjective scoring. | Provides consistent, scalable grading and reduces instructor workload for essay or case-based assessments. |
| Automated Scoring Engines | Apply pattern recognition and rubric logic to evaluate objective and structured tasks. | Ensures fast, accurate and repeatable scoring, essential for high-volume assessments. |
| Predictive Analytics Models | Identify competency gaps, performance risks and learning trends using historical data. | Enables data-driven decisions, improves curriculum planning and supports personalized student intervention. |
| Anomaly Detection Algorithms | Detect outlier behaviors, irregular exam events and unexpected activity patterns. | Strengthens integrity by revealing non-obvious threats, improving reliability during high-stakes testing. |

How 88% Student Adoption Shows AI Assessment Tools Are Essential

The global K-12 testing market is projected to grow from USD 10.5 billion in 2023 to USD 27.2 billion by 2033, at a 10.0% CAGR. This growth is driven by increasing demand for digital assessment solutions, prompting educational institutions to develop AI-powered tools that ensure academic integrity and enhance the testing experience.

In 2025, 88% of surveyed students reported using generative AI for assessments, up from 53% in 2024, showing rapid integration into critical academic work. Overall, 92% of UK students use AI in their studies, with 65% considering it essential for success.

A. High Teacher Adoption and Student Usage Drive $27B Market Opportunity

A U.S. survey of 2,232 teachers found 60% used AI in 2024-25. Combined with 88% student usage, this increases pressure for schools to adopt official AI assessment platforms.

- 60% teacher adoption suggests educators are ready for AI assessment tools, lowering resistance and signaling demand for purpose-built platforms that can outperform consumer AI solutions.

- High school teachers (66% adoption) and early-career teachers (69%) are the most valuable initial segments, offering concentrated demand, larger budgets, and comfort with sophisticated AI tools.

- The 66% year-over-year relative growth in student usage (from 53% to 88%) signals a fast-growing market, ideal for new entrants offering innovative features and superior user experiences.

- Current teacher dependence on fragmented consumer tools reveals a huge opportunity; dedicated AI assessment platforms that integrate with gradebooks, align with standards, and comply with FERPA can capture significant market share.

B. AI Assessment Tools Save 222 Hours, Boosting Teacher Productivity

Teachers using AI tools weekly save an average of 5.9 hours per week. Over a 37.4-week school year, this totals roughly 222 hours per teacher, or about six work weeks. If all 3.7 million U.S. teachers realized the same savings, that would equal roughly 821 million hours and over $27 billion in productivity gains.

- Annual teacher time savings point to a $27+ billion addressable market. Each teacher gains $7,300+ in time value, which leaves room for schools to pay $500–$2,000 per teacher. At roughly $1,000 per teacher, 10% market penetration would yield about $370 million in U.S. recurring revenue.

- Teacher burnout and high hiring costs make AI tools a clear ROI, preventing resignations and saving districts substantial recruitment expenses.

- Early-career teachers’ 69% adoption signals that AI assessment tools will become the expected standard technology, supporting long-term workforce needs.

- Automating 25% of teacher assessment work delivers higher ROI than other major edtech investments, enabling premium pricing while maximizing value.

- Revenue opportunities extend beyond school subscriptions to freemium models, enterprise licensing, state deals, and international markets, supporting a $100M+ ARR potential.
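The arithmetic behind these market figures can be reproduced with a short script. It takes the article's stated inputs (222 hours per teacher, 3.7 million teachers, $27 billion in total value) as given and derives the rest; the $1,000 price point is an assumption, chosen because it is the value implied by $370 million at 10% penetration. Note that 5.9 hours multiplied by 37.4 weeks is about 220.7, so the 222-hour figure presumably reflects unrounded inputs.

```python
HOURS_SAVED_PER_TEACHER = 222     # per school year, as stated above
US_TEACHERS = 3_700_000           # as stated above
PRODUCTIVITY_VALUE_USD = 27e9     # headline productivity figure, as stated above

total_hours = HOURS_SAVED_PER_TEACHER * US_TEACHERS
value_per_teacher = PRODUCTIVITY_VALUE_USD / US_TEACHERS
implied_hourly_value = PRODUCTIVITY_VALUE_USD / total_hours


def recurring_revenue(price_per_teacher, penetration):
    """Annual revenue if `penetration` of U.S. teachers are licensed at the given price."""
    return US_TEACHERS * price_per_teacher * penetration


print(f"total hours saved:    {total_hours / 1e6:.1f} million")
print(f"value per teacher:    ${value_per_teacher:,.0f}")
print(f"implied hourly value: ${implied_hourly_value:.2f}")
for price in (500, 1_000, 2_000):  # price points are assumptions within the stated range
    revenue = recurring_revenue(price, 0.10)
    print(f"${price:>5} per teacher at 10%: ${revenue / 1e6:,.0f} million")
```

Across the stated $500 to $2,000 price range, 10% penetration corresponds to roughly $185 million to $740 million, so the revenue estimate is quite sensitive to the per-teacher price.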

Key Features of an ExamSoft AI-like Assessment Tool

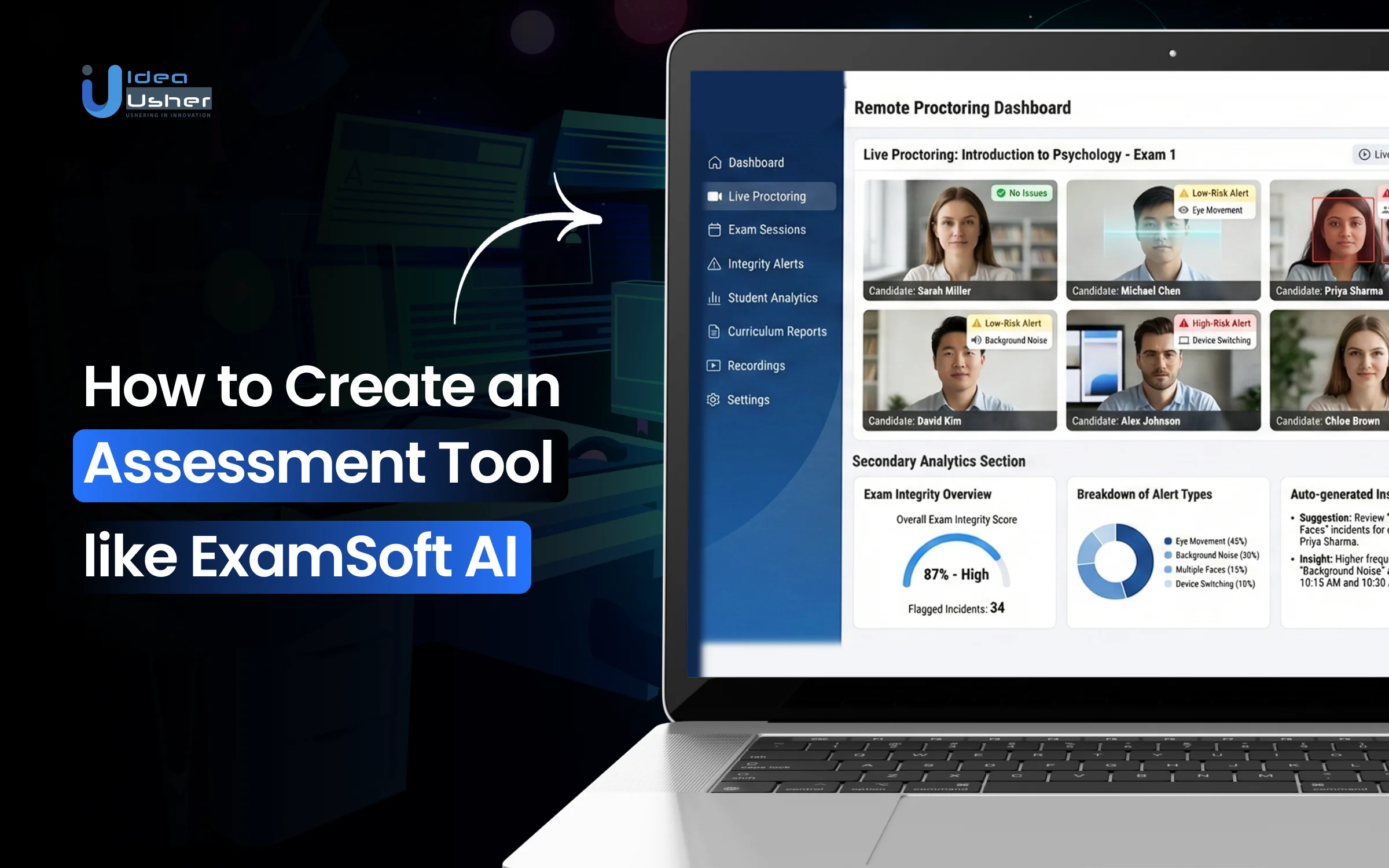

An ExamSoft AI-like assessment tool combines secure exam delivery, AI-assisted proctoring, and analytics to enhance integrity and efficiency. These features help educators create, administer, and evaluate assessments while generating actionable insights into student performance.

1. Secure Exam Delivery & Device Lockdown

The tool creates a controlled testing environment using device lockdown, encrypted files and restricted navigation. It prevents unauthorized app switching or internet use, ensuring assessments reflect true performance. This secure delivery layer protects exam integrity in both online and offline settings.

2. AI-Assisted Remote Proctoring

An integrated proctoring module uses AI-driven behavior analysis to review video, audio and environmental activity during remote exams. It identifies anomalies in movement, gaze and background noise, providing automated integrity signals that help exam administrators focus only on validated alerts.

3. Identity Verification & Authentication

The system includes a multi-step identity check that verifies test takers through facial capture, credential matching and session-level authentication. This prevents impersonation and unauthorized access while maintaining a seamless exam experience through lightweight biometric validation processes.

4. Offline Exam Capability & Fail-Safe Syncing

Exams can be downloaded in advance and taken completely offline, with secure local storage and tamper-resistant answer tracking. Once reconnected, the system uses fail-safe syncing protocols to upload encrypted responses without risking data loss or integrity breakdown.

5. Advanced Reporting & Performance Analytics

The platform offers multi-dimensional analytics that surface student strengths, learning gaps and outcome trends. It analyzes item reliability, competency mapping and cohort comparison, giving faculty data-driven insights that enhance curriculum planning and remediation strategies.

6. Question Bank Customization & Outcome Tagging

Educators can build structured item banks with outcome tagging, difficulty weighting and version control. This supports purposeful exam design and long-term content management while enabling granular alignment of assessments with accreditation standards and learning objectives.

7. Automated Exam Scoring & Feedback Generation

The system supports automated scoring for objective items and structured rubrics for subjective responses. Its intelligent scoring engine accelerates evaluation cycles and generates targeted feedback that strengthens student understanding and instructional refinement.

8. Audit Trails & Compliance-Ready Data Logs

Every action across exam creation, delivery and grading is recorded through comprehensive audit logs. These time-stamped data trails support accreditation reviews, regulatory compliance and internal quality assurance with verifiable, tamper-resistant exam records.
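A common way to make a log tamper-evident is to chain entries together by hash, so that each entry commits to the one before it. The sketch below shows that general technique with an in-memory list; the field names and the `append_entry` and `verify_chain` helpers are hypothetical, and a production system would also need durable storage and an external anchor for the latest hash.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry
FIELDS = ("actor", "action", "timestamp", "prev")


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: list, actor: str, action: str, timestamp: str) -> dict:
    """Append an entry whose hash covers its content and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"actor": actor, "action": action,
            "timestamp": timestamp, "prev": prev_hash}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash in order.

    An edited, reordered, or mid-chain-deleted entry breaks the chain.
    Truncating the tail is not detected without an external anchor.
    """
    prev_hash = GENESIS
    for entry in log:
        body = {k: entry[k] for k in FIELDS}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, "faculty_01", "exam_created", "2025-01-10T09:00:00Z")
append_entry(log, "student_42", "exam_submitted", "2025-01-12T14:30:00Z")
append_entry(log, "faculty_01", "grade_posted", "2025-01-13T10:15:00Z")
intact = verify_chain(log)

log[1]["action"] = "exam_resubmitted"  # simulate an after-the-fact edit
after_edit = verify_chain(log)
print(intact, after_edit)  # True False
```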

9. Adaptive Pattern Analysis for Cheating Detection

The system applies cognitive pattern modeling to analyze response rhythm, decision latency and answer switching behavior. By detecting irregular cognitive signatures that diverge from a user’s baseline, the tool uncovers subtle cheating patterns that traditional proctoring and item-level analysis cannot identify.
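The idea of comparing a session against a user's own baseline can be illustrated with a much simpler stand-in than full cognitive pattern modeling: a z-score on response times. The sketch below flags items answered far faster or slower than the user's historical pace. The threshold of 3.0, the function name, and the sample timings are all assumptions made for the example.

```python
from statistics import mean, stdev


def latency_flags(baseline, session, z_threshold=3.0):
    """Return (item, z_score) pairs whose response time deviates from baseline.

    baseline: response times in seconds from the same user's earlier work.
    session:  dict of item_id -> response time in seconds for this exam.
    """
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        return []  # a flat baseline gives no scale to measure deviation
    flags = []
    for item, seconds in session.items():
        z = (seconds - mu) / sigma
        if abs(z) >= z_threshold:
            flags.append((item, round(z, 2)))
    return flags


baseline = [42, 55, 48, 61, 50, 47, 58, 53]   # sample data, seconds per item
session = {"Q1": 49, "Q2": 4, "Q3": 56}       # Q2 answered in four seconds
flags = latency_flags(baseline, session)
print(flags)
```

A flag produced this way is only a prompt for review. A very fast answer can come from prior exposure to the question, but also from an easy item or a confident student, so the signal is best combined with others before anyone acts on it.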

10. AI Question Variant Generator

A built-in module uses generative intelligence to produce secure variants of existing questions while preserving difficulty levels and learning outcomes. This enables dynamic exam creation, minimizes content exposure and ensures continuous freshness of assessment items across cohorts and retakes.

How to Create an Assessment Tool Like ExamSoft AI

Creating an ExamSoft AI-like assessment tool involves secure exam delivery, AI-assisted proctoring, and analytics. Our developers focus on core features, data handling, and development processes to build a reliable, scalable, and effective assessment platform.

1. Consultation

We begin by analyzing the institution’s assessment workflow, exam security requirements and data ecosystem. Through structured planning sessions, we outline core functionality, exam modalities, AI features and compliance expectations to ensure the platform aligns with academic objectives and integrity standards from the start.

2. Exam Content Structuring

We gather sample assessments, outcomes, competency maps and institutional rules to build structured training and evaluation datasets. This process ensures the system can support outcome-tagged questions, rubric-based grading and performance analytics grounded in real instructional requirements.

3. Assessment Workflow & Item Bank System

Our team creates a flexible item management framework that supports question creation, tagging, versioning and blueprinting. This enables faculty to align assessments with competencies and generate exams efficiently using structured categories and long-term content governance.

4. Secure Exam Delivery Mechanisms

We develop exam delivery protocols that enforce device restrictions, encrypted exam files and restricted navigation. This secure layer supports online and offline delivery while preventing unauthorized resource access, ensuring exams reflect authentic student performance.

5. AI-Assisted Proctoring & Identity Verification

Our developers implement AI-enabled monitoring that analyzes behavior, audio cues and environmental patterns during remote exams. Identity checks use multi-step authentication to prevent impersonation, maintaining exam integrity while keeping the student experience seamless and accessible.

6. Offline Exam Capability with Fail-Safe Syncing

We create robust offline workflows that allow students to take exams without network dependency. Answers are stored in tamper-resistant local containers and uploaded securely once connected, ensuring reliability in low-connectivity environments.

7. Advanced Analytics & Performance Reporting

Our team designs multi-layer analytics dashboards that surface student strengths, competency gaps and item performance. These insights support curriculum decisions, accreditation reviews and targeted remediation through structured data storytelling and outcome-aligned reporting.

8. Automated Scoring & Feedback Engines

We create scoring workflows that support objective grading and rubric-based evaluation for subjective tasks. The intelligent scoring engine accelerates grading cycles and generates actionable feedback for learners and faculty.

9. Integration with Institutional Systems

We ensure smooth interoperability with learning platforms, identity systems and administrative workflows. This integration supports end-to-end assessment continuity, reducing faculty workload and simplifying exam administration across departments.

10. Deployment & Continuous Optimization

We deploy the platform in phases, train the AI models and refine workflows using real-world feedback. Our developers continuously optimize AI detection accuracy, reporting models and user experience to maintain long-term value for institutions.

Cost to Build an ExamSoft AI-like Assessment Tool

The cost to build an ExamSoft AI-like assessment tool depends on features, security, AI capabilities, and scalability. Understanding these factors helps plan budgets for a secure, reliable, and high-performance assessment platform.

| Development Phase | Description | Estimated Cost |
| --- | --- | --- |
| Consultation | Defines scope, academic integrity needs and core AI-driven assessment goals through structured planning sessions. | $5,000 – $8,000 |
| Exam Content Structuring | Gathers institutional exam data and builds structured outcome-tagged datasets for analytics and modeling. | $10,000 – $17,000 |
| Assessment Workflow & Item Bank System | Develops item creation, tagging and versioning systems with curriculum-aligned question management. | $14,000 – $20,000 |
| Secure Exam Delivery Mechanisms | Builds lockdown, encryption and offline-safe protocols for secure, tamper-resistant exam sessions. | $13,000 – $22,000 |
| AI Assisted Proctoring & Identity Verification | Implements behavioral monitoring, environmental analysis and multi-step identity authentication. | $16,000 – $28,000 |
| Offline Capability | Enables offline testing with encrypted local storage and automatic sync recovery. | $10,000 – $12,000 |
| Advanced Analytics & Reporting | Creates dashboards and models for multi-dimensional insights on student outcomes. | $13,000 – $20,000 |
| Automated Scoring & Feedback Engine | Builds objective scoring and rubric-based evaluation with intelligent feedback generation. | $11,000 – $16,000 |
| System Integrations & Workflow Alignment | Connects the platform with LMS, identity systems and institutional operational workflows. | $12,000 – $15,000 |
| Deployment & Optimization | Executes rollout, faculty training and ongoing enhancement of AI accuracy and user experience. | $5,000 – $12,000 |

Total Estimated Cost: $109,000 – $170,000

Note: Development costs vary by project scope, institution size, AI complexity, compliance, and scalability, with customization, accreditation, and security needs influencing the final investment.

Consult with IdeaUsher for a tailored cost estimate and development roadmap to build a high-performing, ExamSoft-like AI assessment platform aligned with your institutional goals and standards.

Cost-Affecting Factors to Consider during Development

Several factors, including features, AI integration, security, and scalability, influence the cost and complexity of developing an ExamSoft AI-like assessment tool.

1. Project Scope & Functional Depth

Broader functionality such as proctoring, offline delivery and deep analytics raises development effort. More complex assessment flows increase cost as they require additional modeling, security layers and workflow engineering.

2. Volume & Quality of Assessment Data

Limited, unstructured or outdated exam data requires extensive cleaning and tagging. Higher preprocessing effort increases cost, especially when building outcome-aligned question banks or analytics-ready datasets.

3. AI Proctoring & Detection Sophistication

Advanced AI modules that detect visual, audio and behavioral anomalies require more training cycles. Greater intelligence levels add to cost because they demand continuous refinement and evaluation.

4. Integrity Controls & Regulatory Alignment

Encrypted delivery, lockdown protocols and compliance auditing expand development scope. Strict security expectations increase cost as they require multi-layer validation and robust exam-hardening frameworks.

5. Integration With Institutional Systems

Seamless integration with LMS platforms and identity providers requires configuration and testing. Complex interoperability raises cost since each system needs alignment with exam workflows and permissions.

6. High-Volume Exam Delivery & Real-Time Processing

Institutions requiring large-scale exam sessions need optimized pipelines and load-tested architecture. Greater scalability demands add cost due to enhanced infrastructure and performance engineering.

Challenges & How Our Developers Solve Them

Developing an ExamSoft AI-like assessment tool presents challenges such as data quality, security, and real-time processing. Our developers address these by implementing robust AI models, secure architecture, and adaptive solutions for reliable, scalable performance.

1. Ensuring High-Integrity Remote Testing

Challenge: Remote exams face identity fraud, device misuse and environment manipulation, making it difficult to preserve assessment integrity across uncontrolled testing spaces.

Solution: We implement AI-assisted monitoring that analyzes behavior, audio cues and identity signals in real time. Combined with multi-step authentication, the system reliably detects anomalies and prevents impersonation or unauthorized resource access.

2. Building Reliable Offline Exam Capability

Challenge: Low-connectivity environments risk answer loss, sync failures and compromised exam integrity when students test without stable networks.

Solution: We use tamper-resistant local storage and fail-safe sync logic that captures every response offline and securely uploads data when reconnected, safeguarding both exam continuity and result accuracy.

3. Creating Outcome-Aligned Item Banks at Scale

Challenge: Institutions often have large, unstructured question sets lacking outcome tags, versioning or competency mapping, making exam design labor-intensive.

Solution: We develop structured item bank frameworks with tagging, blueprinting and metadata controls. This enables scalable question organization and ensures assessments remain aligned with competencies and accreditation expectations.

4. Achieving Accurate AI Proctoring Signals

Challenge: AI proctoring can misinterpret benign behavior as violations, creating false flags and unnecessary administrative review.

Solution: We refine detection models using behavioral baselines, noise filtering and multi-signal analysis. This reduces false positives and ensures only meaningful integrity risks are elevated to human evaluators.
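Multi-signal analysis can be sketched as a simple fusion rule: escalate a session only when the combined score is high and more than one signal agrees. The weights, thresholds, and signal names below are illustrative assumptions, not tuned values, and each per-signal score is assumed to arrive already normalized to the range 0 to 1.

```python
def fuse_signals(signals, weights, review_threshold=0.6,
                 min_signals=2, per_signal_floor=0.5):
    """Combine per-signal anomaly scores (0..1) into one review decision.

    Escalates only when the weighted score crosses the threshold AND at
    least `min_signals` signals are individually elevated, so a single
    noisy detector cannot trigger a human review on its own.
    """
    total_weight = sum(weights[name] for name in signals)
    score = sum(weights[name] * value
                for name, value in signals.items()) / total_weight
    elevated = [name for name, value in signals.items()
                if value >= per_signal_floor]
    escalate = score >= review_threshold and len(elevated) >= min_signals
    return {"score": round(score, 3), "elevated": elevated, "escalate": escalate}


weights = {"gaze": 4, "audio": 3, "timing": 3}  # hypothetical relative weights

# One noisy signal: the student looks away often, nothing else is unusual.
benign = fuse_signals({"gaze": 0.9, "audio": 0.1, "timing": 0.1}, weights)

# Two signals agree: off-screen gaze plus a second voice in the audio.
suspicious = fuse_signals({"gaze": 0.9, "audio": 0.8, "timing": 0.2}, weights)

print(benign["escalate"], suspicious["escalate"])  # False True
```

Requiring agreement between signals trades some sensitivity for fewer false flags, which matches the goal of sending only meaningful integrity risks to human evaluators.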

5. Integrating with Institutional Systems Without Disruption

Challenge: Connecting the platform with LMS systems, identity providers and administrative workflows can disrupt existing academic processes.

Solution: We use modular workflow mapping and standards-based interoperability to ensure seamless data exchange. This maintains operational continuity while enhancing assessment processes with minimal institutional friction.

Revenue Models of an ExamSoft AI-like Assessment Tool

AI assessment tools like ExamSoft AI can earn revenue through institutional licensing, tiered subscriptions, add-on modules, usage-based fees, and partner marketplaces. These revenue streams help maximize adoption, recurring revenue, and long-term growth opportunities.

1. B2B SaaS Licensing Model

Institutions purchase annual or multi-year licenses to access the platform. Pricing typically scales with user count, exam volume and required feature sets, creating predictable and stable recurring revenue across academic cycles.

2. Tiered Subscription Packages

The platform can offer multiple tiers such as Basic, Advanced and Enterprise, each adding capabilities like analytics depth, offline delivery, or AI proctoring. This tiered structure increases average contract value by aligning pricing with institutional needs.

3. AI Proctoring & Identity Verification Add-Ons

Advanced modules like AI-assisted proctoring, behavior analysis and identity verification can be sold as optional add-ons. These features generate high-margin revenue because institutions often require enhanced exam integrity for high-stakes assessments.

4. Per Student or Per Exam Usage Fees

Some institutions prefer pay-per-exam or pay-per-student models for budgeting flexibility. This usage-based structure works well for certification boards, licensure exams and seasonal programs with fluctuating assessment loads.

5. Marketplace for Proctoring or Partner Services

A platform can host a marketplace of approved proctoring vendors, content providers or training partners, earning a commission on services purchased through the ecosystem, expanding revenue beyond core licensing.

Conclusion

Creating an ExamSoft AI-like Assessment Tool ultimately comes down to blending strong pedagogical design with reliable, scalable technology. By focusing on secure test delivery, automated scoring, insightful analytics, and a user-friendly interface, you can build a platform that genuinely supports educators and learners. As you plan your roadmap, think carefully about accuracy, integrity, and adaptability, since these elements determine how well your solution performs in real academic environments. When executed thoughtfully, your AI assessment tool can strengthen assessment quality and improve learning outcomes across institutions.

Build Your AI Assessment Tool With IdeaUsher!

At IdeaUsher, we specialize in building advanced assessment platforms that combine secure testing environments with intelligent analytics. Our team creates systems that streamline exam delivery, automate scoring, and strengthen integrity across institutions of every size. We design every solution to support scalable growth and long-term academic efficiency.

Why Work With Us?

- Assessment Technology Expertise: We design platforms that support high-stakes exams with reliability, scalability, and precision.

- Custom Development: From question banks to proctoring tools, every feature is tailored to your academic or organizational needs.

- Security-Driven Architecture: We implement robust safeguards to protect exam data, reduce risks, and maintain compliance.

- Proven Track Record: Our experience in edtech helps clients launch assessment solutions that improve learning outcomes and reduce administrative workload.

Browse our portfolio to see how our development expertise has helped clients build scalable, high-performing products tailored to their goals.

Partner with us to create a modern, AI assessment tool that elevates the testing experience for educators, administrators, and learners.


FAQs

What features does an ExamSoft AI-like assessment tool need?

An ExamSoft AI-like assessment tool needs secure delivery, automated scoring, item analysis, exam integrity checks, and role-based dashboards. These features ensure reliable evaluations, reduce manual work, support academic needs, and improve both instructor efficiency and learner performance.

How is exam integrity maintained?

Exam integrity relies on remote proctoring, identity verification, secure browsers, question randomization, and activity monitoring. These safeguards reduce cheating risks, protect exam content, and create a trustworthy testing environment for institutions that require strict compliance and audit-ready assessment workflows.

What technology stack is needed to build an AI assessment tool?

A strong stack combines cloud infrastructure, databases optimized for large test data, machine learning models for scoring, and frameworks for web and mobile access. Together, these components deliver stability, scalability, and consistent performance throughout varied assessment scenarios.

How do analytics improve assessments?

Analytics highlight performance trends, question difficulty, learner progress, and knowledge gaps. These insights help educators refine content, adjust teaching strategies, and support students more effectively, ultimately making assessments more meaningful and results easier to interpret.