- What are AI Agents?

- How Does an AI Agent Work?

- Key Market Takeaways for Autonomous Agents

- Various Functional Architectural Blocks of an Autonomous AI Agent

- A Detailed Overview of Microsoft Autogen

- How do you develop AI agents with Microsoft Autogen?

- Top 7 AI Agents Platforms

- Some of Our Projects at Idea Usher

- Conclusion

- Looking to Develop AI Agents?

- FAQs

In late 2022, the release of OpenAI's ChatGPT marked a turning point for conversational AI applications. It demonstrated the power of large language models (LLMs) for natural language interaction and led businesses to integrate chatbots into customer service, marketing, and support functions. In 2024, however, AI agents moved into the spotlight. These agents go beyond simple chatbots, often coordinating multiple LLMs for specialized tasks.

A recent study by McKinsey & Company examined the potential of AI agents in the manufacturing industry. The study highlighted a case where a large automotive manufacturer deployed AI agents to analyze sensor data from assembly lines in real-time. These AI agents were able to identify potential equipment failures before they occurred, allowing for preventive maintenance and significantly reducing downtime.

This is just one example of how AI agents can be used across industries. In finance, AI agents can process routine loan applications, flag potential fraud attempts, and even provide personalized investment recommendations based on a customer's financial profile. Platforms such as Zapier, Amazon Bedrock, and Google's Duet AI are making it easier for businesses to integrate AI agents into their existing infrastructure and use large language models, such as OpenAI's GPT models or the models these platforms host, to build custom workflows.

In this blog post, we are going to unveil the secrets of AI agents, explore their development process, and also discuss the features that make them so powerful. You will learn how to leverage AI agents to empower your business and gain a competitive edge in the ever-evolving technological landscape.

What are AI Agents?

AI agents are basically intelligent virtual assistants powered by artificial intelligence. Unlike traditional automation tools that follow pre-programmed steps, AI agents can learn and adapt. They generally use ML algorithms to analyze data, identify patterns, and even make decisions within certain parameters.

AI agents can act as mini-brains within the business that not only receive information but truly understand it. This is made possible through the integration of technologies such as Large Language Models or supervised learning models.

LLMs are essentially sophisticated neural networks trained on massive amounts of text data. As a result, they can process information with a level of comprehension that can resemble a human's and perform tasks such as sentiment analysis or even generating creative text. By incorporating LLMs, AI agents gain a more nuanced understanding of their environment and can interpret data more effectively.

The benefits of AI agents are numerous. They are particularly valuable for handling repetitive tasks that would otherwise require human attention, freeing up employees to focus on more complex issues. Additionally, AI agents are adept at analyzing huge amounts of data to find trends and anomalies that humans might miss. This can provide valuable insights for business decisions, such as predicting customer behavior or optimizing marketing campaigns.

Let’s Understand This with an Example

Consider a retail business that struggles with inefficient inventory management. An AI agent can be of great help in optimizing this task.

- An AI agent can be connected to the inventory management system and point-of-sale terminals of the business, where it will collect real-time data on product sales, stock levels, and supplier lead times.

- Using historical sales data, current trends, and seasonal variations, the AI agent will forecast the future demand for each product.

- Based on the demand forecast and the present inventory, the AI agent can autonomously generate purchase orders to ensure sufficient inventory without overstocking.

- Furthermore, the AI agent can analyze market data and supplier pricing to identify the most cost-effective options for replenishing the inventory.

- With each sales cycle, the AI agent will continually refine its demand forecasting model based on real-time data. This continuous learning ensures that the system becomes increasingly accurate over time.
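The forecast-and-reorder loop above can be sketched in a few lines of Python. This is a minimal illustration, assuming a simple moving-average forecast in place of the ML model the example describes; the `Product` fields, the safety-stock rule, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Product:
    sku: str
    stock_on_hand: int
    lead_time_days: int       # supplier lead time
    daily_sales: list[int]    # recent daily unit sales, oldest first


def forecast_daily_demand(daily_sales: list[int], window: int = 7) -> float:
    """Moving-average forecast: a simple stand-in for a trained demand model."""
    recent = daily_sales[-window:]
    return sum(recent) / len(recent)


def reorder_quantity(p: Product, safety_days: int = 3) -> int:
    """Order enough to cover demand during the lead time plus a safety buffer."""
    demand = forecast_daily_demand(p.daily_sales)
    target = demand * (p.lead_time_days + safety_days)
    shortfall = target - p.stock_on_hand
    return max(0, round(shortfall))  # never order a negative amount


if __name__ == "__main__":
    p = Product("TSHIRT-M", stock_on_hand=40, lead_time_days=5,
                daily_sales=[8, 10, 9, 11, 12, 10, 10])
    print(reorder_quantity(p))
```

With a forecast of 10 units per day, a 5-day lead time, and a 3-day buffer, the agent targets 80 units and orders the 40-unit shortfall. Swapping in a better forecasting model only changes `forecast_daily_demand`.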

How Does an AI Agent Work?

At their core, AI agents are software programs designed to perceive their environment and take specific actions to achieve certain goals. They can adapt to new information and make decisions based on what they learn from the data they interact with.

AI Agents Rely on Large Language Models

Many AI agents utilize Large Language Models, which are powerful technologies trained on vast amounts of text data. This enables them to understand and respond to human language fluently, allowing them to perform tasks such as summarizing documents, generating creative text formats like poetry or code, and even translating languages. All of these capabilities are quite crucial for business efficiency.

However, complex tasks often require a coordinated effort from multiple AI agents with different specializations. This is where the concept of “agent orchestration” can come in handy! It enables you to design a workflow where various AI agents collaborate seamlessly by sharing information and passing tasks between them.

For instance, in a customer service scenario, a business can use an LLM-based agent to analyze a customer query first and then orchestrate a handoff to a specialized agent trained for technical support or financial transactions.
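A rough sketch of this triage-and-handoff pattern follows. The `classify_intent` function uses keyword matching as a hypothetical stand-in for the LLM call; in practice the first agent would prompt a model to choose a category. The specialist agents are also hypothetical placeholders.

```python
from typing import Callable


# Hypothetical specialist agents; each would wrap its own model, prompt, and tools.
def technical_support_agent(query: str) -> str:
    return f"[tech-support] Investigating: {query}"


def payments_agent(query: str) -> str:
    return f"[payments] Checking transaction: {query}"


def general_agent(query: str) -> str:
    return f"[general] Answering: {query}"


SPECIALISTS: dict[str, Callable[[str], str]] = {
    "technical": technical_support_agent,
    "financial": payments_agent,
    "general": general_agent,
}


def classify_intent(query: str) -> str:
    """Stand-in for an LLM classification call; returns a key of SPECIALISTS."""
    q = query.lower()
    if any(w in q for w in ("error", "crash", "login", "install")):
        return "technical"
    if any(w in q for w in ("refund", "charge", "payment", "invoice")):
        return "financial"
    return "general"


def route(query: str) -> str:
    """Triage agent: classify the query, then hand it off to the matching specialist."""
    return SPECIALISTS[classify_intent(query)](query)


print(route("I was charged twice for my order"))
```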

The Concept of Retrieval Augmented Generation

Apart from orchestration, businesses can also use Retrieval Augmented Generation (RAG), which gives agents access to external knowledge and helps them tackle more difficult tasks!

RAG acts like a digital librarian for your AI agent. It searches for relevant information from external sources like company databases or industry reports, feeding this context to the agent before it responds. This empowers the agent to provide more accurate and up-to-date information, ultimately enhancing its intelligence and reliability for complex tasks.
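A minimal sketch of the retrieve-then-generate flow, assuming a tiny in-memory document list and plain word-overlap scoring in place of the vector embeddings and search index a production RAG system would use. The documents and helper names are hypothetical, and the final LLM call is omitted: the code only builds the augmented prompt.

```python
import re

# Hypothetical company knowledge base.
DOCUMENTS = [
    "Refunds are issued within 14 days of receiving the returned item.",
    "Premium accounts include priority phone support on weekdays.",
    "Orders over $50 ship free within the continental US.",
]


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Rank documents by how many query words they share and keep the top k."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str]) -> str:
    """Prepend retrieved context so the model answers from it, not from memory alone."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("How many days until refunds are issued?", DOCUMENTS))
```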

Taking it a Step Further: Autonomous Agents

While Retrieval Augmented Generation and orchestration give LLM agents additional data and coordinated workflows, truly autonomous agents go a significant step further.

They move beyond pre-defined responses and fixed task sequences and adapt in real time. One way to achieve this is reinforcement learning, where the agent continuously refines its decision-making toward set goals through trial and error instead of relying on pre-programmed instructions for every situation. This real-time adaptability makes autonomous agents the most advanced of these approaches, allowing them to tackle unforeseen challenges without explicit human intervention.
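To make the trial-and-error idea concrete, here is an epsilon-greedy multi-armed bandit, one of the simplest reinforcement learning methods. It is an illustrative sketch, not how any particular agent product works: the three "actions" and their hidden success rates are made up, and the agent learns only from the rewards it observes.

```python
import random


def epsilon_greedy(success_rates: list[float], steps: int = 5000,
                   epsilon: float = 0.1, seed: int = 0) -> list[float]:
    """Learn the value of each action purely from observed rewards.

    success_rates are hidden from the agent; they only drive the simulated
    environment. Returns the agent's estimated value for each action.
    """
    rng = random.Random(seed)
    n_actions = len(success_rates)
    counts = [0] * n_actions
    values = [0.0] * n_actions
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)                        # explore
        else:
            action = max(range(n_actions), key=lambda a: values[a])  # exploit
        reward = 1.0 if rng.random() < success_rates[action] else 0.0
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        values[action] += (reward - values[action]) / counts[action]
    return values


estimates = epsilon_greedy([0.2, 0.5, 0.8])
best = max(range(3), key=lambda a: estimates[a])
print(f"best action: {best}, estimates: {[round(v, 2) for v in estimates]}")
```

The `epsilon` parameter is the exploration rate: it trades off trying new actions against exploiting the best one found so far.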

A Powerful Autonomous Agent Framework

Let us understand the power of autonomous agents with a basic framework of an autonomous agent designed for real-time fraud detection.

1. The Core Components: Specialized Agents for Intelligent Action

These components form the backbone of the autonomous agent framework:

- Observer Agent: This agent employs event monitoring techniques to capture user inputs (transactions) and application triggers. It leverages message queues or streaming services to handle a high volume of events efficiently.

- Prioritization Agent: This agent utilizes machine learning algorithms, specifically classification models, to analyze observed events and their context. Features like transaction amount, location, and purchase history are extracted and fed into the model. The model outputs a risk score, prioritizing tasks based on the likelihood of fraud.

- Execution Agent: This agent translates prioritized tasks into concrete actions. It interacts with external systems through APIs. In fraud detection, it might trigger workflows for further investigation using rule-based systems, notify security personnel via secure communication channels, or initiate card blocking procedures through integrations with banking systems.
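The three roles can be wired together with a queue, as in the sketch below. It assumes an in-process `queue.Queue` in place of a real message broker and a hand-weighted logistic score in place of a trained classification model; the features, weights, and thresholds are hypothetical.

```python
import math
import queue
from dataclasses import dataclass


@dataclass
class Transaction:
    card_id: str
    amount: float
    country: str
    home_country: str


events: "queue.Queue[Transaction]" = queue.Queue()


def observer(tx: Transaction) -> None:
    """Observer agent: capture an incoming event and enqueue it."""
    events.put(tx)


def risk_score(tx: Transaction) -> float:
    """Prioritization agent: hypothetical logistic model over two features."""
    z = -4.0 + 0.002 * tx.amount + (2.5 if tx.country != tx.home_country else 0.0)
    return 1 / (1 + math.exp(-z))


def execute(tx: Transaction, score: float) -> str:
    """Execution agent: map the score to an action (stand-ins for real API calls)."""
    if score >= 0.8:
        return f"block card {tx.card_id}"
    if score >= 0.5:
        return f"send {tx.card_id} to manual review"
    return "approve"


def run_pipeline() -> list[str]:
    """Drain the queue, scoring and acting on each event in arrival order."""
    actions = []
    while not events.empty():
        tx = events.get()
        actions.append(execute(tx, risk_score(tx)))
    return actions


observer(Transaction("c1", 45.0, "US", "US"))
observer(Transaction("c2", 1800.0, "FR", "US"))
observer(Transaction("c3", 900.0, "FR", "US"))
print(run_pipeline())
```

With these made-up weights, the small domestic purchase is approved, the $1,800 overseas purchase scores about 0.89 and is blocked, and the $900 overseas purchase scores about 0.57 and goes to manual review.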

2. Real-Time Intelligence: Unveiling Fraudulent Activity

Consider a scenario where a customer swipes their card for an unusually large purchase overseas. The observer agent detects the event and retrieves the user’s context data, which is stored in a user database. The prioritization agent analyzes this context and feeds it alongside transaction details into a pre-trained fraud detection model. This model, continuously updated with historical labeled data (fraudulent and legitimate transactions), assigns a risk score.

Furthermore, if RAG is employed, the prioritization agent can make API calls to external knowledge bases. These could include:

- Fraud watchlists: Real-time access to blacklisted merchants or suspicious IP addresses associated with fraudulent activity.

- Customer travel data: Confirmation of planned trips through airline or travel agency databases can help validate overseas purchases.

This enriched context empowers the model to make a more informed decision. With confirmation of a planned trip, a high-value overseas purchase with a moderate risk score might be flagged for review but not immediate action. Otherwise, the execution agent would initiate actions based on pre-defined thresholds and pre-configured workflows.
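The decision step with RAG enrichment might look like the following sketch. The watchlist, the travel-confirmation flag, the action names, and the threshold values are hypothetical placeholders for the external lookups and pre-defined workflows described above.

```python
from dataclasses import dataclass


@dataclass
class Context:
    risk_score: float       # output of the fraud model, 0..1
    merchant: str
    travel_confirmed: bool  # e.g. from a travel-booking lookup


WATCHLIST = {"shady-electronics-ltd"}  # hypothetical blacklisted merchants
BLOCK_AT, REVIEW_AT = 0.8, 0.5         # hypothetical pre-defined thresholds


def decide(ctx: Context) -> str:
    """Combine the model's score with retrieved context before acting."""
    if ctx.merchant in WATCHLIST:
        return "block"  # a watchlist hit overrides the score
    if ctx.risk_score >= BLOCK_AT:
        return "block"
    if ctx.risk_score >= REVIEW_AT:
        # Moderate risk: a confirmed trip downgrades immediate action to a review flag.
        return "flag_for_review" if ctx.travel_confirmed else "hold_and_notify"
    return "approve"


print(decide(Context(0.6, "hotel-paris", travel_confirmed=True)))
```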

3. Beyond Fraud Detection: A Framework for Broader Applications

The power of this framework goes beyond just detecting fraud, as businesses can easily integrate it into their different customer support workflows. For example, if the observer agent receives a new query, then the prioritization agent can analyze its urgency and category.

By using RAG, it can access relevant product manuals or troubleshooting guides from external knowledge bases. Based on this analysis, the execution agent either routes the query to the right support agent for complex issues or instantly provides an automated response using the retrieved information, shortening customer support resolution times.

This framework can empower businesses with real-time, data-driven decision-making, enabling intelligent automation and improved efficiency across various domains.

Key Market Takeaways for Autonomous Agents

Source: MarketsAndMarkets

One of the main drivers behind the growth of the autonomous agents market is the extensive adoption of AI across industries. Companies in fields like healthcare, finance, transportation, and manufacturing increasingly use AI to tackle complex issues and improve processes.

These applications of AI employ advanced algorithms, machine learning, and data analysis to extract valuable insights and automate decision-making. With the rising demand for intelligent and efficient solutions, the need for AI agents that can operate independently and adapt to changing situations without constant human intervention is growing as well.

For example, JPMorgan Chase, one of the largest banks in the USA, has successfully harnessed the power of AI agents. Their AI agents can answer frequently asked questions, troubleshoot basic issues, and even schedule appointments. By delegating these tasks to AI agents, human agents can focus on complex customer inquiries, thereby improving overall customer service efficiency.

Various Functional Architectural Blocks of an Autonomous AI Agent

Now, let us discuss the key functional blocks that power these intelligent agents to optimize business operations.

1. Agent and Agent Development Framework

The agent development framework serves as a centralized hub where businesses create and customize their AI teams. With this framework, businesses can define the role of each agent, whether it is handling customer inquiries, automating data analysis, or managing IT tasks.

Complex business challenges often require a coordinated effort from multiple AI agents. In such cases, orchestration frameworks like LangChain or LlamaIndex step in to manage this collaboration. These frameworks ensure smooth information exchange between agents and facilitate a handover process similar to a relay race. By working together, agents can efficiently address customer issues.

For example, a customer service scenario might involve one agent to understand the initial query, another to access account information, and a third agent to finalize a resolution. Orchestration frameworks ensure these agents work seamlessly together, passing information back and forth to tackle the issue efficiently.

Furthermore, building and training AI agents no longer requires extensive in-house AI expertise. Frameworks like Microsoft AutoGen or crewAI provide pre-built agent abstractions (and, in AutoGen's case, a low-code interface called AutoGen Studio) that act as ready-made shells for AI assistants. With these tools, businesses can customize functionality and connect agents to their own data.

2. Large Language Models

Large Language Models are a fundamental part of many autonomous AI agents. These models are generally trained on vast amounts of text data, which gives them exceptional fluency in understanding and responding to human language. As a result, they are well suited to several tasks that are crucial for effective AI agents.

When a user interacts with an AI agent, LLMs can analyze the user’s query or request and extract key information to understand the user’s underlying intent. For instance, an LLM can determine if a customer service inquiry is about billing, product features, or order status.

Businesses can also use LLMs to sift through large volumes of text data to pinpoint relevant information. This can be invaluable for tasks such as summarizing reports, extracting key details from contracts, or compiling customer feedback from social media conversations.

Effective AI agents need to keep track of the conversation. LLMs handle this well when the application passes the conversation history into the model's context window: the model can then take earlier turns into account, keep its responses consistent and relevant to the user's needs, and avoid asking repetitive questions.

Types of LLMs

There are two main categories in LLMs:

- General-purpose LLMs: These models, like GPT-3 or BERT, are trained on a broad spectrum of text data. This helps them to handle a wide range of tasks across different domains.

- Domain-specific/Customized LLMs: These models are trained on data specific to a particular industry or domain. This allows them to perform tasks with greater accuracy and possess a deeper understanding of the unique terminology and concepts relevant to that domain. For example, a legal document analysis LLM would outperform a general-purpose LLM in tasks related to contract reviews.

By leveraging the power of LLMs, autonomous AI agents can interact with users naturally, extract valuable information from various sources, and maintain a coherent conversation flow.

3. Tools Integration

It's true that LLMs are quite powerful at understanding and responding to human language, but autonomous AI agents often need more capabilities to truly excel. This is where tool integration comes in, allowing agents to extend their capabilities beyond language processing and interact with the real world.

For instance, let’s consider a customer service agent powered by an LLM. A customer may inquire about their order status. Without tool integration, the agent may be restricted to pre-programmed responses or offering to connect the customer to a human representative. However, with an API connection to the company’s CRM system, the agent can instantly retrieve the order status and provide a real-time update to the customer. This not only improves customer satisfaction by eliminating unnecessary wait times but also empowers the agent to handle a wider range of inquiries without human intervention.

Examples of these kinds of tool integration can include CRM systems for customer data access, logistics APIs for tracking shipments, and financial APIs for account balance inquiries.
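The common pattern behind tool integration is that the model returns a structured "tool call" (a tool name plus JSON arguments) and the agent's own code executes it. The sketch below assumes that shape; the `get_order_status` tool and its in-memory data are hypothetical stand-ins for a real CRM API, and the hard-coded model output is illustrative.

```python
import json
from typing import Any, Callable

ORDERS = {"A1001": "shipped", "A1002": "processing"}  # stand-in for a CRM lookup


def get_order_status(order_id: str) -> str:
    return ORDERS.get(order_id, "not found")


TOOLS: dict[str, Callable[..., Any]] = {"get_order_status": get_order_status}


def dispatch(tool_call_json: str) -> str:
    """Execute a model-issued tool call such as {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    tool = TOOLS.get(call["name"])
    if tool is None:
        return f"error: unknown tool {call['name']!r}"
    result = tool(**call["arguments"])
    # The result goes back to the LLM, which phrases the final reply to the customer.
    return json.dumps({"tool": call["name"], "result": result})


# What an LLM might emit after reading "Where is my order A1001?"
print(dispatch('{"name": "get_order_status", "arguments": {"order_id": "A1001"}}'))
```

Keeping tools in an explicit registry means the model can only trigger functions the business has deliberately exposed.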

Going Beyond Language

LLMs excel at communication, but what about tasks requiring calculations or data manipulation? Tool integration allows AI agents to connect with external tools and perform actions beyond simply responding with text.

For instance, an LLM integrated with a calculator could automatically calculate discounts or shipping costs based on user input. Similarly, an agent could connect with enterprise backend services such as Enterprise Resource Planning (ERP) systems to automate tasks like entering data from customer forms or generating reports based on real-time data.
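LLMs can be unreliable at exact arithmetic, so a calculator tool hands that work to deterministic code. The following is a minimal sketch: it evaluates only basic arithmetic by walking Python's `ast` tree instead of calling `eval`, so arbitrary code in model output cannot run. The `calculate` name and the discount example are hypothetical.

```python
import ast
import operator

# Only these operators are allowed; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def calculate(expression: str) -> float:
    """Safely evaluate +, -, *, / and parentheses over numeric literals."""
    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")
    return _eval(ast.parse(expression, mode="eval").body)


# e.g. the model requests a 15% discount on an $80 item plus $5.99 shipping
print(calculate("80 * (1 - 0.15) + 5.99"))
```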

4. Memory and Context Management

If businesses want to provide a smooth and uninterrupted user experience, AI agents must be capable of remembering past interactions and information. This is where memory and context management come into play.

An AI agent’s memory functions like a filing cabinet with two compartments. The short-term compartment stores recent information that pertains to the current conversation. This allows the agent to maintain a natural conversational flow by recalling what was just discussed and preventing repetitive questions. For example, suppose a customer inquires about the size of a product after asking about its color. In that case, the agent can use short-term memory to provide a tailored response about the available sizes for that particular color.

On the other hand, the long-term compartment stores user preferences and historical data that are relevant to the agent’s domain. This may include a customer’s preferred communication channel, purchase history for a retail agent, or past service requests for a customer support agent. By leveraging long-term memory, the agent can personalize interactions and provide more relevant information.
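The two compartments map naturally onto two data structures. This sketch assumes a fixed-size `deque` for short-term conversation turns and a plain dictionary of per-user facts for long-term memory; a real deployment would persist the long-term store in a database. The class and method names are hypothetical.

```python
from collections import deque


class AgentMemory:
    """Short-term turns in a bounded deque; long-term facts in a per-user dict."""

    def __init__(self, max_turns: int = 6) -> None:
        # Short-term: only the most recent turns; the oldest is dropped automatically.
        self.short_term: deque = deque(maxlen=max_turns)
        # Long-term: durable facts keyed by user id (a database in a real system).
        self.long_term: dict = {}

    def add_turn(self, role: str, text: str) -> None:
        self.short_term.append((role, text))

    def remember(self, user_id: str, key: str, value: str) -> None:
        self.long_term.setdefault(user_id, {})[key] = value

    def build_context(self, user_id: str) -> str:
        """Assemble the memory that accompanies a new message sent to the LLM."""
        facts = "; ".join(f"{k}={v}" for k, v in self.long_term.get(user_id, {}).items())
        turns = "\n".join(f"{role}: {text}" for role, text in self.short_term)
        return f"Known about user: {facts or 'nothing yet'}\nRecent conversation:\n{turns}"


mem = AgentMemory()
mem.remember("u42", "preferred_channel", "email")
mem.add_turn("user", "Do you have this jacket in blue?")
mem.add_turn("agent", "Yes, blue is in stock.")
mem.add_turn("user", "Which sizes?")  # the color question is still in short-term memory
print(mem.build_context("u42"))
```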

However, what happens in situations where the agent’s own memory banks do not hold the necessary knowledge?

This is where RAG comes into the picture. RAG acts like a helpful assistant, allowing the LLM to access external knowledge bases or industry reports before formulating a response.

For example, businesses in the financial sector can use RAG to access the latest market data before responding to a client’s query. This injects valuable context into the LLM’s responses, leading to more accurate and informative interactions.

A Detailed Overview of Microsoft Autogen

Microsoft AutoGen is an open-source framework that allows businesses to take advantage of Large Language Models such as GPT-3.5 and GPT-4. It is designed for building intelligent applications in which customizable agents collaborate and draw on cutting-edge AI models.

With Autogen, businesses can create a team of virtual assistants with specialized skills to tackle complex tasks that require diverse functionalities. These agents work together by sharing information and building on each other’s contributions.

Autogen not only relies on AI but also allows for human expertise to be integrated seamlessly. This provides human oversight and intervention when necessary. For example, in a legal document review process, an Autogen agent can identify key clauses, followed by a final review and approval by a human lawyer.

The Building Blocks of Microsoft Autogen

Here are the four main building blocks of Microsoft AutoGen:

Skills: The Recipe for Success

Skills are basically pre-programmed instructions for the agents. Similar to OpenAI’s Custom GPTs, Autogen skills combine prompts and code snippets. These define how the agent should approach specific tasks, ensuring efficient and accurate execution. For instance, an agent summarizing news articles might have a skill that involves identifying key sentences and compiling them into a concise summary.

Choosing the Right Tool for the Job

AutoGen lets users choose which Large Language Model each agent uses. This matters because different LLMs have different strengths. For instance, a model trained on legal documents would be more appropriate for contract analysis, while a model trained on customer reviews would be better for sentiment analysis.

The Agents: Putting it All Together

Agents are the heart of Autogen. They are configured with specific models, skills, and pre-defined prompts. Think of them as the “bots” that carry out the designated tasks based on the capabilities you’ve assigned them. An agent analyzing financial data might be equipped with a financial LLM, a skill for understanding financial reports, and prompts for extracting key metrics.

Workflow: The Master Plan

An individual agent is powerful, but the true magic of Autogen lies in its ability to orchestrate collaboration. A Workflow acts as a comprehensive blueprint, encapsulating all the agents required to complete a complex task and achieve the desired goal. These workflows define how the agents interact, share information, and pass on results to achieve the outcome.

The Group Chat Feature

Autogen takes collaboration a step further with its group chat features. Multiple agents can work together in a group setting, sharing information and coordinating efforts. Features like message visibility control, termination conditions for the group chat, and speaker selection methods ensure a well-orchestrated flow of communication within the agent team.

This allows for complex workflows where different agents contribute their expertise. For instance, imagine a group chat involving a document understanding agent, a legal analysis agent, and a risk assessment agent working together to review a contract.
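The mechanics of a group chat (a shared message history, a speaker-selection rule, and a termination condition) can be illustrated without any LLM at all. The sketch below is plain Python and is not AutoGen's API: the three agents are hypothetical functions returning canned replies, speakers take turns round-robin, and the chat ends when a message contains `TERMINATE` or a round limit is reached. In AutoGen 0.2, for example, comparable settings appear as the `max_round` and `speaker_selection_method` options of its `GroupChat` class.

```python
from typing import Callable

Message = tuple[str, str]                  # (speaker name, content)
Agent = Callable[[list[Message]], str]     # sees the shared history, returns a reply


# Hypothetical agents with canned replies standing in for LLM calls.
def document_agent(history: list[Message]) -> str:
    return "Extracted clauses: termination, liability cap, indemnity."


def legal_agent(history: list[Message]) -> str:
    return "Liability cap is below the company's standard minimum."


def risk_agent(history: list[Message]) -> str:
    return "Risk: HIGH due to liability cap. TERMINATE"


def run_group_chat(agents: dict[str, Agent], task: str, max_rounds: int = 10) -> list[Message]:
    history: list[Message] = [("user", task)]
    names = list(agents)
    for round_no in range(max_rounds):
        speaker = names[round_no % len(names)]  # round-robin speaker selection
        reply = agents[speaker](history)        # every agent sees the full shared history
        history.append((speaker, reply))
        if "TERMINATE" in reply:                # termination condition
            break
    return history


chat = run_group_chat(
    {"document": document_agent, "legal": legal_agent, "risk": risk_agent},
    task="Review the attached supplier contract.",
)
for speaker, content in chat:
    print(f"{speaker}: {content}")
```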

Some Other Interesting Features

Apart from the features mentioned above, AutoGen also provides several pre-built agent classes that can jumpstart development. These include User Proxy Agents, which stand in for the user by relaying instructions and executing code; Assistant Agents, which are helpful teammates with a default system message; and Conversable Agents, the base class on which both user proxies and assistants are built.

In addition, AutoGen lets users experiment with more specialized agents such as Compressible Agents, which compress the conversation history to stay within a model's context limits, and GPT Assistant Agents, which are backed by OpenAI's Assistants API. While OpenAI's LLMs like GPT-3.5 and GPT-4 are commonly used, AutoGen can be configured to work with users' own local LLMs or those hosted elsewhere, providing flexibility in AI development.

How do you develop AI agents with Microsoft Autogen?

Microsoft AutoGen empowers businesses to create intelligent applications through its framework for building AI agents. Here's a step-by-step guide to developing AI agents with Microsoft AutoGen:

1. Setting Up AutoGen Studio: Your Development Hub

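AutoGen is distributed through the Python Package Index and installed with the pip command-line tool. AutoGen Studio, the low-code interface used in this guide, ships as its own package: pip install autogenstudio. The core framework's package name has changed across releases (for example, pyautogen for the 0.2 series), so check the install instructions for the version you use. This installs the libraries needed to work with AutoGen in your Python development environment.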

To access the power of Large Language Models like GPT-3 and GPT-4, users need to configure their API key. This key acts as their access card, granting their agents the ability to interact with these powerful AI models. AutoGen doesn’t limit users to specific hosting options. You can leverage:

- Locally Hosted LLMs: If you have pre-trained LLM models within your infrastructure, AutoGen allows integration with these models. This might involve specifying the location of the model files and any additional configuration parameters specific to your local deployment.

- External LLM Services: Services like Azure or OpenAI provide access to pre-trained LLMs through APIs. AutoGen Studio facilitates integration with these services by requiring you to specify the service provider, API endpoint details, and relevant authentication credentials.

This flexibility allows users to tailor their development process to their existing resources. Once everything is configured, users can launch AutoGen Studio from the command line (for example, autogenstudio ui --port 8081), which opens a web interface that serves as their central hub for building and managing AI agents.

2. Developing Skills

In AutoGen, skills are Python functions that give agents specific capabilities. Each skill has a specific purpose, such as summarizing news articles or extracting key data from financial reports.

When creating a skill, the user defines its purpose within the function and implements the logic using Python code. This code may involve manipulating text data, interacting with external APIs, or utilizing the capabilities of the assigned LLM model.

AutoGen includes a library of pre-built skills for common tasks such as text summarization or sentiment analysis, which users can leverage to get a quick start. However, the true power lies in creating custom skills tailored to specific needs, allowing for the development of highly specialized agents that address unique business challenges.
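A skill is, in essence, a documented Python function that an agent can call. As an illustration of the news-summarization skill mentioned above, here is a simple frequency-based extractive summarizer. It is a hypothetical example of what a custom skill's body might contain, not one of AutoGen's pre-built skills; a production skill might instead delegate to the agent's LLM.

```python
import re
from collections import Counter

STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "on", "for", "is",
             "are", "was", "it", "its", "that", "with", "as", "by", "at"}


def summarize_article(text: str, max_sentences: int = 2) -> str:
    """Return the highest-scoring sentences, in their original order.

    Each sentence is scored by the summed article-wide frequency of its
    non-stopword words, normalized by the sentence's word count.
    """
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]
    freq = Counter(words)

    def score(sentence: str) -> float:
        tokens = [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]
        return sum(freq[w] for w in tokens) / (len(tokens) or 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    top = sorted(ranked[:max_sentences])  # restore original sentence order
    return " ".join(sentences[i] for i in top)


article = ("Solar power output rose sharply. Analysts expect solar power to keep growing. "
           "The weather was mild.")
print(summarize_article(article, max_sentences=1))
```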

3. Leveraging Models: The Brains Behind the Operation

As mentioned earlier, AutoGen provides users with a high degree of flexibility when it comes to hosting Large Language Models. Users can use models hosted locally within their infrastructure or integrate with models provided by external services like Azure or OpenAI.

AutoGen Studio simplifies the process of integrating various LLM models into an application, whether users decide to use a local model or an external service. This allows users to focus on building their applications without worrying about complex integration tasks.

It’s important to note that different LLM models excel in different areas. AutoGen gives users the ability to choose the most suitable model for their specific application needs. For example, it would not be optimal to use a financial analysis model for legal document review. By carefully selecting the appropriate LLM, users can ensure that their agents have the necessary expertise to handle the tasks assigned to them.

4. Configuring Agents: Assigning Roles and Capabilities

Agents are the core components that carry out tasks and interact with users. They act as “bots” that execute instructions provided by the user. Each agent is configured with specific skills and models. Skills define how the agent approaches tasks, while models provide the underlying power for processing information and generating responses. Skills can be thought of as instructions, while models are the intelligent engines that carry out those instructions.

A primary model can be designated for each agent to act as the default engine for handling user inquiries and executing tasks within the agent's area of expertise. AutoGen offers pre-built agent types such as User Proxy Agents and Assistant Agents: User Proxy Agents stand in for the user and can relay human input or execute code, while Assistant Agents use an LLM to reason about tasks and respond in natural language. Both build on AutoGen's base Conversable Agent. Custom agents can also be created to meet specific needs, allowing for a diverse team of AI assistants, each with unique functionality.
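The relationship between agents, skills, and models can be captured as plain configuration data. The sketch below uses a hypothetical schema for illustration only, not AutoGen's own configuration format; the model name, endpoint URL, and skill are placeholders.

```python
import re
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class ModelConfig:
    name: str                       # placeholder model identifier
    endpoint: Optional[str] = None  # None = provider default; set for self-hosted models


@dataclass
class AgentConfig:
    name: str
    system_message: str             # the agent's role and instructions
    model: ModelConfig              # the "engine" that carries out the instructions
    skills: dict[str, Callable[..., Any]] = field(default_factory=dict)

    def call_skill(self, skill: str, *args: Any) -> Any:
        if skill not in self.skills:
            raise KeyError(f"{self.name} has no skill {skill!r}")
        return self.skills[skill](*args)


def extract_percentages(text: str) -> list[str]:
    """Hypothetical skill: pull percentage figures out of report text."""
    return re.findall(r"\d+(?:\.\d+)?%", text)


analyst = AgentConfig(
    name="financial_analyst",
    system_message="You extract key metrics from quarterly financial reports.",
    model=ModelConfig(name="finance-tuned-llm", endpoint="http://localhost:8000/v1"),
    skills={"extract_percentages": extract_percentages},
)
print(analyst.call_skill("extract_percentages", "Revenue grew 12% while margins fell 1.5%."))
```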

5. Developing Workflows: Orchestrating Agent Collaboration

AutoGen utilizes JSON or JavaScript Object Notation to define workflows in a human-readable format. The format specifies the sequence of steps, the agents involved, and the data exchanged between them. Each step within the workflow is represented as a JSON object with properties that define the agent to be invoked, the specific skill to be executed, and any required input data.
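To illustrate a step-by-step JSON workflow, here is a small interpreter for a hypothetical schema in which each step names an agent, a skill, and an input. The field names (`agent`, `skill`, `input`) and the `$previous` placeholder are assumptions made for this sketch; AutoGen Studio's actual export format may differ and should be checked against its documentation.

```python
import json
from typing import Callable

# Hypothetical skills, keyed by (agent, skill) as referenced in the workflow JSON.
SKILLS: dict[tuple[str, str], Callable[[str], str]] = {
    ("reader", "extract_text"): lambda doc: doc.strip(),
    ("writer", "draft_headline"): lambda text: f"Headline: {text.capitalize()}",
}

WORKFLOW = json.loads("""
{
  "name": "demo",
  "steps": [
    {"agent": "reader", "skill": "extract_text", "input": "  quarterly revenue rose  "},
    {"agent": "writer", "skill": "draft_headline", "input": "$previous"}
  ]
}
""")


def run_workflow(workflow: dict) -> str:
    previous = ""
    for step in workflow["steps"]:
        fn = SKILLS[(step["agent"], step["skill"])]
        arg = previous if step["input"] == "$previous" else step["input"]
        previous = fn(arg)  # each step's output feeds the next step
    return previous


print(run_workflow(WORKFLOW))
```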

Agents within a workflow communicate through asynchronous messaging. Each agent publishes messages containing processed data or task results to a central message queue. Other agents subscribed to the appropriate queues can retrieve these messages and use the data for their processing. AutoGen also allows for defining workflow state variables that persist across different steps, facilitating information sharing and context management across the entire workflow execution.

While AutoGen provides a high-level workflow design interface, experienced developers can use the underlying Python libraries for more granular control. Libraries like asyncio can coordinate asynchronous tasks, while message brokers such as RabbitMQ or Apache Kafka can be integrated for robust communication between agents.
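Here is a minimal sketch of asynchronous message passing between two agents, using only `asyncio.Queue` from the standard library. The in-process queue stands in for the message queue described above; in a distributed deployment, a broker such as RabbitMQ or Kafka would take its place. The agent coroutines and the `None` end-of-stream sentinel are illustrative choices.

```python
import asyncio
from typing import Optional


async def extractor(outbox: "asyncio.Queue[Optional[str]]", docs: list[str]) -> None:
    """Publishes one processed message per document, then a sentinel."""
    for doc in docs:
        await asyncio.sleep(0)         # yield control, as real I/O would
        await outbox.put(doc.lower())
    await outbox.put(None)             # signal: no more messages


async def classifier(inbox: "asyncio.Queue[Optional[str]]", results: list[str]) -> None:
    """Consumes messages until the sentinel arrives."""
    while (msg := await inbox.get()) is not None:
        label = "urgent" if "outage" in msg else "routine"
        results.append(f"{label}: {msg}")


async def main(docs: list[str]) -> list[str]:
    channel: "asyncio.Queue[Optional[str]]" = asyncio.Queue()
    results: list[str] = []
    # Both agents run concurrently, communicating only through the queue.
    await asyncio.gather(extractor(channel, docs), classifier(channel, results))
    return results


print(asyncio.run(main(["Network OUTAGE in region A", "Monthly report ready"])))
```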

6. Leveraging AutoGen Playground: Testing and Refinement

The AutoGen Playground serves as a testing ground for your workflows. You can provide sample inputs, initiate workflow execution, and observe the interactions between agents.

The AutoGen Playground integrates seamlessly with Jupyter Notebook, a popular web-based environment for interactive computing. This allows developers to define workflows within notebook cells, provide sample inputs, and trigger execution using Python code within the same environment. This interactive environment allows you to test different configurations, identify potential issues, and refine your workflows before deployment.

The Playground provides detailed logs of agent activity within the workflow. You can see what data each agent processes, what skills are invoked, and the results generated. This visualization helps you understand the workflow execution flow and identify areas for improvement. Once you’ve finalized your workflows in the Playground, AutoGen Studio offers the ability to generate production-ready Python code. This code encapsulates the defined workflows and agent configurations, allowing you to integrate your AI application into your existing systems or deploy it as a standalone service.

Top 7 AI Agents Platforms

Here are the top AI agent platforms you should keep an eye on this year:

1. Dialogflow (by Google)

A popular platform from Google, Dialogflow offers a user-friendly interface and pre-built natural language understanding models. It integrates seamlessly with Google Cloud Platform services and allows for omnichannel deployment (web, mobile, messaging platforms).

- Strength in Google Integration: Seamless integration with Google Assistant and other Google products, such as Cloud Speech-to-Text for recognizing spoken input and Cloud Text-to-Speech for spoken responses.

- Focus on Conversational Design: Offers a user-friendly interface for designing conversation flows with pre-built templates and rich message capabilities for engaging interactions.

- Scalability and Flexibility: Comes in two editions, Dialogflow CX and Dialogflow ES, catering to different needs, along with features for handling large volumes of queries and complex conversations.

2. Amazon Lex

A compelling solution from Amazon, Lex offers robust NLU capabilities and integrates seamlessly with other Amazon Web Services products. It’s well-suited for businesses already invested in the AWS ecosystem and looking for a scalable solution.

- Focus on Enterprise Needs: Designed for building chatbots within the AWS ecosystem, offering tight integration with other AWS services and features for secure data handling.

- Automatic Speech Recognition and Natural Language Understanding: Built-in ASR and NLU capabilities for understanding user intent from text or speech input.

- Multilingual Support: Supports a wide range of languages for creating chatbots that can interact with a global audience.

3. Microsoft Azure Bot Service

Part of the Microsoft Azure suite, Bot Service provides a comprehensive toolkit for building, deploying, and managing AI agents. It offers flexibility and integrates with various Microsoft products and third-party tools.

- Microsoft Integration Advantage: Integrates well with Microsoft products like Teams and Power Virtual Agents, allowing for easy bot deployment within the Microsoft ecosystem.

- Proactive Bot Management: Offers tools for proactive bot monitoring and analytics, enabling developers to optimize bot performance and user experience.

- Flexibility with Programming Languages: Supports development using various programming languages like C#, Python, and Java, providing flexibility for developers with different preferences.

4. IBM Watson Assistant

Leveraging IBM’s expertise in AI, Watson Assistant boasts advanced NLU capabilities and machine learning features. It excels at handling complex conversations and personalizing interactions based on user data.

- Focus on Machine Learning: Leverages IBM’s Watson machine learning engine for advanced intent recognition and context understanding, resulting in more natural and informative conversations.

- Customization Options: Offers a high degree of customization for building complex conversational experiences with features like custom entities and dialog workflows.

- Multilingual Capabilities: Supports a wide range of languages for creating chatbots that can interact across different regions.

5. Rasa Stack

Being an open-source platform, Rasa Stack offers developers greater control and customization. While requiring more technical expertise, Rasa provides a flexible framework for building complex AI agents with custom functionalities.

- Open-source and Customizable: An open-source platform offering full control over bot development and customization, allowing developers to tailor the platform to specific needs.

- Focus on Community and Developer Tools: Users can benefit from the large and active developer community providing support and pre-built components for faster development.

- Machine Learning Flexibility: Integrates with machine learning and NLP libraries such as TensorFlow and spaCy, allowing developers to use their preferred tools for training custom NLU models.

6. ManyChat

Originally built for Facebook Messenger, ManyChat offers a user-friendly interface and drag-and-drop functionality. It's a great option for businesses that want a chatbot presence on Meta's messaging channels, such as Messenger and Instagram.

- Ease of Use: Designed for non-technical users, offering a drag-and-drop interface for building chatbots without needing to write code.

- Focus on Social Media Integration: Specializes in building chatbots for Facebook Messenger, Instagram, and other social media platforms, making it ideal for social media marketing and customer engagement.

- Marketing Automation Features: Offers built-in marketing automation tools like email sequences and drip campaigns within the chatbot platform.

7. LivePerson Conversational AI Platform

A comprehensive solution from LivePerson, this platform goes beyond chatbots and offers a suite of tools for managing customer interactions across various channels. It caters to businesses seeking an omnichannel AI strategy with features like live chat and agent handover.

- Focus on Conversational Commerce: Designed for businesses looking to leverage chatbots for sales and customer support, offering features for product recommendations, order processing, and live agent handoff.

- Omnichannel Engagement: Enables building chatbots that can interact with customers across various channels like messaging apps, websites, and social media.

- AI-powered Agent Assist: Provides features for real-time agent assistance, suggesting responses and next best actions to human agents during customer interactions.

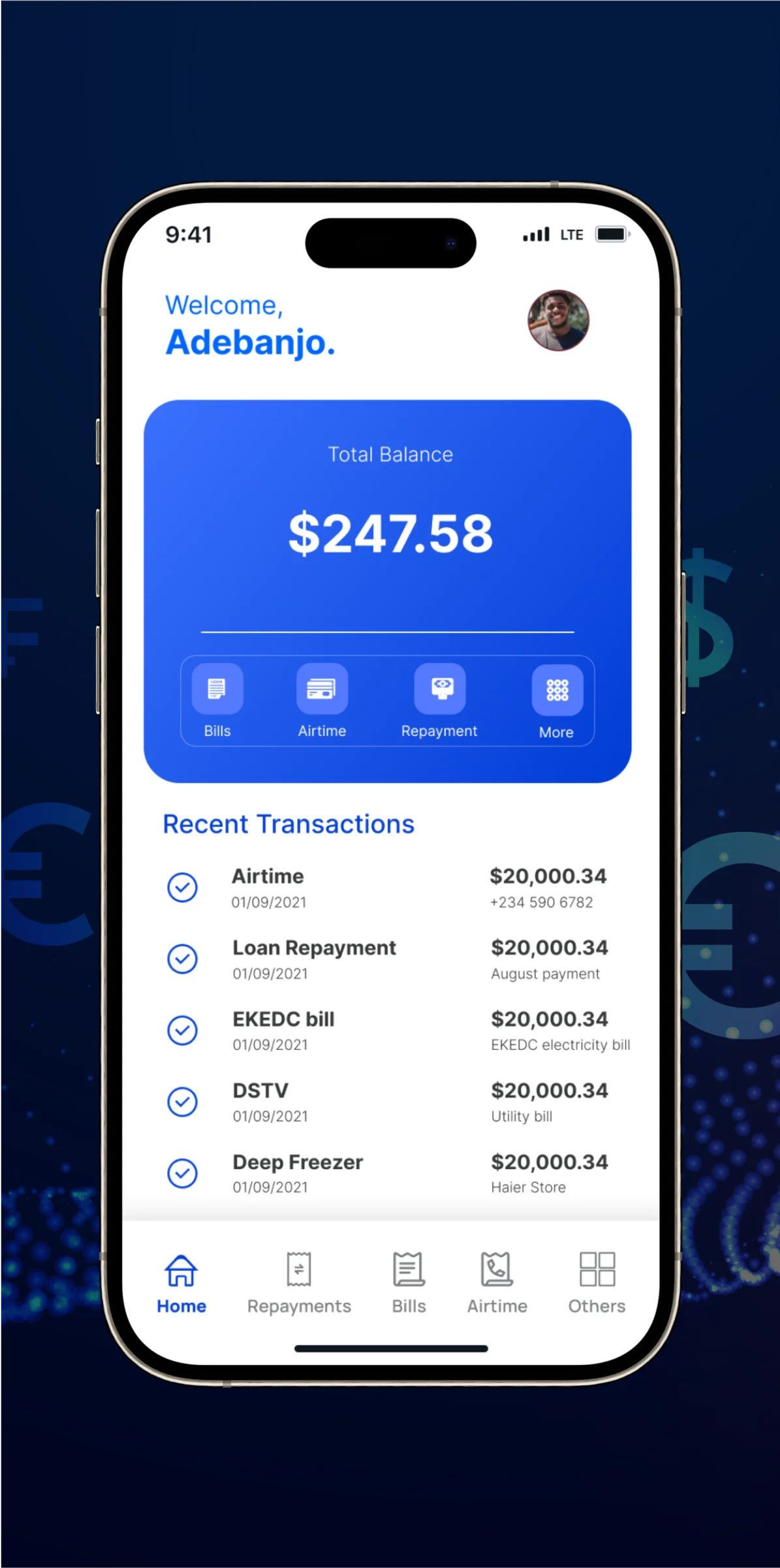

Some of Our Projects at Idea Usher

At Idea Usher, we’re driven by a relentless pursuit of innovation. We’re not just software developers – we’re a team of passionate creators who transform ideas into tangible solutions. We collaborate with forward-thinking clients across diverse industries, tackling unique challenges and shaping the future of technology.

Here are some interesting projects we've recently worked on:

1. Image AI

Our client came to Idea Usher with a unique idea for an AI-powered Image Generator, a design tool that would assist users in creating high-quality images. They wanted an application that would provide users with the freedom to customize their images using advanced tools and pre-built templates.

To bring their vision to life, we collaborated with the client on Image AI, a user-friendly app that uses cutting-edge AI to generate images in real time. The AI Image Generator offers a comprehensive range of customization tools and a library of templates, enabling users to unleash their creativity and streamline the image creation process.

2. Metaverse Retail Store

Our client had a groundbreaking vision for the future of retail and approached Idea Usher to bring it to life. They wanted to create a fully immersive metaverse store that would redefine the shopping experience. Our client aimed to build a virtual environment that would allow customers to explore products in 360 degrees, virtually try on items, and receive personalized recommendations.

To achieve this, we partnered with the client and combined our expertise in immersive technologies and innovative design. Together, we crafted a revolutionary metaverse retail store that offers features such as 360-degree product views, allowing customers to examine items in detail. Moreover, our virtual try-on capabilities and personalized recommendations further enhance the shopping experience, pushing boundaries and setting a new standard for the retail industry.

3. VR Office

Our client had a unique vision of using VR technology to revolutionize remote work. They wanted an immersive virtual office space that could boost productivity and collaboration for their team. They aimed to develop a visually appealing environment that could break down the barriers of traditional remote work.

To achieve this goal, Idea Usher partnered with the client and leveraged our expertise in VR technology and user-centric design. We developed a groundbreaking virtual office platform after conducting meticulous research and user testing. Our unwavering commitment to detail enabled us to create a solution that empowers organizations to flourish in the digital age.

Our immersive VR office space can foster seamless collaboration and enhance productivity, making it a game-changer for remote teams.

Conclusion

The development of AI agents is an exciting field that can revolutionize the way we interact with technology. Understanding the process involved in developing AI agents, which includes defining their purpose, training them with data, and testing their abilities, is key to appreciating the complexity of this field. The resulting features, such as natural language processing and decision-making algorithms, unlock a world of possibilities, ranging from intelligent virtual assistants to chatbots and more. As AI agent development continues to evolve, we can expect even more transformative applications across various industries.

Looking to Develop AI Agents?

With over 1,000 hours of experience crafting intelligent agents, our team can guide you through every step of the development process. We'll tailor an AI agent to your specific needs, ensuring it has the right blend of features and functionality. Let Idea Usher turn your vision into a reality. Contact us today and unlock the potential of AI!

FAQs

Q1: What are the features of an AI agent?

A1: AI agents are intelligent systems designed to perceive, reason, and act within their environment. They use sensors or data feeds to gather information, much as we use our eyes and ears. Machine learning algorithms let them learn continuously, adapting to new data and situations. Decision-making algorithms analyze the gathered information and choose the best course of action. Finally, actuators (such as motors) or interfaces to other software systems let them act on their environment. In essence, an AI agent runs a continuous perceive-reason-act cycle to fulfill its designated purpose.
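To make the perceive-reason-act cycle concrete, here is a minimal Python sketch. The environment (a room with a dimmer switch), the class names `LightEnvironment` and `LightingAgent`, and the target and step values are all hypothetical illustrations rather than part of any framework, and the machine learning component described above is deliberately left out to keep the loop readable.

```python
from dataclasses import dataclass, field


@dataclass
class LightEnvironment:
    """Hypothetical environment: a room whose brightness the agent can adjust."""
    brightness: int = 20  # current light level, 0-100

    def read_sensor(self) -> int:
        # Perception: the agent's "eyes" only see the current brightness.
        return self.brightness

    def apply(self, change: int) -> None:
        # Actuation: a dimmer switch changes the light level, clamped to 0-100.
        self.brightness = max(0, min(100, self.brightness + change))


@dataclass
class LightingAgent:
    target: int = 60  # the agent's designated purpose: keep the room at this level
    step: int = 10    # largest adjustment the actuator can make per cycle
    history: list = field(default_factory=list)

    def decide(self, observation: int) -> int:
        # Reasoning: move toward the target, limited to one step per cycle.
        error = self.target - observation
        return max(-self.step, min(self.step, error))

    def run(self, env: LightEnvironment, cycles: int) -> list:
        for _ in range(cycles):
            observation = env.read_sensor()    # perceive
            action = self.decide(observation)  # reason
            env.apply(action)                  # act
            self.history.append((observation, action))
        return self.history


if __name__ == "__main__":
    room = LightEnvironment(brightness=20)
    agent = LightingAgent(target=60, step=10)
    for observation, action in agent.run(room, cycles=6):
        print(f"saw {observation}, adjusted by {action}")
```

Starting at 20, the agent raises the light by 10 per cycle until it reaches 60 and then stops adjusting. A learning agent would additionally update something like `step` or `target` from feedback instead of keeping them fixed.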

Q2: What are the types of agents in AI?

A2: AI agents come in several types, each suited to specific tasks. Reactive agents are the simplest: they respond directly to stimuli in their environment. For example, a thermostat switches heating on or off based on the room's current reading. Model-based agents, on the other hand, maintain an internal model of the world that lets them plan ahead and make informed decisions. For instance, a chess-playing AI considers the current board state and possible moves to strategize its next action. Goal-based agents are driven by specific objectives and actively seek information and take action to achieve them. A robot vacuum cleaner is a good example, constantly gathering information about its surroundings to clean efficiently. Finally, utility-based agents choose between options by scoring their likely outcomes against a predefined measure of success, often called a utility function.
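The difference between a reactive agent and a utility-based agent is easiest to see side by side. The following is a minimal Python sketch under stated assumptions: the thermostat setpoint and its 0.5-degree dead band, the delivery-robot routes, and the utility weights are all hypothetical values chosen for illustration.

```python
from typing import Callable, Dict


def reactive_thermostat(current_temp: float, setpoint: float = 21.0) -> str:
    """Reactive (simple reflex) agent: maps the current reading straight to an action.

    It keeps no memory and does no planning; fixed condition-action rules decide.
    """
    if current_temp < setpoint - 0.5:
        return "heat"
    if current_temp > setpoint + 0.5:
        return "cool"
    return "idle"


def utility_based_choice(
    options: Dict[str, Dict[str, float]],
    utility: Callable[[Dict[str, float]], float],
) -> str:
    """Utility-based agent: scores each option's predicted outcome and picks the highest."""
    return max(options, key=lambda name: utility(options[name]))


# Hypothetical example: a delivery robot choosing a route.
# Each route's predicted outcome: minutes taken and percentage of battery used.
routes = {
    "highway": {"minutes": 12.0, "battery": 30.0},
    "side_streets": {"minutes": 18.0, "battery": 15.0},
    "park_path": {"minutes": 25.0, "battery": 10.0},
}


def delivery_utility(outcome: Dict[str, float]) -> float:
    # Hypothetical weights: each minute costs 1 point, each % of battery costs 0.5.
    return -(1.0 * outcome["minutes"] + 0.5 * outcome["battery"])


if __name__ == "__main__":
    print(reactive_thermostat(18.0))
    print(utility_based_choice(routes, delivery_utility))
```

With these weights the robot picks `side_streets` (score -25.5) over `highway` (-27.0) and `park_path` (-30.0); a utility function that counted only minutes would pick `highway` instead. The thermostat, by contrast, never weighs alternatives. It simply reacts to the current reading.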

Q3: What are some additional features that can be built into AI agents?

A3: Core functionalities of AI systems can be improved by adding extra features. Natural Language Processing allows these systems to understand and respond to human language, which makes their interactions with users more natural and effective. Furthermore, social intelligence equips these systems with the ability to understand social cues, emotions, and human behavior, which in turn leads to better communication and collaboration.

Q4: What are the uses of AI agents?

A4: AI agents have had a significant impact on our daily lives. Virtual assistants such as Siri or Alexa have made it easier for us to complete our daily tasks by responding to voice commands and offering assistance. Customer service chatbots provide 24/7 support on websites or apps, answering questions and resolving issues. Recommendation systems analyze user preferences and data to suggest relevant products or content. Fraud detection systems powered by AI agents analyze financial transactions to identify suspicious activity and prevent fraud.