- What is Retrieval Augmented Generation?

- The Concept of Parametric memory and Non-parametric memory

- Key Market Takeaways for Retrieval Augmented Generation

- How RAG Addresses The Challenges of LLMs?

- Key Benefits of Retrieval Augmented Generation

- The Core Working Mechanism of RAG - A Detailed Overview

- How to Develop a RAG Model?

- Tools and Frameworks Used for Developing a RAG Model

- Retrieval Augmented Generation vs. Traditional Approaches: A Showdown

- Interesting Applications of Retrieval Augmented Generation

- Top 5 RAG Based Platforms

- Conclusion

- Looking to Develop a RAG Model?

- FAQs

Nowadays, businesses are constantly bombarded with information from all sides. This can be a double-edged sword. While vast datasets offer valuable insights, extracting the most relevant information and using it effectively can be a challenge.

This is where Retrieval Augmented Generation (RAG) steps in. RAG is an innovative AI technique that tackles this problem head-on. Think of it as a two-part system made up of a “Retriever” and a “Generator.” The Retriever scours large amounts of data to find the information most relevant to a specific task. This retrieved data then acts as a powerful “augmentation” for the Generator, a large language model that produces the final output.

This ability to leverage external knowledge sources makes RAG a game-changer for businesses. It improves the accuracy and efficiency of AI applications, leading to better customer experiences, more informed decision-making, and, ultimately, a significant competitive advantage.

This blog will explore everything you need to know to develop a RAG model, delve into its key features, and showcase how companies across various industries are harnessing its power to achieve remarkable results.


What is Retrieval Augmented Generation?

Retrieval-augmented generation, or RAG, is a technique that addresses the issue of limited factual knowledge in large language models. It does this by fetching relevant information from external sources, allowing the model to generate more accurate and reliable text. In simpler terms, it gives large language models a way to consult an “external library” to double-check their information before responding to questions.

RAG has two components:

- Retriever Component: This acts as a search engine, sifting through vast amounts of external data to find information relevant to a user’s query. It retrieves the most pertinent documents or text passages that can serve as a factual foundation for the generative component.

- Generative Component: This component leverages the retrieved information alongside its own internal knowledge to craft a response. The retrieved text provides context and ensures the generated response is grounded in factual accuracy.

In short, RAG pairs a data-finding “retrieval” component with a text-generating component. When a user submits a query, the retrieval component hunts for relevant external information. The “generative” component then combines this retrieved data with its own internal knowledge to craft a response that is both informative and grounded in the retrieved sources.
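To make the two-component contract concrete, here is a minimal Python sketch. It is illustrative only: `keyword_retriever` ranks passages by shared words (production retrievers usually compare vector embeddings instead), `echo_generator` is a hypothetical stand-in for a call to a large language model, and the three corpus sentences are made-up sample data.

```python
import re
from typing import Callable, List

def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def keyword_retriever(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Toy retriever: rank passages by the number of distinct query words they contain."""
    q_words = set(tokenize(query))
    scored = [(len(q_words & set(tokenize(doc))), i, doc) for i, doc in enumerate(corpus)]
    scored.sort(key=lambda t: (-t[0], t[1]))  # most overlap first, ties keep corpus order
    return [doc for score, _, doc in scored[:k] if score > 0]

def echo_generator(query: str, passages: List[str]) -> str:
    """Hypothetical stand-in for an LLM call; a real generator would write a fluent answer."""
    if not passages:
        return "I could not find supporting information."
    return f"Based on the retrieved text: {passages[0]}"

def rag_answer(query: str, corpus: List[str],
               retrieve: Callable[[str, List[str], int], List[str]],
               generate: Callable[[str, List[str]], str], k: int = 2) -> str:
    """Retrieve first, then generate an answer conditioned on the retrieved passages."""
    passages = retrieve(query, corpus, k)
    return generate(query, passages)

corpus = [
    "The warranty covers parts for two years.",
    "Our store opens at 9am.",
    "Shipping is free over $50.",
]
print(rag_answer("How long is the warranty?", corpus, keyword_retriever, echo_generator))
# Based on the retrieved text: The warranty covers parts for two years.
```

Passing the retriever and generator in as functions keeps the two halves swappable, which mirrors how RAG systems let you upgrade the search layer or the language model independently.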

RAG has many use cases, such as creating customer service chatbots that can refer to manuals or FAQs, generating informative product descriptions, and creating social media marketing posts that utilize industry trends or customer sentiment analysis.

The Concept of Parametric memory and Non-parametric memory

Businesses are relying more and more on large language models to perform tasks like text generation and information retrieval. However, traditional LLMs have limitations. Fine-tuning these models can be costly, and creating entirely new models can be time-consuming. Moreover, prompt engineering techniques, while effective, require significant expertise to use optimally.

Parametric memory refers to the traditional approach of storing knowledge directly within the model’s parameters (its weights), which stay fixed once training ends. Non-parametric memory, on the other hand, lets the model retrieve information from external sources at inference time. This is a significant advantage: the external knowledge base can be updated, expanded, and inspected without retraining the model, overcoming the static nature of conventional parametric memory.

Early examples of this approach are also quite promising. Models like REALM and ORQA combine masked language models with differentiable retrievers. These retrievers work like built-in search engines, letting the model look up and process relevant passages from external knowledge bases.

How Did RAG Take It a Step Further?

Sure, the concepts of parametric and non-parametric memory offer a significant leap forward in LLM capabilities. However, Retrieval-Augmented Generation (RAG) takes this concept a step further by providing a general-purpose fine-tuning approach. RAG essentially equips pre-trained language models with a powerful research assistant.

Here’s how it works: RAG leverages two memory systems – a pre-trained seq2seq model acts as the parametric memory, while a dense vector index of external knowledge sources, like Wikipedia, serves as the non-parametric memory. To bridge the gap and access this external knowledge, RAG employs a pre-trained neural retriever. This retriever, often a Dense Passage Retriever (DPR), acts like a super-powered search engine, sifting through the vast external knowledge base and delivering the most relevant information to the LLM.

This combined approach lets RAG models do two crucial things. First, they condition their text generation on the retrieved information, which helps ground their responses in factual sources. Second, they can dynamically incorporate real-world knowledge into their outputs, staying up to date as long as the external index is kept current.
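The original RAG paper (Lewis et al., 2020) formalizes this for its RAG-Sequence variant. The retriever scores a passage z for an input x with the inner product of a document encoder d and a query encoder q, and the generator’s output is marginalized over the top-k retrieved passages:

```latex
p_\eta(z \mid x) \propto \exp\left(\mathbf{d}(z)^\top \mathbf{q}(x)\right)

p_{\text{RAG-Sequence}}(y \mid x) \approx \sum_{z \in \text{top-}k\left(p_\eta(\cdot \mid x)\right)} p_\eta(z \mid x) \prod_{i=1}^{N} p_\theta\left(y_i \mid x, z, y_{1:i-1}\right)
```

Here p_η is the retriever (DPR, whose two encoders are BERT models), p_θ is the seq2seq generator (BART in the paper), and the sum runs only over the k passages the retriever returns. The paper also describes a RAG-Token variant that can draw on a different passage for each generated token.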

Key Market Takeaways for Retrieval Augmented Generation

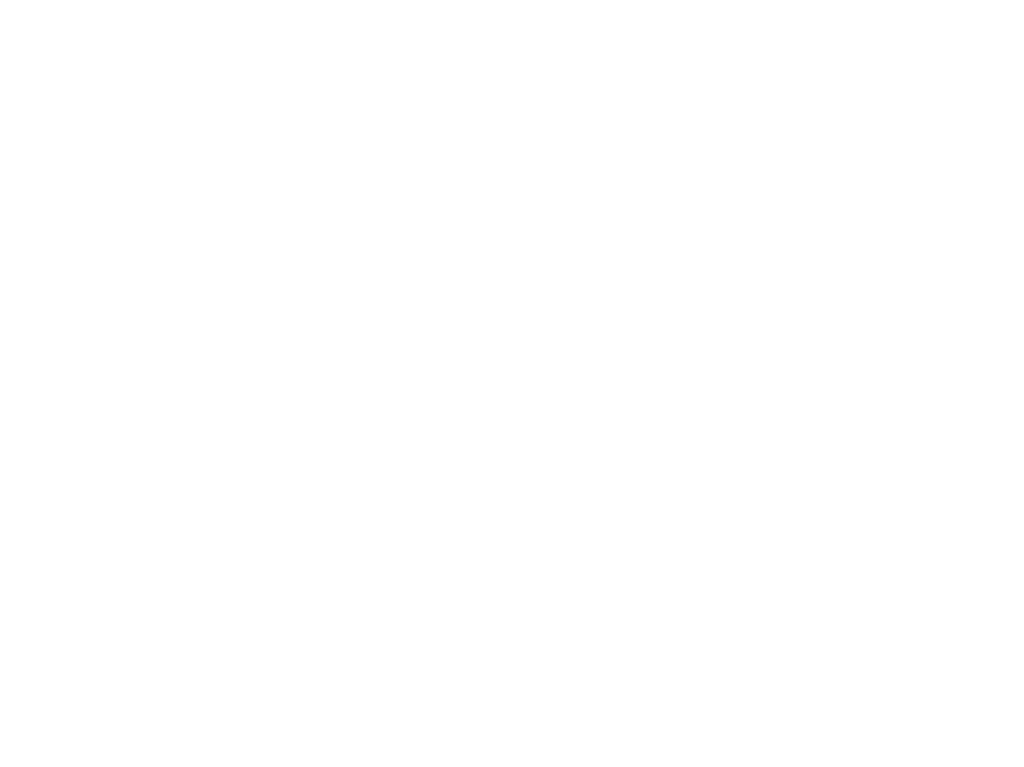

According to Grand View Research, the market for large language models is experiencing explosive growth, with a projected CAGR of 35.9% from 2024 to 2030. Advancements like Retrieval Augmented Generation (RAG), which significantly enhances the capabilities of LLMs, are helping to fuel this surge.

Source: Grand View Research

A study by Meta AI demonstrates the power of RAG. Compared to traditional LLMs, RAG models achieved a 10.4% increase in exact match accuracy and a 7.7% boost in F1 scores on factual question-answering tasks. Improvements like these can translate into real-world benefits for businesses. For instance, customer service chatbots equipped with RAG can access and process relevant information from various sources, enabling them to provide accurate and up-to-date responses to customer inquiries. This not only enhances customer satisfaction but also lowers operational expenses.

The growing adoption of RAG is evident in the actions of major tech companies. Google and Amazon are actively integrating RAG into their cloud search services. Businesses of all sizes are recognizing its value in areas like internal knowledge management, data-driven marketing content creation, and personalized customer interactions. As RAG technology matures further, we can expect even wider adoption across industries, giving businesses a significant competitive advantage.

How RAG Addresses The Challenges of LLMs?

Large Language Models have taken the world by storm, showcasing their ability to generate human-quality writing, translate languages with impressive fluency, and even craft creative text formats. However, despite their remarkable capabilities, LLMs face some inherent limitations that hinder their effectiveness in real-world business applications.

Let’s see how RAG acts as a powerful solution, addressing these shortcomings of LLMs and paving the way for a new era of reliable and impactful language models that empower businesses across various sectors.

1. Memory constraints

One key hurdle for LLMs is memory constraints. Even though they are trained on massive datasets, LLMs cannot reliably retain and recall everything they were exposed to, and they know nothing about events after their training cutoff. This can lead to factual inconsistencies or irrelevant responses. RAG tackles this with a retrieval component that works like a search engine, sifting through external data sources to find the information most pertinent to a specific query. By drawing on these sources, RAG grounds the generated text in relevant information, even if that information was never stored in the LLM itself, and keeps it current as long as the sources are kept up to date.

2. Lack of provenance

Another challenge for LLMs is the lack of provenance, making it difficult to track the source of the information they generate. This can be problematic for organizations that need verifiable and trustworthy content. RAG addresses this by providing a clear audit trail. The retrieval component identifies the specific external sources used to inform the generated text. This transparency allows businesses to ensure the factual accuracy of the content and builds trust with their audience.

3. Potential for “hallucinations”

Perhaps the best-known limitation of LLMs is their tendency to “hallucinate,” fabricating information that sounds plausible but is factually incorrect. This can be particularly damaging for customer service chatbots or any application where accuracy is paramount. RAG mitigates this risk by grounding the generation process in real-world data. When the model relies on information retrieved from credible sources, its output is far more likely to be factually reliable, not just creative and informative.

4. Lack of domain-specific knowledge

Finally, LLMs often lack domain-specific knowledge, limiting their ability to handle specialized tasks or cater to specific industries. For businesses requiring industry-specific content or applications, this can be a significant barrier. RAG offers a solution by allowing for the integration of domain-specific knowledge bases into the retrieval component. This enables RAG to access and utilize targeted information, empowering businesses to develop LLMs that excel in their specific fields.

Key Benefits of Retrieval Augmented Generation

Let us discuss some key benefits of RAG:

1. Understanding the User’s Nuances

One of the important advantages of RAG is its ability to generate context-aware responses. By retrieving and incorporating relevant information alongside user queries, RAG-powered AI systems can grasp the user’s intent and provide answers that consider the specific context and underlying nuances of the question. This is particularly valuable for companies in the customer service domain, where addressing customer inquiries with precision and empathy is crucial for building trust and loyalty.

2. Accuracy You Can Trust

RAG takes AI accuracy to a new level. By giving the model access to current data and domain-specific knowledge, RAG helps ensure that AI responses are grounded in factual information and relevant to the specific industry or field. This enhanced accuracy is particularly beneficial in sectors like healthcare or finance, where even minor errors can have significant consequences.

3. Efficiency & Cost Savings

Implementing RAG can lead to significant cost savings for businesses. Unlike traditional approaches that may require frequent model adjustments, extensive data labeling, and resource-intensive fine-tuning processes, RAG leverages retrieved information to streamline the development and maintenance of AI models. This translates to faster development cycles, reduced development costs, and a more efficient allocation of resources.

4. A Solution for Diverse Needs

The versatility of RAG is another key strength, as this technology can be applied to a diverse range of applications, including customer support chatbots, content generation for marketing campaigns, research assistance tools, and many more. This allows companies across various industries to leverage RAG’s capabilities to address their specific needs and unlock new avenues for growth.

5. Building User Trust

Users who interact with AI systems powered by RAG experience a significant improvement in terms of response accuracy and relevance. This leads to a more positive user experience, resulting in increased trust in AI-powered solutions. In today’s digital age, building trust with customers is of utmost importance, and RAG can be a valuable tool in achieving this goal.

6. Adapting to a Changing World

The business world is constantly evolving, and the ability to adapt is critical for success. With RAG, new information becomes available to the AI system as soon as it is added to the external knowledge base, without retraining or rebuilding the model. This helps AI systems remain relevant and effective even in dynamic environments where information is constantly changing.

7. Reduced Data Labeling Burden

Traditional AI development often requires vast amounts of manually labeled data, a time-consuming and expensive process. RAG reduces this burden by drawing on existing, unlabeled data sources at query time instead of baking that knowledge into the model through labeled training examples. This allows companies to develop and deploy AI models faster and more cost-effectively.

The Core Working Mechanism of RAG – A Detailed Overview

Let us discuss the intricate workings of RAG and dissect its core mechanism:

The Retrieval Process

First comes the retrieval process:

1. Reaching Beyond the LLM’s Knowledge Base

Unlike traditional LLMs confined to their internal knowledge, RAG incorporates an external information retrieval process. This grants businesses access to a rich ecosystem of data sources, encompassing databases, documents, websites, and even APIs. By venturing beyond the LLM’s internal data stores, RAG ensures that AI responses are grounded in factual information relevant to the specific business domain.

2. Chunking for Efficiency

The retrieved data can be vast and complex. To facilitate efficient processing, RAG employs a technique called chunking. This involves breaking down the retrieved data into smaller, more manageable pieces. Each chunk becomes a potential building block for the final response. Think of chunking as chopping a large research paper into sections on specific topics. This allows RAG to identify the most relevant information quickly.
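A minimal sketch of chunking in Python, assuming a simple word-based sliding window. Production pipelines often split by tokens, sentences, or headings instead, and the `chunk_size` and `overlap` values here are illustrative, not recommendations. The overlap keeps a sentence that straddles a boundary visible in both neighbouring chunks.

```python
from typing import List

def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into windows of `chunk_size` words; consecutive windows share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks

print(chunk_words("w0 w1 w2 w3 w4 w5 w6 w7 w8 w9", chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```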

3. Unlocking the Meaning Within

After breaking the text into smaller pieces, RAG converts each piece into a numerical format known as a vector (an embedding). These vectors encode the semantic meaning of the text, so pieces with similar meanings end up close together, and RAG can find relevant information based on what the user’s query means rather than its exact wording. Think of a vector as a digital signature that captures the essence of a text fragment. By comparing these signatures, RAG can efficiently match the stored information with the user’s specific requirements.
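The sketch below shows the *shape* of this step: text goes in, a fixed-length, unit-normalized vector comes out. It is a deliberate simplification. `embed` is a hypothetical hashing bag-of-words function that only captures word overlap, whereas real RAG systems use a trained neural embedding model in which paraphrases land close together.

```python
import hashlib
import math
import re
from typing import List

def embed(text: str, dim: int = 64) -> List[float]:
    """Toy embedding: hash each word into one of `dim` buckets, count, then L2-normalize."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # md5 gives a hash that is stable across runs (Python's built-in hash() is salted per process).
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec

a = embed("refund policy")
b = embed("Refund POLICY!")
print(a == b)  # True: case and punctuation are ignored by the tokenizer
```

Normalizing to unit length means a plain dot product between two vectors equals their cosine similarity, which keeps the later search step simple.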

4. Adding Context with Metadata

In addition to chunking and vectorization, RAG meticulously creates metadata for each chunk. This metadata acts like a digital label, containing details about the source and context of the information. This becomes crucial for citing sources and ensuring transparency within the AI-generated response. Consider a news article about a company’s financial performance. The metadata might include details like the publication date, author, and specific section of the article the chunk originates from. This allows for clear attribution and fosters trust in the information presented.
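Here is one way to carry metadata alongside each chunk, sketched in Python. The `Chunk` class, the `make_chunks` helper, and the metadata keys (`source`, `section`, `date`, `chunk_id`) are hypothetical choices for illustration; vector databases typically let you attach an arbitrary metadata dictionary to each stored vector in a similar way.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Chunk:
    text: str
    metadata: Dict[str, str] = field(default_factory=dict)

    def citation(self) -> str:
        """Render a short source label from whichever metadata fields are present."""
        parts = [self.metadata[key] for key in ("source", "section", "date") if key in self.metadata]
        return ", ".join(parts) if parts else "unknown source"

def make_chunks(pieces: List[str], **doc_metadata: str) -> List[Chunk]:
    """Copy the parent document's metadata onto every piece and add a chunk index."""
    return [Chunk(text=p, metadata={**doc_metadata, "chunk_id": str(i)}) for i, p in enumerate(pieces)]

# Hypothetical sample values, not a real article.
chunks = make_chunks(["First paragraph.", "Second paragraph."],
                     source="Example News", section="Q3 results", date="2024-01-15")
print(chunks[0].citation())  # Example News, Q3 results, 2024-01-15
```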

The Generation Process

Having explored RAG’s retrieval process, let’s delve into the generation phase, where the gathered information is transformed into a comprehensive response. This stage highlights how RAG leverages both external data and the LLM’s internal knowledge to deliver informative answers.

1. Understanding the User’s Intent

The process starts when the user enters a query or prompt. This serves as the foundation for generating a response. RAG doesn’t simply process the query literally; it employs a technique called semantic search. Here, the user’s query is converted into a vector format, similar to how the retrieved data chunks were transformed. This vector captures the meaning and intent behind the user’s question, allowing RAG to identify the most relevant information from the retrieved data.

2. Finding the Perfect Pieces

With both the user’s query and the data chunks represented as vectors, RAG can embark on its core function – information retrieval. The system searches through the preprocessed data chunks, comparing their vector representations to the user’s query vector.

This allows RAG to pinpoint the chunks containing the most relevant information for addressing the user’s specific needs. Imagine a customer service chatbot receiving a question about a product’s warranty. RAG would search through product information chunks, warranty policy documents, and even customer forum discussions (if included in the data sources) to identify the most relevant details for crafting a comprehensive response.
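The comparison step can be sketched as a brute-force cosine-similarity search. In this Python example the three-dimensional vectors are hand-made placeholders. In practice, the query and the chunks are embedded with the same model, and at scale an approximate nearest-neighbour library such as Faiss replaces the linear scan.

```python
import math
from typing import List, Sequence, Tuple

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def top_k(query_vec: Sequence[float], chunk_vecs: List[Sequence[float]], k: int = 3) -> List[Tuple[int, float]]:
    """Score every chunk against the query and return (chunk index, score) for the k best."""
    scores = [(i, cosine(query_vec, v)) for i, v in enumerate(chunk_vecs)]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:k]

chunk_vecs = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 0.0, 1.0]]  # placeholder embeddings
print([i for i, _ in top_k([1.0, 0.0, 0.0], chunk_vecs, k=2)])  # [0, 1]
```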

3. Bridging the Gap Between Retrieval and Generation

RAG doesn’t simply present the retrieved chunks to the user in a raw format. Instead, it combines the most relevant information from these chunks with the user’s original query. This combined input is then presented to a foundation model, such as GPT-3. These foundation models are powerful language generation tools that can leverage the combined query and retrieved information to create a human-quality response that is contextually relevant to the user’s question.
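A minimal sketch of how the query and the retrieved chunks might be combined into a single prompt. The template wording and the `build_prompt` helper are assumptions, not a standard. The resulting string would then be sent to a foundation model through its API (not shown). Numbering the passages and labelling their sources is what makes it possible to ask the model for citations.

```python
from typing import List, Tuple

def build_prompt(query: str, passages: List[Tuple[str, str]]) -> str:
    """Combine the user query with retrieved (source, text) pairs into one prompt string."""
    context = "\n".join(f"[{i}] ({source}) {text}" for i, (source, text) in enumerate(passages, start=1))
    return (
        "Answer the question using only the numbered context below. "
        "Cite passages like [1]. If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )

prompt = build_prompt(
    "What does the warranty cover?",
    [("warranty.pdf", "Parts are covered for two years."), ("faq.html", "Labor is not covered.")],
)
print(prompt)
```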

How to Develop a RAG Model?

While Retrieval Augmented Generation (RAG) offers immense potential for businesses, developing a RAG model from scratch requires a multi-step approach. Here’s how to do it:

1. Data Wrangling: The Bedrock of Success

A RAG model thrives on high-quality data. Businesses need to identify the information sources most relevant to their goals. This could involve customer service transcripts for chatbots, industry reports for financial analysis, or scientific publications for healthcare diagnostics. Data wrangling becomes crucial here. It involves cleaning and structuring the data to ensure smooth retrieval by the model. Businesses can use data cleaning tools and explore options like named entity recognition (NER) to categorize information within the data for more precise retrieval later.
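A small example of the cleaning half of data wrangling, sketched in Python with only the standard library: Unicode normalization, whitespace collapsing, and removal of empty and exact-duplicate documents. The `clean_documents` helper is hypothetical, and the specific rules are assumptions you would adapt to your data. Entity tagging with NER would be a separate step, not shown here.

```python
import re
import unicodedata
from typing import Iterable, List

def clean_documents(docs: Iterable[str]) -> List[str]:
    """Normalize Unicode and whitespace, drop empty docs, and remove case-insensitive exact duplicates."""
    seen = set()
    cleaned = []
    for doc in docs:
        text = unicodedata.normalize("NFKC", doc)   # e.g. non-breaking spaces become plain spaces
        text = re.sub(r"\s+", " ", text).strip()    # collapse runs of whitespace
        key = text.casefold()
        if text and key not in seen:
            seen.add(key)
            cleaned.append(text)
    return cleaned

print(clean_documents(["  Hello\n world ", "hello world", "", "\t", "Second doc"]))
# ['Hello world', 'Second doc']
```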

2. Embedding Magic: Transforming Text into Numbers

At its heart, RAG relies on the ability to compare a user’s query with the information stored in the data source. This is where embedding models come into play. They convert text into numerical vectors, allowing efficient comparison based on similarity scores. Word-level models like Word2Vec or GloVe are readily available online, but RAG systems more commonly use sentence- or passage-level encoders (for example, Sentence-BERT or the DPR encoders), which turn an entire chunk into a single vector. Businesses can use such pre-trained models or develop custom ones tailored to their specific domain and data characteristics.

3. The Search Party: Unearthing the Most Relevant Information

Once the data is prepared and encoded, a robust retrieval system is needed. This system acts as the RAG model’s search engine: it takes a user query as input and searches the encoded data for the most relevant passages based on their similarity scores. Businesses can use libraries like Faiss, an open-source library for vector similarity search, or established search engines like Apache Lucene for indexing and searching massive datasets.
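Apache Lucene ranks text with keyword-based scoring (its default similarity is a BM25 variant), which complements the dense-vector search that Faiss provides. Below is a simplified Python sketch of the classic BM25 formula over an in-memory list of passages. It is not Lucene’s code or API. The `BM25` class is hypothetical, and `k1=1.2`, `b=0.75` are common default values.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

class BM25:
    """Minimal BM25 ranker over an in-memory list of passages."""

    def __init__(self, passages: List[str], k1: float = 1.2, b: float = 0.75):
        self.k1, self.b = k1, b
        self.docs = [Counter(tokenize(p)) for p in passages]   # term frequencies per passage
        self.lengths = [sum(d.values()) for d in self.docs]
        self.avgdl = sum(self.lengths) / len(self.docs) if self.docs else 0.0
        self.df = Counter(term for d in self.docs for term in d)  # passages containing each term

    def idf(self, term: str) -> float:
        n, total = self.df.get(term, 0), len(self.docs)
        return math.log(1 + (total - n + 0.5) / (n + 0.5))  # rare terms weigh more

    def score(self, query: str, i: int) -> float:
        doc, dl = self.docs[i], self.lengths[i]
        result = 0.0
        for term in tokenize(query):
            tf = doc.get(term, 0)
            if tf == 0:
                continue
            # Length normalization: long passages need more matches to score as high.
            norm = self.k1 * (1 - self.b + self.b * dl / self.avgdl)
            result += self.idf(term) * tf * (self.k1 + 1) / (tf + norm)
        return result

    def search(self, query: str, k: int = 3) -> List[Tuple[int, float]]:
        ranked = sorted(((i, self.score(query, i)) for i in range(len(self.docs))),
                        key=lambda pair: pair[1], reverse=True)
        return ranked[:k]

bm = BM25(["the warranty covers parts", "shipping is free", "warranty claims need a receipt"])
print([i for i, _ in bm.search("warranty receipt", k=2)])  # [2, 0]
```

Keyword scoring like this handles exact terms such as product codes well but misses paraphrases, which is why many RAG systems combine it with vector search.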

4. The Powerhouse: Integrating a Large Language Model

The retrieved information serves as context for the Large Language Model (LLM). Businesses can use pre-trained models like GPT-3 (available through the OpenAI API) or BART (openly available through libraries such as Hugging Face Transformers). However, to truly harness the power of RAG, consider fine-tuning the LLM on your specific data and tasks. This involves training the LLM on your prepared data so that it learns the nuances of your domain and generates more relevant responses.

5. Continuous Learning: Refining the Model for Peak Performance

Developing a RAG model is an ongoing journey, not a one-time destination. Businesses need to evaluate the model’s performance on real-world tasks continuously. This involves monitoring outputs for accuracy, identifying potential biases in retrieved information, and refining retrieval strategies or LLM prompts. By iteratively improving the model, businesses can ensure it delivers optimal results that drive tangible growth.
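One simple, repeatable check for the retrieval half is hit rate at k: for a set of test queries whose relevant passage is known, how often does that passage appear in the top k results? The Python sketch below assumes a hypothetical `retrieve(query, k)` function that returns passage IDs and a small hand-labeled query set. Generation quality (answer accuracy, faithfulness to the cited sources) needs separate checks, often including human review.

```python
from typing import Callable, Dict, List

def hit_rate_at_k(retrieve: Callable[[str, int], List[str]], labeled: Dict[str, str], k: int = 3) -> float:
    """Fraction of queries whose known-relevant passage ID appears among the top-k retrieved IDs."""
    if not labeled:
        return 0.0
    hits = sum(1 for query, relevant_id in labeled.items() if relevant_id in retrieve(query, k))
    return hits / len(labeled)

# Hypothetical retriever and labels, for illustration only.
def fake_retrieve(query: str, k: int) -> List[str]:
    return {"q1": ["a", "b", "c"], "q2": ["d", "e", "f"]}[query][:k]

print(hit_rate_at_k(fake_retrieve, {"q1": "b", "q2": "z"}, k=3))  # 0.5
```

Tracking this number across changes to chunking, embeddings, or retrieval settings shows whether a change actually helped.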

Tools and Frameworks Used for Developing a RAG Model

Developing Retrieval-Augmented Generation (RAG) models requires a specific set of tools and frameworks to handle various functionalities.

These tools ensure efficient data processing, accurate vector creation, effective information retrieval, and seamless interaction with foundation models. Let’s explore some of the key components businesses can leverage to build their RAG systems:

1. Deep Learning Frameworks

Libraries like PyTorch and TensorFlow provide the foundation for building and training custom models for various NLP tasks, including RAG. These frameworks offer the infrastructure needed to develop and fine-tune models specifically for a business’s needs.

2. Pre-trained Models

The Hugging Face Transformers library provides a treasure trove of pre-trained models for a wide range of NLP tasks. Businesses can leverage these models and fine-tune them for RAG applications, accelerating the development process.

3. Semantic Search Tools

Tools like Faiss, Elasticsearch, and Apache Lucene play a vital role in RAG’s information retrieval process. These libraries enable efficient similarity search and clustering of dense vectors, allowing the system to identify the most relevant data chunks based on the user’s query.

4. Deployment Tools

PyTorch Lightning (which streamlines training and exporting PyTorch models) and TensorFlow Serving (a system for serving trained TensorFlow models) help businesses take their RAG models into production environments. These tools support scalable and efficient inference, enabling businesses to handle real-world user queries effectively.

5. Complementary Libraries

Scikit-learn offers a comprehensive suite of machine-learning tools that can be integrated with RAG implementations. Businesses can utilize functionalities like clustering and dimensionality reduction to enhance the model’s performance.

6. Data Preprocessing Tools

Open-source tools like LangChain can be valuable assets for businesses. LangChain streamlines data preparation by facilitating the chunking and preprocessing of large documents into manageable text chunks, ensuring efficient information processing for RAG.

7. Cloud-Based Resources

For businesses working on the Azure platform, Azure Machine Learning provides resources and services specifically designed for managing and deploying RAG models within the cloud environment.

8. Foundation Model Integration

Businesses can leverage OpenAI’s GPT-3 or GPT-4 models as their foundation model. The OpenAI API facilitates the interaction and integration of these models into the RAG system, enabling the generation of human-quality responses.

9. Custom Script Development

Depending on the specific data sources and requirements, businesses may need to develop custom scripts for data preprocessing, cleaning, and transformation to ensure the data is optimized for RAG’s functionalities.

10. Version Control Systems

Implementing version control systems like Git and utilizing platforms like GitHub is crucial for managing code effectively. These tools enable collaboration, track changes, and ensure a smooth development process for businesses building RAG models.

11. Development Notebooks

Jupyter notebooks provide a valuable environment for businesses to experiment, prototype, and document their RAG development process. This allows for clear communication and efficient iteration throughout the development cycle.

12. Real-time Search Acceleration

Pinecone, a vector database designed for real-time similarity search, can be integrated with RAG systems. This integration can significantly accelerate the information retrieval process by enabling faster and more efficient semantic search on vector embeddings.

Retrieval Augmented Generation vs. Traditional Approaches: A Showdown

| Feature | Retrieval Augmented Generation (RAG) | Traditional Approaches |
| --- | --- | --- |
| Retrieval Mechanism | Leverages vector embeddings for semantic search of preprocessed data chunks. Employs techniques like Faiss or Elasticsearch for efficient retrieval. | Relies on keyword matching or rule-based information extraction. May struggle with synonyms or paraphrases in the query. |
| Information Extraction | Utilizes retrieved information and query context to generate a response. Can leverage techniques like Transformers for contextual understanding. | Extracts information based on predefined rules or patterns. Lacks the ability to consider the broader context. |
| Contextual Understanding | Employs attention mechanisms within models like Transformers to understand relationships between query, retrieved information, and relevant context. Generates responses that are not only factually correct but also relevant to the user’s intent. | Limited ability to properly understand the language and context. May lead to responses that are factually accurate but miss the mark on user intent. |
| Paraphrasing & Abstraction | Can paraphrase and abstract retrieved information during response generation. Improves readability and comprehension of the response. | Often presents information in its original form without modification. Can result in clunky or hard-to-understand responses. |
| Adaptability & Fine-tuning | Can be fine-tuned for particular domains or tasks by adjusting model hyperparameters or training data. Offers a flexible framework for various information retrieval needs. | Significant custom engineering is required for each new task. Less adaptable and more time-consuming to implement for different use cases. |
| Efficiency with Large Knowledge Bases | Utilizes techniques like chunking and vector embeddings to navigate large datasets efficiently. Scales well with increasing data volume. | May experience performance bottlenecks when dealing with massive knowledge bases. Retrieval times can become slow as data size grows. |
| Real-time Updates | Can be integrated with real-time data sources for continuous knowledge base updates. Ensures access to the most up-to-date information for response generation. | Updating knowledge bases in traditional approaches can be cumbersome and time-consuming. May lead to outdated information being used for response generation. |
| Knowledge Representation | Employs vector embeddings to capture semantic relationships and nuances within the knowledge base. Enables the model to understand complex relationships between concepts. | Knowledge representation is often shallow and limited to keyword matching or simple relationships. Limits the model’s ability to understand the full context of information. |
| Citation Generation | Can generate citations for the retrieved information, providing transparency about information sources. Improves trust and accountability in AI-generated responses. | Lacks mechanisms for source attribution or citation generation. Makes it difficult to verify the provenance of information used in the response. |
| Performance on Knowledge-intensive Tasks | Achieves state-of-the-art results on tasks requiring deep understanding and information retrieval. Well-suited for complex information retrieval scenarios. | Performance may be lower on knowledge-intensive tasks due to limitations in contextual understanding and information extraction. May not be ideal for tasks requiring in-depth analysis and reasoning over large knowledge bases. |

Interesting Applications of Retrieval Augmented Generation

As discussed earlier, by combining the strengths of Large Language Models with targeted data retrieval, RAG can unlock a new level of accuracy, efficiency, and personalization across various departments. Let’s dive into some of the key applications of RAG and how they’re helping businesses thrive.

1. Data-Driven Decisions for Healthcare

In the healthcare sector, RAG models play a crucial role in diagnosis and treatment planning. Doctors can now access the latest medical research and a patient’s entire medical history simultaneously while formulating a treatment plan. RAG systems achieve this by retrieving relevant medical literature, patient data, and treatment guidelines, providing a holistic view for improved patient care. Companies like IBM Watson Health are utilizing RAG to develop AI tools that analyze patient data and suggest treatment options.

2. Revolutionizing Legal Research

Legal professionals no longer have to spend countless hours sifting through piles of legal documents. RAG systems come to the rescue by retrieving relevant case law, statutes, and legal articles based on a specific case or query. This not only speeds up legal research but also helps improve the accuracy of arguments, so lawyers can save valuable time and concentrate on developing strategies. Law firms are increasingly adopting RAG-powered legal research platforms to streamline their workflows and gain a competitive edge.

3. Boosting Customer Satisfaction

Customer support is a crucial aspect of any business. Chatbots powered by RAG technology can greatly improve the overall customer experience. These chatbots are capable of accessing real-time data from knowledge bases, which enables them to provide accurate answers to customer inquiries, resolve issues efficiently, and even offer personalized solutions. Companies such as Sephora have already adopted RAG-powered chatbots to provide round-the-clock customer support, leading to increased customer satisfaction and lower operational costs.

4. Financial Acuity

Always having access to the latest information is crucial for making informed financial decisions. RAG models can assist businesses in retrieving live market data, news articles, and economic reports. This empowers investors to make data-driven decisions, while financial analysts can leverage RAG to generate insightful reports with greater speed and accuracy. Investment firms are actively exploring RAG to gain an information edge in the ever-changing financial landscape.

5. Academic Research Made Easy

The academic community relies heavily on the steady stream of new research. RAG-based academic search engines play a significant role in assisting researchers by retrieving and summarizing relevant academic papers based on their area of study. This not only saves researchers time but also helps them stay up to date with the latest advancements in their respective fields. Many universities have partnered with AI companies to develop RAG-powered research tools, which are accelerating the pace of scientific discovery.

6. Content Creation with Depth

Compelling content will always be the backbone of successful marketing strategies. RAG models can be of great help to content creators by gathering relevant news articles and historical background information. This allows writers to develop content that is not only factually correct but also has context and depth. Major news organizations are already leveraging RAG to produce targeted content that resonates with their audience and boosts their online presence.

7. Personalized E-commerce Experiences

Today’s customers expect personalized shopping experiences. RAG models can provide tailored product recommendations by retrieving user-specific data and product information. This enables e-commerce businesses to suggest products that match each customer’s unique needs and preferences, which can lead to higher sales and customer loyalty. Large retailers such as Amazon have long relied on recommendation systems to grow their online marketplaces, and RAG offers a way to ground generated recommendations in current product and customer data.

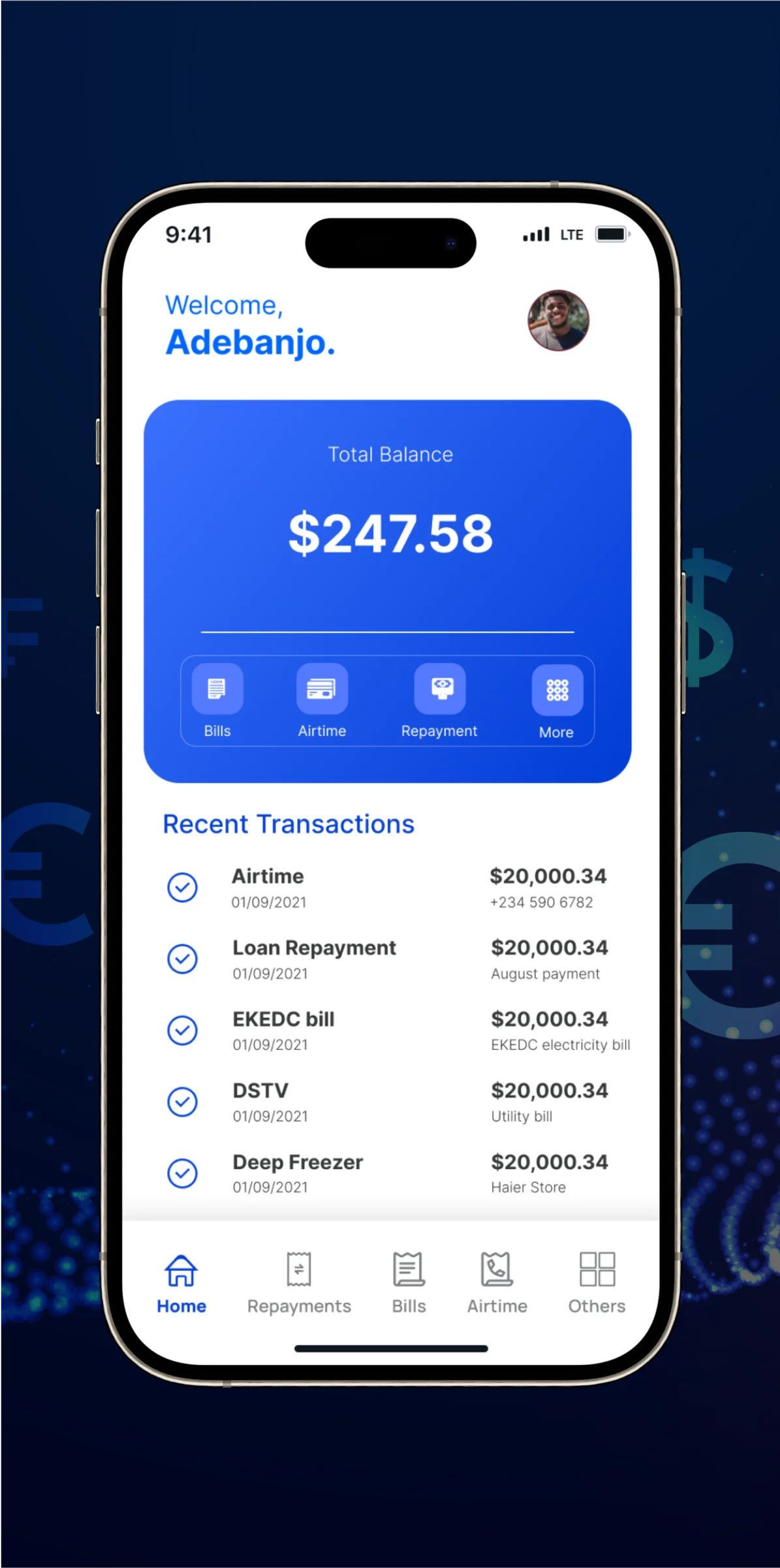

Top 5 RAG Based Platforms

Here are some popular RAG-based platforms:

1. Cohere

This platform provides access to powerful large language models pre-trained on massive datasets of text and code, with built-in support for RAG. Businesses can use Cohere’s RAG capabilities for tasks such as generating creative text in various formats, translating languages, and answering questions, all informed by retrieved factual information.

Cohere likely relies on transformer-based models for both components: encoder models that convert text into embeddings for retrieval, and a generative LLM that writes the output. These encoders excel at capturing semantic relationships within text, enabling the system to retrieve highly relevant information for a wide range of text generation tasks.
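Semantic retrieval of this kind typically works by comparing embedding vectors. The sketch below ranks documents by cosine similarity to a query vector. The three-dimensional vectors are hand-made stand-ins for encoder output (real embeddings have hundreds or thousands of dimensions), and nothing here calls Cohere’s actual API.

```python
import math

# Hand-made stand-ins for encoder embeddings; a real system would compute
# these with an embedding model and store them in a vector index.
DOC_EMBEDDINGS = {
    "refund policy": [0.9, 0.1, 0.0],
    "store hours": [0.0, 0.2, 0.9],
    "return shipping label": [0.8, 0.3, 0.1],
}


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def top_k(query_vec: list[float], k: int = 2) -> list[str]:
    """Return the k document names whose embeddings are closest to the query."""
    ranked = sorted(
        DOC_EMBEDDINGS,
        key=lambda name: cosine(query_vec, DOC_EMBEDDINGS[name]),
        reverse=True,
    )
    return ranked[:k]


# A query vector that, in this toy space, stands for "how do I get my money back?"
print(top_k([0.9, 0.15, 0.0]))  # ['refund policy', 'return shipping label']
```

Because similarity is computed on meaning-bearing vectors rather than exact words, a query about "money back" can match a document titled "refund policy" even with no shared keywords.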

2. Rephrase.ai

This platform focuses on creating marketing copy and website content. Rephrase’s RAG models analyze a company’s existing content and target audience data to generate creative and informative marketing materials. By combining retrieved information with the company’s brand voice, Rephrase ensures content resonates with target customers.

Rephrase’s RAG models likely employ a combination of techniques. The retrieval component might use document similarity scoring or named entity recognition (NER) to identify relevant information in a company’s existing content and target audience data. This information is then fed into the LLM, potentially a pre-trained model like GPT-3, which can be fine-tuned on marketing copy datasets to generate content that matches the brand voice and resonates with target customers.
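Document similarity scoring can be as simple as TF-IDF: query terms that are frequent in a document but rare across the collection contribute the most to its score. A minimal sketch follows. The sample documents are hypothetical, and the smoothed IDF formula is one common variant, not anything Rephrase.ai is known to use.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def tfidf_scores(query: str, docs: list[str]) -> list[float]:
    """Score each document by the summed TF-IDF weight of the query terms it contains."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    # Document frequency: how many documents contain each term at least once.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in set(tokenize(query)):
            if term in tf:
                idf = math.log((1 + n) / (1 + df[term])) + 1  # smoothed IDF
                score += (tf[term] / len(toks)) * idf
        scores.append(score)
    return scores


docs = [
    "Our eco friendly sneakers use recycled materials",
    "Our summer sale ends Friday",
    "Recycled packaging for every order",
]
print(tfidf_scores("recycled sneakers", docs))
```

Here the first document scores highest because it contains both query terms, including the rarer "sneakers"; the second document contains neither and scores zero.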

3. Yoon.ai

Specializing in the legal domain, Yoon.ai’s RAG-based platform assists lawyers with legal research and document review. The platform retrieves relevant legal cases, statutes, and precedents based on a specific query, saving lawyers valuable time and ensuring the accuracy of their legal arguments.

The platform’s retrieval system likely involves specialized techniques for legal documents, potentially leveraging legal ontologies or keyword extraction tailored to legal terminology. This ensures accurate retrieval of relevant cases, statutes, and precedents based on a specific legal query. Yoon.ai might utilize a pre-trained LLM fine-tuned with legal text data to summarize retrieved information or even generate legal arguments informed by the retrieved data.
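Keyword extraction tailored to legal text often starts with citations, since cases that cite the same statute are likely to be relevant to one another. The sketch below assumes a hypothetical regular expression that recognizes only simple U.S. Code citations (for example, `42 U.S.C. § 1983`) and ranks documents by how many cited sections they share with the query. It illustrates the idea and is not a description of Yoon.ai’s system.

```python
import re

# Hypothetical pattern that only recognizes simple U.S. Code citations such as
# "42 U.S.C. § 1983"; real legal citation formats are far more varied.
USC_CITATION = re.compile(r"\b(\d+)\s+U\.S\.C\.\s+§\s*(\d+[a-z]?)")


def extract_citations(text: str) -> set[tuple[int, str]]:
    """Return (title, section) pairs for every U.S. Code citation in the text."""
    return {(int(title), section) for title, section in USC_CITATION.findall(text)}


def retrieve_by_citation(query: str, documents: dict[str, str]) -> list[str]:
    """Return IDs of documents sharing cited statutes with the query, most shared first."""
    wanted = extract_citations(query)
    hits = []
    for doc_id, text in documents.items():
        shared = len(wanted & extract_citations(text))
        if shared:
            hits.append((shared, doc_id))
    # Sort by number of shared citations (descending), then by ID for stable output.
    hits.sort(key=lambda h: (-h[0], h[1]))
    return [doc_id for _, doc_id in hits]


cases = {
    "case_a": "Plaintiff sued under 42 U.S.C. § 1983 and sought fees under 42 U.S.C. § 1988.",
    "case_b": "The claim arose under 42 U.S.C. § 1983.",
    "case_c": "A dispute over fair use under 17 U.S.C. § 107.",
}
query = "Cases on 42 U.S.C. § 1983 claims with fee awards under 42 U.S.C. § 1988"
print(retrieve_by_citation(query, cases))  # ['case_a', 'case_b']
```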

4. Jarvis (now Jasper)

This platform offers various AI-powered tools, including a RAG-based content creation option. Businesses can leverage Jarvis to generate different creative text formats like blog posts, social media captions, and marketing copy. Jarvis’ RAG models access and integrate relevant information to ensure the content is not only creative but also factually grounded.

Similar to Rephrase.ai, Jarvis’ RAG model likely utilizes a combination of retrieval techniques and an LLM. The retrieval component might focus on relevant information based on the chosen content format (e.g., blog post, social media caption) and target audience data. The LLM, potentially a model like BART, could be fine-tuned on various creative writing datasets to generate content that is not only informative but also stylistically appropriate for the chosen format.

5. Writesonic

This platform caters to e-commerce businesses by offering RAG-powered product descriptions and marketing copy generation. Writesonic’s RAG models analyze a product’s features and target audience data to craft compelling descriptions that are both informative and persuasive. This can lead to higher sales and conversion rates.

Writesonic’s RAG model likely incorporates techniques like entity recognition to identify product features from data sources and utilizes audience data for targeting. This retrieved information is then fed into the LLM, which could be a model fine-tuned on product descriptions and marketing copy, allowing Writesonic to generate persuasive and informative content that drives sales and conversions.
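A very simple form of entity recognition for product data is rule-based: match numeric attributes with known units, then pass only those verified features to the LLM. The sketch below assumes a hypothetical regex and unit list and a made-up spec sheet; it is not Writesonic’s pipeline, and a production system would more likely use a trained NER model or structured catalog fields.

```python
import re

# Hypothetical rule-based extractor for numeric product attributes such as
# "40 hours", "128 GB" or "5000 mAh".
FEATURE_PATTERN = re.compile(r"(\d+(?:\.\d+)?)\s*(hours|GB|mAh|inch)\b", re.IGNORECASE)


def extract_features(spec_text: str) -> list[str]:
    """Return normalized 'value unit' strings for every attribute the pattern finds."""
    return [f"{value} {unit}" for value, unit in FEATURE_PATTERN.findall(spec_text)]


def build_description_prompt(product_name: str, spec_text: str, audience: str) -> str:
    """Augment a copywriting request with the extracted, verifiable features."""
    bullets = "\n".join(f"- {feature}" for feature in extract_features(spec_text))
    return (
        f"Write a product description for {product_name} aimed at {audience}.\n"
        f"Mention only these verified features:\n{bullets}"
    )


spec = "Wireless earbuds with 40 hours of battery life and 6 mm drivers; the case holds 500mAh."
print(build_description_prompt("Acme Buds", spec, "daily commuters"))
```

Restricting the prompt to extracted features is one way to keep persuasive copy from inventing specifications; note that "6 mm" is skipped here simply because "mm" is not in the assumed unit list.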

Conclusion

Retrieval Augmented Generation has the potential to revolutionize business growth across industries. By bridging the gap between powerful LLMs and targeted data retrieval, RAG empowers businesses with unprecedented levels of efficiency, accuracy, and personalization. From streamlining healthcare diagnostics to personalizing e-commerce experiences, its applications are vast and continually evolving.

By adopting RAG technology, businesses can take advantage of data-driven decision-making, improve customer experiences, and speed up innovation. This not only helps them succeed in the current market but also provides them with the necessary tools to navigate the constantly evolving business landscape of the future.

Looking to Develop a RAG Model?

Idea Usher can help. With over 1000 hours of experience crafting Retrieval Augmented Generation models, we can unlock the hidden potential within your information. Our custom RAG models will empower you to make smarter decisions, personalize experiences, and drive significant growth for your business. Contact Idea Usher today and see the future of AI-powered innovation.

FAQs

Q1: What is the primary objective of the RAG model?

A1: The core objective of a Retrieval Augmented Generation (RAG) model is to bridge the gap between a large language model’s (LLM) capabilities and real-world data. RAG achieves this by strategically retrieving relevant information from external sources to inform the LLM’s outputs. This empowers businesses with AI-powered solutions that are not only creative but also grounded in factual accuracy, ultimately enhancing decision-making and driving growth.

Q2: What are the best practices for RAG?

A2: Optimizing RAG models requires a focus on data and fine-tuning. Businesses must prioritize high-quality, well-organized data sources to ensure that retrieved information is accurate and relevant. Additionally, continuous evaluation and refinement are crucial. This includes experimenting with retrieval strategies, tailoring prompts, and monitoring outputs to optimize RAG’s performance for specific business goals, maximizing its impact on growth.
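Continuous evaluation is easier when retrieval quality is measured with a number. Two common metrics are hit rate at k and mean reciprocal rank (MRR). The sketch below computes both from a small, hypothetical set of queries with hand-labeled relevant documents; comparing these numbers across retrieval strategies is one way to run the experiments described above.

```python
def hit_rate_at_k(ranked: dict[str, list[str]], relevant: dict[str, set[str]], k: int) -> float:
    """Share of queries whose top-k results contain at least one relevant document."""
    hits = [bool(set(ranked[q][:k]) & relevant[q]) for q in relevant]
    return sum(hits) / len(hits)


def mean_reciprocal_rank(ranked: dict[str, list[str]], relevant: dict[str, set[str]]) -> float:
    """Average of 1/rank of the first relevant result (0 when none is retrieved)."""
    total = 0.0
    for q, relevant_docs in relevant.items():
        for rank, doc_id in enumerate(ranked[q], start=1):
            if doc_id in relevant_docs:
                total += 1 / rank
                break
    return total / len(relevant)


# Hypothetical retriever output and hand-labeled relevance judgments.
ranked = {"q1": ["d3", "d1", "d2"], "q2": ["d5", "d4"]}
relevant = {"q1": {"d1"}, "q2": {"d9"}}

print(hit_rate_at_k(ranked, relevant, k=1))  # 0.0
print(hit_rate_at_k(ranked, relevant, k=2))  # 0.5
print(mean_reciprocal_rank(ranked, relevant))  # 0.25
```

Running the same labeled queries against two retrieval strategies (for example, keyword search versus embeddings) and comparing these scores makes refinement decisions less of a guess.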

Q3: What are the limitations of the RAG approach?

A3: While Retrieval Augmented Generation (RAG) offers a powerful toolkit, it does have limitations. RAG’s accuracy relies heavily on the quality and organization of the data it retrieves. Additionally, current RAG models lack robust reasoning capabilities, potentially leading to misinterpretations of retrieved information. Furthermore, ensuring retrieved data is unbiased and up-to-date can be challenging. However, as RAG technology evolves, these limitations are being actively addressed, paving the way for its continued impact on business growth.
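One lightweight guard against misinterpreted or invented content is to flag answer sentences that share few words with the retrieved context. The sketch below is a crude lexical heuristic with an assumed stopword list and a hypothetical 50% overlap threshold. It cannot catch paraphrased errors, and production systems often use a second model to judge groundedness instead.

```python
import re

# Assumed, deliberately small stopword list; real systems use larger ones.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "and", "in", "for", "on", "with", "it"}


def content_words(text: str) -> set[str]:
    """Lowercased words in the text, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def unsupported_sentences(answer: str, context: str, threshold: float = 0.5) -> list[str]:
    """Flag answer sentences whose content words mostly do not appear in the context."""
    context_words = content_words(context)
    flagged = []
    # Naive sentence split on ., ! or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = content_words(sentence)
        if words and len(words & context_words) / len(words) < threshold:
            flagged.append(sentence)
    return flagged


context = "Standard shipping takes 3 to 5 business days."
answer = "Standard shipping takes 3 to 5 business days. Express orders arrive overnight for free."
print(unsupported_sentences(answer, context))  # ['Express orders arrive overnight for free.']
```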

Q4: What is the RAG model?

A4: The RAG model, or the Retrieval-Augmented Generation model, is a game-changer for businesses. It acts as a bridge between powerful language models and real-world data. RAG retrieves relevant information from reliable sources, like research papers or customer data, and feeds it to the language model. This empowers the model to generate outputs that are not only creative but also grounded in facts, leading to improved decision-making, personalized experiences, and, ultimately, business growth.