- What Is Generative AI?

- How Generative Modeling Works?

- Generative AI Stack: Overview

- Importance Of A Comprehensive Tech Stack In Building Effective Generative AI Systems

- Generative AI Tech Stack: Detailed Overview

- Selecting The Right Generative AI Tech Stack: Key Considerations

- Benefit For Businesses From Robust Generative AI Tech Stack

- Applications Of Generative AI In Real-world Scenarios

- Potential Risks And Challenges Of Generative AI

- Conclusion

- FAQ

Generative AI, a revolutionary force in the world of artificial intelligence, has firmly established itself in our digital landscape. Its influence is noticeable, from the conversational abilities of ChatGPT to the captivating avatars appearing on our social media timelines. This transformative technology has driven content creation into uncharted territories, sparking a wave of innovation and expansion across industries.

The remarkable financial investments, with over $2 billion invested in generative AI in 2022, underscore its growing prominence. The valuation of OpenAI at $29 billion, as reported by The Wall Street Journal, serves as evidence of the strong interest corporations and investors have in this AI frontier. Generative AI is no longer a niche but a powerful tool reshaping the business landscape.

With applications ranging from marketing and customer service to education, generative AI is becoming widespread. It creates marketing materials, formulates persuasive pitches, devises intricate advertising campaigns, and more, all with a high degree of customization. Notable platforms like OpenAI’s ChatGPT and DALL-E, DeepMind’s AlphaCode, GoogleLab, Jasper, and MidJourney are driving this transformation.

Generative AI finds utility in various domains, from business solutions to digital healthcare and software engineering. Its evolution knows no bounds, promising countless possibilities for self-operating enterprises. In this article, we explore how generative AI works, walk through its technology stack, and offer a perspective on this groundbreaking technology.

What Is Generative AI?

Generative AI, also known as Generative Artificial Intelligence, is a remarkable technology that has garnered significant attention in recent years due to its transformative capabilities.

At its core, Generative AI is a subset of artificial intelligence (AI) that specializes in creating diverse forms of content, including text, images, audio, and synthetic data. You can consider it as an innovative AI artist or composer that can learn from existing examples and then generate entirely new and realistic content that closely resembles what it has learned. However, it doesn’t merely replicate the past; instead, it combines and reinterprets learned elements to create something fresh and innovative.

The potential applications of Generative AI are extensive and span a multitude of fields. It can be employed to create art, generate code, compose music, craft marketing content, and even aid in drug discovery. However, it’s essential to acknowledge that while Generative AI has made remarkable progress, it still faces challenges. Issues related to accuracy, bias, and occasional unexpected outputs are areas of ongoing research and refinement.

Types Of Generative AI Models

Generative AI models, including Generative Adversarial Networks (GANs), Transformers, Variational Autoencoders (VAEs), and Multimodal Models, represent the forefront of artificial intelligence innovation. Each of these models brings a unique set of capabilities to the table.

1. Generative Adversarial Networks (GANs)

Generative Adversarial Networks, or GANs, represent a pivotal breakthrough in Generative AI. They consist of two critical components: a generator and a discriminator. These two parts work in tandem but against each other in a fascinating dance.

The generator’s role is to create synthetic data, whether it’s images, text, or any other form, that closely mimics real-world data. On the other hand, the discriminator’s job is to scrutinize this generated data and distinguish it from authentic data. As they engage in this adversarial process, both components improve over time. The generator becomes more adept at creating convincing data, while the discriminator becomes better at detecting fakes.

This interplay results in GANs being exceptionally adept at creating content that closely resembles real-world data. For example, they have been instrumental in generating lifelike images of both people and objects. GANs have also found applications in art generation, where they can produce artworks that are virtually indistinguishable from those created by human artists.
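The adversarial process described above can be made concrete with the two loss functions involved. The following is a minimal sketch in plain Python, assuming the discriminator outputs a probability that a sample is real; the function names are illustrative and do not come from any particular library, and the generator loss shown is the commonly used non-saturating variant.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: discriminator probabilities (0 < p < 1) on real samples.
    d_fake: discriminator probabilities on generated samples.
    The loss is low when real samples score near 1 and fakes near 0.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """Non-saturating generator loss.

    The loss is low when the discriminator rates generated samples as real.
    """
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Early in training the discriminator easily spots fakes (low scores).
early = generator_loss([0.1, 0.2])
# Later the generator fools the discriminator about half the time.
late = generator_loss([0.5, 0.5])
print(early > late)
```

In a real training loop, each network's weights are updated in turn to reduce its own loss, which is what drives both to improve.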

2. Transformers

Transformers are another significant player in the Generative AI landscape. They are known for their prowess in handling sequential data, such as text and speech. These models have ushered in a new era of human-machine interaction and performance improvement.

Unlike GANs, transformers rely on a single neural network. Their architectural flexibility and scalability make them adaptable to a wide array of applications. One of their key features is their ability to capture long-range dependencies in data. This means they can understand not only individual words but also the intricate relationships between them, making them highly versatile.

Transformers have proven invaluable in machine translation, text summarization, question answering, and various other text-related tasks. They have transformed the way we process and generate sequential data.

At their core, transformers use an encoder/decoder architecture. The encoder extracts the salient features of an input sentence by passing it through a series of encoder blocks, and the output of the final block becomes the input to the decoder.

On the decoder side, a stack of decoder blocks each receives the encoded features from the encoder. Together they produce an output sentence, as in translation, although the same approach extends beyond language tasks.

In generative AI, transformers combine context and word order to turn natural-language requests into concrete outputs, whether text or images, guided by the details of the user’s description.
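The ability to capture long-range dependencies comes from the attention operation used inside transformer blocks, which the text above does not spell out. The sketch below implements standard scaled dot-product attention in plain Python for illustration; the toy vectors are made up, and a real model would use learned projections and a tensor library.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Returns one output vector per query, plus the attention weights.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, regardless of position.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy tokens; the first and third have matching keys.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
outputs, weights = attention([[4.0, 0.0]], keys, values)
print(weights[0])
```

Because every query is compared with every key, the first token attends to the third as strongly as to itself, even though another token sits between them.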

3. Variational Autoencoders (VAEs)

Variational Autoencoders, or VAEs, take a unique approach to Generative AI. They consist of two interconnected networks: an encoder and a decoder. The encoder compresses input data into a simplified format known as the latent space. This latent representation is then manipulated to generate new data that resembles the original but isn’t identical.

For example, suppose you are training a VAE to generate human faces. Over time, it learns to distill the essential characteristics of faces from photos. Then, it can take these characteristics and generate new faces, each with its own unique attributes.

VAEs are versatile in applications such as data generation, data compression, feature learning, and anomaly detection. They excel in scenarios where data needs to be transformed into a more concise yet expressive format.
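To illustrate how a VAE samples from its latent space, here is a small sketch of two pieces found in most VAE implementations: the reparameterization step and the KL regularization term. It assumes the encoder has already produced a mean and log-variance per latent dimension; the numbers below are invented for the example.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps, with eps drawn from a standard normal."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, sigma^2) and N(0, 1), summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))

rng = random.Random(0)          # fixed seed so the sketch is repeatable
mu = [0.5, -1.0]                # hypothetical encoder output: means
log_var = [0.0, -2.0]           # hypothetical encoder output: log-variances
z = reparameterize(mu, log_var, rng)
kl = kl_to_standard_normal(mu, log_var)
print(z, kl)
```

The decoder would then map `z` back to data space. The KL term keeps the latent space close to a standard normal, which is what makes it possible to draw fresh latent vectors and decode them into new samples.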

4. Multimodal Models

Multimodal models stand out for their ability to understand and process multiple types of data simultaneously. This includes text, images, audio, and more. This capability enables them to create more sophisticated outputs by integrating information from different modalities.

For instance, a multimodal model can generate an image based on a text description or vice versa. This cross-modal functionality opens up a world of possibilities, from image translation to text-to-speech conversion.

These models, such as OpenAI’s DALL-E 2 and GPT-4, are at the forefront of Generative AI. They are pioneers in generating content that spans various media types, from text and graphics to audio and video. However, they also face challenges in handling the complexity of multimodal interactions and interpretations.

| Feature | GANs | Transformers | Variational autoencoders | Multimodal models |
| --- | --- | --- | --- | --- |
| Architecture | Two neural networks: generator and discriminator | Single neural network | Two neural networks: encoder and decoder | Single neural network |
| Data type | Images, text, audio, video | Text | Images, text, audio, video | Multiple data modalities |
| Strength | Good at generating realistic data | Good at understanding and generating sequential data | Good at learning latent representations of data | Good at learning from multiple data modalities |
| Weakness | Can be unstable to train | Can be computationally expensive to train | Can be computationally expensive to train | Can be difficult to train on large datasets |
| Applications | Image generation, text generation, audio generation, video generation, data augmentation, style transfer | Machine translation, text summarization, question answering, drug discovery | Data generation, data compression, feature learning, anomaly detection | Image translation, text-to-speech, machine translation |

How Generative Modeling Works?

Generative modeling, a subset of unsupervised machine learning, is a fascinating field where AI models learn to identify patterns in input data and use this understanding to generate new data that mirrors the original dataset.

Generative AI models are neural networks trained to recognize patterns and structures in existing data. These models can utilize various learning approaches, including unsupervised or semi-supervised learning, enabling organizations to leverage large volumes of unlabeled data to build foundational models.

A wide array of generative models are available, each with its own strengths. By combining these strengths, we can create even more potent models. For instance, denoising diffusion probabilistic models (DDPMs), or diffusion models, use a two-step process during training to determine vectors in latent space. The first step, forward diffusion, gradually introduces random noise to the training data. The second step, reverse diffusion, reverses this noise to reconstruct the data samples.
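The forward diffusion step can be sketched in a few lines. The example below uses the standard closed form for jumping straight to step t, x_t = sqrt(a_bar_t) * x_0 + sqrt(1 - a_bar_t) * noise. The linear noise schedule and its start and end values are assumptions chosen for illustration, and `recover_x0` is a hypothetical helper that shows what reverse diffusion achieves when the noise is known exactly.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise added per step, growing linearly (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """a_bar_t: fraction of the original signal variance left after step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def forward_diffuse(x0, t, alpha_bars, rng):
    """Sample the noisy x_t directly from the clean sample x0."""
    a_bar = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * n
          for x, n in zip(x0, noise)]
    return xt, noise

def recover_x0(xt, noise, a_bar):
    """Invert the forward step given the exact noise that was added."""
    return [(x - math.sqrt(1.0 - a_bar) * n) / math.sqrt(a_bar)
            for x, n in zip(xt, noise)]

alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
sample = [1.0, -2.0, 0.5]
noisy, added_noise = forward_diffuse(sample, 500, alpha_bars, random.Random(0))
restored = recover_x0(noisy, added_noise, alpha_bars[500])
print(restored)
```

During training, a neural network learns to predict the added noise from the noisy sample alone; at generation time that prediction stands in for `added_noise`, and the reversal is applied step by step starting from pure noise.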

Generative modeling operates by learning from existing data and leveraging that knowledge to generate new, similar data. It employs neural networks and various learning approaches to discern patterns and structures within the data, which are then used to create fresh content.

Generative AI Stack: Overview

Generative AI is rapidly gaining ground across industries and changing how teams build new solutions. Before looking at the specific components of a foundational tech stack, it helps to understand why that foundation matters.

A. The Significance of a Foundational (Generic) Tech Stack

A foundational tech stack matters in Generative AI development for the following reasons:

1. Foundation Of Knowledge

At its core, the foundational tech stack provides a bedrock of knowledge about the fundamental components, frameworks, and technologies that underpin Generative AI. It acts as a comprehensive knowledge base, setting the stage for more specialized tech stacks.

2. Guiding Development Decisions

Developers embarking on Generative AI projects can make informed decisions by delving into the foundational tech stack. This guidance aids in selecting the right mix of technologies and tools tailored to different stages of development. It ensures alignment with project objectives and goals.

3. Interoperability And Integration

Proficiency in the foundational tech stack streamlines the process of interoperability and integration within the Generative AI system. It helps identify key integration points with other systems, fostering seamless collaboration among various technologies and tools.

4. Flexibility And Adaptability

A firm grasp of the foundational tech stack empowers developers with the flexibility to adapt and transition between specific tech stacks effectively. It allows them to understand the underlying principles that transcend individual implementations, enabling more informed tool selection.

5. Future-Proofing

Since Generative AI is evolving every day, being prepared for the future is paramount. A strong foundation in the foundational tech stack equips developers to stay updated with emerging technologies and industry trends. This preparedness makes it easier to adopt new tools and best practices as Generative AI continues to evolve.

6. Basis For Specialization

Once you’ve comprehended the foundational stack, you can delve deeper into specific technologies, tools, and techniques tailored to your unique Generative AI stack. This specialization leads to a more detailed understanding of how these tools can be applied effectively within specific contexts.

By understanding the foundational tech stack, you establish a solid footing for your Generative AI journey. It not only enhances your knowledge but also guides your development decisions, fosters integration, and ensures adaptability in an ever-changing field. With the right foundation, you’re well-equipped to navigate the exciting possibilities of Generative AI.

B. Components Of The Foundational Tech Stack

Here is an intricate exploration of the core components within the foundational tech stack for Generative AI development:

1. Application Frameworks: Foundation Of The Generative AI Stack

Application frameworks serve as the foundational layer of the tech stack, providing a structured programming model that rapidly integrates innovations. Frameworks such as LangChain, Fixie, Microsoft’s Semantic Kernel, and Google Cloud’s Vertex AI empower developers to create applications capable of autonomously generating content, developing semantic search systems, and enabling AI agent task performance.
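To give a feel for the structured programming model such frameworks provide, here is a deliberately tiny sketch of the underlying idea: composing a prompt template, a model call, and an output parser into one pipeline. It does not use or imitate the API of LangChain or any other framework named above, and `fake_model` is a hypothetical stand-in for a real foundation model call.

```python
from typing import Callable, List

def make_chain(*steps: Callable) -> Callable:
    """Compose steps so each one receives the previous step's output."""
    def run(value):
        for step in steps:
            value = step(value)
        return value
    return run

def build_prompt(topic: str) -> str:
    """Prompt template step."""
    return f"List three keywords about: {topic}"

def fake_model(prompt: str) -> str:
    """Hypothetical model: returns a canned, comma-separated answer."""
    topic = prompt.split(": ", 1)[1]
    return f"{topic} basics, {topic} tools, {topic} risks"

def parse_keywords(text: str) -> List[str]:
    """Output parser step: split the model's text into a clean list."""
    return [part.strip() for part in text.split(",")]

keyword_chain = make_chain(build_prompt, fake_model, parse_keywords)
result = keyword_chain("vector search")
print(result)
```

Real frameworks add much more on top of this pattern, such as memory, tool calling, and retries, but the composition of small, swappable steps is the common core.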

2. Models: Generative AI’s Cognitive Core

At the core of the Generative AI stack reside the Foundation Models (FMs), aptly referred to as the ‘brain’ of the system. These models, which can be proprietary or open-source (developed by organizations like OpenAI, Anthropic, or Cohere), enable human-like reasoning. Developers can also train their own models, or combine multiple FMs to optimize an application. Models can be hosted on servers or deployed on edge devices and in browsers; choosing the right location can enhance security, reduce latency, and optimize costs.

3. Data: Fueling Generative AI with Knowledge

Large Language Models (LLMs) reason over the data they have been trained on. To make their outputs more precise, developers operationalize their own data. Data loaders ingest structured and unstructured data, and vector databases store and retrieve data vectors efficiently. Techniques like retrieval-augmented generation then use the retrieved data to personalize model outputs.
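The retrieval step behind retrieval-augmented generation can be sketched without any external service. The example below is a toy, assuming a bag-of-words "embedding" and an in-memory store; `TinyVectorStore` and `build_rag_prompt` are hypothetical names, and a production system would use a learned embedding model and one of the vector databases discussed here.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: lowercase word counts (a real system uses a model)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

class TinyVectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self):
        self.items = []

    def add(self, text):
        self.items.append((text, embed(text)))

    def search(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]),
                        reverse=True)
        return [text for text, _ in ranked[:k]]

def build_rag_prompt(question, store, k=1):
    """Prepend the most relevant stored text to the user's question."""
    context = "\n".join(store.search(question, k))
    return f"Context:\n{context}\n\nQuestion: {question}"

store = TinyVectorStore()
store.add("Refunds are issued within 14 days of purchase")
store.add("Support is available on weekdays from 9 to 5")
prompt = build_rag_prompt("When are refunds issued", store)
print(prompt)
```

The resulting prompt would be sent to the model, which can then answer from the supplied context rather than from its training data alone.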

4. The Evaluation Platform: Measuring and Monitoring Performance

Balancing model performance, cost, and latency is a critical challenge in Generative AI. Developers employ various evaluation tools to identify optimal prompts, track online and offline experimentation, and monitor real-time model performance. Prompt engineering tools, experiment trackers, and observability tools such as WhyLabs’ LangKit, along with no-code/low-code tooling, are indispensable in this phase.
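As a rough illustration of that balance, the sketch below scores two prompt templates on accuracy, estimated cost, and average latency. Everything in it is assumed for the example: `stub_model` is a hypothetical stand-in for a real model call, and the per-word price is an invented figure, since real providers bill by tokens at their own rates.

```python
import time

def evaluate_prompt(template, model, cases, price_per_word=0.0001):
    """Run every test case through the model and summarize the results."""
    correct, words = 0, 0
    start = time.perf_counter()
    for question, expected in cases:
        prompt = template.format(question=question)
        answer = model(prompt)
        # Count prompt and answer words as a crude proxy for billed tokens.
        words += len(prompt.split()) + len(answer.split())
        correct += int(expected.lower() in answer.lower())
    elapsed = time.perf_counter() - start
    return {
        "accuracy": correct / len(cases),
        "est_cost": words * price_per_word,
        "avg_latency_s": elapsed / len(cases),
    }

def stub_model(prompt):
    """Hypothetical model that knows two capitals."""
    answers = {"France": "Paris", "Japan": "Tokyo"}
    for country, capital in answers.items():
        if country in prompt:
            return capital
    return "unknown"

cases = [("capital of France?", "Paris"), ("capital of Japan?", "Tokyo")]
short = evaluate_prompt("{question}", stub_model, cases)
verbose = evaluate_prompt(
    "You are a helpful geography assistant. Answer briefly: {question}",
    stub_model, cases)
print(short["accuracy"], verbose["accuracy"])
print(short["est_cost"] < verbose["est_cost"])
```

Here both templates reach the same accuracy, so the shorter one is the cheaper choice; with a real model the longer prompt might earn its cost through better answers, which is exactly what such a harness helps decide.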

5. Deployment: Transitioning to Production

In the deployment phase, developers aim to move their applications into production. They can choose self-hosting or third-party services for deployment. Tools like Fixie facilitate seamless development, sharing, and deployment of AI applications.

The Generative AI Tech Stack:

| Layer | Examples |
| --- | --- |
| Application | Content generation, Semantic search, Agents |
| Application framework | griptape, Vertex AI, Semantic Kernel, FIXIE, LangChain |
| Deployment | gradio, FIXIE, Steamship, D.I.Y |
| Model: Foundation model | OpenAI, PaLM2, Anthropic, stability.ai, EleutherAI |
| Model: Hosting | aws Bedrock, Vertex AI, Replicate, Modal, GooseAI, Hugging Face |
| Model: Training | mosaic, Modular, cerebras, TOGETHER |
| Data: Data loader | Unstructured.IO, Databricks, Airbyte, AWS, Azure, Notion |
| Data: Vector database | Pinecone, chroma, pgvector, Redis, momento |
| Data: Context window | LangChain, llamaindex |
| Evaluation: Prompt engineering | PromptLayer, Aim, scale, Humanloop |
| Evaluation: Experimentation | comet, STATSIG, mlflow, Clear ML, Weights & Biases |
| Evaluation: Observability | arize, DATADOG, OBSERVE, WhyLabs, Helicone, graphsignal |

The Generative AI Tech Stack embodies a comprehensive ecosystem supporting the development, testing, and deployment of AI applications. It reshapes how we synthesize information, ushering in transformative possibilities across industries. Understanding the intricacies of this tech stack equips developers to navigate the evolving landscape of Generative AI effectively and harness its boundless potential.

Importance Of A Comprehensive Tech Stack In Building Effective Generative AI Systems

Building a generative AI system that achieves excellence and innovation requires more than just algorithms and data. It requires a comprehensive tech stack: a deliberate combination of technologies, frameworks, and tools. This section explains the role a robust tech stack plays in building effective generative AI systems and breaks down its essential components.

1. A Foundation Of Knowledge And Informed Decisions

Understanding the intricate components of the generic generative AI tech stack serves as the bedrock for making well-informed decisions. Developers gain a comprehensive grasp of the essential elements, frameworks, and technologies that constitute the generative AI landscape. This profound understanding becomes instrumental in judiciously selecting specific tools and technologies throughout the various phases of generative AI development.

The tech stack guides development decisions by offering insights into the technologies and tools best suited for different stages of generative AI development. With this knowledge, developers can tailor their approach to maximize precision, scalability, and trustworthiness. The tech stack becomes a strategic asset, enabling rapid development and deployment of generative AI applications.

2. Precision, Scalability, And Trustworthiness

A thoughtfully curated tech stack significantly elevates the precision, scalability, and reliability of generative AI systems. These enhancements expedite the journey from development to deployment of generative AI applications. When the right tools are seamlessly integrated, the possibilities for achieving precision and scalability within generative AI become boundless.

Different tools and technologies within the stack cater to various aspects of AI system development. For instance, machine learning libraries like TensorFlow and PyTorch are instrumental in model development, while Docker facilitates efficient deployment. GPU-accelerated computing with CUDA can dramatically accelerate training times, optimizing efficiency. Moreover, the tech stack ensures interoperability among different components of an AI system, facilitating effective communication.

Maintenance and debugging tools, including Git for version control, aid in codebase upkeep, change tracking, and issue resolution. In an era of heightened data privacy and security concerns, the tech stack also includes technologies that protect sensitive information and ensure compliance with regulations like GDPR.

In short, each of these tools contributes to the precision, scalability, and reliability of the finished system, and together they shorten the path from development to deployment.

3. Breakdown Of The Key Components In Generative AI Tech Stack

3.1. Machine Learning Frameworks: The Pillars

In the generative AI tech stack lie the pillars upon which AI models are constructed. These are the machine learning frameworks like TensorFlow, PyTorch, and Keras. These frameworks serve as the launchpad for creativity, equipped with pre-built models tailored for diverse tasks such as image generation, text synthesis, and music composition. What sets them apart is their flexibility, allowing developers to sculpt and fine-tune models according to their vision.

3.2. Programming Languages: The Linguistic Bridge

Programming languages serve as the linguistic bridge between human ingenuity and machine intelligence. Python emerges as the widely used language in this context due to its blend of simplicity, readability, and extensive library support. It strikes a balance between ease of use and model performance. While Python takes the lead, languages like R and Julia find their niche, offering specialized capabilities in specific applications within generative AI.

3.3. Cloud Infrastructure: The Backbone

In generative AI, computational demands can be monumental. This is where the cloud infrastructure steps in as the robust foundation. Providers like AWS, GCP, and Azure provide essential support to developers, offering the scalability and flexibility required for deploying generative AI systems. With their array of services, they empower the handling of massive datasets and the execution of resource-intensive computations.

3.4. Data Processing Tools

Before data contributes to AI models, it undergoes a transformative journey. Data processing tools like Apache Spark and Apache Hadoop serve as the wizards of this process. They efficiently preprocess, cleanse, and adapt data into a format suitable for training models. These tools do not merely manage large datasets; they also reveal the hidden patterns within, enriching our understanding of the data intricacies.

| Component | Technologies |
| --- | --- |
| Machine learning frameworks | TensorFlow, PyTorch, Keras |
| Programming languages | Python, Julia, R |
| Cloud services | AWS, GCP, Azure |
| Data preprocessing | NumPy, Pandas, OpenCV |
| Generative models | GANs, VAEs, Autoencoders, LSTMs |
| Visualization | Matplotlib, Seaborn, Plotly |
| Deployment | Flask, Docker, Kubernetes |
| Other tools | Jupyter Notebook, Anaconda, Git |

Generative AI Tech Stack: Detailed Overview

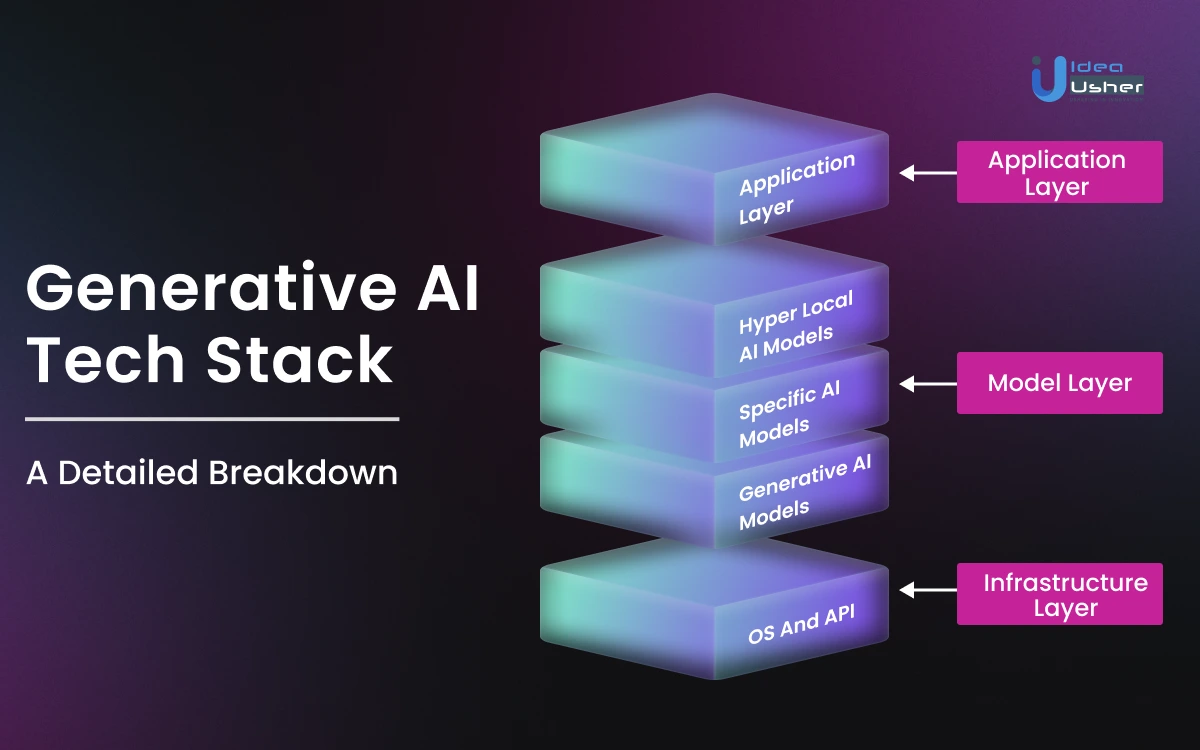

The generative AI technology stack consists of three essential layers that work harmoniously to drive innovation. At the surface lies the Applications Layer, where user-facing apps and third-party APIs seamlessly integrate generative AI models, enriching user interactions and enhancing products. Delving deeper, we encounter the Model Layer, housing both proprietary APIs and open-source checkpoints, providing the computational power behind AI-driven solutions. This layer, however, demands a robust hosting solution for effective deployment. Beneath it all, the Infrastructure Layer takes center stage, embracing cloud platforms and hardware manufacturers that efficiently manage the complex training and inference workloads essential for generative AI models, ensuring scalability and optimal performance. Together, these layers form a cohesive ecosystem powering the evolution of generative AI and its transformative impact on technology and user experiences.

1. Applications Layer: Fusing Human And AI Interaction

The Applications Layer is the most visible and user-centric aspect of the Generative AI Tech Stack, where AI meets practical use cases. It comprises:

1.1. End-To-End Applications With Proprietary Models

These applications are a powerhouse of AI innovation. They encompass the entire generative AI pipeline, from collecting data to training models and deploying them in production. These proprietary generative AI models are often developed by companies with specialized domain expertise. For instance, in computer vision, these models can generate lifelike images or videos with a high degree of realism. They also find applications in natural language processing, automating customer service, and personalizing recommendations.

1.2. Applications Without Proprietary Models

On the other hand, some applications leverage open-source generative AI frameworks like TensorFlow, PyTorch, or Keras. Developers use these frameworks to create custom generative AI models tailored to specific needs. These applications are widespread in both business-to-business (B2B) and business-to-consumer (B2C) domains. Developers can access extensive resources and support communities, fostering innovation and enabling highly specialized outputs. These tools democratize AI, making it accessible to a broader audience.

2. Model Layer: The Engine Of AI Creativity

The Model Layer forms the heart of the Generative AI Tech Stack, where AI models are developed, trained, and fine-tuned for various tasks. It consists of:

2.1. General AI Models

General AI Models are notable for their versatility. Unlike narrow AI models designed for a single task, models such as GPT-3, DALL-E 2, Whisper, and Stable Diffusion are trained on broad data and can be adapted to many tasks. Between them they handle a wide range of outputs, including text, images, voice, and games. They are designed to be easy to use: some, such as Whisper and Stable Diffusion, have openly released weights, while others, such as GPT-3 and DALL-E 2, are offered through proprietary APIs. They have the potential to automate tasks across industries, enhance productivity, and improve predictions, especially in fields like healthcare, where they could help analyze large volumes of patient data to support diagnoses and treatment recommendations.

2.2. Specialized AI Models

Specialized AI Models, also known as domain-specific models, excel in specific tasks. They are trained on highly specific and relevant data, enabling them to perform with greater nuance and precision than general AI models. For example, AI models trained on e-commerce product images understand the shades of effective product photography, considering factors like lighting, composition, and product placement. In songwriting, these models can generate lyrics tailored to specific genres or artists, capturing the stylistic variation of each. These specialized models empower businesses to achieve tailored, high-quality outputs in domains ranging from e-commerce to creative arts.

2.3. Hyperlocal AI Models

At the top of generative technology are Hyperlocal AI Models. These models leverage proprietary data to achieve unparalleled accuracy and specificity in their outputs. For example, they can generate scientific articles adhering to the style of specific academic journals or create interior design models aligned with individual aesthetic preferences. These models represent the epitome of AI specialization and customization. They have the potential to transform industries by providing outputs that precisely align with specific business needs, driving efficiency, productivity, and profitability.

3. Infrastructure Layer: The Backbone Of Scalability And Precision

The Infrastructure Layer, often hidden from view, provides the foundational support for generative AI. It includes:

3.1. Hardware Components

Specialized processors like GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units) handle the complex computations required for AI training and inference. These processors significantly accelerate data processing and model training, enabling faster experimentation and more efficient resource utilization. Additionally, storage systems play a crucial role in managing and retrieving vast datasets used in AI applications.

3.2. Software Tools

Leading AI frameworks such as TensorFlow and PyTorch equip developers with the tools needed to build, train, and optimize generative AI models. These frameworks offer a wide range of libraries and pre-built modules, simplifying the development process. Beyond frameworks, data management tools, data visualization tools, optimization tools, and deployment tools are essential components that streamline AI workflows. They ensure data is prepared and cleaned effectively, monitor training and inference, and enable the deployment of trained models in production environments.

3.3. Cloud Computing Services

Cloud providers offer organizations instant access to extensive storage capacity and computing resources. These services are scalable, cost-effective, and eliminate the need for organizations to maintain and manage their physical infrastructure. The cloud-based infrastructure allows organizations to quickly and efficiently scale their AI capabilities, making it an invaluable component of the Generative AI Tech Stack.

Selecting The Right Generative AI Tech Stack: Key Considerations

When venturing into the world of generative AI, selecting the right tech stack is a pivotal decision that can either propel your project to success or hinder its growth. To make informed choices, it’s vital to explore a multitude of factors, each playing a unique role in shaping your tech stack. Let’s dive into these aspects in detail:

1. Project Scale And Objectives

1.1. Tailored Tech for Project Size

Tailoring your technology stack to your project’s size and significance is crucial. Smaller projects can often benefit from streamlined stacks, focusing on simplicity and quick development. On the other hand, medium to large-scale projects demand a more intricate approach involving multiple layers of programming languages and frameworks. For instance, complex projects may involve the integration of front-end and back-end technologies, necessitating expertise in both areas.

For smaller projects, consider lightweight frameworks like Scikit-Learn or Fastai. Medium-scale projects might benefit from TensorFlow or PyTorch, while large projects may require distributed computing with Spark for efficient data processing.

1.2. Data Determines Technique

The type of data you aim to generate strongly influences your choice of generative AI technique, because different techniques excel in different domains. For image and video data, Generative Adversarial Networks (GANs) are a popular choice: a generator network and a discriminator network are trained against each other to produce realistic visual content. For sequential data such as text and music, Recurrent Neural Networks (RNNs) are well suited, since their LSTM or GRU cells carry information from earlier steps in a sequence forward to later ones.

1.3. Navigating Complexity

The complexity of your project demands careful consideration. This includes factors like the number of input variables, the depth of your neural network layers, and the size of your dataset. Complex projects often require more robust hardware, such as Graphics Processing Units (GPUs) or even specialized hardware like Tensor Processing Units (TPUs). However, the choice between GPUs or TPUs depends on the model’s complexity and dataset size.

Furthermore, advanced deep learning frameworks like TensorFlow or PyTorch have become essential for managing intricate neural network architectures. Deep neural networks may involve convolutional layers for images or recurrent layers for sequences.
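A quick way to gauge complexity before choosing hardware is to count parameters. The sketch below does this for a fully connected network, where each layer has (inputs + 1) × outputs parameters, and then estimates training memory. The memory estimate assumes 32-bit values and four copies of each parameter (weights, gradients, and two optimizer buffers, as with Adam) and ignores activations, so treat it as a lower bound rather than a sizing rule.

```python
def dense_param_count(layer_sizes):
    """Weights plus biases for a fully connected network.

    layer_sizes lists the width of each layer, input first.
    """
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

def training_memory_mb(params, bytes_per_value=4, copies=4):
    """Rough memory for parameters during training, in megabytes.

    copies=4 is an assumption: weights, gradients, two optimizer buffers.
    """
    return params * bytes_per_value * copies / 1e6

small = dense_param_count([784, 128, 10])
deep = dense_param_count([784, 1024, 1024, 1024, 10])
print(small)  # 101770
print(training_memory_mb(small), training_memory_mb(deep))
```

Adding inputs or depth raises the count quickly, which is why larger architectures push projects toward GPUs or TPUs.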

1.4. Scalability Essentials

Scalability is a crucial aspect, particularly if your project aims to generate a large number of variations or serve a substantial user base. Choosing a scalable generative AI tech stack is vital. Cloud-based solutions, like Amazon Web Services (AWS), Google Cloud Platform (GCP), or Microsoft Azure, offer elasticity and can scale to meet growing demands. They provide tools for auto-scaling and distributing workloads across multiple servers, helping your system remain responsive even under heavy loads. You can use Docker for containerization and Kubernetes for orchestration, making it easier to scale individual components of your system.
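As an illustration of how auto-scaling decides on capacity, the sketch below applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: scale the replica count by the ratio of the observed metric to its target, then round up. The function name, the clamping bounds, and the sample numbers are assumptions made for this example.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Return a replica count proportional to the observed load.

    current_metric and target_metric are in the same unit, for
    example average CPU utilization in percent.
    """
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so the system never scales
    # to zero or beyond the budgeted maximum.
    return max(min_replicas, min(max_replicas, raw))


# Four replicas running at 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))  # 6
```

A real autoscaler adds tolerances and cool-down periods on top of this rule to avoid rapid scaling back and forth.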

2. Leveraging Experience And Resources

2.1. Team Proficiency

Capitalize on your development team’s expertise. If your team possesses extensive experience in a specific programming language or framework like Python, TensorFlow, or PyTorch, it’s advisable to align your tech stack with their proficiency. This not only expedites development but also enhances the quality and reliability of your generative AI system. You can also encourage continuous learning through online courses and workshops.

2.2. Resource Accessibility

The availability of hardware resources is pivotal. Access to high-performance hardware like GPUs or TPUs significantly accelerates model training and inference. Deep learning frameworks such as TensorFlow and PyTorch are optimized to harness the parallel processing capabilities of this hardware, resulting in faster and more efficient AI model development. If you do not own such hardware, you can explore cloud providers offering GPU/TPU instances, such as AWS EC2, GCP, or Azure VMs.

2.3. Training And Support

Access to training materials and robust support communities can greatly facilitate tech stack adoption. When selecting a generative AI tech stack, consider platforms that provide comprehensive documentation, tutorials, and forums. This ensures that your development team can quickly overcome challenges and harness the full potential of the chosen technology. TensorFlow and PyTorch, for example, have extensive documentation, online courses, and vibrant user communities. You can also utilize platforms like Coursera, edX, or Udacity for additional training resources.

2.4. Budget Constraints

Project budgets can be restrictive. Advanced hardware and frameworks often come with substantial costs. To mitigate budget constraints, explore cost-effective alternatives that align with your project’s requirements. This might involve opting for less resource-intensive hardware or choosing open-source frameworks and community-supported tools that offer powerful generative capabilities without licensing fees. You can also optimize cloud resource usage with reserved instances or spot instances.

2.5. Maintenance And Support

Generative AI systems require continuous updates, fine-tuning, long-term maintenance, and support. Selecting a tech stack with an active and reliable support community can ease the burden of maintenance. Communities provide insights, bug fixes, and best practices, ensuring your system remains robust and secure over time. You can implement continuous integration and deployment (CI/CD) pipelines with tools like Jenkins or GitLab CI for automated updates. Further, it is advisable to leverage GitHub or GitLab for version control and collaboration.

3. Navigating Scalability Challenges

3.1. Dataset Dimensions

The size of your dataset significantly impacts scalability. Large datasets require efficient data processing capabilities. Distributed computing frameworks, such as Apache Spark, are invaluable for handling extensive data. These frameworks allow you to distribute data processing tasks across multiple nodes or servers, reducing processing time and the load on any single machine. Moreover, you can apply techniques such as data sharding or parallelization during preprocessing.
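The sharding idea can be sketched on a single machine with Python's standard library. This is only an illustration of the pattern: the function names and the cleaning step are assumptions, and a framework like Spark would spread the shards across nodes rather than threads.

```python
from concurrent.futures import ThreadPoolExecutor


def make_shards(records, num_shards):
    """Split records into num_shards roughly equal slices."""
    return [records[i::num_shards] for i in range(num_shards)]


def preprocess(shard):
    """Stand-in cleaning step: trim whitespace and lowercase text."""
    return [text.strip().lower() for text in shard]


def preprocess_in_parallel(records, num_shards=4):
    shards = make_shards(records, num_shards)
    with ThreadPoolExecutor(max_workers=num_shards) as pool:
        # map keeps the results in the same order as the shards.
        processed = list(pool.map(preprocess, shards))
    # Flatten the per-shard results back into one list.
    return [item for shard in processed for item in shard]


cleaned = preprocess_in_parallel(["  Hello ", "WORLD", " Generative AI "], num_shards=2)
print(sorted(cleaned))
```

Because each shard is processed independently, the same structure carries over when the workers are separate processes or separate machines.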

3.2. User Interaction

Consider the volume of user interactions your system will encounter. If your project anticipates a large user base or high request volumes, your tech stack should be capable of handling these loads efficiently. Cloud-based solutions, microservices architecture, and load-balancing mechanisms become essential for ensuring seamless user experiences. You can implement load balancing using tools like Nginx or HAProxy to distribute user requests evenly. Microservices architecture can also help you modularize your system for scalability.
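To show what distributing requests evenly means in practice, here is a minimal round-robin sketch, the simplest strategy that load balancers such as Nginx and HAProxy support. The class name and backend addresses are hypothetical, and a production balancer would also track health checks and connection counts.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = cycle(backends)

    def next_backend(self):
        return next(self._backends)


# Hypothetical inference servers.
balancer = RoundRobinBalancer(["10.0.0.1:8000", "10.0.0.2:8000", "10.0.0.3:8000"])
for _ in range(4):
    print(balancer.next_backend())
```

The fourth request wraps around to the first server, so no single instance receives more than its share of traffic.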

3.3. Real-Time Performance

Real-time processing requirements demand high scalability. Applications like live video generation or chatbots must process requests swiftly. Optimizing code for performance and employing lightweight models can ensure rapid response times. Additionally, real-time systems can benefit from asynchronous processing to handle concurrent user requests efficiently. You can optimize your code with JIT (Just-In-Time) compilation, profile it for bottlenecks, and use GPU acceleration where possible for real-time processing.
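Asynchronous processing can be sketched with Python's asyncio. In this example the function names are assumptions and the sleep call merely stands in for a call to a model server; the point is that several requests wait at the same time instead of one after another.

```python
import asyncio


async def generate_reply(prompt, delay=0.05):
    # Placeholder for a network call to a model server (assumption).
    await asyncio.sleep(delay)
    return f"reply to: {prompt}"


async def handle_requests(prompts):
    # gather runs the coroutines concurrently and returns the
    # results in the same order as the inputs.
    return await asyncio.gather(*(generate_reply(p) for p in prompts))


def run_demo():
    return asyncio.run(handle_requests(["hi", "status?", "bye"]))


print(run_demo())
```

With three requests, the total wait is close to one delay rather than three, because the event loop switches between requests while each one is idle.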

3.4. Batch Processing

For scenarios where batch processing is essential, such as generating multiple variations of a dataset, efficient batch processing capabilities are indispensable. Distributed computing frameworks, like Apache Spark, excel in processing large-scale batch jobs. They parallelize tasks, enabling the efficient generation of dataset variations. You can design batch-processing pipelines with Spark or Apache Beam for efficient data transformations and model training.
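At its core, batch processing means grouping work into fixed-size chunks. The helper below is a small standard-library sketch of that step; its name is an assumption, and frameworks like Spark or Beam handle the partitioning for you at much larger scale.

```python
from itertools import islice


def batched(items, batch_size):
    """Yield consecutive lists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


for batch in batched(range(7), 3):
    print(batch)
```

The final batch may be smaller than the rest, which downstream code such as a model's batch inference call needs to tolerate.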

3.5. Cloud-Based Scalability

Cloud-based solutions like AWS, GCP, and Azure offer strong scalability. They provide resources on demand, allowing your system to scale up or down based on requirements. Autoscaling features automatically adjust server capacity to accommodate varying workloads, making these platforms a top choice for highly scalable generative AI systems. For workloads that fit the model, serverless services like AWS Lambda or Google Cloud Functions let you run code without managing servers.

4. Fortifying Security Measures

4.1. Data Security

Protecting data confidentiality and integrity is paramount. Choose a tech stack with robust security features like encryption, access controls, and data masking to safeguard sensitive information. Encryption, for example with a library like PyCryptodome, keeps data confidential during storage and transmission. Access controls, such as OAuth2-based authorization for APIs, together with data masking restrict unauthorized access and exposure of sensitive data.
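Data masking is the easiest of these measures to illustrate without extra libraries. The sketch below hides all but the last few characters of selected fields before a record is logged or displayed; the function names and field names are hypothetical.

```python
def mask_value(value, visible=4):
    """Replace all but the last `visible` characters with asterisks."""
    text = str(value)
    if len(text) <= visible:
        # Too short to reveal anything safely: mask it completely.
        return "*" * len(text)
    return "*" * (len(text) - visible) + text[-visible:]


def mask_record(record, sensitive_fields=("card_number", "ssn")):
    """Return a copy of record with the sensitive fields masked."""
    masked = dict(record)
    for field in sensitive_fields:
        if field in masked:
            masked[field] = mask_value(masked[field])
    return masked


print(mask_record({"name": "Ada", "card_number": "4111111111111111"}))
```

Masking works on a copy, so the original record stays intact for the services that are authorized to see it.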

4.2. Model Protection

Generative AI models often represent valuable intellectual property. Prevent unauthorized access or misuse by selecting a tech stack with stringent security measures. Use version control systems (VCS) like Git with access controls to safeguard model versions. Expose models through APIs with rate limiting to prevent misuse, and combine model versioning with access controls to track changes and manage model access rights effectively.
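Rate limiting is commonly implemented with a token bucket, and a minimal version fits in a few lines. This is a sketch under assumptions: the class name and parameters are made up for the example, and the injectable clock exists only to make the behavior easy to test.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at a steady rate."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self):
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Add the tokens earned since the last call, up to capacity.
        self._tokens = min(self.capacity,
                           self._tokens + elapsed * self.refill_per_second)
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False


bucket = TokenBucket(capacity=2, refill_per_second=1.0)
print([bucket.allow() for _ in range(3)])
```

In an API gateway you would typically keep one bucket per API key or client, so a single caller cannot exhaust the model's capacity.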

4.3. Infrastructure Security

Secure your system’s infrastructure to thwart unauthorized access and cyberattacks. A well-structured tech stack should include security measures such as firewalls, intrusion detection systems, and monitoring tools. Regularly audit system logs and employ real-time monitoring to detect and respond to security threats promptly. Utilize Virtual Private Clouds (VPCs) or Virtual Networks (VNets) to isolate resources and implement intrusion detection systems (IDS) like Snort or Suricata for real-time threat detection.

4.4. Compliance Considerations

Depending on your application, you may need to adhere to specific industry regulations or standards. For example, healthcare applications that handle patient data in the United States must comply with HIPAA, while systems that process payment card data must meet PCI-DSS requirements. Choose a tech stack with built-in compliance features to simplify the process of meeting regulatory obligations. Encrypt sensitive data at rest and in transit, regularly audit logs, and maintain compliance documentation.

4.5. User Access Control

Robust user authentication and authorization mechanisms are essential for controlling system and data access. Ensure that your tech stack offers fine-grained access controls and supports authentication protocols like OAuth or LDAP. Implement role-based access control (RBAC) to define user permissions based on their roles within the system, using frameworks like Keycloak or Auth0, and require multi-factor authentication (MFA) for user access.
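The core of RBAC is a mapping from roles to permissions plus a check against it. The sketch below shows that idea in plain Python; the role names and permission strings are hypothetical, and a platform like Keycloak or Auth0 would manage them for you in a real deployment.

```python
# Hypothetical roles and permissions for a generative AI service.
ROLE_PERMISSIONS = {
    "viewer": {"model:read"},
    "developer": {"model:read", "model:generate"},
    "admin": {"model:read", "model:generate", "model:deploy"},
}


def is_allowed(user_roles, permission):
    """Return True if any of the user's roles grants the permission."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, set())
        for role in user_roles
    )


print(is_allowed(["developer"], "model:generate"))  # True
print(is_allowed(["viewer"], "model:deploy"))       # False
```

Unknown roles grant nothing, so a misconfigured account fails closed rather than open.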

By delving deeper into these considerations and integrating specific technical details, you can make informed decisions when selecting a generative AI tech stack. This comprehensive approach ensures that your project is well-equipped to meet its objectives while maintaining scalability, security, and efficiency throughout its lifecycle.

Benefit For Businesses From Robust Generative AI Tech Stack

Incorporating a robust generative AI tech stack can usher in a multitude of advantages for businesses, reshaping operations and driving innovation across industries. These transformative benefits encompass:

1. Heightened Productivity

Generative AI automates mundane, repetitive tasks, allowing human talent to channel their energies into more creative and strategic pursuits. This leads to improved work efficiency and resource allocation.

2. Streamlined Operations

Businesses can optimize processes through generative AI, such as enhancing customer service interactions, product development workflows, and supply chain management. This streamlining leads to cost savings and faster delivery times.

3. Amplified Creativity

Generative AI fosters creativity by generating novel ideas, designs, and concepts. Companies can leverage this capability to continuously innovate, maintain a competitive edge, and adapt swiftly to evolving market demands.

4. Personalized Experiences

Tailoring products and services to individual customer preferences is made possible through generative AI. Businesses can offer personalized recommendations, content, and marketing campaigns, bolstering customer loyalty and satisfaction.

5. Cost Reduction

Generative AI can cut costs across various aspects of business operations. This includes lowering customer acquisition expenses, optimizing resource utilization, and minimizing errors in production processes.

6. Informed Decision-Making

Data analysis and predictive capabilities of generative AI assist in making informed, data-driven decisions. Businesses gain deeper insights into market trends, customer behavior, and operational efficiency, enabling better strategic planning.

7. Revenue Generation

Generative AI opens up new revenue streams. Businesses can offer generative AI-powered products and services, tapping into emerging markets and monetizing their AI capabilities.

Industry-Specific Applications

a. E-commerce

Online retailers harness generative AI to provide customers with product recommendations that align with their preferences, personalize product listings, and even create photorealistic product images, enhancing the online shopping experience.

b. Customer Service

Enterprises in the customer service sector deploy generative AI-driven chatbots that promptly address customer inquiries, resolve issues, and provide assistance, resulting in improved customer satisfaction and operational efficiency.

c. Marketing

Marketing agencies leverage generative AI to craft highly personalized marketing campaigns, precisely target advertisements, and generate creative content that resonates with diverse audiences, maximizing engagement and conversion rates.

d. Finance

Financial institutions employ generative AI for data analysis, predictive modeling, and developing algorithmic trading strategies, thereby optimizing investment decisions, managing risk, and boosting financial performance.

e. Manufacturing

Manufacturers use generative AI to design products with optimal efficiency, refine production processes, and enhance quality control through anomaly detection, ultimately driving down production costs and improving product quality.

Applications Of Generative AI In Real-world Scenarios

Generative AI, a dynamic field at the intersection of machine learning and creativity, has rapidly evolved to find applications across a wide range of real-world scenarios. These applications not only enhance efficiency but also foster innovation in various industries:

1. Healthcare

Generative AI plays a pivotal role in drug discovery by generating molecular structures with desired properties. It also aids in medical imaging interpretation by enhancing images and detecting anomalies.

2. Art And Design

In the realm of art and design, generative AI is leveraged to craft intricate designs for fashion, interior decor, and graphic arts. It can even simulate the style of renowned artists, producing digital masterpieces.

3. Entertainment

Entertainment benefits greatly from generative AI. It creates realistic virtual actors, generates dialogues, and even assists in screenplay writing. The music industry sees the generation of new compositions and the replication of iconic artist styles.

4. Finance

Generative AI aids in risk assessment by simulating market scenarios. It develops trading algorithms, predicts financial trends, and generates financial reports, empowering investors and financial institutions.

5. Manufacturing

Manufacturing processes have been streamlined with generative AI. It assists in product design optimization, creating lighter and more efficient components through generative design. Quality control is enhanced through anomaly detection.

6. Customer Service

Generative AI-powered chatbots deliver superior customer service by generating human-like responses to customer queries. Personalized marketing campaigns, tailored product recommendations, and targeted ads further enhance customer engagement.

7. Education

In education, generative AI customizes learning materials to suit individual needs, adapting content difficulty and format. It also automates grading and feedback, reducing the administrative burden on educators.

8. Research

Generative AI assists researchers in hypothesis generation, exploring vast datasets, and identifying previously unnoticed patterns. It accelerates the scientific discovery process across diverse fields.

Potential Risks And Challenges Of Generative AI

While the potential of generative AI is immense, several critical risks and challenges must be addressed to ensure responsible and ethical use:

1. Bias

Generative AI models may perpetuate biases present in the training data, resulting in unfair outcomes and discrimination in applications like hiring, lending, and content generation. Mitigation involves thorough data preprocessing and model evaluation for fairness.
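One simple way to evaluate a model for fairness is to compare how often each group receives a positive outcome, a measure often called the demographic parity gap. The sketch below computes it from a list of decisions; the function names and the sample data are made up for illustration, and a real audit would use several metrics.

```python
def selection_rates(decisions):
    """decisions is an iterable of (group, approved) pairs."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + (1 if ok else 0)
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions):
    """Difference between the highest and lowest selection rate."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Made-up decisions for two groups, A and B.
sample = [("A", True), ("A", True), ("A", False), ("A", False),
          ("B", True), ("B", False), ("B", False), ("B", False)]
print(selection_rates(sample))         # {'A': 0.5, 'B': 0.25}
print(demographic_parity_gap(sample))  # 0.25
```

A gap close to zero means the groups are treated similarly on this measure, while a large gap is a signal to revisit the training data or the model.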

2. Misinformation

Generative AI can be exploited to create convincing fake content, including deepfake videos and misleading text. This poses a significant challenge to online trust and digital integrity. Content verification tools and stricter content regulation are necessary countermeasures.

3. Security

Generative AI models can be attacked or manipulated, for example through adversarial inputs, to produce malicious content or deceive security systems, impacting privacy and safety. Ensuring robust security measures for AI models and data is crucial.

4. Intellectual Property

Generative AI raises complex questions about intellectual property rights. As AI generates creative content, determining ownership and copyright becomes a legal challenge that requires new legislation and regulations.

5. Regulation

Governments are grappling with the need to develop regulations to govern generative AI. Striking a balance between innovation and ethical use is essential. Regulatory frameworks should be adaptive and considerate of technological advancements.

Conclusion

The strategic implementation of a generative AI tech stack is a game-changer for businesses aiming to integrate AI into their operations. It’s not just about automating tasks or creating outputs; it’s about harnessing the power of AI to drive efficiency, reduce costs, and tailor solutions to specific business needs. With the right combination of hardware and software, businesses can leverage cloud computing services and specialized processors to develop and deploy AI models at scale. Open-source frameworks like TensorFlow, PyTorch, or Keras equip developers with the tools they need to build custom models for unique use cases, enabling businesses to create industry-specific solutions.

In the fast-paced business world, those who fail to capitalize on the potential of generative AI risk falling behind. A robust generative AI tech stack can keep businesses at the forefront of innovation, opening up new avenues for growth and profitability. Therefore, it’s crucial for businesses to invest in the right infrastructure and tools for the successful development and deployment of generative AI models.

Embrace the transformative power of generative AI and stay ahead in your business journey. Reach out to the team of AI experts at Idea Usher today and explore the endless possibilities together!


FAQ

Q. What is a generative AI tech stack?

A. A generative AI tech stack is a comprehensive set of technologies, tools, and frameworks used to build and deploy generative AI systems. It includes everything from the underlying infrastructure (like servers and storage) to the machine learning models (like GANs or transformers), programming languages, and deployment tools.

Q. Why is a generative AI tech stack important?

A. A generative AI tech stack is crucial for unlocking the full potential of generative AI models. It enables businesses to streamline their workflows, reduce costs, improve overall efficiency, and create highly customized outputs that meet specific business needs.

Q. What are the key components of a generative AI tech stack?

A. The key components of a generative AI tech stack include the Applications Layer (end-to-end apps or third-party APIs), Model Layer (proprietary APIs or open-source checkpoints), and Infrastructure Layer (cloud platforms and hardware manufacturers).

Q. What factors should be considered when choosing a generative AI tech stack?

A. When choosing a generative AI tech stack, consider factors like project specifications, quality and type of data, complexity of the project, and cost and carbon emissions.

Rebecca Lal