The way children interact with technology is shifting so quickly that many classrooms can barely keep pace. Young learners now move between devices and apps with expectations that feel very different from just a few years ago. This shift is driving interest in adaptive platforms such as KidSense AI that can listen and respond in a way that feels natural to a child. It uses child-specific speech models and on-device processing to keep interactions private while remaining responsive.

These capabilities allow the system to adjust to each child through accurate intent detection and real-time feedback loops that refine every response. The goal is not only accuracy but also a design that truly understands how children learn.

Over the past decade, we’ve built numerous child-focused adaptive learning solutions powered by technologies like specialized speech AI and edge intelligence. Drawing on this expertise, we’re writing this blog to outline the steps to develop an adaptive learning platform like KidSense AI. Let’s start.

Key Market Takeaways for Speech Recognition for Kids

According to MarketUS, the kid-focused speech recognition market is gaining momentum as AI models improve at interpreting children’s unique vocal patterns. The broader speech and voice recognition sector is expected to grow from about USD 17 billion in 2023 to roughly USD 83 billion by 2032, at a CAGR of around 20%, with a rising share of that growth driven by child-centered learning and entertainment tools.

Source: MarketUS

Developers are increasingly using engines trained specifically on children’s voices, allowing products to detect mispronunciations, skipped words, and pacing issues as kids read aloud. These models generate actionable insights, such as words correct per minute and hesitation tracking, that support more personalized instruction and provide teachers with real-time visibility.

Examples such as Readability Tutor and the child-specific engine from SoapBox Labs demonstrate how this technology is becoming core infrastructure for literacy solutions.

The multi-year partnership between Scholastic and SoapBox Labs further illustrates this shift, as Scholastic voice-enables programs such as Ready4Reading to deliver practice opportunities and instant fluency analytics directly within its digital curriculum.

What Is the KidSense AI Platform?

KidSense AI is a specialized speech recognition system developed by Kadho Inc. to understand how children speak accurately accurately. First introduced in beta in 2017 and later released as an embedded, fully offline version in 2018, the platform was built from the ground up for real-time interpretation of kids’ voices ages 4 to 12.

Unlike repurposed adult ASR engines, KidSense AI blends neuroscience research on early language development with advanced machine-learning models.

Key Features of the KidSense AI Platform

The platform handles child speech across languages with models tuned for young voices and delivers fast, real-time results. It can run fully offline on devices where privacy must be protected and where data should never leave the hardware. Developers could integrate it into many products because the system is flexible and it performs reliably in different environments.

Multi-Language Understanding

KidSense AI can recognize children’s speech in multiple languages through its cloud engine, enabling global products to deliver consistent, accurate voice experiences.

Designed for Growing Voices

Because children’s pitch, pronunciation, and speech patterns differ from those of adults, the system is tuned specifically for ages 4–12. This age-focused optimization enables it to outperform general-purpose ASR models.

Instant, Real-Time Results

Whether running in the cloud or on a device, the platform delivers immediate transcriptions and responses, enabling smooth, interactive voice experiences.

Fully Offline Embedded Mode

The embedded version works without an internet connection. It processes audio locally on devices such as educational robots, learning tablets, AR/VR headsets, and smart toys, ensuring fast, responsive interactions.

Strict Privacy Protection

To support environments where children’s data must be safeguarded, the offline version does not collect, save, or transmit voice recordings. This design aligns with COPPA, GDPR, and other child-data regulations.

Flexible Integration for Product Teams

Developers can embed KidSense AI into a wide variety of platforms, from mobile apps to toys and immersive environments. Its versatility enables it to integrate with classroom tools, consumer products, and interactive learning systems.

AI Features That Can Enhance an Adaptive Platform

An adaptive speech platform for children should use models trained on child-specific audio to interpret speech patterns with higher accuracy. It also adjusts responses based on context and emotional cues so the interaction feels supportive and technically precise. The system should run efficiently on a device with robust privacy controls, enabling it to deliver fast feedback and adapt its learning logic in real time.

1. Age-Adaptive Speech Models

These models are trained specifically on children’s vocal patterns so the system can understand high-pitched voices, evolving articulation, and age-based language usage. By adapting to developmental differences, accuracy improves significantly compared to adult-trained models.

Example: KidSense.ai uses age-specific acoustic models designed exclusively for children’s speech.

2. Emotion & Sentiment Detection

Emotion-aware AI identifies cues such as frustration, excitement, or confusion based on tone and rhythm. This allows the system to adjust responses, offer encouragement, or slow down the pace to maintain an engaging experience.

Example: Miko 3 Robot uses emotion detection to adapt conversational tone and maintain positive interactions with kids.

3. Personalized Learning & Interaction Engine

The platform builds a profile for each child based on speech habits, learning progression, and behavior patterns. It then customizes interactions, lessons, and challenges to fit the child’s individual needs and development.

Example: Roybi Robot personalizes language-learning sessions using each child’s speaking patterns and performance data.

4. On-Device AI Optimization

Running recognition locally reduces latency and enhances privacy by avoiding cloud processing. This is especially important for children’s devices, which need to respond instantly while protecting sensitive voice data.

Example: LeapFrog Learning Devices use embedded speech processing so kids can interact with learning apps without requiring an internet connection.

5. Context-Aware Language Understanding

This feature enables the system to remember previous questions, follow conversational themes, and interpret meaning from multi-turn context. It makes interactions feel more natural and intuitive for kids.

Example: Amazon Kids+ on Echo Dot Kids Edition uses context-aware natural language understanding tailored for child-friendly dialogues.

6. Adaptive Feedback & Speech Coaching

AI analyzes pronunciation and fluency, giving real-time corrections and adjusting lesson difficulty to match the child’s progress. It supports language learning, reading practice, and speech development.

Example: Vosyn Kids Speech Therapy App provides corrective feedback and adaptive speech exercises based on each child’s pronunciation patterns.

7. Safety and Compliance Intelligence

Advanced filtering and compliance logic ensure child-safe interactions by adhering to regulations such as COPPA and GDPR-K. The system controls data retention, detects harmful content, and protects user privacy.

Example: Google Assistant for Families (Family Link) applies strict privacy controls and child-safe filtering to all kid-directed voice interactions.

How Does the KidSense AI Platform Work?

The KidSense AI platform combines a tiny wake-word engine, a child-tuned speech-recognition core, and an adaptive learning layer that analyzes how kids speak. It processes audio in real time in the cloud or directly on the device, enabling quick, reliable responses.

This design enables the system to handle a wider pitch range and variable articulation with technical precision while protecting user privacy.

Layer 1: Triggly – The Wake-Word Engine

A small, efficient module that listened for specific trigger words on kid-focused devices. It acted as the first point of interaction, ensuring devices responded quickly and naturally to a child’s voice.

How it works:

- Always-on, low-power listening: Triggly uses compact acoustic models that run continuously without draining a device’s battery.

- Tuned for children’s voices: Its training emphasized the pitch ranges and pronunciation quirks typical of children rather than adults.

- Smart noise handling: It could recognize intentional wake-word use while ignoring background noise, classroom chatter, or faint speech from across the room.

- Tiny deployment footprint: The engine could run on microcontrollers with roughly 100 KB of RAM, enabling even simple toys or handheld devices to support voice activation.

Layer 2: Vocally – The Core ASR Engine

A full automatic speech recognition system engineered for the acoustic and developmental patterns of children’s speech and vocally served as the platform’s main intelligence layer, turning raw sound into accurate, interpretable language data.

Pediatric Acoustic Modeling

- Trained on a large multilingual dataset of children’s recorded speech.

- Applied techniques like Vocal Tract Length Normalization, helping the system account for smaller vocal anatomy and higher pitch frequencies.

- Included feature extraction methods designed to handle the rapid pitch movement and less stable articulation typical of young speakers.

Neuroscience-Informed Processing

Integrated insights from early language development to better interpret immature sound production. Considered common speech phenomena in young children such as:

- Sound substitutions

- Simplified consonant clusters

- Inconsistent pacing

This allowed the system to understand speech that would confuse a conventional adult-trained model.

Real-Time Adaptation

Over time, the engine could become more familiar with an individual child’s accent or speech patterns. Adaptation happened locally on the device whenever possible, keeping personal audio data private.

Layer 3: Adaptive Learning Intelligence

The layer that turned recognition accuracy into meaningful, educational feedback. It bridged the gap between simple transcription and actionable learning insights.

What it enabled:

- Phoneme-level insight: The system could break down spoken words into their component sounds, enabling identification of specific pronunciation challenges.

- Personalized progress tracking: Each child developed a profile that reflected their speaking patterns, strengths, and areas needing support.

- Dynamic content adjustment: Educational apps could automatically adjust difficulty, focus attention on specific phonemes, or redirect learning paths based on real-time speech performance.

What is the Business Model for KidSense AI Platform?

KidSense.ai, developed by Kadho Inc., carved out a specialized niche in children’s speech technology by building one of the largest young-voice datasets in the industry and packaging it into a privacy-safe, offline speech-recognition engine.

KidSense AI is not a consumer-facing product. Instead, it provides the speech engine inside toys, robots, learning apps, AR/VR systems, and other kid-centric devices. The value proposition rests on three pillars:

KidSense’s revenue model is structured to match the economics of hardware:

One-time Licensing Fees

For low-margin, short-life products (e.g., toys), partners pay a flat fee for embedding the engine. This avoids ongoing costs for manufacturers and ensures predictable per-product economics.

Annual SaaS / Subscription Contracts

For devices that stay active longer (e.g., educational robots or connected learning systems), KidSense offers:

- yearly licensing

- optional over-the-air model updates

- multi-language support packages

- technical maintenance

This turns some customers into recurring revenue accounts.

Customizations & enterprise-level agreements

Large companies occasionally pay for:

- Tuning the models for their product line

- Adding new languages

- Embedding neuroscience-inspired features, Kadho developed initially

Although not publicly disclosed, such deals are common with enterprise ASR licensing.

Revenue Performance

While later financials weren’t publicly disclosed, early traction shows commercial viability:

- $1.2M in 2018 from toy and robotics clients (names undisclosed due to NDAs).

- Continued global deployments after KidSense moved from a 2017 cloud beta to a fully offline engine in 2018, which expanded its applicability.

- No reliable public data has surfaced on post-2018 revenues, especially after the ROYBI acquisition in 2021.

Funding and Capital Structure Before Acquisition

KidSense operated as a venture-backed startup focused on research and development, raising about $3.5 million before its acquisition.

Early investors such as Plug and Play Tech Center, Beam Capital, Skywood Capital, SFK Investment, and Sparks Lab supported the company’s development of its offline, child-specific speech recognition technology.

In 2018, the company explored raising an additional $3M bridge toward a Series A. Specific valuation terms and financial metrics after 2021 were not publicly released.

Strategic Developments Influencing the Business Model

2018 Shift to Fully Offline ASR

This marked a critical inflection point: the business became more attractive to global manufacturers seeking privacy-compliant technology without an ongoing cloud bill.

2021 Acquisition by ROYBI

ROYBI, known for AI-driven educational robots, integrated KidSense to strengthen its multilingual and child-focused learning products. The acquisition aligned KidSense’s technology with a broader edtech platform that already relied on language learning and speech interaction.

Continued Global Deployments

Since leaving stealth mode, KidSense’s speech engine has powered products launched internationally, though few details are public due to OEM nondisclosure agreements.

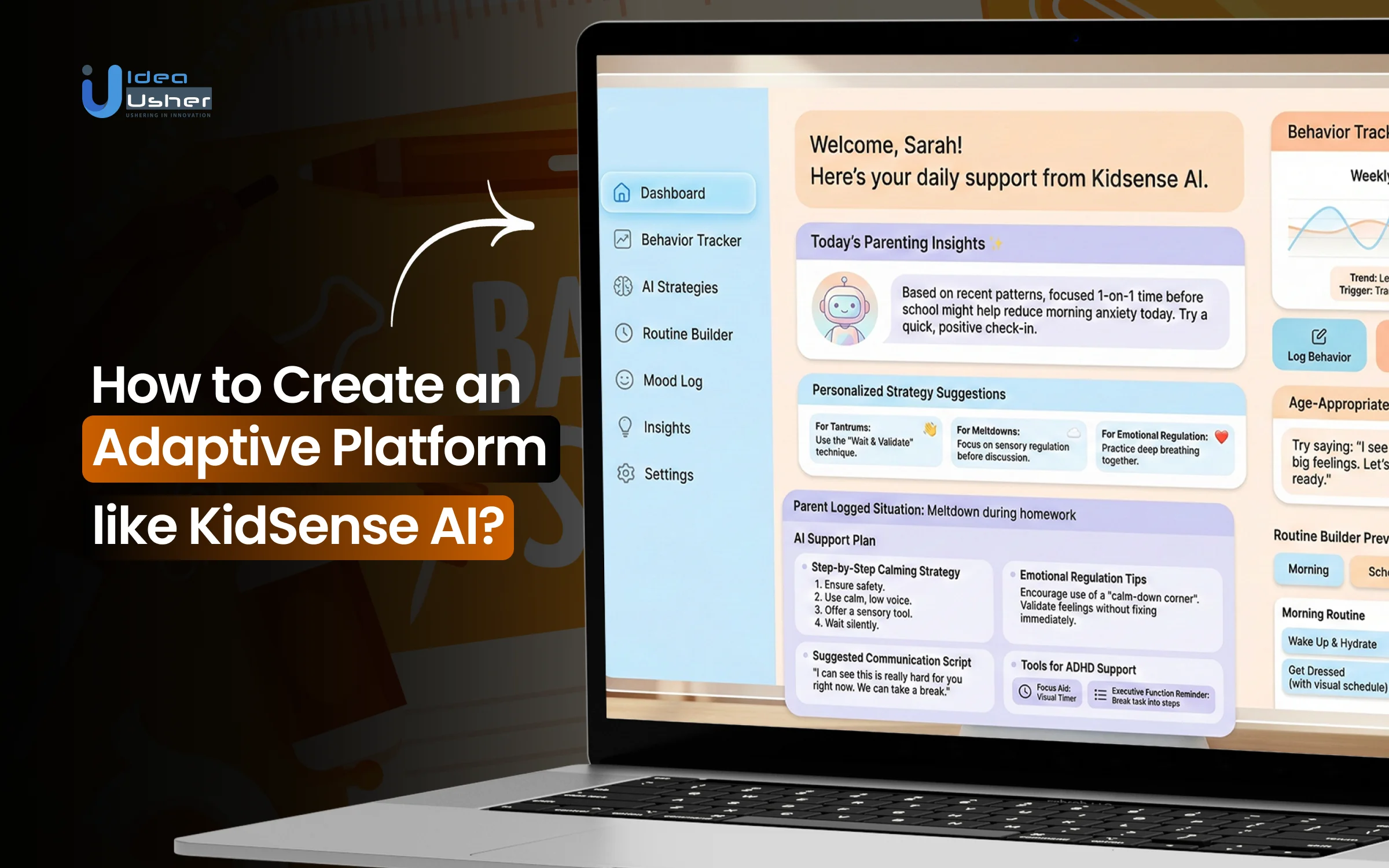

How to Create an Adaptive Platform like KidSense AI?

To create an adaptive platform like KidSense AI, you would start by building child-tuned speech models that run securely on a device and respond with low latency. Then you would layer an adaptive engine that learns a child’s skill level in real time and adjusts tasks with reliable precision.

We have created multiple KidSense-level adaptive platforms for our clients, and this is how we typically deliver them.

1. Child Acoustic Model

We begin by developing a proprietary acoustic model designed for children’s speech. Our team collects and curates compliant, child-focused datasets and applies developmental modeling to ensure the system accurately interprets the unique pitch and variability of young speakers.

2. Edge ASR Engine

Next, we engineer an on-device ASR engine that delivers ultra-low latency while preserving full privacy. We compress and quantize the speech models to run smoothly on constrained hardware.

3. Adaptive Learning Engine

Our adaptive learning engine combines a real-time learner model with a granular domain model that maps every micro-skill. Using an RL-based adaptation engine, we personalize content, difficulty, and learning steps for each child.

4. Privacy by Design

Because children’s safety is non-negotiable, we build every platform with privacy-first engineering. All voice processing stays on-device, data is protected with local encryption, and optional federated learning enables system-wide improvements without exposing personal information. Parents retain complete control through strict, customizable oversight tools.

5. Multi-Language Support

We integrate multilingual ASR models that can handle bilingual users, regional accents, and natural code-switching. This ensures consistent accuracy across diverse linguistic environments.

6. Hardware Testing and Optimization

We validate and optimize the system directly on real-world hardware, including chips, smart toys, and wearables. Deep power and latency profiling ensure smooth performance and long battery life.

Successful Business Models for Adaptive Platforms like KidSense AI

Adaptive platforms built specifically for children, especially those involving speech recognition, personalized learning, or intelligent feedback loops, tend to thrive under a handful of proven business models. The models below consistently generate strong traction and sustainable revenue in this sector.

1. B2C Freemium / Subscription Model

This approach focuses directly on families. Parents gain access to a core experience at no cost, allowing them to gauge whether the platform truly supports their child’s learning needs.

Why It Works

A no-risk entry point significantly increases adoption among caregivers who may be hesitant to try a paid learning tool. Premium plans often include advanced personalization tools and parent dashboards.

Example:

Ello, an AI-driven reading companion, uses this model effectively. Its Adaptive Learn™ system evaluates how children read aloud and delivers tailored coaching. Ello charges $19.99 per month or $119.99 per year for full access.

2. B2B2C Licensing for Schools and Districts

In this model, platforms sell directly to educational institutions rather than individual families. Schools purchase annual licenses on a per-classroom, per-school, or per-student basis.

Why It Works

Districts value tools that improve learning outcomes and reduce teacher workload. By offering compliance with privacy laws such as FERPA and COPPA, adaptive platforms become trusted long-term partners.

Examples:

Lexia Core5, a flagship literacy platform from Lexia Learning, uses a pure B2B licensing model. Lexia’s literacy products generated over $112 million in revenue in 2021, driven largely by district-wide contracts and school subscriptions.

3. B2B SaaS for EdTech and Device Manufacturers

Companies with deep technical expertise often license their adaptive engines, such as speech recognition models or learning algorithms, to other businesses. This model positions the platform as infrastructure rather than a consumer-facing product.

Why It Works

Many toy companies and EdTech developers want advanced AI capabilities but lack the resources to build them internally. Revenue can be structured through usage-based fees, per-device licensing, or annual enterprise contracts.

Example:

KidSense AI is a strong example. Its specialized speech recognition engine, designed specifically for children, was compelling enough that ROYBI Inc. acquired it to strengthen its educational robot. This acquisition underscores the value of embedded, child-focused AI for companies seeking to differentiate hardware or software for young learners.

Speech Recognition Assessments Can Improve Reading Scores by 40%

According to a study evaluating an AI-based speech recognition tool among 100 Moroccan students in grades one to three. These learners were between six and nine years old and were placed in equal groups of fifty to ensure the results remained reliable. The ASR group increased from 45.6 to 63.4, while the control group increased from 43.9 to 49.8, indicating that speech recognition support may yield stronger gains in early reading.

Speech recognition assessments may improve reading scores by nearly 40 percent by providing children with immediate, precise feedback that strengthens phonological skills. The system listens with consistent accuracy, so a child could adjust errors in real time and build stronger decoding pathways.

1. A Real-Time Phonological Feedback Loop

In most classrooms, feedback comes later, after a teacher has listened, evaluated, and had time to respond. By then, the learning moment has passed.

Speech recognition changes that dynamic entirely.

What makes the difference?

- Phoneme-level accuracy: If a child says “brack” instead of “black,” the system does not simply mark it wrong. It identifies the exact sound that needs correction.

- Immediate reinforcement: Because feedback is immediate, the child can adjust and try again while the brain is still engaged.

- Growing self-monitoring skills: As children hear and see their reading errors reflected back to them, they develop strategies to catch mistakes on their own, a strong predictor of later reading fluency.

2. Children Learn Better

There is a well-documented phenomenon called the protégé effect, which shows that people learn more effectively when they believe they are teaching someone else.

With speech recognition tools:

- The child becomes the “expert” reading to the system.

- The technology listens attentively and without judgment.

- Children naturally focus more, slow down, and pay closer attention to accuracy.

This shift in role reduces performance pressure and boosts intrinsic motivation. It is practice that feels safe, supportive, and purposeful.

3. Personalized Pacing

A classroom teacher manages many learners at once. Speech recognition software, on the other hand, adapts to the child in real time.

It can:

- Identify specific decoding challenges, such as blending sounds or tricky vowel combinations.

- Provide additional practice where needed.

- Move the child forward only after mastery is demonstrated.

- Prevent both frustration and boredom.

This creates a personalized pathway that reflects how each child learns rather than how the curriculum is scheduled.

Beyond Higher Scores: Deeper, Holistic Benefits

The measurable gains are only part of the story. Children experience breakthroughs that traditional assessments rarely provide.

- More motivation: Gamified progress tracking, small achievements, and clear indicators of improvement make reading practice an activity children enjoy.

- Lower anxiety: Practicing with a nonjudgmental digital listener removes the fear of making mistakes in front of peers or adults.

- Stronger teacher insights: Instead of spending hours listening and scoring, teachers receive clear diagnostic reports, enabling them to focus on providing targeted instruction where it matters most.

The result is a classroom where teachers intervene more effectively, children engage more confidently, and reading growth accelerates.

Common Challenges of an Adaptive Platform like KidSense AI

Building an ASR platform designed specifically for children is far more complex than adapting an adult speech model. After supporting numerous clients in this space, we’ve identified the most frequent challenges and the proven, scalable solutions that help teams overcome them.

1. Obtaining Child Speech Data

Collecting high-quality child speech data is one of the largest hurdles for any kid-focused voice AI system. Children’s voices vary widely by age, region, and emotional state, making broad coverage difficult. Additionally, ethical and legal restrictions (COPPA, GDPR-K, etc.) severely limit what data can be collected and how it can be used.

Solution:

By combining advanced synthetic child-voice generation with fully consented, regulation-compliant data collection, teams can build robust datasets without compromising safety or privacy.

Synthetic augmentation fills gaps in age ranges and accents, while controlled collection ensures clean, diverse training samples. This hybrid approach accelerates dataset expansion and significantly improves model stability across age groups.

2. Model Accuracy at High Pitch

Children exhibit much higher and more variable pitch frequencies than adults. Conventional ASR systems, trained primarily on adult voices, struggle with pitch fluctuations, rapid articulation changes, and nonstandard pronunciations.

Solution:

F₀ (fundamental frequency) adaptive modeling enables the system to track and interpret high-pitch variations with far greater precision.

When paired with loss functions designed for high-variance speech patterns, the model generalizes better across ages, dialects, and speech idiosyncratic features. This leads to dramatic improvements in recognition accuracy and lower error rates in real-world environments.

3. Running ASR on Low-Power Chips

Many child-focused products, such as educational toys, reading devices, and wearables, operate on extremely constrained hardware. Running real-time speech recognition on these devices is challenging due to limited CPU, memory, and battery capacity.

Solution:

Techniques such as quantization, pruning, and knowledge distillation reduce inference size without compromising accuracy. When combined with platform-specific DSP optimization, the ASR pipeline becomes lightweight enough for on-device execution.

This results in lower latency, higher reliability, and a smoother user experience on affordable, mass-market hardware.

4. Maintaining Privacy Compliance

When working with children’s data, privacy is non-negotiable. Parents, schools, and regulators expect strict safeguards and transparency. Cloud-based speech processing often raises concerns about storage, transmission, and control of voice data.

Solution:

Processing audio directly on the device eliminates the need to send raw speech to external servers.

By keeping logs local and storing only anonymized performance metrics when absolutely necessary, developers can maintain compliance with COPPA, GDPR-K, FERPA, and other child-data regulations. This architecture also supports stronger trust and reduces overall security risk.

Tools & APIs to Create an Adaptive Platform like KidSense AI

Creating an adaptive voice platform like KidSense AI requires integrating specialized speech models, privacy-focused edge computing, and intelligent learning systems that adapt to each child’s needs.

It also requires careful coordination among audio processing, on-device inference, and child-safe data practices to ensure the experience feels natural, responsive, and secure.

1. Speech Processing & Modeling

PyTorch

PyTorch is often used to experiment with custom speech models. Its flexibility allows rapid iteration on architectures designed for the variability and pitch characteristics of children’s voices.

TensorFlow Lite

Once your research models stabilize, deployment efficiency becomes crucial. TensorFlow Lite focuses on compression and hardware-aware optimizations to run models on low-power devices.

Kaldi / Vosk

Kaldi’s modular ASR pipelines are valuable when you need a classical approach with deep control over the decoding process. Vosk offers a simpler API and smaller models that help you build quick prototypes.

NVIDIA NeMo

NeMo provides ready-made components for ASR, NLP, and TTS. Its transfer-learning workflow allows you to adapt strong pretrained models using smaller, curated children’s datasets.

2. Edge & Embedded Development

TensorFlow Lite Micro

TFLite Micro enables ML models to operate on microcontrollers with extremely limited memory. This supports fully on-device processing, a key privacy requirement for child-focused systems.

ARM CMSIS-NN

CMSIS-NN delivers optimized neural network kernels for ARM Cortex-M processors. These optimizations enable smooth real-time inference under tight performance constraints.

Qualcomm AI Engine / SNPE

For Snapdragon-based devices, SNPE can access dedicated hardware acceleration. This provides faster inference while keeping power consumption manageable.

3. Adaptive Learning Engine

Reinforcement Learning Frameworks

Reinforcement learning helps the platform adjust difficulty levels and instructional decisions based on children’s performance. These frameworks supply the scaffolding for designing and updating such policies.

ONNX Runtime

ONNX Runtime allows interoperability across PyTorch, TensorFlow, and other ML toolchains. It also provides optimized execution environments for a broad range of hardware targets.

4. Privacy & Security

On-Device Encryption Libraries

Even with offline processing, learning profiles and embedded speech features must be protected. Libraries such as libsodium and secure enclave APIs safeguard data at rest.

Federated Learning (TensorFlow Federated)

Federated learning enables model improvement without collecting raw audio. Devices compute local updates and share only transformed parameters.

5. Specialized Tooling

GAN-Based Synthetic Speech Generation

Synthetic speech produced by GAN models can help warm-start training when real children’s recordings are limited. These synthetic samples provide useful coverage for early experimentation.

Annotation Tools (ELAN, Praat)

Tools such as ELAN and Praat enable linguists to label children’s speech at a fine-grained phonemic level. These annotations support the development of pronunciation-scoring and corrective-feedback systems.

Conclusion

Privacy-first ASR for children is critical because their voice data should remain protected on the device, and KidSense AI has demonstrated that an edge-ready pipeline can set a solid benchmark for secure adaptive recognition. Platform owners and enterprises might see that future voice systems will depend on models that learn locally and respond naturally without exposing raw audio. Idea Usher helps teams build compliant, high-accuracy edge platforms through a transparent, collaborative process, so you always know how your system works and how it safeguards young users.

Looking to Develop an Adaptive Platform like KidSense AI?

Idea Usher can help you build a platform like KidSense AI by designing on-device models that securely process children’s speech and adapt in real time. With over 500,000 hours of coding experience, our team of ex-MAANG/FAANG developers will engineer a lightweight system that learns from each interaction and delivers precise phoneme-level feedback.

You might also benefit from our ability to scale multilingual recognition so your product evolves smoothly as you grow.

What We Offer:

- Advanced speech recognition and adaptive learning algorithms

- Child-safe AI architecture built with data privacy at its core

- Scalable, cloud-based solutions ready for mass deployment

- Seamless integrations with existing LMS and analytics tools

Explore our latest projects to see the kind of cutting-edge AI solutions we’ve created.

Work with Ex-MAANG developers to build next-gen apps schedule your consultation now

FAQs

A1: You could build a reliable child-focused ASR engine without harvesting large pools of child audio because modern synthetic voice generation can create controlled training signals, and small ethical datasets may still provide enough variation to help the model generalize while keeping privacy intact.

A2: The cost may vary because each build depends on language scope, feature depth, and target hardware, yet most teams should expect a range that starts near fifty thousand dollars and can exceed two hundred thousand as the system becomes more adaptive and operates across more environments.

A3: Yes, because with careful model pruning and quantization, the ASR stack can run efficiently on constrained processors, and you might still achieve real-time inference that feels natural to young users without stressing the device.

A4: Yes, provided that all speech processing stays on the device and the system never exports identifiable audio, and this local pipeline will usually allow you to meet strict regulatory controls while still delivering consistent recognition performance.