Grading assignments, essays and exams demands extensive time, and many teachers struggle to keep up with growing workloads and expectations. Maintaining consistency, giving detailed feedback and returning grades quickly can be challenging. These pressures are pushing schools and edtech teams to consider an Edexia-like AI grading system that automates routine evaluation while helping educators remain accurate, fair and responsive.

Modern AI grading tools demonstrate how powerful assessment can be when machine learning, natural language processing and rubric-based evaluation work together. They analyze responses at scale, highlight errors, detect patterns and generate constructive feedback. These systems act as intelligent assistants, reducing manual effort and giving educators more time for personal instruction and student engagement.

In this guide, we’ll explore how to create an AI grading system similar to Edexia, the technology that powers automated assessment, and the key features needed for accuracy, transparency, and classroom adoption. This blog will help you understand the full roadmap for developing a reliable AI-driven grading solution.

What Is Edexia, an AI Grading System?

Edexia is an AI grading system that helps teachers evaluate student work efficiently, consistently, and transparently. It analyzes assignments using rubric criteria, adapts to each teacher’s style, and supports various work types, including essays and handwritten responses. Learning from corrections, this platform aligns with individual expectations over time, ensuring accurate grading without removing educator control.

Because it adapts to each educator’s grading philosophy rather than to generic standards, its suggestions stay close to what the teacher would have written. Its transparent process shows teachers why it suggests specific grades or comments, which builds trust. Edexia respects teacher authority while providing the detailed feedback students need to improve.

- Supports diverse assessment types by grading essays, math problems, science reports, diagrams, handwritten work, graphs, and more.

- Teacher-trained grading style adaptation allows the system to learn from a teacher’s corrections and apply their standards to future grading.

- Transparent rubric breakdown and feedback generation provides clear criterion-by-criterion evaluation and meaningful student feedback.

- Bulk-upload and class-wide grading support enables efficient handling of multiple assignments and large class submissions.

- Time-saving potential comes from automating grading and feedback, reducing workload and giving teachers more time for instruction.

Business Model:

Edexia is a verticalized AI-in-Education tool that resolves a major pain point: the heavy, error-prone, time-consuming nature of grading and feedback with a scalable, institution-ready approach.

- AI grading assistant: Edexia automates the evaluation of student work across multiple subjects and formats, including essays, math problems, handwritten assignments, diagrams, graphs, and science reports.

- Teacher-trained grading adaptation: The system learns each educator’s rubric and grading style, aligning future assessments with their standards while reducing workload.

- Scalable assessment solution: Supports bulk uploads, class-wide workflows, and diverse assessment types, enabling institutions to manage grading efficiently at scale.

- Productivity and consistency gains: By automating marking and feedback (up to ~80% time savings), Edexia improves educator efficiency and ensures consistent evaluation across assignments.

Funding Summary:

Edexia has raised a seed funding round of USD 500,000, led by Y Combinator. This early investment validates the platform’s approach to AI-driven grading and highlights strong confidence in its market potential. This seed raise demonstrates that AI assessment tools are gaining meaningful early-stage traction. For anyone planning to build and launch a similar platform, Edexia’s funding signals clear investor interest in scalable, workflow-focused educational AI solutions.

Types of AI Used in an AI Grading System

AI grading platforms rely on multiple intelligent models working together to evaluate assignments accurately and consistently. Below is a breakdown of the essential AI types that power automated grading, feedback generation and teacher-style adaptation.

| Type of AI | Purpose | How It Supports Grading |
| --- | --- | --- |
| Natural Language Processing (NLP) | Understands written student responses | Interprets essays, short answers and explanations with semantic comprehension. |
| Computer Vision (CV) | Processes visual or handwritten submissions | Reads handwritten work, diagrams and math steps through image-to-text extraction. |
| Machine Learning (ML) Classification | Scores responses using learned patterns | Applies rubric-based classification to match student work with scoring criteria. |
| Generative AI Models | Creates feedback and improvement suggestions | Produces criterion-aligned feedback, summaries and personalized guidance. |
| Retrieval-Augmented Generation (RAG) | References rubrics and curriculum documents | Ensures outputs stay aligned with academic standards and teacher rubrics. |
| Adaptive Learning Algorithms | Learns teacher grading styles | Adjusts scoring logic using preference-driven calibration for each educator. |
| Sentiment and Tone Analysis | Evaluates tone and clarity in responses | Helps assess writing quality, argument strength and communication effectiveness. |
| Anomaly Detection Models | Identifies errors or inconsistent grading | Flags unusual responses or scoring mismatches through self-review heuristics. |

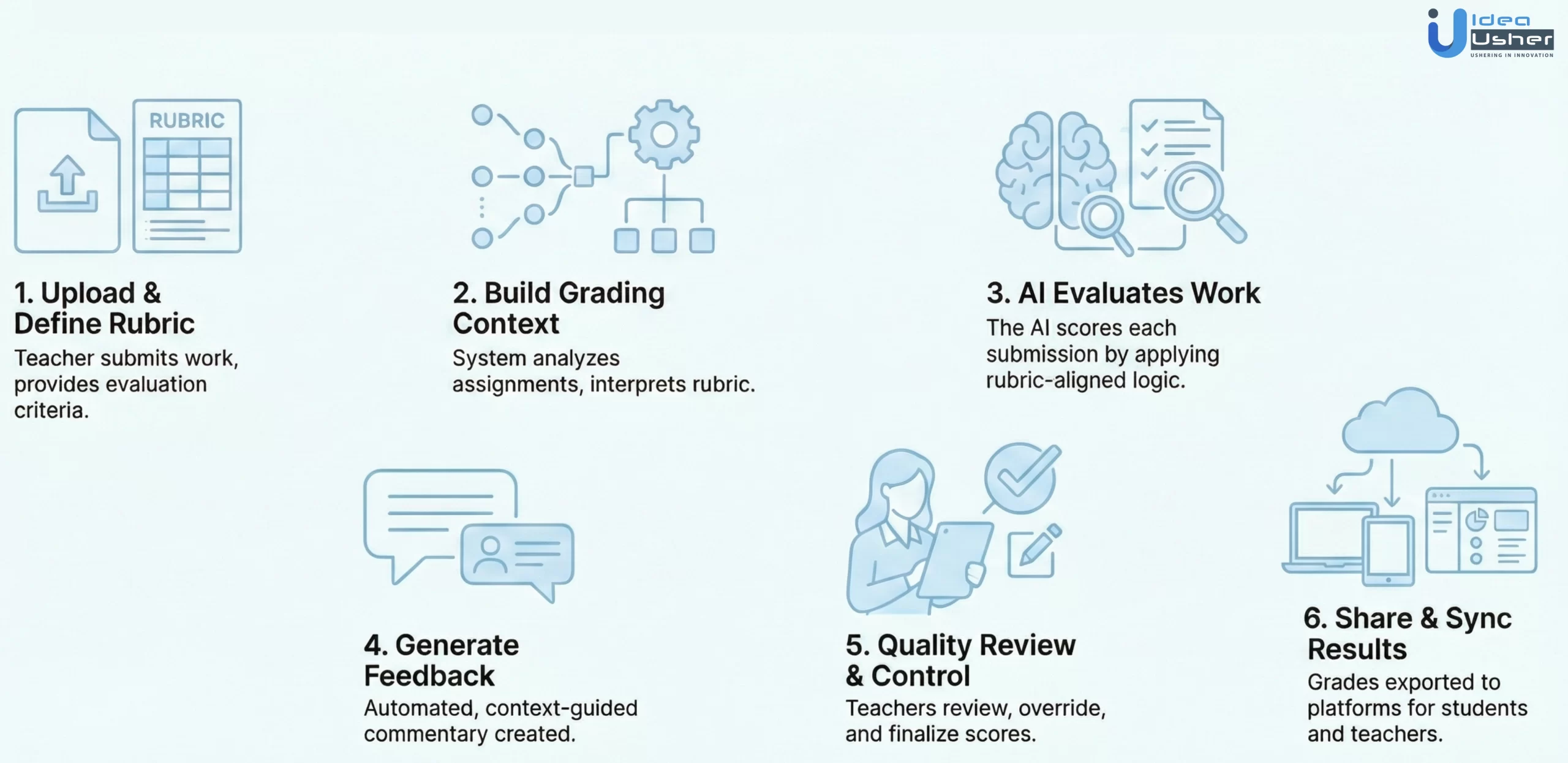

How does an AI Grading System Work?

An Edexia-like AI grading system uses NLP and machine learning to evaluate student work quickly and accurately. It automates scoring, feedback, and rubric alignment, helping teachers save time and maintain consistent assessments.

1. Teacher Uploads Assignments and Defines the Rubric

The process begins when a teacher uploads student submissions in any supported format such as essays, handwritten responses, diagrams or math solutions. The teacher also provides a grading rubric or selects an existing one so the system knows exactly how to evaluate each criterion.
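
To make the rubric step concrete, here is a minimal sketch of how a rubric might be represented in code. The class names (`Criterion`, `Rubric`) and the sample essay rubric are assumptions made for illustration, not Edexia's actual data model.

```python
from dataclasses import dataclass, field


@dataclass
class Criterion:
    """One rubric row: what is assessed and how many points it carries."""
    name: str
    description: str
    max_points: int


@dataclass
class Rubric:
    """A named set of criteria that the teacher uploads or selects."""
    title: str
    criteria: list[Criterion] = field(default_factory=list)

    def total_points(self) -> int:
        return sum(c.max_points for c in self.criteria)


# Hypothetical essay rubric, used only for illustration.
essay_rubric = Rubric(
    title="Persuasive essay",
    criteria=[
        Criterion("thesis", "States a clear, arguable claim", 4),
        Criterion("evidence", "Supports the claim with relevant sources", 4),
        Criterion("clarity", "Organized and readable prose", 2),
    ],
)
print(essay_rubric.total_points())  # 10
```

Keeping each criterion as its own record makes it straightforward to score, explain and calibrate criteria independently later in the workflow.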

2. System Analyzes the Input and Builds Grading Context

Once the files and rubric are submitted, the platform extracts text, structure or visual elements from each assignment. It then builds a grading context by interpreting rubric parameters, performance indicators and teacher expectations, preparing the AI for precise evaluation.

3. AI Evaluates Each Response Using Rubric Logic

The AI scores student work by applying criterion-level analysis, checking clarity, reasoning, completeness, correctness and subject understanding. The grading engine uses rubric-aligned scoring models that ensure each decision matches the defined evaluation standards.
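
As a rough illustration of criterion-level scoring, the sketch below combines per-criterion points into a total while keeping every value inside the rubric's range. The function name `score_submission` and the sample numbers are hypothetical; in a real system the per-criterion points would come from a scoring model.

```python
def score_submission(criterion_scores, max_points):
    """Combine per-criterion scores into a breakdown, a total and a percentage.

    criterion_scores: points proposed for each criterion (e.g. by a model).
    max_points: the rubric's maximum for each criterion.
    """
    breakdown = {}
    for name, limit in max_points.items():
        raw = criterion_scores.get(name, 0)
        # Clamp so a model error can never leave the rubric's range.
        breakdown[name] = max(0, min(raw, limit))
    total = sum(breakdown.values())
    percent = round(100 * total / sum(max_points.values()), 1)
    return {"breakdown": breakdown, "total": total, "percent": percent}


result = score_submission(
    {"thesis": 3, "evidence": 5, "clarity": 2},  # hypothetical model output
    {"thesis": 4, "evidence": 4, "clarity": 2},
)
print(result["total"], result["percent"])  # 9 90.0
```

Note that the out-of-range `evidence` score of 5 is clamped to the rubric maximum of 4 rather than silently inflating the total.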

4. Teacher-Style Adaptation

Teachers can adjust initial AI scores using platform calibration to guide the system’s learning. The system learns these adjustments through preference-driven tuning, aligning future grading with the teacher’s personal style, strictness level and interpretation of quality.
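
One simple way to picture this adaptation is a per-criterion offset learned from teacher corrections. The sketch below is a deliberately basic stand-in (an average of past differences) for the preference-driven tuning described above. The `TeacherCalibration` class is hypothetical and not how any specific product implements it.

```python
from collections import defaultdict


class TeacherCalibration:
    """Tracks how far a teacher's corrections sit from the AI's first scores."""

    def __init__(self):
        self._diffs = defaultdict(list)

    def record(self, criterion, ai_score, teacher_score):
        # Store the signed correction: negative means the teacher is stricter.
        self._diffs[criterion].append(teacher_score - ai_score)

    def offset(self, criterion):
        diffs = self._diffs.get(criterion, [])
        return sum(diffs) / len(diffs) if diffs else 0.0

    def adjust(self, criterion, ai_score, max_points):
        # Shift the AI score by the learned offset, staying within the rubric.
        adjusted = ai_score + self.offset(criterion)
        return max(0.0, min(adjusted, float(max_points)))


cal = TeacherCalibration()
cal.record("evidence", ai_score=4, teacher_score=3)
cal.record("evidence", ai_score=3, teacher_score=2)
print(cal.adjust("evidence", ai_score=4, max_points=4))  # 3.0
```

Here the teacher has twice marked `evidence` one point lower than the AI, so future `evidence` scores are nudged down by one point. Criteria with no recorded corrections are left unchanged.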

5. Automated Feedback Generation

After scoring, the system generates detailed feedback for each rubric criterion. This feedback is constructed using context-guided commentary, highlighting strengths, weaknesses and improvement suggestions. Students receive clear insights without teachers manually writing repetitive remarks.
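
The shape of criterion-level feedback can be sketched with plain templates. A production system would typically use a generative model for the wording. The thresholds and phrases below are assumptions chosen only to show the structure.

```python
def build_feedback(breakdown, max_points):
    """Return one comment per criterion based on the share of points earned."""
    comments = []
    for name, earned in breakdown.items():
        ratio = earned / max_points[name]
        if ratio >= 0.85:
            note = "strong; keep this up"
        elif ratio >= 0.5:
            note = "developing; revise for more depth"
        else:
            note = "needs significant work; review the rubric expectations"
        comments.append(f"{name} ({earned}/{max_points[name]}): {note}")
    return comments


for line in build_feedback(
    {"thesis": 4, "evidence": 2, "clarity": 0},  # hypothetical scores
    {"thesis": 4, "evidence": 4, "clarity": 2},
):
    print(line)
```

Tying each comment to a named criterion and its points is what lets students see exactly where marks were gained or lost.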

6. Quality Assurance and Review

Before finalizing results, the system performs an internal self-check cycle to detect inconsistencies, unclear evaluations or missing rubric connections. Teachers can review all scores, override anything, or adjust feedback to maintain complete control over final grading.
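
A self-check of this kind can start with simple structural rules. The sketch below assumes a graded record shaped as a dictionary with `scores` and `feedback` keys, which is a hypothetical layout, and lists anything a teacher should look at before results are finalized.

```python
def self_check(graded, max_points):
    """Return human-readable issues found in one graded submission."""
    issues = []
    scores = graded.get("scores", {})
    feedback = graded.get("feedback", {})
    for name, limit in max_points.items():
        if name not in scores:
            issues.append(f"missing score for '{name}'")
        elif not 0 <= scores[name] <= limit:
            issues.append(f"score for '{name}' outside 0-{limit}")
        if not feedback.get(name, "").strip():
            issues.append(f"no feedback for '{name}'")
    return issues


graded = {
    "scores": {"thesis": 3, "evidence": 6},
    "feedback": {"thesis": "Clear claim."},
}
for issue in self_check(graded, {"thesis": 4, "evidence": 4, "clarity": 2}):
    print(issue)
```

An empty list means the record passed these basic checks. It does not mean the grade is correct, which is why the teacher review step still matters.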

7. Export, Share & Sync Results

Once validated, scores and feedback can be exported, downloaded or integrated back into LMS platforms. Students receive structured reports, and teachers get a complete overview of class performance, making grading transparent and trackable.
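
For the export step, a CSV file is often the lowest common denominator that gradebooks and LMS platforms can import. The sketch below uses Python's standard `csv` module. The column names are assumptions and would need to match whatever the target system expects.

```python
import csv
import io


def export_grades_csv(results):
    """Serialize graded results to CSV text for download or import."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["student_id", "total", "feedback"])
    writer.writeheader()
    for row in results:
        writer.writerow(row)
    return buffer.getvalue()


csv_text = export_grades_csv([
    {"student_id": "s-001", "total": 9, "feedback": "Strong thesis."},
    {"student_id": "s-002", "total": 6, "feedback": "Add more evidence."},
])
print(csv_text)
```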

How AI Grading Systems Reduce Instructor Grading Time by 37%

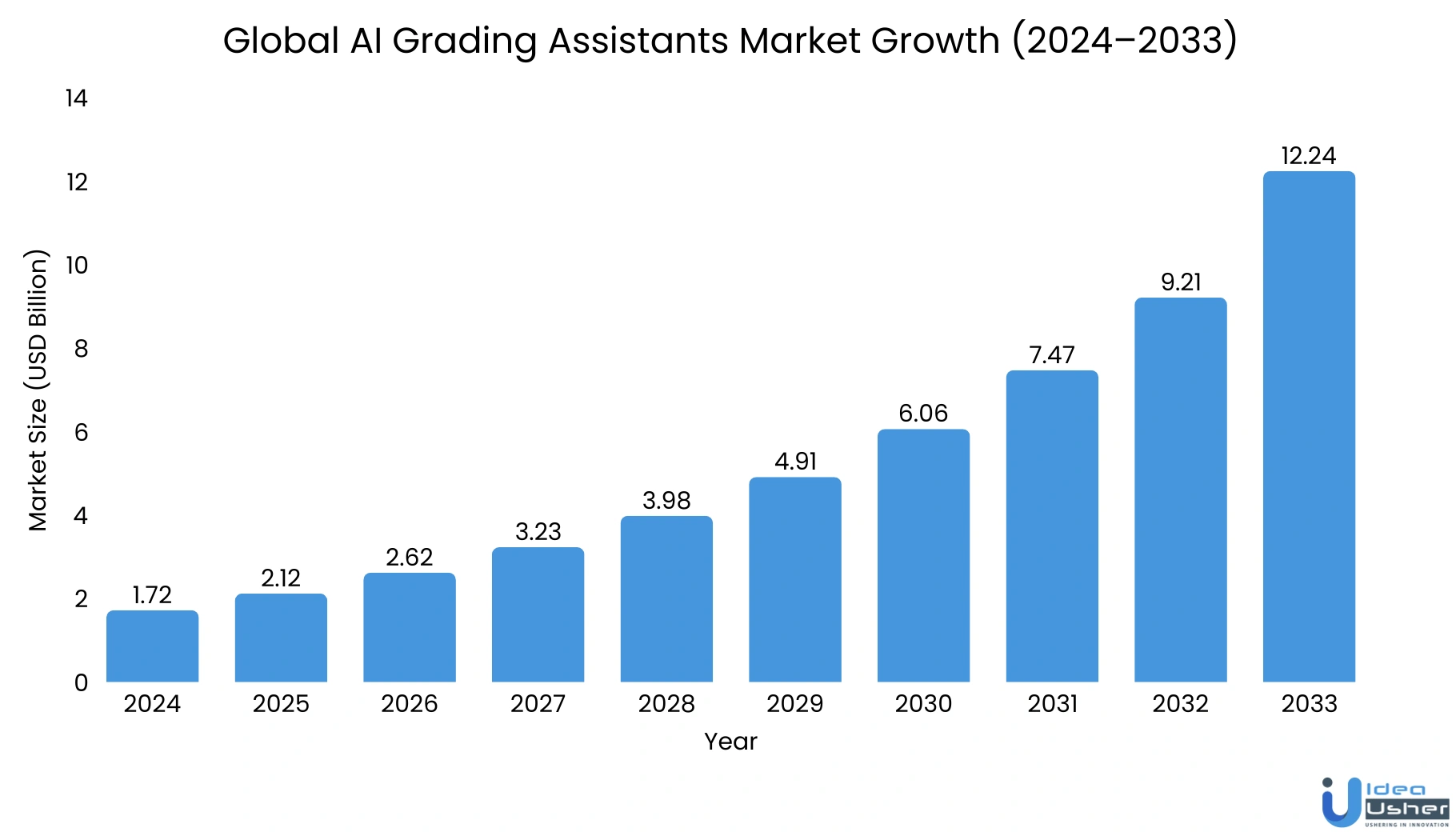

The global AI Grading Assistants market hit USD 1.72 billion in 2024 and is projected to grow at a CAGR of 22.8% to USD 12.24 billion by 2033. This expansion is driven by institutions needing to reduce faculty workloads while ensuring assessment quality, making automated grading systems essential for large-scale education.

Automation from AI grading systems has cut instructors’ average grading time by 37%. This efficiency lets educators spend more time on teaching and personalized student support.

A. Automated Assessment Processing Reduces Grading Bottlenecks

Traditional grading involves reviewing work, applying rubrics, scoring, and giving feedback, making the process slow and labor-intensive. AI grading automates these tasks with NLP and machine learning, improving speed, consistency, and accuracy.

- An estimated 72% of schools globally are expected to use AI grading systems by 2025, a sign that automated assessment is shifting from experimental technology to essential educational infrastructure and expanding the market for scalable grading platforms.

- In U.S. public schools, 48% of multiple-choice assessments are now auto-graded, showing widespread adoption and that AI grading has achieved product-market fit for high-volume objective assessments.

- Universities are embracing automation, with 63% using essay-scoring AI platforms, showing AI’s maturity to reliably evaluate complex, subjective writing with natural-language understanding.

- Advanced NLP now saves teachers up to 50% of evaluation time, freeing them from manual grading and enabling more focus on impactful instruction.

B. Consistent Quality & Reduced Stress Improve Outcomes

AI grading systems save time and ensure greater consistency than human graders, who face fatigue, bias, and inconsistent standards. This enhances fairness, reduces instructor stress, and boosts retention and satisfaction, while maintaining academic rigor.

- 68% of teachers report reduced stress from AI grading, showing that automation helps lower burnout and improves overall job satisfaction.

- AI assessment tools reduce grading errors by 40%, offering more reliable evaluations and decreasing disputes and administrative correction work.

- 20–40% of teacher time can be automated, giving educators up to 13 hours weekly for higher-impact tasks like personalized instruction and mentoring.

- One in three teachers now use AI in teaching, while seven in ten worry about cheating, increasing demand for grading systems with plagiarism detection and integrity safeguards.

AI grading systems are transforming education by significantly reducing instructor grading time, improving consistency, and lowering stress. With widespread adoption across schools and universities, these tools not only streamline assessments but also free educators to focus on personalized instruction, enhancing both teaching quality and student outcomes.

Benefits of Using an AI Grading System in Education

AI grading systems offer faster, more accurate, and consistent evaluation of student work, reducing teacher workload and administrative tasks. They also provide detailed feedback, enhancing learning outcomes and supporting personalized instruction.

1. Improved Grading Efficiency

AI grading systems significantly reduce time spent on repetitive evaluation tasks by automating rubric-based scoring and feedback generation. This efficiency helps teachers manage higher workloads and shows why such platforms gain strong adoption across modern institutions.

2. Consistent and Fair Scoring

AI tools apply the same rubric logic to every submission, reducing unintentional bias and variability between graders. This consistency creates trust in assessment quality and highlights a strong market need for solutions that offer dependable, repeatable grading outcomes.

3. Enhanced Student Feedback

AI-generated feedback provides clear, criterion-linked insights that students can act on immediately. Faster, more detailed responses improve learning outcomes, making feedback automation a highly attractive feature for platforms seeking strong institutional engagement.

4. Multi-Format Assessment Support

Modern classrooms rely on diverse assignment types, from essays to handwritten answers. AI systems that handle multi-modal evaluation add major value for educators and stand out as high-impact features when designing a commercial EdTech product.

5. Reduced Teacher Burnout

Automating high-volume grading reduces cognitive load and administrative pressure. This directly addresses one of the biggest challenges in education today, making AI grading solutions a compelling investment for schools and a strong opportunity for product builders.

Key Features to Include in an Edexia-like AI Grading System

An Edexia-like AI grading system needs powerful features that support multi-format evaluation, teacher-style calibration, and automated feedback. These features ensure accurate, scalable, and time-saving assessments that meet modern classroom needs.

1. AI-Powered Rubric-Based Scoring

The system should evaluate student work using rubric-aligned scoring logic that interprets criteria, performance indicators and academic expectations. This consistency is strengthened through criteria-parsing mechanisms and lightweight scoring inference models that minimize variation and maintain accuracy across large assessment sets.

2. Teacher-Style Adaptation

The platform must learn from teacher corrections and scoring adjustments. By applying preference-driven calibration and subtle behavioral weighting, the AI aligns its grading patterns with each educator’s standards, delivering personalized evaluations that remain stable, explainable and instructionally grounded.

3. Multi-Format Assessment Support

An advanced grading tool should handle text, diagrams, handwritten responses and structured problem-solving tasks. This is enabled through multi-modal interpretation layers and conditional input-processing pipelines that allow the AI to understand varied formats while preserving accuracy across subjects and assignment types.
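
A conditional input-processing pipeline can be as simple as routing each file to an extractor based on its type. In the sketch below both extractors are placeholders and the extension map is an assumption. A real system would plug document parsers and OCR or vision models into the same structure.

```python
from pathlib import Path


def extract_typed_text(path):
    # Placeholder: a real system would parse DOCX, PDF or plain text here.
    return f"[text extracted from {path.name}]"


def extract_handwriting(path):
    # Placeholder: a real system would call an OCR or vision model here.
    return f"[OCR output for {path.name}]"


# Hypothetical mapping from file extension to extraction routine.
EXTRACTORS = {
    ".txt": extract_typed_text,
    ".docx": extract_typed_text,
    ".pdf": extract_typed_text,
    ".png": extract_handwriting,
    ".jpg": extract_handwriting,
}


def extract(path_str):
    """Route a submission to the extractor registered for its file type."""
    path = Path(path_str)
    handler = EXTRACTORS.get(path.suffix.lower())
    if handler is None:
        raise ValueError(f"unsupported submission format: {path.suffix}")
    return handler(path)


print(extract("essay.docx"))
print(extract("worksheet_scan.png"))
```

Adding a new format then means registering one more extractor, without touching the scoring logic that runs afterwards.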

4. Automated Feedback Generation

The system generates detailed feedback for students using criterion-specific insights that highlight strengths, gaps and improvement areas. Through context-sensitive feedback modeling, it produces meaningful commentary grounded in rubric logic, reducing repetitive teacher workload while improving learner understanding.

5. Bulk Grading & Class-Wide Processing

Teachers should be able to upload entire classes of assignments for quick evaluation. A batch-processing workflow supported by parallel scoring routines ensures rapid turnaround and consistent results, helping educators manage large submission volumes without added operational strain.
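
Parallel scoring can be sketched with Python's standard `concurrent.futures` module. The `grade_one` function below is a stand-in that only counts words so the example runs without a model. In practice it would call the scoring engine, and a thread pool suits that case because most of the time is spent waiting on model or network calls.

```python
from concurrent.futures import ThreadPoolExecutor


def grade_one(submission):
    """Stand-in scorer: counts words so the example runs without a model."""
    words = len(submission["text"].split())
    return {"student_id": submission["student_id"], "score": min(words, 10)}


def grade_batch(submissions, max_workers=4):
    # executor.map preserves input order, so results line up with the roster.
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        return list(executor.map(grade_one, submissions))


class_submissions = [
    {"student_id": "s1", "text": "one two three"},
    {"student_id": "s2", "text": "a " * 20},
]
print(grade_batch(class_submissions))
```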

6. Transparent Grading Breakdown

The tool should display clear insights into how each score was determined. By offering a traceable scoring explanation backed by structured decision-mapping layers, educators can review results, verify correctness and maintain strong confidence in AI-supported grading.

7. Error Detection & Regrade Suggestions

The system can identify unclear responses, missing elements or potential misclassifications. Using self-review heuristics and subtle anomaly detection cues, it flags problematic sections for teacher review and suggests refinements that strengthen grading consistency and reduce oversight.
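
One basic anomaly cue is a total that sits far from the rest of the class. The sketch below flags such scores with a z-score test from the standard `statistics` module. The threshold of 2.0 and the sample scores are assumptions, and a flagged score is only a prompt for teacher review, not evidence of a grading error.

```python
from statistics import mean, pstdev


def flag_outliers(scores, threshold=2.0):
    """Return student IDs whose total is more than `threshold` standard
    deviations away from the class mean."""
    values = list(scores.values())
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # Every score is identical, so nothing stands out.
    return [sid for sid, s in scores.items() if abs(s - mu) / sigma > threshold]


class_scores = {"s1": 8, "s2": 7, "s3": 8, "s4": 9, "s5": 8, "s6": 1}
print(flag_outliers(class_scores))  # ['s6']
```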

8. Integrations with LMS and Classroom Tools

The platform connects seamlessly with popular LMS environments, enabling workflow-aligned interoperability through structured API-driven connections. This allows assignments, grades and feedback to move between systems smoothly without disrupting established educator routines.

How to Create an Edexia-like AI Grading System?

Developing an Edexia-like AI grading system combines adaptive AI, teacher-trained rubrics, multi-format evaluation, and automated feedback. Our structured process ensures an efficient, accurate, and scalable grading platform.

1. Consultation

We begin with a detailed consultation to understand grading challenges, institutional needs and assessment workflows. Our team gathers insights about rubric structures, evaluation patterns and academic contexts to build a clear project blueprint that aligns with real educator expectations.

2. Requirement Analysis

We analyze the required grading features, multi-format support and AI capabilities needed for accurate evaluation. Through structured requirements engineering, we outline functional modules, scoring logic and user flows that form the foundation of a reliable AI grading ecosystem.

3. UX Research and Wireframing

Our designers study how teachers review assignments and interact with grading tools. Based on these insights, we create wireframes with intuitive scoring layouts, guided steps and clear review panels that make AI-assisted grading simple and transparent for educators.

4. AI Workflow Design

We design AI workflows that manage rubric interpretation, style learning and feedback generation. This includes building criterion-aware processing pipelines that help the system read assignments, apply scoring rules and generate detailed responses aligned with instructional intent.

5. Platform Architecture Planning

Our developers structure the platform architecture to ensure scalability, modularity and high performance. We design data flow pathways and communication layers that support multi-format grading, retrieval logic and continuous model adaptation across various academic use cases.

6. Core Feature Development

We develop essential features such as rubric-based scoring, multi-format submission parsing and feedback generation. Each component is built with educator-centered logic, ensuring the system produces evaluations that feel accurate, trustworthy and aligned with real classroom standards.

7. AI Model Integration

We integrate the AI models responsible for scoring, feedback and analysis. Our team configures instruction-sensitive behavior, context protocols and calibration functions so the system grades accurately while adapting to different teaching styles and rubric expectations.

8. Retrieval and Knowledge Integration

We establish retrieval pipelines that allow the AI to access rubrics, curriculum documents and reference materials. By enabling RAG-based knowledge grounding, we ensure grading decisions remain aligned with academic criteria rather than generic model assumptions.
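
The retrieval idea can be illustrated with a toy example: rank rubric passages by word overlap with the student's answer and place the best matches in the prompt. Real RAG pipelines generally use embedding-based search rather than word overlap. The function names and sample passages here are hypothetical.

```python
def tokenize(text):
    """Lowercase words with surrounding punctuation stripped."""
    return {w.strip(".,;:!?").lower() for w in text.split()}


def retrieve(query, passages, top_k=2):
    """Rank passages by how many distinct words they share with the query."""
    q = tokenize(query)
    ranked = sorted(passages, key=lambda p: len(q & tokenize(p)), reverse=True)
    return ranked[:top_k]


def build_prompt(student_answer, passages):
    """Place the most relevant rubric passages ahead of the student's answer."""
    context = "\n".join(f"- {p}" for p in retrieve(student_answer, passages))
    return f"Rubric context:\n{context}\n\nStudent answer:\n{student_answer}"


rubric_passages = [
    "Evidence: cites at least two relevant sources.",
    "Thesis: states a clear, arguable claim.",
    "Clarity: uses organized paragraphs and transitions.",
]
print(build_prompt("The claim is clear and arguable.", rubric_passages))
```

Grounding the prompt in the teacher's own rubric text is what keeps the model's judgment tied to the stated criteria instead of its general assumptions about good writing.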

9. User Testing and Iteration

We conduct usability sessions with teachers to test the grading interface, scoring clarity and feedback quality. Using iterative refinement cycles, we adjust workflows, scoring interpretations and UI components based on real educator insights.

10. Deployment and Ongoing Support

We deploy the platform with secure infrastructure, configure grading environments and support onboarding for teachers and institutions. After launch, we provide continuous monitoring and enhancements to maintain accuracy, speed and reliability as grading demands evolve.

Cost to Build an Edexia-like AI Grading System

Building an Edexia-like AI grading system involves costs tied to AI model development, multi-format assessment capabilities, and platform scalability. Understanding these factors helps institutions and edtech companies budget effectively and plan for a high-performance grading solution.

| Development Phase | Description | Estimated Cost (USD) |
| --- | --- | --- |
| Consultation | Early discovery and project scoping with educators. | $3,000 – $6,000 |
| Requirement Analysis | Functional detailing and grading workflow mapping. | $5,000 – $9,000 |
| UX Research & Wireframing | Interface studies and grading-focused UI layouts. | $6,000 – $10,000 |
| Platform Architecture Design | Structuring systems with modular architecture planning. | $9,000 – $15,000 |
| Core Feature Development | Building main tools and scoring feature modules. | $17,000 – $30,000 |
| AI Workflow & Model Integration | Integrating AI scoring with instruction-aware logic. | $14,000 – $26,000 |
| Retrieval & Knowledge Integration | Implementing curriculum-based RAG integration. | $12,000 – $18,000 |
| QA Testing | Validation, feedback testing and usability refinement. | $6,000 – $12,000 |
| Deployment & Post-Launch Support | Launching system with continuous monitoring. | $7,000 – $19,000 |

Total Estimated Cost: $79,000 – $145,000
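
As a quick sanity check when adapting this budget, the per-phase ranges can be summed in a few lines of Python. The figures below are copied from the phase table, and nothing else is assumed.

```python
# Per-phase (low, high) estimates in USD, copied from the table above.
PHASES = {
    "Consultation": (3_000, 6_000),
    "Requirement Analysis": (5_000, 9_000),
    "UX Research & Wireframing": (6_000, 10_000),
    "Platform Architecture Design": (9_000, 15_000),
    "Core Feature Development": (17_000, 30_000),
    "AI Workflow & Model Integration": (14_000, 26_000),
    "Retrieval & Knowledge Integration": (12_000, 18_000),
    "QA Testing": (6_000, 12_000),
    "Deployment & Post-Launch Support": (7_000, 19_000),
}

low_total = sum(low for low, _ in PHASES.values())
high_total = sum(high for _, high in PHASES.values())
print(f"${low_total:,} - ${high_total:,}")  # $79,000 - $145,000
```

Dropping or rescoping a phase in the dictionary shows immediately how the overall range moves.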

Note: Development costs depend on grading complexity, AI sophistication, dataset preparation, and customization. Advanced workflow automation, multi-format assessment support, and long-term enhancements may influence the budget.

Consult with IdeaUsher to get a customized project quote and a detailed development roadmap for building a top-quality AI grading platform tailored to your educational goals and product vision.

Cost-Affecting Factors of AI Grading System Development

Several key factors influence the cost of developing an AI grading system, from feature complexity to scalability and AI model sophistication.

1. Scope & Feature Complexity

Advanced features like multi-format grading, rubric adaptation and bulk processing increase development time. Larger scopes require specialized feature engineering and deeper workflow design, which raises overall costs.

2. AI Model Depth & Accuracy Requirements

More accurate scoring models require extensive training, calibration and refinement. Higher expectations demand advanced model tuning and iterative evaluation cycles, increasing development investment.

3. Quality & Volume of Training Data

Access to clean, domain-relevant datasets impacts accuracy and cost. Preparing datasets for rubric alignment or handwriting interpretation involves data preprocessing workflows that add development effort.

4. Multi-Format Assessment Support

Handling essays, diagrams, handwritten work and math responses requires additional pipelines. Each new input type demands multi-modal processing layers that increase system complexity and development cost.

5. LMS & Third-Party Integrations

Connecting with LMS platforms or academic tools requires API handling and workflow mapping. These interoperability integrations add engineering time and influence cost estimates.

6. Compliance & Security Standards

Protecting student submissions requires privacy controls, secure storage and compliant data handling. Implementing education-grade security adds necessary but cost-increasing development layers.

Challenges & Solutions of AI Grading System Development

Developing an AI grading system comes with challenges like data accuracy, rubric alignment, and system scalability. Implementing smart algorithms, teacher calibration, and robust architectures ensures reliable, efficient, and high-quality assessment solutions.

1. Ensuring Accurate and Fair Scoring

Challenge: AI may misinterpret complex responses or fail to match rubric depth, creating inconsistencies in automated scoring across varied subjects and formats.

Solution: We improve accuracy through criterion-aware tuning, iterative teacher calibration and multi-layer validation checks, ensuring the system reliably aligns with academic expectations while maintaining consistent scoring quality across diverse submissions.

2. Handling Multi-Format Student Submissions

Challenge: Essays, handwritten answers, diagrams and math steps require different interpretation pipelines, increasing complexity in unified AI grading workflows.

Solution: We implement multi-modal processing layers with specialized extraction routines, allowing the system to handle text, visuals and handwriting accurately while maintaining structured grading logic for each format.

3. Maintaining Transparency in AI Decisions

Challenge: Teachers may struggle to trust AI scoring when explanations behind decisions are not clear or traceable.

Solution: We provide traceable scoring breakdowns, explanation layers and criterion-linked insights so educators can easily verify decisions, refine outputs and maintain strong oversight of the grading process.

4. Adapting to Individual Teacher Grading Styles

Challenge: Different educators apply unique scoring preferences, making it hard for a single model to match every teacher’s evaluation style.

Solution: We use preference-driven calibration, allowing teachers to correct initial scores. The system learns these patterns and adapts future grading to reflect their specific expectations accurately.

Revenue Models of an AI Grading System

An AI grading system can generate revenue through flexible models that fit both schools and large institutions. These approaches help platforms scale efficiently while offering affordable, value-driven assessment automation for educators.

1. Subscription-Based Model

Schools, universities or individual educators pay a recurring fee to access grading tools. This may include monthly or yearly per-teacher, per-student, or per-institution plans. Subscriptions ensure predictable, recurring revenue and allow flexible tiering based on feature depth.

2. Freemium to Premium Conversion

Users can access limited features for free, while advanced capabilities such as multi-format grading, detailed analytics or bulk submissions unlock through paid upgrades. This bottom-up adoption strategy wins over individual teachers first, then converts their institutions.

3. Enterprise Licensing for Schools and Districts

Large institutions pay for full access, administrative dashboards, custom workflows and priority support. This model offers high contract value and long-term retention because, once the platform is integrated, switching costs increase significantly for schools.

4. White-Label Solutions

EdTech platforms, LMS providers or online course companies license the AI grading engine as a white-label integration, allowing them to embed grading capabilities under their own branding. This opens new B2B revenue streams.

5. Custom Implementations

Custom rubric design, curriculum alignment, model fine-tuning or institutional onboarding can be offered as paid professional services, especially for large academic clients with specific requirements.

Conclusion

A well-planned approach to building an Edexia-like AI Grading System can help institutions streamline assessments, improve feedback quality, and reduce manual workloads. By combining reliable data pipelines, accurate scoring models, transparent evaluation logic, and strong security practices, the platform can deliver measurable value in real academic environments. As long as the development roadmap stays focused on usability, fairness, and continuous optimization, the system can grow with evolving learning needs and support educators in creating a more efficient and consistent grading experience.

Build Your AI Grading System Platform with IdeaUsher!

IdeaUsher helps EdTech companies and institutions develop intelligent grading platforms that deliver accurate evaluations, reduce teacher workload, and improve student feedback quality. We design systems that handle written responses, assignments, and test scoring with high reliability.

Why Work With Us?

- Advanced Assessment Technology: We create grading engines that analyze context, structure, and intent for fair and consistent results.

- Custom Development Approach: From rubric-based scoring to LMS integration, every element is built to match your operational needs.

- Proven EdTech Success: Our experience in AI learning platforms ensures a smooth, efficient development process from idea to launch.

- Secure Infrastructure: Your platform is built with strong data protection and scalability to support long-term growth.

Check out our portfolio to see how we have helped other businesses launch their AI solutions.

Connect with us to build a grading system that elevates assessment quality and classroom efficiency.


FAQs

How accurate is an AI grading system?

Accuracy depends on training data quality, model selection, and continuous refinement. With strong datasets, validation, and bias testing, the system can produce consistent grading results that align closely with teacher evaluations across different subjects.

What data is needed to train an AI grading system?

The system needs past graded assignments, rubrics, writing samples, and structured scoring guidelines. This data helps train the model to evaluate patterns, reasoning, and performance, enabling it to deliver fair and consistent grading outputs.

Can an AI grading system evaluate multiple subjects?

Yes, it can evaluate multiple subjects by training models on subject-specific datasets. Additional layers can be added for writing-based tasks, quantitative assignments, or conceptual responses, ensuring accurate grading across varied academic areas.

How can schools deploy an AI grading system?

Schools can deploy the system through cloud integration, LMS connectivity, user authentication, and custom grading workflows. Training sessions help teachers review outputs and maintain control over final grading decisions.