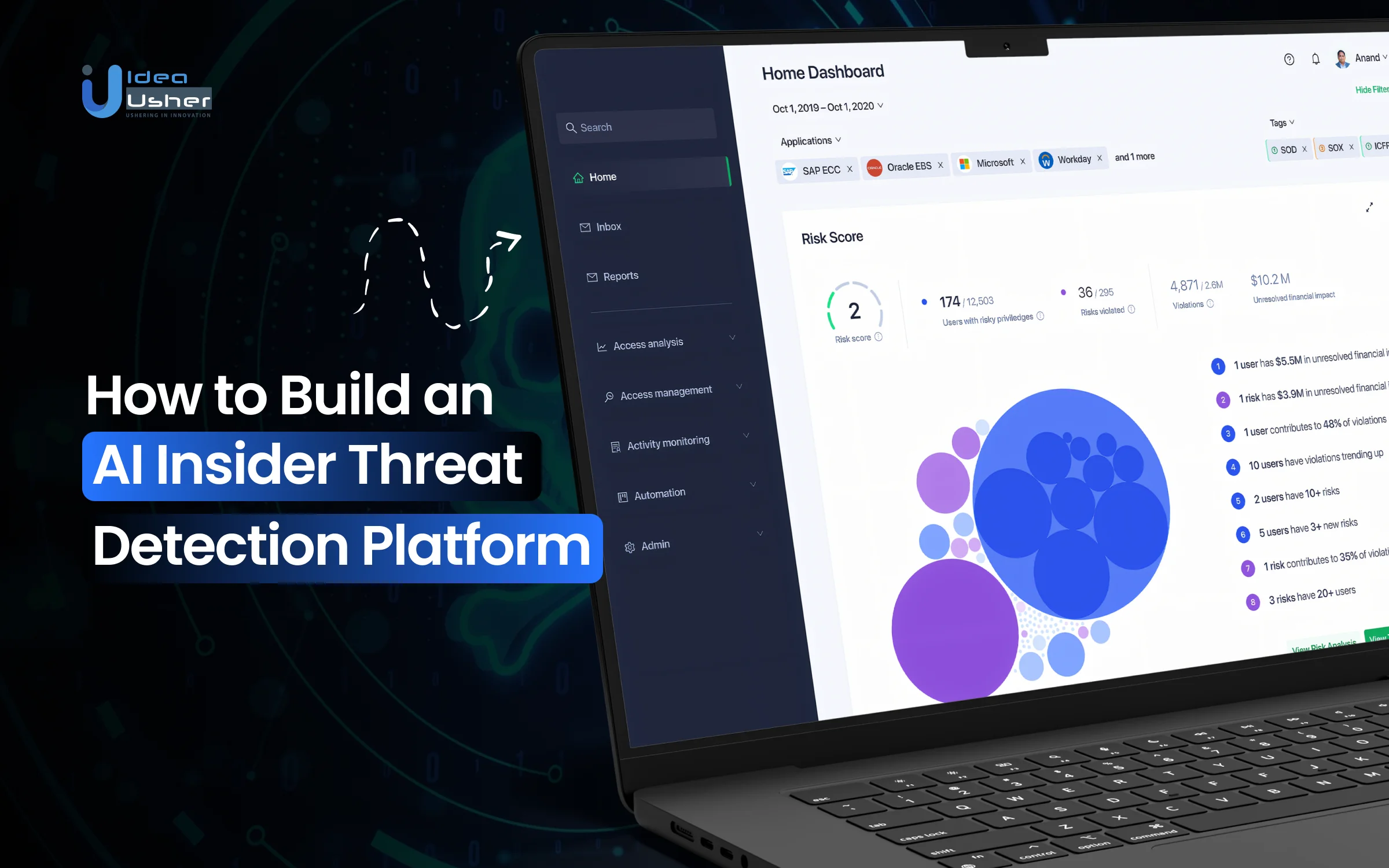

Not all security threats come from outside an organization. Employees, contractors, and trusted users already have system access, making insider risks harder to detect. Unusual behavior, accidental misuse, or malicious intent can cause damage that goes unnoticed. This challenge drives organizations to adopt AI insider threat detection platforms that continuously monitor behavior and uncover risks hidden within normal operations.

AI-driven insider threat tools analyze behavioral patterns instead of rigid rules. By monitoring user activity, access behavior, data movement, and context over time, they detect subtle risks. Machine learning reduces false alerts, adapts to role changes, and delivers actionable insights without disrupting daily operations.

In this blog, we’ll explore how to build an AI-powered insider threat detection platform, the key features it should include, and the technology behind intelligent behavior monitoring. This guide will give you a clear roadmap for creating a proactive and scalable insider threat detection solution.

What is an AI Insider Threat Detection Platform?

An AI Insider Threat Detection Platform uses machine learning and behavioral analytics to identify risky or abnormal actions by employees, contractors, or accounts. It continuously learns normal user behavior across systems and channels, flagging deviations that indicate data misuse, sabotage, policy violations, or credential compromise.

It is a high-value, next-generation security solution built to catch internal risks that traditional tools miss. Using UEBA-driven behavioral intelligence, it delivers scalable automation, real-time visibility and a lighter investigation workload across modern cloud and hybrid environments.

- UEBA-powered digital fingerprinting builds multi-dimensional identity profiles using activity entropy, access rhythms, and device posture to expose deviations missed by traditional log analysis.

- Sequence-aware UEBA modeling analyzes actions as behavioral chains, detecting multi-step insider activity like staged data collection, lateral movement setup, or subtle policy bypassing.

- Contextual privilege drift analytics track long-term access usage to identify incremental misuse, abnormal entitlement expansion, and hidden escalations via compromised internal accounts.

- Adaptive UEBA intent inference evaluates linguistic cues, urgency signals, and workflow context to separate accidental misuse from high-risk malicious insider intent.

- High-resolution UEBA telemetry monitors micro-level file interactions, transfer velocity, and aggregation patterns to uncover slow data exfiltration disguised as normal activity.

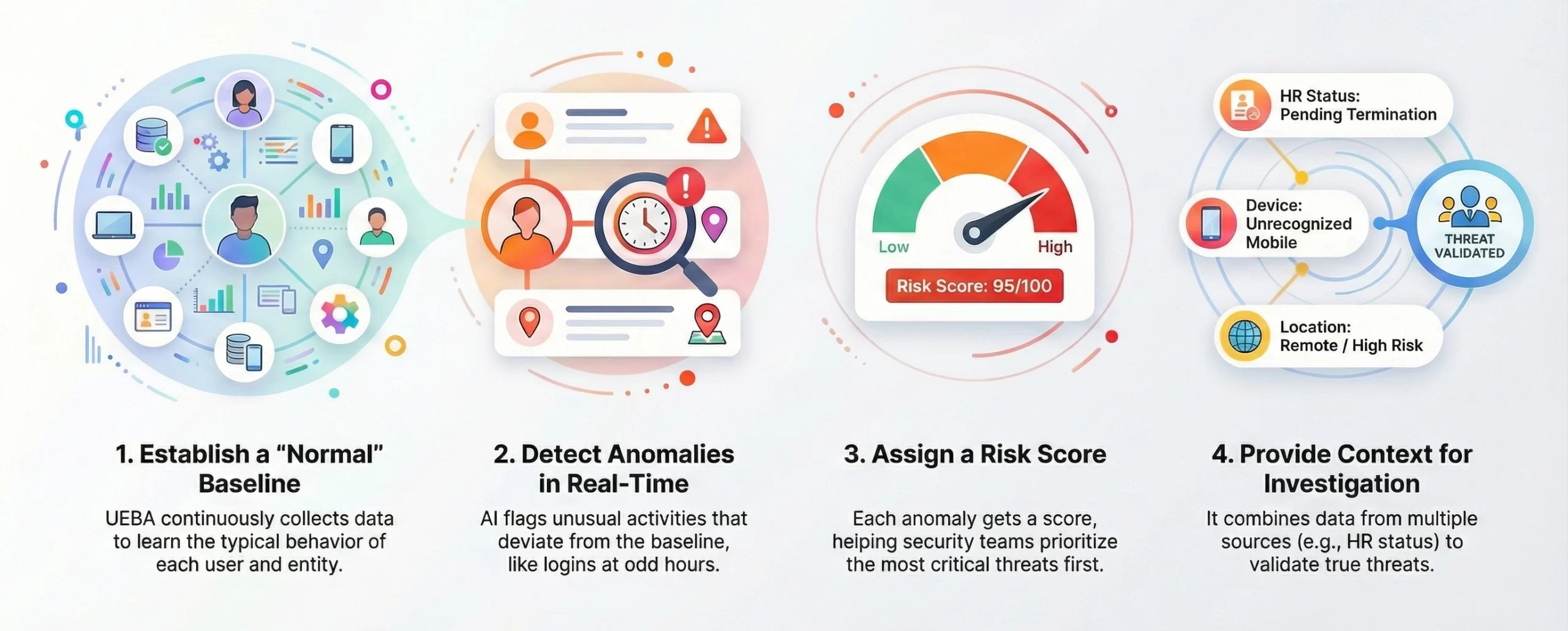

The Role of UEBA in AI Insider Threat Detection

UEBA (User and Entity Behavior Analytics) is a cybersecurity approach that uses machine learning and behavioral analytics to build a baseline of “normal” activity for every user and non-human entity (such as servers or applications) within an organization’s network. It then flags significant deviations from these baselines as potential security threats.

1. Behavioral Baselining

UEBA systems continuously collect data from various sources (logs, network traffic, access data, HR systems) to establish normal patterns for each user and peer group. This dynamic learning is driven by AI and ML algorithms.

2. Anomaly Detection

Instead of relying on predefined rules (which subtle threats can bypass), UEBA uses AI to spot unusual activity in real time, such as a user accessing data outside their normal work hours, logging in from an unusual location, or downloading an abnormally high volume of data.

3. Insider Threat Identification

It is particularly effective at identifying malicious and negligent insiders, whose actions drift from their own established patterns. It also catches external attackers using compromised credentials, who rarely behave exactly like the legitimate account owner.

4. Risk Scoring

UEBA assigns a risk score to anomalous activities. This helps security teams prioritize the most critical threats and reduces the “alert fatigue” often associated with traditional security information and event management (SIEM) systems.

5. Contextual Analysis

By correlating data points across different systems (e.g., combining a user’s access logs with HR status such as a recent resignation), UEBA provides valuable context for investigations. This helps analysts tell a true threat apart from a benign but unusual event.

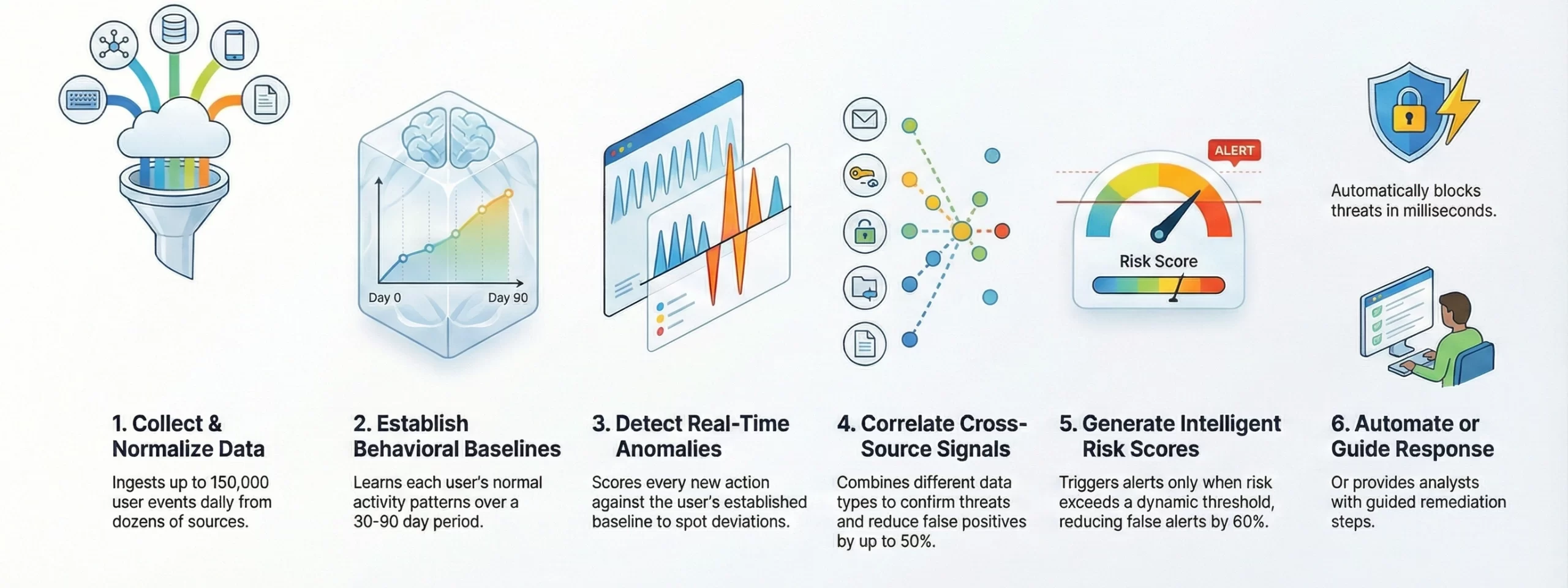

How Does an AI Insider Threat Detection Platform Work?

An AI insider threat detection platform analyzes user behavior, correlates activity signals and identifies risky deviations. To show how such a system works behind the scenes, the walkthrough below uses a hypothetical platform, “SentinelGuard AI.” The volumes and percentages quoted are illustrative examples, not benchmarks.

1. Collects and Normalizes User Activity Data

The platform begins by ingesting large streams of user activity signals to establish a clean, structured foundation for behavioral analysis.

- A system like SentinelGuard AI processes 50,000 to 150,000 user events per day across endpoints, SaaS apps, identity systems and file servers.

- Logs from 20 to 40 different sources are converted into a uniform event schema to eliminate format inconsistencies.

- Noise filters remove up to 18% redundant or low-quality events that would otherwise distort baseline models.

- Each event is enriched with tags such as geo-data, device ID, session token and privilege level, with timestamps accurate to within ±5 ms.
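Here is a minimal Python sketch of the normalization step. The two source formats (`from_idp`, `from_fileserver`), their field names and the `Event` schema are hypothetical stand-ins, not the schema of any real product. The noise filter only drops malformed records, unknown sources and verbatim duplicates, which is a much simpler rule than whatever would produce the 18% figure above.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Optional


@dataclass(frozen=True)
class Event:
    """Uniform event schema; field names are illustrative assumptions."""
    user: str
    action: str
    source: str
    timestamp: datetime
    device_id: Optional[str] = None
    geo: Optional[str] = None
    bytes_moved: int = 0


def from_idp(raw: dict) -> Event:
    # Hypothetical identity-provider record: epoch seconds, one record per login.
    return Event(
        user=raw["username"].lower(),
        action="login",
        source="idp",
        timestamp=datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
        device_id=raw.get("device"),
        geo=raw.get("country"),
    )


def from_fileserver(raw: dict) -> Event:
    # Hypothetical file-server record: ISO-8601 time string and a byte count.
    ts = datetime.fromisoformat(raw["time"])
    if ts.tzinfo is None:
        ts = ts.replace(tzinfo=timezone.utc)  # assume UTC when no offset is given
    return Event(
        user=raw["user_id"].lower(),
        action=raw["op"],
        source="fileserver",
        timestamp=ts,
        device_id=raw.get("host"),
        bytes_moved=int(raw.get("size", 0)),
    )


PARSERS: dict[str, Callable[[dict], Event]] = {"idp": from_idp, "fileserver": from_fileserver}


def normalize(batch: list[tuple[str, dict]]) -> list[Event]:
    """Map raw records onto Event, drop noise, and return them in time order."""
    seen: set[Event] = set()
    events: list[Event] = []
    for source, raw in batch:
        parser = PARSERS.get(source)
        if parser is None:
            continue  # unknown source: no parser registered
        try:
            event = parser(raw)
        except (KeyError, ValueError, TypeError):
            continue  # malformed record
        if event in seen:
            continue  # verbatim duplicate (e.g. a re-delivered log line)
        seen.add(event)
        events.append(event)
    return sorted(events, key=lambda e: e.timestamp)
```

Lowercasing user names at parse time lets the identity-provider and file-server records for the same person join on `user` later in the pipeline.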

2. Builds Behavioral Baselines for Every User and Entity

Next, the platform studies historical activity patterns to establish individualized behavioral fingerprints.

- Baselines are trained over 30 to 90 days of user history, covering typical access times, file interaction rates and privilege usage patterns.

- The system analyzes 5,000 to 50,000 events per user per month, depending on job role and tool usage.

- Behavioral clusters place users into dynamic peer groups, improving detection accuracy for specialized roles like finance or DevOps.

- Outlier activity under 2% frequency is tracked separately to avoid corrupting normal behavior models while still enabling rare-pattern detection.
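A per-user baseline can start as plain summary statistics. The sketch below assumes daily file-interaction counts and login hours have already been extracted per user. It records the 95th and 99th percentiles of daily activity and sets aside login hours seen in under 2% of logins, following the bullets above. `build_baseline` and `Baseline` are illustrative names, and peer-group clustering is left out.

```python
from collections import Counter
from dataclasses import dataclass, field
from statistics import quantiles

RARE_FREQUENCY = 0.02  # hours seen in under 2% of logins are tracked separately


@dataclass
class Baseline:
    p95_daily_files: float
    p99_daily_files: float
    usual_hours: set[int]
    rare_hours: set[int] = field(default_factory=set)


def build_baseline(daily_file_counts: list[int], login_hours: list[int],
                   min_days: int = 30) -> Baseline:
    """Summarize one user's history into a behavioral baseline (sketch)."""
    if len(daily_file_counts) < min_days:
        raise ValueError(f"need at least {min_days} days of history")
    if not login_hours:
        raise ValueError("need at least one login")
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile,
    # index 98 the 99th. "inclusive" keeps cut points within the observed range.
    cuts = quantiles(daily_file_counts, n=100, method="inclusive")
    freq = Counter(login_hours)
    total = len(login_hours)
    usual = {hour for hour, count in freq.items() if count / total >= RARE_FREQUENCY}
    rare = set(freq) - usual  # kept apart so rare hours don't dilute "normal"
    return Baseline(cuts[94], cuts[98], usual, rare)
```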

3. Applies Real-Time Anomaly Detection Models

As new activity streams in, the AI evaluates and scores every action in real time.

- Event processing pipelines operate at 200 to 600 events per second, supporting enterprise-scale workloads.

- Sequence models track behavior chains of 4 to 10 steps and flag abnormal sequences, catching multi-stage insider actions.

- Statistical deviation checks identify spikes beyond the user’s 95th to 99th percentile activity range.

- Anomaly classifiers weigh combinations of signals, such as off-hours access plus an unusual device plus atypical data volume. Such combinations can raise the risk score by 3x to 12x.
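The percentile check and the signal-combination rule can be sketched as follows. The multipliers are assumptions chosen so that combinations land in the 3x to 12x range quoted above; they are not calibrated values. `evaluate` is a hypothetical helper whose inputs would come from a baseline like the one built in step 2.

```python
from dataclasses import dataclass

# Illustrative multipliers (assumptions, not tuned values). All three signals
# together give 2 * 2 * 3 = 12x; two mild signals give 2 * 1.5 = 3x.
OFF_HOURS_FACTOR = 2.0
NEW_DEVICE_FACTOR = 2.0
ABOVE_P95_FACTOR = 1.5
ABOVE_P99_FACTOR = 3.0


@dataclass
class Signals:
    off_hours: bool
    new_device: bool
    volume_factor: float


def evaluate(files_today: int, login_hour: int, device_id: str, *,
             p95: float, p99: float, usual_hours: set[int],
             known_devices: set[str]) -> tuple[float, Signals]:
    """Return a risk multiplier for one user-day plus the signals behind it."""
    # Statistical deviation check against the user's own percentile range.
    if files_today > p99:
        volume = ABOVE_P99_FACTOR
    elif files_today > p95:
        volume = ABOVE_P95_FACTOR
    else:
        volume = 1.0
    signals = Signals(
        off_hours=login_hour not in usual_hours,
        new_device=device_id not in known_devices,
        volume_factor=volume,
    )
    # Signals compound multiplicatively, so combinations outweigh single anomalies.
    multiplier = volume
    if signals.off_hours:
        multiplier *= OFF_HOURS_FACTOR
    if signals.new_device:
        multiplier *= NEW_DEVICE_FACTOR
    return multiplier, signals
```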

4. Correlates Multi-Source Signals for Contextual Understanding

To reduce false positives, the platform correlates signals across identity, device, file and communication systems.

- SentinelGuard AI merges up to 15 different signal types, including authentication logs, endpoint telemetry, email metadata and network flow records.

- Correlation logic detects sequences such as odd login locations within 10 minutes of large data access attempts.

- Device posture mismatches (OS version, IP change, security agent status) raise contextual risk scores by 20% to 40%.

- Cross-source evidence is required before marking events as high-risk, reducing alert noise by 30% to 50%.
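Here is a minimal sketch of the time-window correlation and the posture adjustment, assuming logins and file accesses arrive as simple tuples. The 500 MB "large access" cut-off and the 20% per-mismatch increase (capped at 40%) are illustrative choices within the ranges above. At enterprise volume, this join would more likely run as a windowed operation in a stream processor than as in-memory loops.

```python
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=10)
LARGE_ACCESS_BYTES = 500_000_000  # "large" cut-off: an assumption

Login = tuple[str, datetime, bool]  # (user, time, unusual_location)
Access = tuple[str, datetime, int]  # (user, time, bytes)


def correlate(logins: list[Login],
              accesses: list[Access]) -> list[tuple[str, datetime, datetime, int]]:
    """Pair unusual-location logins with large data access by the same user
    that occurs within WINDOW after the login."""
    by_user: dict[str, list[Access]] = {}
    for access in accesses:
        by_user.setdefault(access[0], []).append(access)
    findings = []
    for user, login_time, unusual in logins:
        if not unusual:
            continue  # ordinary-location login: nothing to correlate
        for _, access_time, size in by_user.get(user, []):
            if size >= LARGE_ACCESS_BYTES and timedelta(0) <= access_time - login_time <= WINDOW:
                findings.append((user, login_time, access_time, size))
    return findings


def adjust_for_posture(score: float, mismatches: int) -> float:
    """Raise a contextual score by 20% per posture mismatch, capped at +40%."""
    return score * (1 + min(0.2 * mismatches, 0.4))
```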

5. Generates Intelligent Risk Scores and Alerts

Each anomaly is ranked using models that consider intent, context and organizational sensitivity.

- Risk scores combine severity indicators, data sensitivity labels, baseline deviation metrics and privilege context into a single evaluation.

- Alerts trigger only when risk exceeds a dynamic threshold optimized per user, reducing false alerts by 40% to 60%.

- High-risk alerts bundle evidence such as timestamps, related sessions, device fingerprints and behavior-chain snapshots.

- Low-risk anomalies are retained as training signals, improving long-term detection accuracy by 5% to 12% each month.
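One way to express the combined score and the per-user threshold is sketched below. The factor weights, the `k = 3` standard-deviation rule, and the 60/95 floor and ceiling are all assumptions, not values from a specific product. Each factor is expected to be pre-scaled to the range 0 to 1.

```python
from statistics import mean, pstdev

# Factor weights are illustrative assumptions; each factor is pre-scaled to [0, 1].
WEIGHTS = {"severity": 0.30, "sensitivity": 0.30, "deviation": 0.25, "privilege": 0.15}


def risk_score(factors: dict[str, float]) -> float:
    """Combine severity, data sensitivity, baseline deviation and privilege
    context into a single 0-100 score."""
    for name in WEIGHTS:
        value = factors[name]
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    return 100.0 * sum(weight * factors[name] for name, weight in WEIGHTS.items())


def dynamic_threshold(recent_scores: list[float], k: float = 3.0,
                      floor: float = 60.0, ceiling: float = 95.0) -> float:
    """Per-user threshold: mean + k standard deviations of the user's recent
    scores, clamped between floor and ceiling."""
    if len(recent_scores) < 2:
        return floor
    return min(ceiling, max(floor, mean(recent_scores) + k * pstdev(recent_scores)))


def should_alert(score: float, recent_scores: list[float]) -> bool:
    return score > dynamic_threshold(recent_scores)
```

The ceiling keeps very noisy users alertable. Without it, a user whose recent scores swing widely could push their own threshold above 100 and never trigger an alert.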

6. Automates Response Actions or Guides Security Teams

Finally, the platform initiates automated defenses or assists analysts with guided remediation steps.

- Automated controls can suspend accounts, block file transfers or terminate sessions within 300 to 800 milliseconds after detection.

- Guided workflows provide analysts with action suggestions, data lineage, impacted assets and recommended containment paths.

- Feedback loops incorporate analyst decisions, improving model precision by 8% to 15% in subsequent weeks.

- Post-incident insights are integrated into baseline recalibration cycles to strengthen the detection of similar threats in the future.
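The response logic can be sketched as a small policy object. The action names are placeholder strings standing in for whatever IAM or EDR calls a real deployment would make. The thresholds and the 5-point feedback step are assumptions. The feedback loop here simply raises a user's thresholds after a confirmed false positive and lowers them again after a confirmed true positive.

```python
from dataclasses import dataclass, field


@dataclass
class ResponseEngine:
    """Maps risk scores to containment actions and adapts to analyst verdicts (sketch)."""
    block_at: float = 75.0
    suspend_at: float = 90.0
    step: float = 5.0  # how far one verdict moves that user's thresholds
    user_offsets: dict[str, float] = field(default_factory=dict)

    def decide(self, user: str, score: float) -> list[str]:
        # Placeholder action names; a real system would call IAM/EDR APIs here.
        offset = self.user_offsets.get(user, 0.0)
        if score >= self.suspend_at + offset:
            return ["terminate_session", "suspend_account", "open_incident"]
        if score >= self.block_at + offset:
            return ["block_file_transfer", "open_incident"]
        return ["queue_for_analyst_review"]

    def record_verdict(self, user: str, false_positive: bool) -> None:
        # Analyst feedback: back off after false positives, tighten after true ones.
        current = self.user_offsets.get(user, 0.0)
        if false_positive:
            self.user_offsets[user] = current + self.step
        else:
            self.user_offsets[user] = max(0.0, current - self.step)
```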

Why Insider Attacks at 83% of Organizations Demand AI Detection

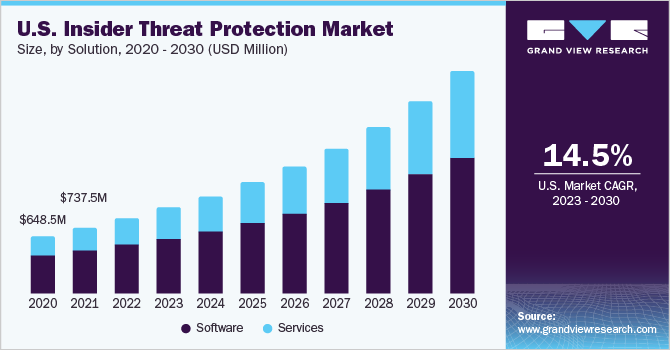

The insider threat landscape is expanding rapidly. The global insider threat protection market is projected to reach approximately USD 6.16 billion by 2025 and USD 13.75 billion by 2030, growing at a 17.4% CAGR. Advanced AI-driven detection is becoming essential for identifying covert threats and preventing costly security breaches.
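As a quick consistency check on these market figures, compounding the 2025 value at the stated CAGR for five years gives:

```latex
V_{2030} = V_{2025}\,(1 + r)^{5} = 6.16 \times 1.174^{5} \approx 6.16 \times 2.230 \approx 13.74 \quad \text{(USD billion)}
```

This matches the projected USD 13.75 billion to within rounding.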

IBM Security’s 2024 report revealed that 83% of organizations experienced at least one insider attack in the past year, and 21% reported 11–20 incidents per year. These figures show that insider attacks are widespread and frequent, making AI-powered detection platforms critical for enterprise security.

A. Insider Attacks Are Escalating Faster Than Ever

Insider threats are rising in frequency and cost. Cybersecurity Insiders reports 60% of organizations face over 30 insider incidents yearly, each costing about $755,760. This underscores the need for AI-powered detection systems to manage high-volume insider activity.

- At about $755,760 per incident, an organization facing 30 incidents per year could lose roughly $22.7 million annually (30 × $755,760 ≈ $22.67 million) from insider threats alone.

- The average annual cost of insider incidents reached $17.4 million, a 44% increase over the past two years.

- 67% of organizations experience 21–40+ incidents annually, up from 53%, showing that insider threats are constant, recurring events.

- Manual monitoring cannot scale to the volume of insider incidents modern enterprises face.

- Insider incidents often originate from trusted accounts, bypassing traditional access controls and perimeter defenses.

B. Why AI-Based Insider Threat Detection Is Essential

Insider threats are notoriously difficult to detect and slow to contain. 63% of organizations report that insider threats are more challenging to detect than external attacks, highlighting the need for intelligent, behavior-based monitoring systems.

- The average time to identify and contain insider breaches is 292 days, allowing insiders months to exfiltrate data or commit fraud.

- Only 24% of organizations have dedicated insider threat programs, leaving 76% exposed to unmanaged internal risks.

- AI-powered detection with User Behavior Analytics can detect threats 50% faster, significantly reducing potential damage.

- 46% of organizations plan to increase investment in insider risk programs, signaling urgent market demand.

- Vendors such as Nightfall report 4× fewer false positives and a 4× lower total cost of ownership, improving efficiency for security teams.

Insider threats are frequent, costly, and increasingly complex, making traditional security measures insufficient. AI-powered insider threat detection platforms offer faster detection, fewer false positives, continuous monitoring, and measurable ROI. With threats escalating across organizations globally, implementing AI-driven solutions is essential for protecting assets, preventing financial losses, and staying ahead of evolving insider risks.

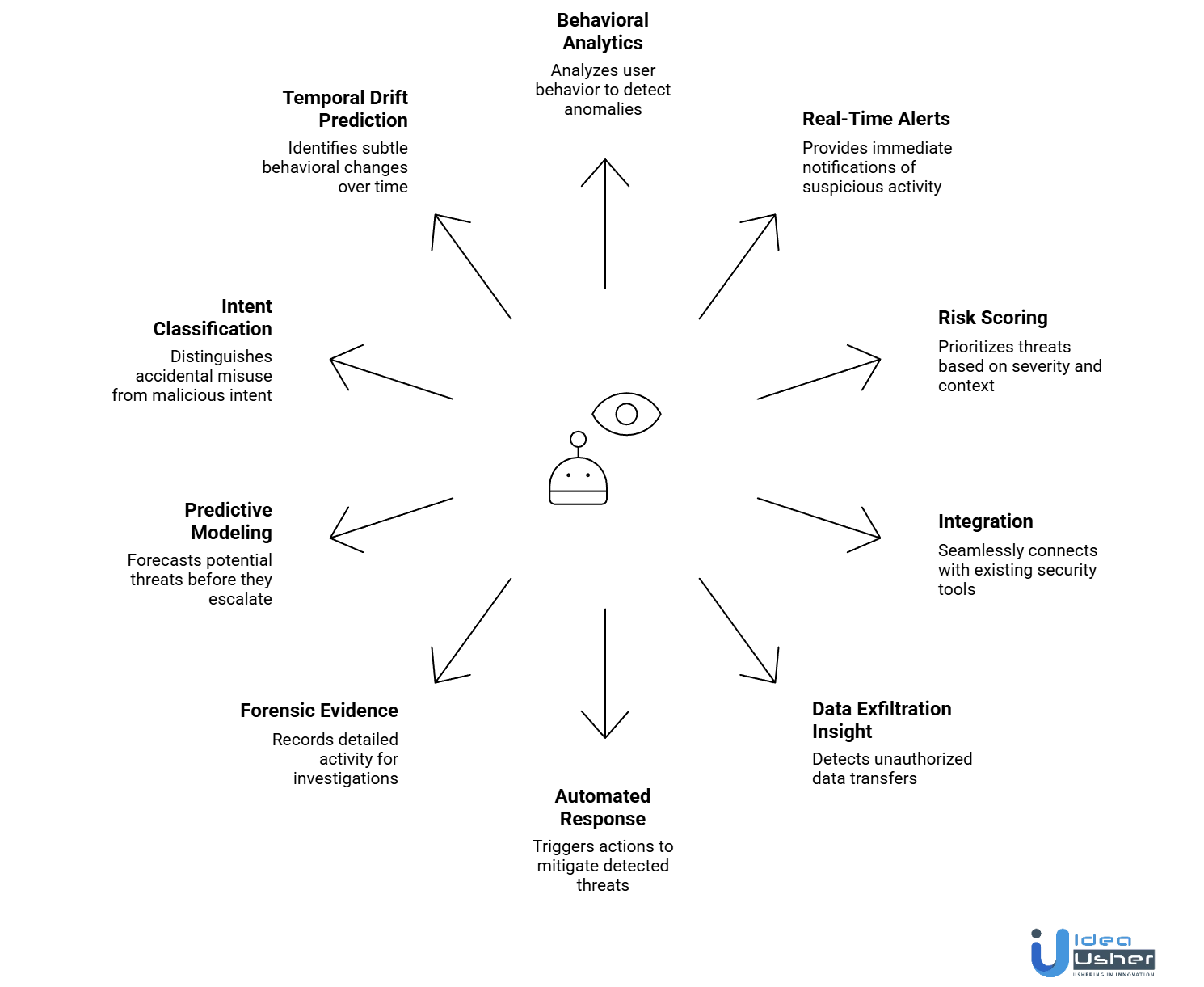

Key Features of an AI Insider Threat Detection Platform

An AI Insider Threat Detection Platform leverages advanced machine learning to identify risky behavior, prevent data breaches, and protect organizational assets. These are the key features shaping modern cybersecurity solutions.

1. Behavioral Analytics & User Profiling

An AI insider threat detection platform builds dynamic behavioral profiles for every user by analyzing access frequency, device patterns, data usage rhythm and login behavior. These baselines help detect subtle deviations that traditional rule-based tools overlook, especially during multi-step or low-signal insider activity.

2. Real-Time Anomaly Detection & Alerts

The system continuously evaluates live activity streams using machine learning and sequence analysis, flagging deviations such as off-hours logins, unusual file interaction velocity or abnormal privilege usage. Real-time alerts give security teams immediate visibility into emerging insider risks.

3. Risk Scoring & Threat Prioritization

Each anomaly is evaluated through contextual risk scoring, combining factors like role sensitivity, behavioral deviation severity, asset importance and access context. This prioritization helps analysts focus on the highest-impact insider threats while significantly reducing alert fatigue.

4. Integration With Existing Security Infrastructure

Advanced platforms integrate seamlessly with SIEM, IAM, EDR and cloud security tools. This cross-system correlation enriches detection with identity context, device posture signals and network behavior, ensuring a unified view of insider activity across the entire environment.

5. Data Exfiltration Insight & DLP-Aware Monitoring

With embedded analytics for data movement patterns, the platform can detect suspicious transfers, cloud uploads, bulk file access spikes or stealthy exfiltration attempts. These DLP-aware signals strengthen internal safeguards against unauthorized data leakage or misuse.

6. Automated Response & Guided Remediation

When high-risk behavior is detected, the system can trigger automated defensive actions such as privilege restriction, session termination or file transfer blocking. Guided remediation workflows support analysts with actionable context, improving containment speed and accuracy.

7. Forensic Evidence & Comprehensive Audit Trails

The platform records detailed activity trails, capturing timestamps, sequence flows and contextual metadata. These forensic logs support investigations, compliance reporting and post-incident analysis by providing verifiable evidence of all significant user actions.

8. Predictive Modeling for Early Threat Anticipation

Advanced AI models examine long-term behavioral shifts to predict emerging insider risks before they escalate. By identifying early indicators of privilege misuse, data staging or compromised account activity, the system enables proactive intervention rather than reactive containment.

9. AI-Driven Insider Intent Classification Model

Beyond detecting anomalies, this model evaluates motivational indicators like sentiment shifts, workflow disruption patterns, privilege escalation context, and data aggregation velocity. It classifies user action intent, enabling organizations to distinguish accidental misuse from high-risk, malicious insider behavior with greater precision.

10. Temporal Behavior Drift Prediction Engine

This engine analyzes long-term behavioral evolution across weeks or months, identifying subtle drift patterns that precede insider incidents. By forecasting deviations before they turn into risky actions, the platform enables preemptive intervention and shortens the window in which insider threats can escalate unnoticed.
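Drift between a long baseline window and a recent window can be measured with the Population Stability Index (PSI), a standard distribution-shift metric used here for illustration. This sketch measures drift; it does not forecast it. A forecasting engine could consume such scores over time. The 0.1 and 0.25 cut-offs are a commonly cited rule of thumb, and bucketing login hours into four parts of the day is an assumption.

```python
import math
from collections import Counter

EPSILON = 1e-4  # floor for empty bins so the log term stays finite


def shares(values: list[int], bins: list[int]) -> list[float]:
    """Fraction of values falling in each bin, floored at EPSILON."""
    counts = Counter(values)
    return [max(counts.get(b, 0) / len(values), EPSILON) for b in bins]


def psi(baseline: list[int], recent: list[int], bins: list[int]) -> float:
    """Population Stability Index between two windows of a categorical feature."""
    expected = shares(baseline, bins)
    actual = shares(recent, bins)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_level(value: float) -> str:
    # Commonly cited rule-of-thumb cut-offs; tune per feature in practice.
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate"
    return "significant"
```

For login times, each value would be `hour // 6` (0 = night, 1 = morning, 2 = afternoon, 3 = evening), so a user who shifts work into the night bucket produces a large PSI even if total activity volume is unchanged.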

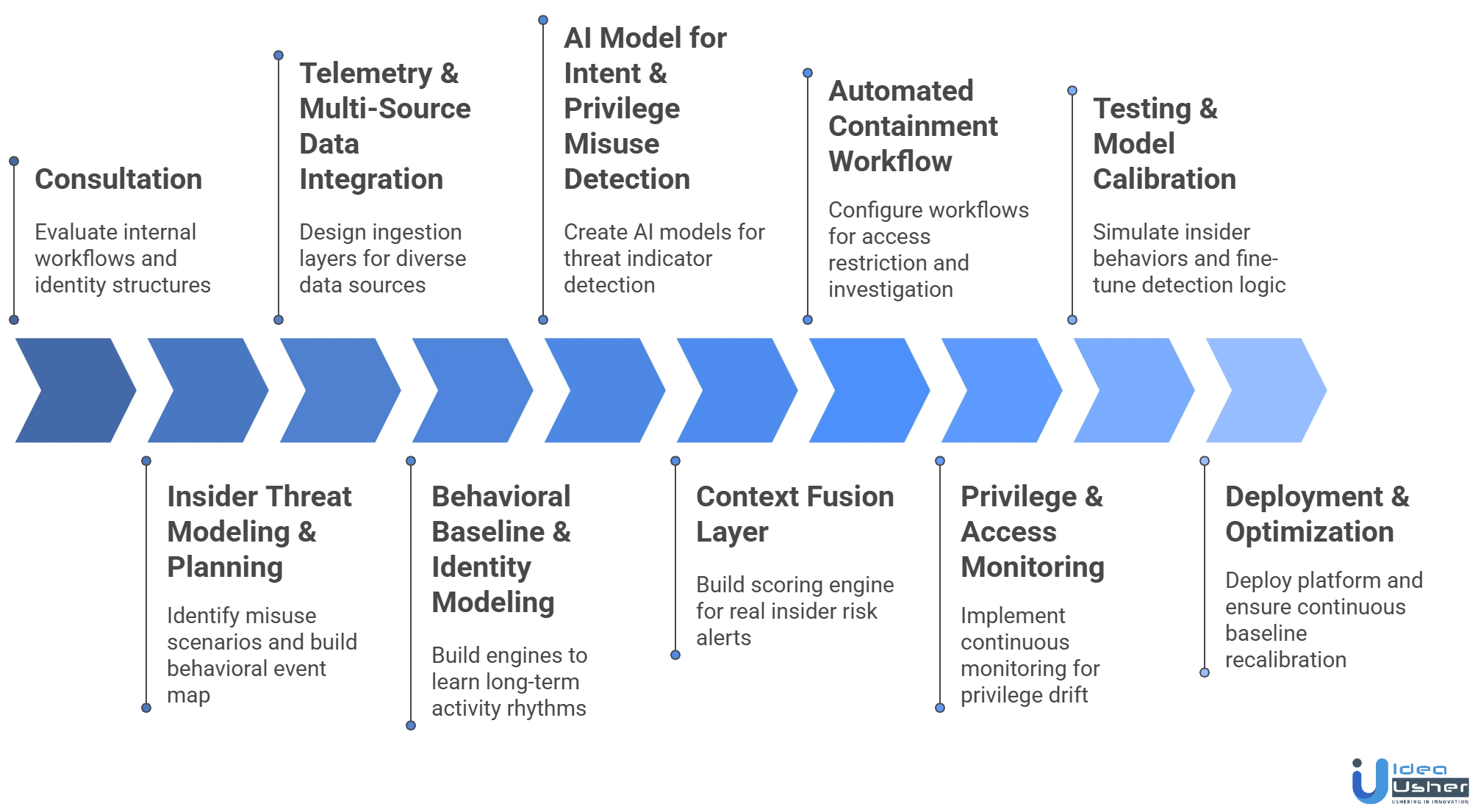

How to Build an AI Insider Threat Detection Platform?

Building an AI Insider Threat Detection Platform involves integrating machine learning, behavior analytics, and real-time monitoring to proactively identify risks. Our developers follow a structured set of steps and strategies to develop an effective security solution.

1. Consultation

We begin by evaluating internal workflows, identity structures and high-value assets. Our developers study access patterns, user roles and data exposure paths to create a risk-aligned development plan that reflects real organizational behavior and insider threat realities.

2. Insider Threat Modeling & Planning

Our team identifies misuse scenarios such as privilege escalation, data staging, unauthorized transfers and covert account manipulation. We build a behavioral event map that defines which user actions, access contexts and system events must be captured for accurate UEBA-driven detection.

3. Telemetry & Multi-Source Data Integration

We design ingestion layers that capture signals from endpoints, SaaS applications, IAM tools, network logs and collaboration systems. This enables the construction of a multi-channel behavioral graph that reflects how users interact with data, devices and internal systems in real environments.

4. Behavioral & Identity Modeling Framework

Our developers build engines that learn long-term activity rhythms such as access frequency, privilege usage entropy, session continuity and data movement velocity. This identity-centric modeling allows the system to detect deviations that indicate potential insider risk or subtle credential compromise.

5. AI Model for Intent & Privilege Misuse Detection

We create AI models that interpret multi-step behavior sequences, contextual misalignments and unusual privilege behaviors. The system applies intent inference logic, escalation pattern detection and drift analysis to uncover threat indicators that cannot be identified by rule-based monitoring tools.
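As one simple, learnable stand-in for sequence modeling, a first-order Markov chain trained on a user's historical sessions can score how surprising a new chain of actions is. This is our illustrative choice, not a description of a specific production model. The action names are hypothetical, and heavier sequence models could replace it behind the same interface.

```python
import math
from collections import Counter, defaultdict


def train_transitions(sessions: list[list[str]]) -> dict[str, Counter]:
    """Count action-to-action transitions across historical sessions."""
    model: dict[str, Counter] = defaultdict(Counter)
    for actions in sessions:
        for prev, nxt in zip(actions, actions[1:]):
            model[prev][nxt] += 1
    return model


def chain_surprise(model: dict[str, Counter], actions: list[str],
                   vocab_size: int, alpha: float = 1.0) -> float:
    """Mean negative log-probability per transition, with add-alpha smoothing
    so unseen transitions get a small non-zero probability. Higher = rarer."""
    if len(actions) < 2:
        return 0.0
    total = 0.0
    for prev, nxt in zip(actions, actions[1:]):
        counts = model.get(prev, Counter())  # .get avoids inserting new keys
        prob = (counts[nxt] + alpha) / (sum(counts.values()) + alpha * vocab_size)
        total -= math.log(prob)
    return total / (len(actions) - 1)
```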

6. Context Fusion Layer Development

We build a scoring engine that merges deviation magnitude, identity trust level, resource sensitivity and privilege context. Our context fusion layer ensures alerts reflect real insider risk rather than isolated anomalies lacking operational or behavioral significance.

7. Automated Containment Workflow

Our developers configure automated workflows that restrict access, block sensitive transfers or suspend suspicious sessions using behavior-driven triggers. Guided playbooks provide analysts with structured investigation steps, improving containment speed for emerging insider threat scenarios.

8. Privilege & Access Monitoring

We implement continuous monitoring for privilege drift, access anomalies and misconfigured roles. This prevents insider risks created by entitlement sprawl, temporary privilege misuse or accidental overexposure of sensitive systems across the organization.
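Privilege drift checks can start from simple set comparisons. This sketch assumes entitlements are represented as strings and that "rare" means held by under 20% of peers; both are assumptions. A grant that is both new since the baseline and rare among peers is treated as the strongest drift signal.

```python
from collections import Counter


def privilege_drift(current: set[str], baseline: set[str], used: set[str],
                    peer_grants: list[set[str]],
                    peer_share: float = 0.2) -> dict[str, set[str]]:
    """Compare a user's entitlements with their own history, their actual
    usage and their peer group."""
    peer_counts = Counter(grant for grants in peer_grants for grant in grants)
    n_peers = max(len(peer_grants), 1)
    new = current - baseline  # entitlement expansion since the baseline
    rare = {g for g in current if peer_counts[g] / n_peers < peer_share}  # few peers hold it
    unused = current - used  # granted but never exercised: overexposure
    return {
        "new_since_baseline": new,
        "rare_among_peers": rare,
        "unused": unused,
        "new_and_rare": new & rare,  # strongest drift signal in this sketch
    }
```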

9. Testing & Model Calibration

We simulate insider behaviors such as data hoarding, anomalous login chains, privilege misuse and slow exfiltration attempts. Our developers fine-tune behavioral deviation thresholds, correlation logic and risk scoring to ensure high-fidelity detection with minimal alert noise.
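Threshold tuning against simulated insider activity can be sketched in two steps. First, choose the lowest alert threshold that keeps the false-positive rate on benign scores within a target. Then read off the detection rate on simulated insider scores. The 1% target and the `calibrate` helper are assumptions for illustration. Alerts are taken to fire when a score is strictly above the threshold.

```python
def calibrate(benign: list[float], simulated: list[float],
              max_fpr: float = 0.01) -> tuple[float, float, float]:
    """Return (threshold, false-positive rate, detection rate) for the lowest
    threshold whose false-positive rate on benign scores is <= max_fpr."""
    if not benign or not simulated:
        raise ValueError("need benign and simulated-insider scores")
    if max_fpr < 0:
        raise ValueError("max_fpr must be non-negative")
    # Detection rate only falls as the threshold rises, so the lowest
    # acceptable threshold also gives the best detection rate.
    for threshold in sorted(set(benign) | set(simulated)):
        fpr = sum(s > threshold for s in benign) / len(benign)
        if fpr <= max_fpr:
            tpr = sum(s > threshold for s in simulated) / len(simulated)
            return threshold, fpr, tpr
    raise RuntimeError("unreachable: the largest benign score always gives fpr == 0")
```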

10. Deployment & Behavioral Optimization

We deploy the platform within the organization’s environment, ensure stable telemetry flows and establish continuous baseline recalibration cycles. Our team monitors detection performance, updates identity models and enhances risk logic as user behavior evolves over time.

Cost to Build an AI Insider Threat Detection Platform

The cost to build an AI Insider Threat Detection Platform depends on technology, data complexity, and security requirements. Explore key factors influencing investment for an effective, scalable cybersecurity solution.

| Development Phase | Description | Estimated Cost |
|---|---|---|
| Consultation | Defines insider risk scenarios and scopes behavior-first detection goals. | $4,000 – $7,000 |
| Threat Modeling | Outlines threat patterns and behavioral event mapping for data capture. | $6,000 – $12,000 |
| Data Integration Design | Designs ingestion layers and multi-source behavioral signal fusion. | $12,000 – $20,000 |
| Behavioral Baseline & Identity | Builds personalized behavioral profiles using activity rhythm analysis. | $10,000 – $18,000 |
| AI Model Development | Develops ML models for intent inference and deviation detection. | $14,000 – $30,000 |
| Risk Scoring Engine | Creates context-rich scoring logic that prioritizes real insider threats. | $14,000 – $22,000 |
| Automated Response Workflow | Configures behavior-triggered response actions for risk containment. | $10,000 – $15,000 |
| Privilege & Access Monitoring | Adds monitoring for entitlement drift and misaligned privileges. | $9,500 – $16,500 |
| Explainable AI Integration | Implements transparent detection reasoning for analysts. | $11,500 – $17,000 |
| Testing & Calibration | Runs insider threat simulations for threshold tuning and validation. | $4,000 – $7,500 |
| Deployment & Optimization | Ensures stable deployment and ongoing behavioral model refinement. | $5,000 – $11,000 |

Total Estimated Cost: $100,000 – $176,000

Note: Development costs vary with organizational scale, data volume, analytics complexity, compliance, custom integrations, simulation depth, and ongoing optimization needs.

Consult with IdeaUsher for a personalized cost estimate and roadmap to build a high-performing, AI-driven insider threat detection platform.

Cost-Affecting Factors to Consider

Key cost-affecting factors include technology complexity, data volume, and security requirements, all crucial for budgeting an effective AI Insider Threat Detection Platform.

1. Scope and Depth of Behavioral Analytics

More advanced behavior modeling and multi-entity baselining increase development time, as deeper insights require richer datasets, extended training cycles and more complex anomaly detection logic.

2. Number & Diversity of Telemetry Sources

Integrating endpoints, IAM, SaaS apps and network logs raises cost because each source requires custom ingestion pipelines, correlation mechanisms and normalization logic.

3. Complexity of Privilege and Access Governance

Environments with dynamic roles or sensitive permissions require additional engineering for privilege drift analysis, context-aware controls and governance validation, increasing overall development investment.

4. AI Model Sophistication

Building accurate insider detection models requires large volumes of labeled events and synthetic behavior simulations, which significantly influence training time and overall development cost.

5. Required Automation Level

Highly automated workflows for session blocking, access restriction and data transfer control demand more engineering and behavior-triggered orchestration, increasing both development and testing costs.

Suggested Tech Stacks for an AI Insider Threat Detection Platform

Choosing the right tech stack is crucial for building a robust AI Insider Threat Detection Platform. These are some suggested tools and frameworks that ensure efficiency, scalability, and reliable security performance.

| Category | Suggested Technologies | Purpose |
|---|---|---|
| AI & Machine Learning Frameworks | TensorFlow, PyTorch, Scikit-learn | Model behaviors, detect anomalies, and analyze intent across activity streams. |
| Data Processing & Stream Ingestion | Apache Kafka, Apache Flink, Logstash | Handle high-volume telemetry from endpoints, IAM, SaaS apps, and networks. |
| Big Data Storage & Processing | Elasticsearch, Apache Hadoop, Snowflake | Store and query large behavioral datasets for baselining and drift analysis. |
| Identity & Access Management Integration | OAuth 2.0, OpenID Connect, Keycloak | Provide identity context, session integrity, and privilege visibility. |
| Security Log & Event Aggregation | SIEM tools, Elastic Stack | Consolidate logs for correlation and forensic investigation. |
| NLP & Intent Interpretation Tools | Hugging Face Transformers, spaCy | Analyze communications for intent-based risk indicators. |
| Containerization & Orchestration | Docker, Kubernetes | Enable scalable deployment and efficient modular services. |
| Monitoring, Observability & Telemetry | Prometheus, Grafana, OpenTelemetry | Offer real-time performance and behavioral model insights. |
| Automated Remediation & Workflow Engines | Airflow, Temporal, Camunda | Trigger responses and guide structured remediation workflows. |

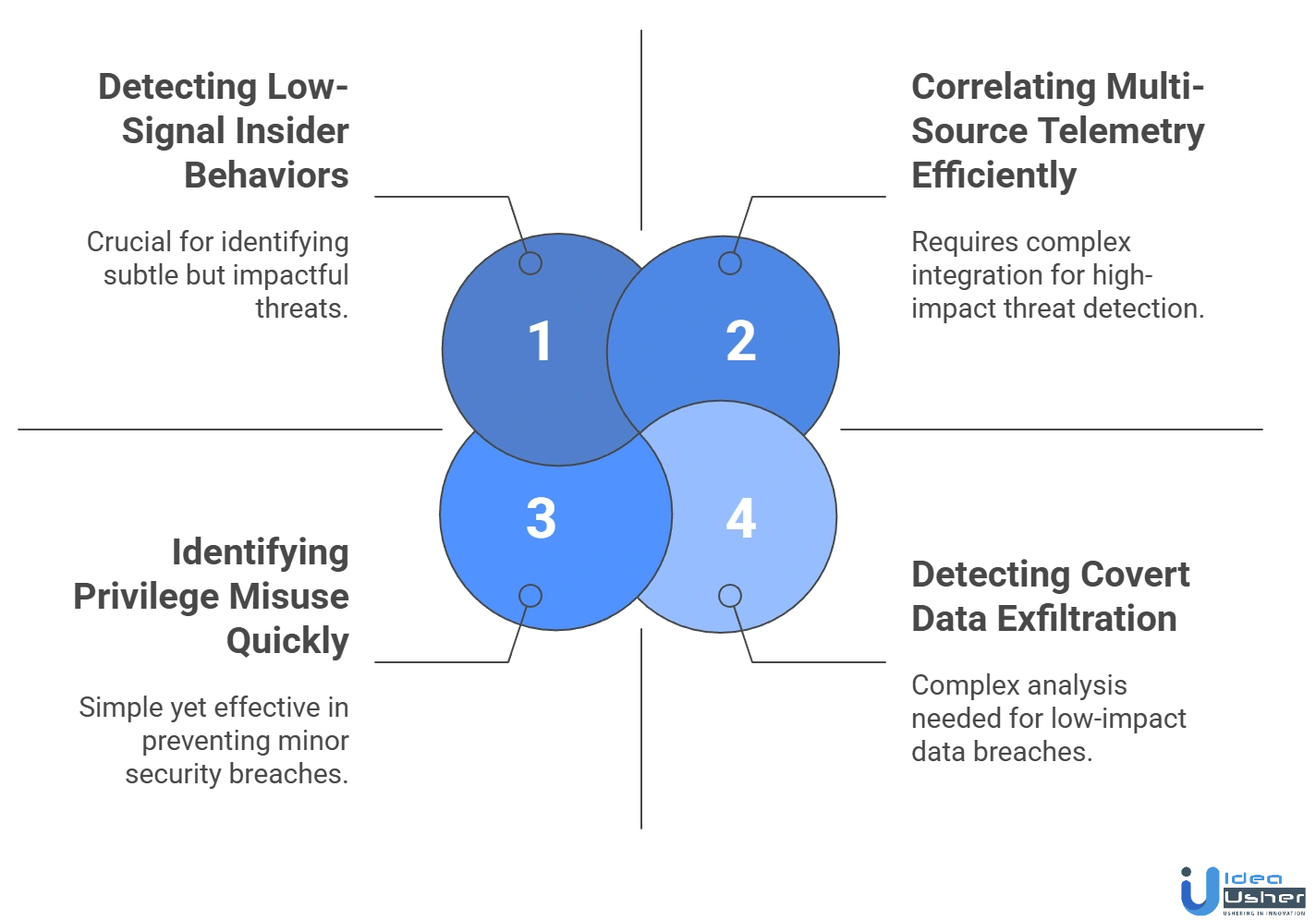

Challenges & How Our Developers Will Solve Them

Developing an AI Insider Threat Detection Platform involves complex challenges like data accuracy, behavior analysis, and system integration. Our developers address these hurdles with effective, strategic solutions.

1. Detecting Low-Signal Insider Behaviors

Challenge: Insider actions often resemble routine workflows, making harmful behavior difficult to distinguish from legitimate activity in large, dynamic environments.

Solution: We develop behavior-aware detection models that assess subtle deviations, sequence anomalies and access context shifts, enabling the platform to surface low-signal insider activity that would otherwise blend into everyday operations.

2. Correlating Multi-Source Telemetry Efficiently

Challenge: Insider detection requires fusing endpoint, identity, SaaS, network and file telemetry, which can overwhelm systems lacking strong correlation logic.

Solution: Our platform uses multi-channel correlation pipelines that unify signals into behavioral clusters, enabling precise interpretation while maintaining high performance across large and complex data streams.

3. Identifying Privilege Misuse Quickly

Challenge: Dynamic environments introduce shifting access rights, making hidden privilege misuse difficult to detect.

Solution: We implement privilege drift analysis and access pattern modeling that reveal unusual entitlement spikes, abnormal role transitions and misuse of elevated permissions before they impact sensitive systems or data.

4. Detecting Covert Data Exfiltration

Challenge: Insiders often exfiltrate data gradually to avoid detection, creating minimal spikes in activity.

Solution: We integrate data movement entropy analysis and long-window behavior monitoring, identifying subtle aggregation patterns, stealthy transfers and unusual file access velocity across weeks or months of activity.
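Slow exfiltration is a classic case for a cumulative-sum (CUSUM) detector, which we use here as an illustrative technique. Small daily excesses over a baseline accumulate until they cross a limit, even though no single day looks like a spike. The baseline mean, slack and limit values are assumptions; in practice they would come from each user's history.

```python
from typing import Optional


def cusum_alarm(daily_mb: list[float], baseline_mean: float,
                slack: float, limit: float) -> Optional[int]:
    """One-sided CUSUM: index of the first day the accumulated excess over
    (baseline_mean + slack) exceeds limit, or None if it never does."""
    excess = 0.0
    for day, volume in enumerate(daily_mb):
        # Days at or below the slack band drain the accumulator toward zero.
        excess = max(0.0, excess + volume - (baseline_mean + slack))
        if excess > limit:
            return day
    return None
```

With a 100 MB/day baseline, 10 MB of slack and a 200 MB limit, a steady 150 MB/day leak triggers on its sixth day. Normal 100 MB days never accumulate any excess.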

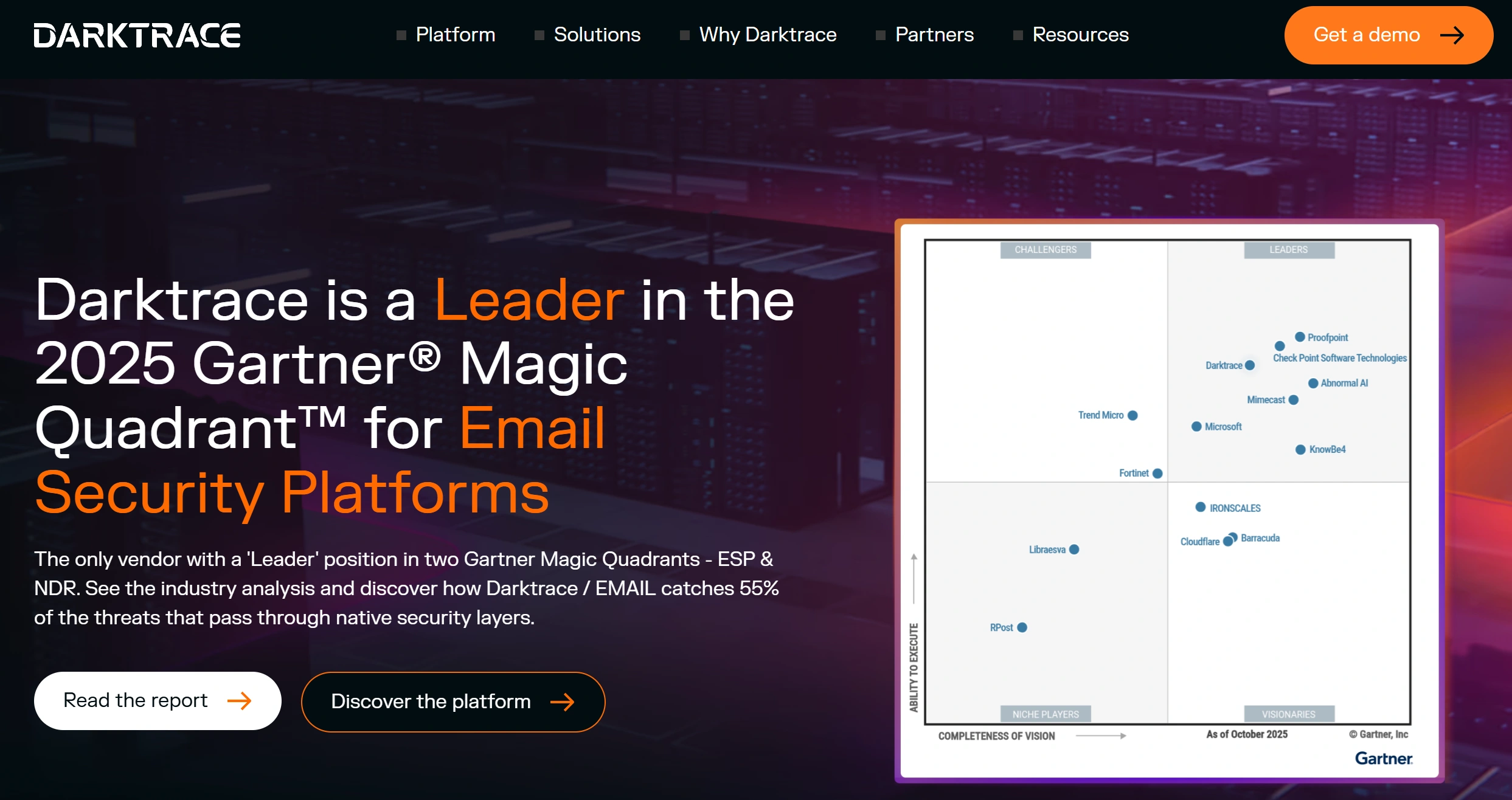

Leading AI-Powered Insider Threat Detection Platforms

AI insider threat detection platforms use machine learning and behavioral analytics to identify anomalous user activity and reduce internal security risks. These are some leading platforms showcasing how AI helps detect and mitigate insider threats.

1. Darktrace

Darktrace uses self-learning AI to build dynamic models of normal user, device, and network behavior, enabling it to spot subtle insider threat anomalies like privilege abuse, lateral movement, and unusual access patterns. Its Cyber AI Analyst automates investigations and can trigger targeted autonomous response actions to contain threats early.

2. Teramind

Teramind employs machine learning and behavioral analytics to continuously monitor user activity across endpoints, applications, and networks. Its AI-driven predictive risk scoring helps detect negligent, compromised, or malicious insider behavior before it results in data loss, policy violations, or compliance failures.

3. DTEX

DTEX combines AI-powered behavioral analytics, contextual risk scoring, and data loss prevention to identify early indicators of insider threats. Its platform is designed to uncover user intent, surface hidden risk patterns, and support proactive mitigation while maintaining a strong balance between security monitoring and employee privacy.

4. Lepide

Lepide’s Data Security Platform uses AI-enhanced behavioral baselining to identify deviations from normal user activity that indicate insider risk. With automated alerts, predefined threat models, and investigation workflows, it helps security teams respond quickly to privilege misuse, data misuse, and unauthorized access.

5. Proofpoint

Proofpoint Insider Threat Management applies behavioral analytics and contextual intelligence to correlate user activity with data movement across endpoints and cloud services. It enables real-time detection of risky insider actions, supports rapid investigation, and helps prevent data exfiltration caused by negligent or malicious insiders.

Conclusion

Developing an AI Insider Threat Detection Platform involves balancing advanced analytics with responsible monitoring. The focus should remain on identifying risky behavioral patterns while respecting privacy and compliance boundaries. Such platforms rely on contextual signals, user behavior baselines, and real-time anomaly detection to surface genuine threats early. When thoughtfully engineered, the system empowers security teams to act with clarity rather than suspicion. A reliable solution enhances trust, supports governance goals, and strengthens internal security without adding unnecessary operational complexity.

Build an AI Insider Threat Detection Platform with IdeaUsher!

Insider threats demand smarter, behavior-driven security solutions. At IdeaUsher, we develop AI-powered UEBA platforms that detect privilege misuse, data exfiltration patterns, identity compromise and long-window behavioral drift across enterprise environments.

Why Choose IdeaUsher?

- UEBA and Behavioral Analytics Expertise: We design engines that model user behavior, correlate multi-source telemetry and identify subtle deviations missed by traditional tools.

- Advanced Detection Capabilities: From identity integrity scoring to privilege drift monitoring, we engineer platforms prepared for modern insider threat challenges.

- Custom-Built for Your Infrastructure: Our developers tailor ingestion pipelines, risk scoring logic and automation workflows to your environment and compliance needs.

- Enterprise-Grade Quality: We deliver scalable, secure and high-performing solutions capable of supporting large datasets and real-time threat evaluation.

Explore our portfolio to see a wide range of successful digital products we’ve delivered across diverse industries.

Get in touch with IdeaUsher for a free consultation and a detailed development strategy.


FAQs

An effective AI insider threat detection platform continuously monitors user behavior, access patterns, and system interactions to identify abnormal activities that indicate data misuse, privilege abuse, or policy violations.

AI models establish behavioral baselines for users and roles, then flag deviations such as unusual file access, login timing, or data transfers, reducing false positives while focusing on genuine insider risk signals.

Essential integrations include identity management systems, endpoint monitoring tools, cloud services, access logs, and SIEM platforms to provide a unified view of user activity across the enterprise environment.

Development must address data minimization, role-based access controls, audit trails, and regulatory requirements to ensure employee privacy while maintaining transparency, legal compliance, and defensible monitoring practices.