We’ve all experienced chatting with a bot that just doesn’t understand us. Sometimes it gets our requests wrong or misses the tone of our messages. People use more than just words to communicate; they use gestures, images, and emotions too. That’s why Multimodal Conversational AI is making such a difference.

By combining text, voice, vision, and even emotional cues, multimodal AI helps systems understand people in a more natural way, almost like having a real conversation. Businesses in areas like retail, healthcare, and customer service are starting to see how important this change is. Now, it’s not just about getting faster answers; it’s about creating smarter, more human interactions that build trust and keep people engaged.

In this blog, we’ll explore the key benefits of Multimodal Conversational AI and why it’s becoming the backbone of next-gen customer experience. You’ll learn how this technology elevates user engagement and personalization. With deep expertise in advanced AI solutions, IdeaUsher helps businesses integrate multimodal intelligence to deliver seamless, context-aware interactions.

What is Multimodal Conversational AI?

Multimodal Conversational AI is a type of artificial intelligence that lets machines interact with people in different ways, like through text, speech, images, video, or gestures, rather than just using text like traditional chatbots.

It makes human-computer interaction smoother and more natural, letting people talk to computers much like they would talk to another person.

This technology helps connect what people want to do with how digital systems understand them. It makes interactions smooth and context-aware on different platforms and devices by using various input and output channels:

- Text: Enables users to chat through messaging interfaces, websites, or mobile apps for quick, precise communication.

- Voice: Supports natural, spoken conversations through voice assistants or IVR systems, allowing hands-free interaction.

- Image: Recognizes and interprets visual inputs such as product photos, medical scans, or documents to provide relevant responses.

- Video: Uses facial recognition, emotional cues, and visual context to adapt tone and response style dynamically.

- Gestures: Detects and interprets physical gestures or movements via sensors or cameras to trigger specific actions or responses.

Unimodal vs Multimodal AI Models

As AI evolves, it’s important to distinguish between unimodal and multimodal models. Unimodal models use a single input source, while multimodal AI combines various data types for a more human-like, context-aware understanding.

| Aspect | Unimodal AI Models | Multimodal AI Models |
| --- | --- | --- |
| Definition | Process and analyze data from a single modality (e.g., text, image, or audio). | Process and integrate data from multiple modalities such as text, image, audio, and video simultaneously. |
| Input Type | Works with one type of input at a time. For example, a chatbot that understands only text. | Accepts multiple input types, e.g., text, voice, and image together for richer understanding. |
| Output Type | Produces one-dimensional outputs like text summaries or image labels. | Generates context-aware outputs combining multiple forms such as text + speech + visuals. |
| Context Understanding | Limited to the context of a single data source. | Combines information from various sources to achieve deeper situational awareness. |
| Applications | Text translation, image classification, or speech-to-text models. | Virtual assistants, multimodal chatbots, autonomous vehicles, healthcare diagnostics, AR/VR experiences. |
| Complexity | Simpler architecture and lower computational cost. | More complex model architecture involving fusion layers and cross-modal training. |
| Accuracy & Adaptability | Performs well within a single domain but struggles with mixed data environments. | Can offer higher accuracy and adaptability on tasks that span several data types, mirroring human-like perception and reasoning. |
| Example | GPT-3 (text-only), ResNet (image-only), or Whisper (audio-only). | GPT-5, Gemini, and LLaVA, which accept text together with images (and, for some models, audio). |

Three Crucial Components of Multimodal AI

Multimodal AI brings together information from different sources, like text, images, audio, and video, to help systems understand and reason more effectively. Its design usually includes three main parts:

1. Input Module (Perception / Representation)

Purpose: To capture and encode data from various modalities into machine-understandable formats.

Functions:

- Uses modality-specific encoders (e.g., Transformers for text, CNNs or Vision Transformers for images, RNNs for audio).

- Converts raw data into embeddings or feature vectors.

- Establishes a foundation for cross-modal understanding.

Example: CLIP encodes text and images separately into a shared embedding space so that similar concepts align across modalities.
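To make the idea of a shared embedding space concrete, here is a minimal sketch of how a caption can be matched to an image once both are vectors in the same space. The embeddings below are hand-written, hypothetical values standing in for real encoder outputs, and the helper names are illustrative rather than part of any library.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical text embeddings (a real system would get these from a text encoder).
text_embeddings = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a car": [0.1, 0.9, 0.1],
}

# Hypothetical image embedding (a real system would get this from an image encoder).
image_embedding = [0.8, 0.2, 0.1]

def best_caption(image_vec, captions):
    """Return the caption whose embedding is closest to the image embedding."""
    return max(captions, key=lambda c: cosine_similarity(image_vec, captions[c]))

print(best_caption(image_embedding, text_embeddings))  # a photo of a dog
```

Because both modalities live in one space, the same similarity function works for text-to-image and image-to-text lookups.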

2. Fusion Module (Integration / Alignment)

Purpose: To combine and align information from different modalities into a unified representation.

Functions:

- Applies attention mechanisms, co-attention layers, or multimodal transformers to integrate signals.

- Learns relationships and dependencies between modalities (e.g., linking image regions with corresponding text phrases).

- Enables the model to perform cross-modal reasoning.

Example: Flamingo (DeepMind) fuses visual and textual tokens using cross-attention to understand and answer questions about images.
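The cross-attention idea can be sketched in a few lines. This is a simplified, single-head version with no learned projection matrices or gating, so it illustrates the mechanism rather than how Flamingo or any specific model implements it. The toy vectors are assumptions chosen so the result is easy to read.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention: queries come from one modality,
    keys and values from another."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time.
        fused = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(fused)
        all_weights.append(weights)
    return outputs, all_weights

# Toy example: one text token ("car") attends over two image regions.
text_queries = [[0.0, 4.0]]                # hypothetical embedding of "car"
image_regions = [[4.0, 0.0], [0.0, 4.0]]   # hypothetical "sky" and "car" regions

fused, attn = cross_attention(text_queries, image_regions, image_regions)
print(attn[0])  # almost all attention weight lands on the second region
```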

3. Output Module (Reasoning / Generation)

Purpose: To perform reasoning, prediction, or generation based on the fused multimodal understanding.

Functions:

- Uses decoders or task-specific output heads to produce the final output (e.g., text, classification label, image).

- Generates responses or actions consistent with multimodal input.

- Supports tasks such as image captioning, visual question answering (VQA), and multimodal dialogue.

Example: GPT-4o generates textual responses to visual inputs, such as describing an image or interpreting a chart.

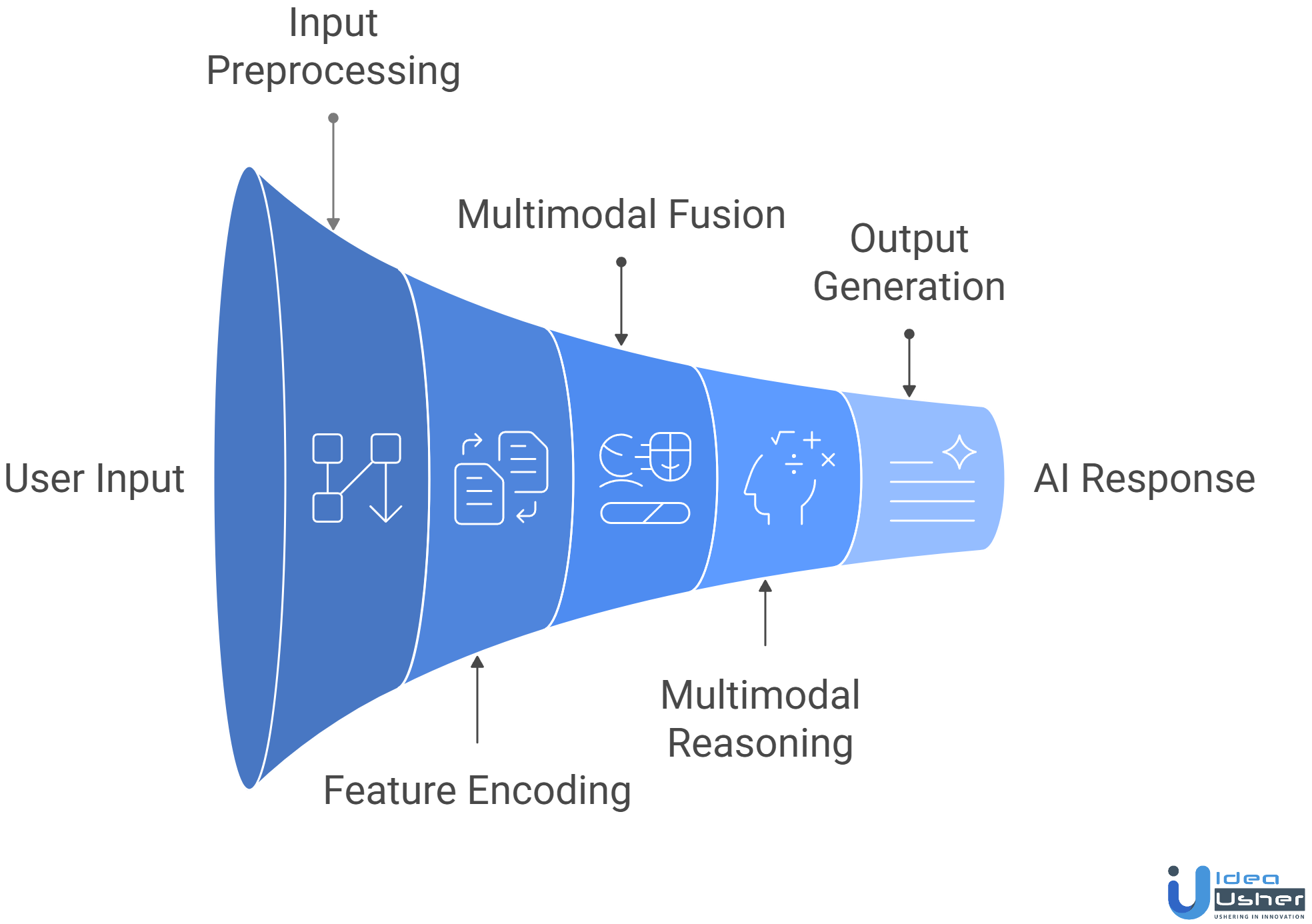

Workflow of Multimodal Conversational AI

The workflow of a Multimodal Conversational AI integrates text, voice, images, and gestures for natural, human-like interactions. It processes, understands, and responds across channels, boosting engagement and accuracy.

1. User Input Stage

The user interacts with the AI using one or more modalities:

- Text (typing a question or command)

- Speech (speaking a query)

- Image / Video (uploading visual content)

- Sensor Data (location, gestures, etc.)

Example: A user uploads a photo of a sign and asks, “Can you tell me what is written on this sign?”

In this scenario, the AI receives both visual input (the image of the sign) and textual input (the user’s question), which are later processed together to generate an accurate response.
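One simple way to carry such a request through the pipeline is a single container with one optional field per modality. The sketch below is an assumption about how this could be modeled. The class name, field names, and the `sign.jpg` path are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class MultimodalInput:
    """One user turn; any combination of fields may be filled in."""
    text: Optional[str] = None
    audio_path: Optional[str] = None
    image_path: Optional[str] = None
    sensor_data: dict = field(default_factory=dict)

    def modalities(self):
        """List the modalities that are actually present in this turn."""
        present = []
        if self.text:
            present.append("text")
        if self.audio_path:
            present.append("speech")
        if self.image_path:
            present.append("image")
        if self.sensor_data:
            present.append("sensor")
        return present

request = MultimodalInput(
    text="Can you tell me what is written on this sign?",
    image_path="sign.jpg",  # hypothetical uploaded file
)
print(request.modalities())  # ['text', 'image']
```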

2. Input Preprocessing

Each input type is preprocessed to clean, normalize, and transform it into a machine-readable format suitable for further analysis. This step ensures that diverse inputs are standardized before entering the model.

| Input Type | Preprocessing Tasks |
| --- | --- |
| Text | Tokenization, sentence segmentation, and normalization |
| Speech | Speech-to-text (ASR), noise reduction |
| Image | Object detection, segmentation, normalization |
| Video | Frame extraction, motion detection, scene analysis |
| Sensor Data | Signal filtering, encoding into feature vectors |

After preprocessing, raw multimodal inputs are turned into structured feature representations. These are compact numerical forms that capture key information from each modality and can be used by neural encoders.
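As an illustration of the text row in the table above, here is a minimal sketch of normalization, sentence segmentation, and tokenization using only the Python standard library. Production systems usually rely on a trained tokenizer, so treat the regular expressions here as simplifying assumptions.

```python
import re
import unicodedata

def normalize(text):
    """Unify Unicode forms, lowercase, and collapse repeated whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = text.lower()
    return re.sub(r"\s+", " ", text).strip()

def split_sentences(text):
    """Naive segmentation: split on whitespace that follows ., ! or ?"""
    return [s for s in re.split(r"(?<=[.!?])\s+", text) if s]

def tokenize(sentence):
    """Split into word tokens and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", sentence)

def preprocess_text(raw):
    """Return a list of token lists, one per sentence."""
    return [tokenize(s) for s in split_sentences(normalize(raw))]

print(preprocess_text("Hello   World! What is on the sign?"))
# [['hello', 'world', '!'], ['what', 'is', 'on', 'the', 'sign', '?']]
```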

3. Feature Encoding (Modality-Specific Encoders)

Each modality is processed by a specialized encoder that converts features into embeddings (numerical representations of meaning).

| Encoder | Input | Output |
| --- | --- | --- |
| Text Encoder (Transformer like GPT/BERT) | Text tokens | Text embeddings |
| Audio Encoder (e.g., Whisper, Wav2Vec2) | Speech | Audio embeddings |
| Vision Encoder (e.g., CLIP-ViT, ResNet) | Image/Video | Visual embeddings |

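These embeddings represent semantic meaning as high-dimensional vectors. Encoders trained separately do not automatically agree with each other, so the embeddings are typically projected, or jointly trained, into a shared space before they can be compared or combined.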
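Encoders for different modalities often produce vectors of different sizes, so one common way to place them in a single space is a linear projection per modality. The sketch below uses made-up weights and tiny vectors purely for illustration. In practice the projection weights are learned during training.

```python
def project(vector, weight_matrix):
    """Multiply a vector by a matrix given as a list of rows (out_dim x in_dim)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weight_matrix]

# Hypothetical encoder outputs with different dimensionality.
text_emb = [1.0, 2.0]               # 2-dimensional
image_emb = [0.5, 0.5, 1.0, 0.0]    # 4-dimensional

# Hypothetical projection weights mapping each modality into a 3-dimensional space.
W_TEXT = [
    [1.0, 0.0],
    [0.0, 1.0],
    [1.0, 1.0],
]
W_IMAGE = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
]

shared_text = project(text_emb, W_TEXT)
shared_image = project(image_emb, W_IMAGE)
print(shared_text, shared_image)  # both vectors now have length 3
```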

4. Multimodal Fusion Layer

This is the core integration step, where the AI connects the dots between different inputs.

Common fusion strategies:

- Early Fusion: Combine embeddings before reasoning.

- Late Fusion: Independently process each modality, then merge final outputs.

- Cross-Attention Fusion: Use attention layers so each modality can “attend” to another (e.g., text attends to relevant image regions).

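Example: When asked, “What color is the car?”, the text encoder represents the question, including the words “color” and “car.” At the same time, the image encoder represents the regions of the picture, including the one containing the car. The fusion layer then links the question to the car region so the model can work out its color.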
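The difference between early and late fusion can be shown with a small sketch. The vectors, scores, and weights below are hypothetical, and weighted averaging is only one of several ways to merge per-modality outputs.

```python
def early_fusion(text_vec, image_vec):
    """Early fusion: concatenate embeddings so one downstream model sees both."""
    return list(text_vec) + list(image_vec)

def late_fusion(scores_by_modality, weights):
    """Late fusion: each modality scores the labels on its own,
    then the scores are merged with a weighted average."""
    total_weight = sum(weights[m] for m in scores_by_modality)
    labels = next(iter(scores_by_modality.values())).keys()
    return {
        label: sum(weights[m] * scores_by_modality[m][label]
                   for m in scores_by_modality) / total_weight
        for label in labels
    }

# Early fusion: a 2-dim text vector and a 3-dim image vector become one 5-dim vector.
joint = early_fusion([0.2, 0.7], [0.1, 0.9, 0.4])

# Late fusion: hypothetical per-modality guesses for "What color is the car?"
scores = {
    "text": {"red": 0.6, "blue": 0.4},
    "image": {"red": 0.9, "blue": 0.1},
}
merged = late_fusion(scores, weights={"text": 1.0, "image": 3.0})
print(len(joint), max(merged, key=merged.get))  # 5 red
```

Here the image is given three times the weight of the text because color is a visual property. That weighting is an illustrative choice, not a rule.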

5. Multimodal Reasoning Layer

Now the combined embeddings are passed to a multimodal large language model (MLLM) for reasoning.

This stage includes:

- Context understanding

- Cross-modal inference (“connects visual + textual clues”)

- Knowledge integration (using pretrained knowledge or external data)

- Response planning (decides what and how to answer)

Models like GPT-4V, Gemini, Claude 3 Opus, or Kosmos-2 operate here.

6. Output Generation

In this stage, the system produces a response or action based on multimodal reasoning, using specialized models for each output type according to the task and context.

| Output Type | Generation Model | Example |
| --- | --- | --- |
| Text | Decoder (LLM) | “The sign says ‘Welcome to Paris’.” |
| Speech | TTS (Text-to-Speech) | Spoken voice output |
| Image / Video | Diffusion model (DALL·E, Stable Diffusion) | Visual answer or diagram |
| Action / Command | Robotics controller | Physical or digital actions |

The multimodal AI transforms its understanding into a human-readable response, ensuring it matches the user’s request and context.
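Choosing a generator per output type can be as simple as a lookup table with a text fallback. The sketch below uses placeholder functions. A real system would call an LLM decoder or a TTS engine where the stubs are, and the function names are hypothetical.

```python
from typing import Callable, Dict

def generate_text(plan: str) -> str:
    """Placeholder for an LLM decoder: returns the planned answer as-is."""
    return plan

def generate_speech(plan: str) -> str:
    """Placeholder for a TTS engine: returns a marker instead of audio."""
    return f"<audio for: {plan}>"

# Map each supported output type to the function that produces it.
GENERATORS: Dict[str, Callable[[str], str]] = {
    "text": generate_text,
    "speech": generate_speech,
}

def respond(plan: str, output_type: str = "text") -> str:
    """Route the planned response to the right generator, falling back to text."""
    generator = GENERATORS.get(output_type, generate_text)
    return generator(plan)

print(respond("The sign says 'Welcome to Paris'."))
print(respond("The sign says 'Welcome to Paris'.", output_type="speech"))
```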

How 60% of Enterprises Use Multimodal AI to Enhance CX

The global multimodal AI platform market, valued at USD 1.73 billion in 2024, is projected to reach USD 10.89 billion by 2030 with a 36.8% CAGR. This growth reflects a shift towards multimodal conversational AI (voice, text, image, video), transforming business-customer connections, operations, and ROI.
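For readers who want to sanity-check growth figures like these, compound annual growth rate is straightforward to compute. The sketch below assumes six compounding years between 2024 and 2030. Market reports often quote a CAGR from a different base year, so the implied rate can differ slightly from the published one.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1

def compound(start_value, rate, years):
    """Value after growing at `rate` per year for `years` years."""
    return start_value * (1 + rate) ** years

# Market size in USD billions, taken from the paragraph above.
implied_rate = cagr(1.73, 10.89, years=6)
print(f"Implied CAGR over six years: {implied_rate:.1%}")
print(f"1.73 growing at 36.8% for six years: {compound(1.73, 0.368, 6):.2f}")
```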

Today, more than 60% of large companies use multimodal conversational AI. They combine voice, text, image, and video interactions to improve customer experience, streamline workflows, and increase sales efficiency.

This shift reflects a major evolution from simple chatbot deployments to context-aware, multimodal ecosystems that are actively shaping enterprise communication, service quality, and ROI.

Enterprise Adoption and Market Trends

Adoption is rising rapidly as enterprises recognize tangible benefits in productivity and service delivery.

- 40% of generative AI solutions are projected to be multimodal by 2027, reflecting the shift beyond text-based systems.

- Financial services lead adoption at 92%, with more than half their systems supporting both voice and text interfaces.

- Across industries, one-third of all conversational AI deployments are now multimodal, a sign of deep integration into CX and automation strategies.

- The CANDOR corpus offers over 850 hours and 7+ million words of multimodal conversations, one of the largest corpora enabling at-scale emotion and behavior analysis in dialogue systems.

Enterprises aren’t just experimenting anymore; they’re scaling multimodal AI to core business functions.

Business Impact: Sales, Efficiency, and ROI

The move toward multimodal AI is backed by measurable, performance-based outcomes.

- 90% of businesses improved complaint resolution time after integrating chat and voice bots.

- 67% increase in sales reported from AI-driven product recommendations and personalized customer interactions.

- 21% jump in conversion rates and 27% improvement in CSAT seen in companies deploying multimodal AI interfaces.

- On average, AI customer service delivers $3.50 ROI per dollar invested, with industry leaders reporting up to 8x ROI through operational savings and engagement growth.

- Juniper Research noted that 90% of chatbot-using businesses save at least $0.70 per support interaction, cutting 4 minutes per query on average.

This data shows that multimodal conversational AI not only automates tasks but also boosts performance in different departments.

Case Studies: Sephora & Bank of America

The two case studies below show how Sephora and Bank of America have deployed conversational AI at scale and the outcomes they report.

1. Sephora — Personalization at Scale

Sephora’s AI-powered assistant handles 75% of daily queries autonomously, slashing response times to under 10 seconds.

Engagement soared with a 200% boost in AI-based skin analysis usage, 11 million virtual try-ons, and a 35% lift in conversions via its Virtual Artist.

The system also achieved a 20% drop in customer service costs and an 18% reduction in cart abandonment, making AI a core growth driver for Sephora’s eCommerce funnel.

2. Bank of America (Erica) — Financial AI at Enterprise Scale

Bank of America’s Erica AI has surpassed 3 billion client interactions and serves 50 million users with 58 million monthly interactions.

With 1.7 billion personalized insights delivered, Erica boosted digital adoption to 83% of customer relationships, up from 77%, contributing to a 19% YoY increase in net income and 11% revenue growth.

Erica’s continued learning (over 75,000 NLP enhancements) showcases how iterative multimodal AI can deliver measurable business outcomes.

Multimodal conversational AI benefits extend beyond customer interactions. Approximately 63% of business leaders say it fosters hyper-personalized experiences and boosts brand loyalty, while 80% of CEOs plan to enhance customer engagement with it. Additionally, it improves employee satisfaction by automating repetitive tasks, allowing teams to focus on more creative work. Overall, AI enhances human potential and transforms both customer and employee experiences.

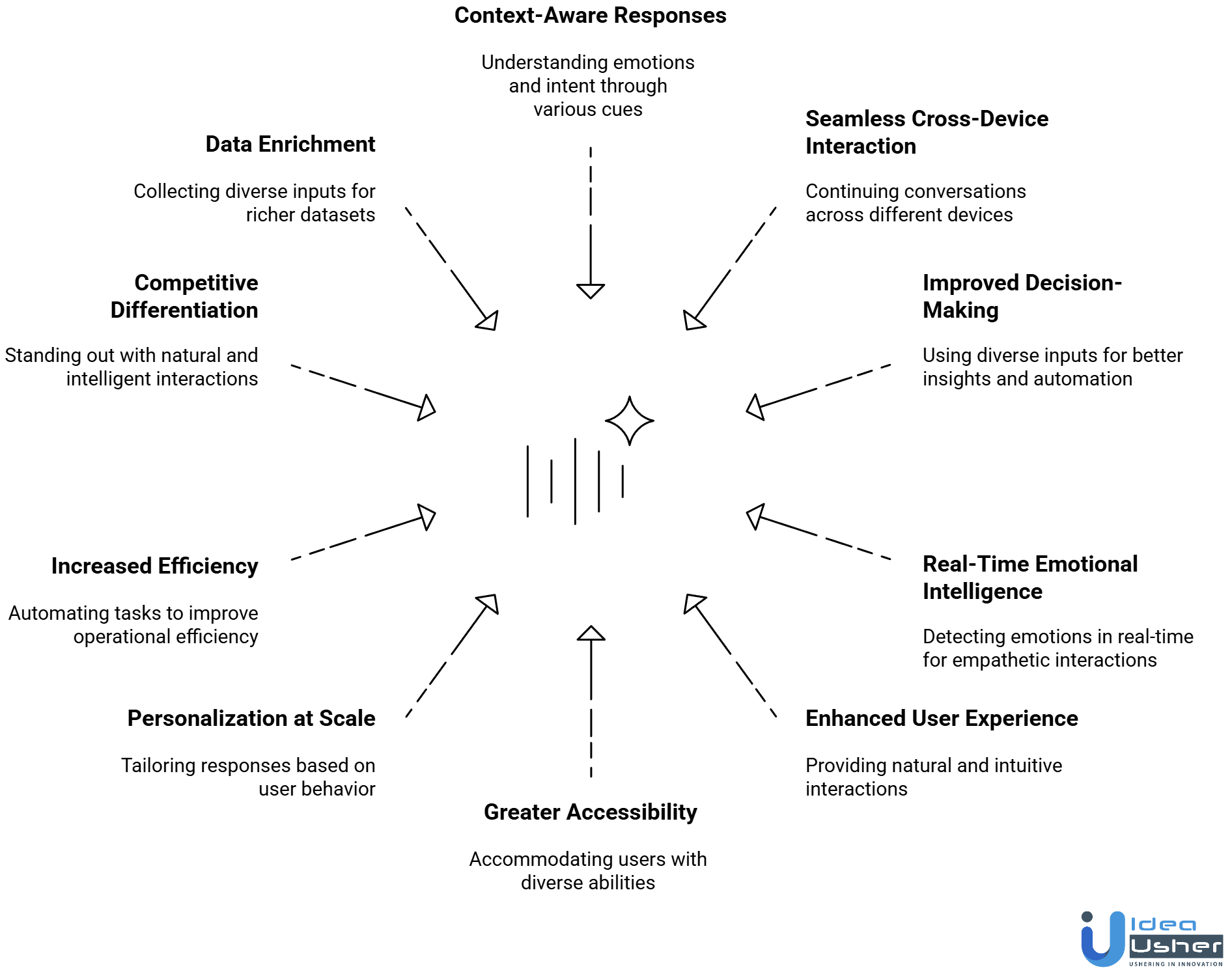

Top Benefits of Using Multimodal Conversational AI

Multimodal Conversational AI enhances engagement by integrating text, voice, image, and video inputs for seamless, natural interactions. It connects human intent with digital understanding, enabling businesses to offer richer, context-aware experiences.

1. Context-Aware Responses

Unlike unimodal systems, multimodal AI uses cues like tone, facial expressions, and visual information to better understand emotions and intent. This helps it respond in a smarter and more empathetic way.

2. Seamless Cross-Device Interaction

Users can begin a conversation on one device (like a smartphone) and continue it on another (like a smart speaker) without losing context. This fluidity ensures uninterrupted communication across platforms.
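Keeping context across devices usually comes down to storing the conversation by user rather than by device. Here is a minimal in-memory sketch. The class and method names are hypothetical, and a real deployment would use a shared database or cache instead of a Python dictionary.

```python
class SessionStore:
    """Keeps conversation history per user, regardless of device."""

    def __init__(self):
        self._history = {}

    def append(self, user_id, device, message):
        """Record a message along with the device it came from."""
        self._history.setdefault(user_id, []).append((device, message))

    def context(self, user_id, last_n=5):
        """Return the most recent turns for this user across all devices."""
        return self._history.get(user_id, [])[-last_n:]

store = SessionStore()
store.append("user-42", "smartphone", "Find me a recipe for lasagna.")
store.append("user-42", "smart_speaker", "Read me the first step.")

# The smart speaker can see what was asked on the phone.
print(store.context("user-42"))
```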

3. Improved Decision-Making

Multimodal AI looks at different types of input, such as text, voice, and images, to provide more meaningful insights. This helps businesses automate complex decisions, make better recommendations, and improve the accuracy of their data-driven work.

4. Real-Time Emotional Intelligence

Multimodal systems analyze voice, facial expressions, and words to detect emotions like frustration, confusion, or joy in real time. This enables emotionally intelligent interactions, such as support bots adjusting tone or escalating issues when users sound upset.
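A rule for adjusting tone or escalating might look like the sketch below. It assumes each modality already yields a frustration score between 0 and 1 from some upstream model, and the thresholds and action names are hypothetical values you would tune for your own product.

```python
def combined_frustration(scores, weights=None):
    """Weighted average of per-modality frustration scores (each 0 to 1)."""
    weights = weights or {modality: 1.0 for modality in scores}
    total = sum(weights[m] for m in scores)
    return sum(weights[m] * scores[m] for m in scores) / total

def next_action(scores, escalate_at=0.7, soften_at=0.4):
    """Pick a support-bot action from the combined frustration level."""
    level = combined_frustration(scores)
    if level >= escalate_at:
        return "escalate_to_human"
    if level >= soften_at:
        return "soften_tone"
    return "continue"

print(next_action({"voice": 0.9, "text": 0.8, "face": 0.7}))  # escalate_to_human
print(next_action({"voice": 0.5, "text": 0.4}))               # soften_tone
print(next_action({"text": 0.1}))                             # continue
```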

5. Enhanced User Experience

When people can communicate using speech, gestures, text, and visuals, their interactions feel more natural and intuitive. This leads to better engagement, higher satisfaction, and improved retention.

6. Greater Accessibility

Multimodal AI ensures inclusivity by accommodating users with diverse preferences and abilities. For instance, voice input supports visually impaired users, while text-based interactions help those with hearing impairments.

7. Personalization at Scale

The system learns from user behavior across channels to tailor responses and recommendations in real time. This personalization helps build stronger relationships and drives brand loyalty.

8. Increased Efficiency for Businesses

Automation that uses multimodal AI can manage large numbers of interactions, lighten the workload for staff, and speed up response times. This leads to better overall efficiency for operations.

9. Competitive Differentiation

Companies leveraging multimodal AI gain a distinct market edge. Offering natural, contextually intelligent, and emotionally aware interactions helps brands stand out from competitors using traditional chatbots.

10. Data Enrichment & Better Insights

Since multimodal AI collects and correlates inputs from voice, text, images, and gestures, it builds richer datasets for analytics. This helps organizations uncover deeper insights about user intent, preferences, and pain points, improving future service design.

Real-World Use Cases of Multimodal Conversational AI in Various Industries

Multimodal Conversational AI empowers machines to process and respond using text, voice, images, video, and sensor data in a unified framework. Its cross-modal intelligence is reshaping how industries function by enabling more natural, efficient, and human-like interactions.

1. Healthcare

Multimodal AI in healthcare integrates clinical data, speech, and imaging to assist doctors and improve patient outcomes. It enables faster diagnosis, intelligent documentation, and virtual care delivery.

Use Cases:

- Medical Image Interpretation: Analyzing X-rays or CT scans alongside doctors’ notes.

- Virtual Health Assistants: Understanding spoken symptoms and patient-uploaded photos.

- Clinical Documentation: Automatically transcribing and summarizing consultations.

Impact: Improves diagnostic accuracy, reduces clinician workload, and enhances patient engagement through intelligent automation.

Example: Nabla Copilot is a multimodal healthcare assistant that listens to doctor–patient conversations, processes speech and cues, and generates medical notes. It demonstrates how next-generation systems enhance clinical productivity and documentation accuracy.

2. Retail & E-Commerce

In retail, multimodal AI blends computer vision, natural language, and voice understanding to deliver a personalized and seamless shopping experience.

Use Cases:

- Visual Product Search: Customers find items by uploading photos.

- Shopping Assistants: Understand voice, text, and visual inputs.

- Personalized Recommendations: Suggest items based on browsing and visual behavior.

Impact: Enhances product discovery, drives higher conversions, and builds stronger customer loyalty.

Example: Amazon StyleSnap allows shoppers to upload an image and instantly find visually similar products available on Amazon, bridging visual intent with purchase actions.

3. Education & E-Learning

Multimodal AI transforms education by integrating text, speech, handwriting, and visual learning materials to deliver adaptive and interactive learning experiences.

Use Cases:

- AI Tutors: Respond to spoken questions, images, or notes.

- Content Generation: Create visual and textual learning materials automatically.

- Language Feedback: Evaluate pronunciation and writing in real time.

Impact: Promotes personalized learning, supports different learning styles, and enhances student engagement.

Example: Khan Academy’s “Khanmigo”, powered by GPT-4, serves as a multimodal AI tutor that interacts with students through text and visual problem-solving guidance, offering context-aware learning support.

4. Manufacturing & Industrial Automation

In manufacturing, multimodal AI combines data from cameras, sensors, and operator input to streamline production, predict failures, and assist technicians in real time.

Use Cases:

- Visual Quality Control: Detecting product defects through image and sensor data.

- Maintenance Assistance: Real-time guidance using voice and video.

- Robot Collaboration: Operate machinery through speech and visual commands.

Impact: Increases operational efficiency, minimizes downtime, and enhances workplace safety.

Example: Siemens Industrial Copilot, developed with Microsoft, uses multimodal AI to help engineers interact with machines via natural language and visual inputs, improving productivity on the factory floor.

5. Banking & Financial Services

In finance, multimodal AI enhances security, automates service interactions, and provides personalized insights by integrating visual, vocal, and textual intelligence.

Use Cases:

- Biometric Verification: Secure authentication using face and voice.

- Conversational Banking: Manage accounts via voice or text.

- Data Interpretation: Explain charts or financial reports through conversation.

Impact: Strengthens fraud prevention, simplifies banking interactions, and improves user trust.

Example: Bank of America’s Erica is an AI-powered virtual assistant that understands both text and voice queries, helping customers perform transactions and receive personalized financial advice seamlessly.

6. Automotive & Transportation

Multimodal AI enhances the driving experience by merging voice commands, vision systems, and environmental sensors for safer and smarter vehicle control.

Use Cases:

- Voice and Gesture Controls: Operate vehicle systems hands-free.

- Driver Monitoring: Detect fatigue or distraction using facial and audio cues.

- Navigation Assistance: Combine visual and spoken instructions dynamically.

Impact: Improves driver safety, ensures comfort, and supports semi-autonomous vehicle operation.

Example: Mercedes-Benz MBUX is a multimodal in-car assistant that understands natural speech, gestures, and visual cues, enabling intuitive and context-aware interaction between driver and vehicle.

7. Entertainment and Media

In entertainment, multimodal AI enables creative automation and interactive experiences by combining text, visuals, and audio understanding for storytelling and content creation.

Use Cases:

- AI Storytelling: Generate narratives using speech and visual prompts.

- Content Moderation: Detect unsafe material in video and audio.

- Video Generation: Create media from text-based scripts.

Impact: Accelerates content production, boosts creativity, and enhances audience engagement.

Example: Synthesia.io uses multimodal AI to generate lifelike videos from text scripts, synchronizing visuals, speech, and expressions for professional content creation without human actors.

8. Customer Service

Multimodal AI revolutionizes customer support by integrating text, speech, and visual inputs to understand problems more accurately and provide faster, empathetic responses.

Use Cases:

- Virtual Support Agents: Handle voice and text interactions.

- Visual Troubleshooting: Analyze uploaded images or screenshots.

- Sentiment Analysis: Detect tone and emotion in speech.

Impact: Enhances response accuracy, reduces resolution time, and improves overall customer satisfaction.

Example: LivePerson Conversational Cloud uses multimodal AI for customer support via text, voice, and images. It detects intent, analyzes sentiment, and uses visual context for personalized, real-time support across channels.

9. Security and Surveillance

In the security domain, multimodal AI combines vision, audio, and biometric data to detect threats, authenticate identities, and automate incident analysis.

Use Cases:

- Anomaly Detection: Analyze video and audio for unusual behavior.

- Access Control: Authenticate users via face and voice recognition.

- Incident Reporting: Summarize surveillance data automatically.

Impact: Increases threat detection accuracy, strengthens security systems, and speeds up response times.

Example: SenseTime employs multimodal AI for intelligent surveillance, integrating video, voice, and behavioral data to detect anomalies and improve situational awareness in real time.

10. Agriculture

Multimodal AI supports modern agriculture by analyzing environmental data, satellite imagery, and voice queries to optimize crop management and resource usage.

Use Cases:

- Crop Health Monitoring: Detecting issues using images and sensors.

- Voice-Based Farm Assistance: Farmers ask questions and share field images.

- Predictive Analytics: Forecasting yield using multimodal data inputs.

Impact: Boosts productivity, reduces resource waste, and promotes sustainable farming practices.

Example: John Deere See & Spray uses computer vision and AI to identify weeds and target herbicide use precisely, combining visual and environmental data for smarter crop management.

How IdeaUsher Can Help You Build a Multimodal Conversational AI Solution

Building a multimodal conversational AI requires expertise in AI modeling, NLP, computer vision, voice processing, and user experience. IdeaUsher integrates these to help businesses create intelligent, interactive solutions that enhance customer engagement.

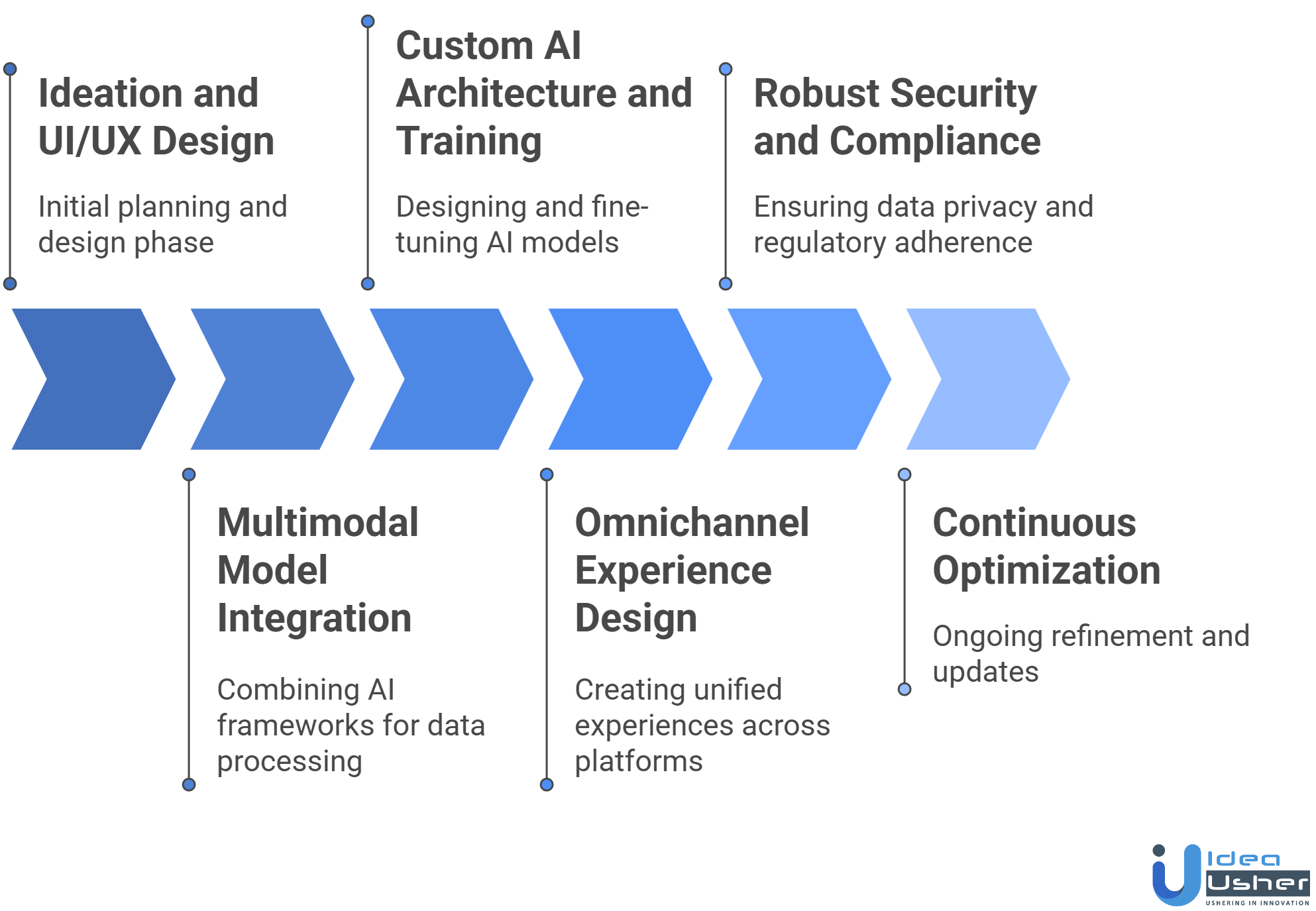

1. End-to-End Product Development

IdeaUsher manages the entire process, starting with ideation and UI/UX design and continuing through model integration and deployment. Our team brings together all interaction channels, whether text, voice, video, gesture, or image, into a consistent, human-centered experience.

2. Multimodal Model Integration

We use advanced AI frameworks like GPT, Whisper, and CLIP to process voice, text, and visual data at the same time. This helps your platform understand real-world context, including what users say, how they say it, and what they show.

3. Custom AI Architecture & Training

Our engineers design scalable architectures powered by neural networks, transformers, and large language models. We also fine-tune models using your proprietary datasets to ensure accurate intent detection and domain-specific intelligence.

4. Omnichannel Experience Design

From mobile apps to web portals and smart devices, IdeaUsher creates unified experiences across every digital touchpoint. This approach helps users stay engaged across platforms, which leads to higher retention and greater satisfaction.

5. Robust Security & Compliance

Every solution we build is designed to comply with regulations and standards such as GDPR, HIPAA, and relevant ISO security standards. Data privacy, encryption, and secure API integrations are prioritized to maintain user trust and regulatory compliance.

6. Continuous Optimization & Support

Post-launch, IdeaUsher provides model monitoring, performance optimization, and ongoing updates. We help refine your multimodal AI based on user behavior analytics and feedback to ensure consistent improvement over time.

Estimated Development Cost Breakdown

The cost of developing a multimodal conversational AI platform depends on its complexity, feature scope, AI integrations, and supported input modes (text, voice, image, and video). Below is an estimated breakdown of development costs across various phases for a full-scale, production-ready solution.

| Development Phase | Description | Estimated Cost |
| --- | --- | --- |
| Consultation | Requirement analysis and architecture planning for multimodal use cases. | $6,000 – $12,000 |
| UI/UX & Experience Design | Designing intuitive interfaces for cross-platform interactions. | $10,000 – $18,000 |
| Multimodal Model Integration | Integrating GPT, Whisper, and CLIP models for text, voice, and vision. | $20,000 – $40,000 |
| Custom AI Architecture & Training | Building scalable neural models and fine-tuning with domain data. | $15,000 – $30,000 |
| Omnichannel Integration | Connecting to mobile, web, and smart devices for unified communication. | $8,000 – $15,000 |
| Security & Compliance | Implementing GDPR/HIPAA standards, encryption, and secure APIs. | $5,000 – $9,000 |
| Testing & Deployment | QA testing and deployment on cloud or hybrid environments. | $4,000 – $8,000 |
| Post-Launch Support | Ongoing monitoring, optimization, and feature updates. | $2,000 – $6,000/month |

Total Estimated Cost: $68,000 – $132,000 for the one-time phases, plus $2,000 – $6,000 per month for post-launch support
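One way to sanity-check a total like this is to sum the per-phase ranges from the table, keeping the recurring monthly support line separate from the one-time phases. The sketch below copies the figures from the table. The function name and the idea of adding a chosen number of support months are illustrative assumptions.

```python
# One-time phase ranges (low, high) in USD, copied from the table above.
ONE_TIME_PHASES = {
    "Consultation": (6_000, 12_000),
    "UI/UX & Experience Design": (10_000, 18_000),
    "Multimodal Model Integration": (20_000, 40_000),
    "Custom AI Architecture & Training": (15_000, 30_000),
    "Omnichannel Integration": (8_000, 15_000),
    "Security & Compliance": (5_000, 9_000),
    "Testing & Deployment": (4_000, 8_000),
}

# Recurring cost per month of post-launch support (low, high) in USD.
MONTHLY_SUPPORT = (2_000, 6_000)

def total_range(months_of_support=0):
    """Sum the one-time phases and add the chosen number of support months."""
    low = sum(lo for lo, _ in ONE_TIME_PHASES.values())
    high = sum(hi for _, hi in ONE_TIME_PHASES.values())
    low += months_of_support * MONTHLY_SUPPORT[0]
    high += months_of_support * MONTHLY_SUPPORT[1]
    return low, high

print(total_range())                      # (68000, 132000)
print(total_range(months_of_support=1))   # (70000, 138000)
```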

Consult with IdeaUsher to receive a customized estimate tailored to your specific multimodal AI vision, platform requirements, and long-term scalability goals.

Conclusion

The rise of Multimodal Conversational AI is transforming how users and businesses interact, making communication more intuitive, engaging, and efficient. By combining voice, text, vision, and gestures, it creates richer, more human-like experiences across industries. Whether used in customer support, healthcare, or e-commerce, this technology enhances personalization and understanding at every interaction. As businesses continue to prioritize seamless user experiences, adopting multimodal AI can unlock new possibilities for automation, accessibility, and customer satisfaction in a rapidly evolving digital landscape.


FAQs

Q1: What is Multimodal Conversational AI?

A1: Multimodal Conversational AI combines multiple forms of communication such as text, voice, images, and gestures to create a more natural and interactive user experience. It processes diverse inputs simultaneously to deliver context-aware and human-like responses.

Q2: How does Multimodal Conversational AI benefit businesses?

A2: It helps businesses improve customer engagement by offering faster, more personalized interactions. By understanding multiple input modes, companies can enhance support efficiency, strengthen user satisfaction, and create more immersive digital experiences that drive long-term customer loyalty.

Q3: Which industries benefit most from Multimodal Conversational AI?

A3: Industries like healthcare, e-commerce, education, and customer service benefit significantly. The technology enables more intuitive communication, streamlines processes, and enhances accessibility, making it easier to serve customers and provide support across multiple touchpoints effectively.

Q4: How is Multimodal Conversational AI different from traditional chatbots?

A4: Unlike traditional chatbots that rely solely on text, Multimodal Conversational AI processes various input types for deeper understanding and engagement. It allows users to interact naturally, improving accuracy, emotional context, and the overall conversational experience.