Businesses face a relentless flow of decisions, from interpreting customer feedback and optimizing operations to anticipating market shifts. Despite vast datasets and automation tools, many still struggle to turn information into insight and intent into action. LLM Enterprise AI is changing that, bridging the gap between static automation and true intelligent decision-making.

Powered by large language models, agentic AI adds a new dimension to enterprise intelligence. It doesn’t just process data; it understands context, learns patterns, and takes initiative. From managing complex workflows and drafting personalized communications to autonomously improving processes, LLM-driven agents are evolving into trusted collaborators rather than mere tools.

In this blog, we’ll explore how LLM-driven agentic AI is transforming the business landscape. From understanding its architecture to identifying use cases and implementation strategies, we’ll uncover how enterprises can build scalable, context-aware AI systems that drive measurable transformation and future-ready innovation.

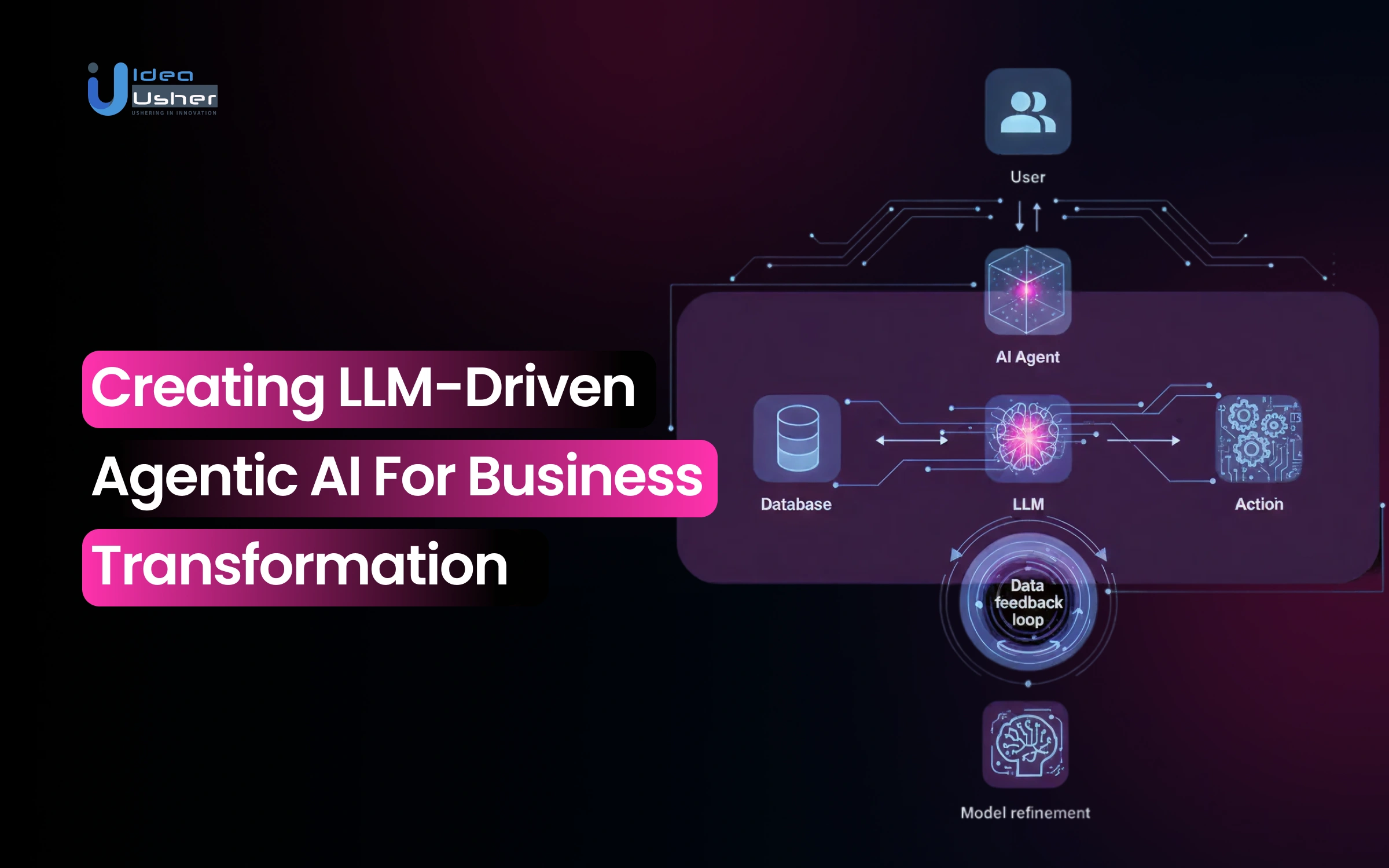

What is an LLM-Driven Agentic AI?

An LLM-driven agentic AI is an AI system powered by a Large Language Model (LLM) and capable of autonomous, goal-directed action. Unlike traditional AI with predefined responses, it can reason, plan, and act independently based on context. It uses the language model’s cognitive skills, such as reasoning, memory, and adaptability, to make decisions and interact with digital environments, data, and humans.

These traits allow businesses to deploy LLM-based agentic AI for various uses like automation, decision support, customer engagement, and data-driven process improvement. In practice, LLM-driven systems act as intelligent agents that integrate:

- Cognitive Reasoning: Powered by an LLM, enabling deep understanding and logical planning.

- Memory Mechanisms: Retaining context and experiences to improve future performance.

- Tool and API Integrations: Allowing the AI to act in real-world digital environments, from automating reports to managing customer interactions.

- Feedback Loops: Helping the system learn from outcomes, self-correct, and optimize over time.

Core Components of an LLM-Driven Enterprise AI System

An LLM Enterprise AI functions through interconnected components that allow it to reason, plan, and act independently, with each element playing a vital role in understanding language, recalling context, executing actions, and improving through feedback.

| Component | Description | Purpose in the Business |
| --- | --- | --- |
| Large Language Model (LLM) | The cognitive core that understands natural language, generates responses, and performs reasoning. | Enables comprehension, contextual dialogue, and decision-making across varied tasks. |
| Memory System | Stores contextual information, past actions, and user interactions. | Provides continuity, learning, and personalization over time. |
| Planning & Reasoning Engine | Breaks down complex goals into actionable steps using logical and strategic reasoning. | Powers goal-oriented behavior and structured execution. |
| Tool & API Integrations | Connects the AI to external platforms, software, and data sources. | Allows the agent to perform real-world actions like data retrieval, automation, and reporting. |
| Perception & Input Layer | Processes diverse inputs such as text, documents, or voice commands. | Expands the AI’s ability to interpret and understand information from multiple sources. |
| Action Executor | Executes the tasks planned by the reasoning engine across connected systems. | Converts decisions into measurable outputs and completed workflows. |
| Feedback & Self-Correction Loop | Continuously monitors outcomes and compares them with intended results. | Ensures adaptive learning, accuracy improvement, and self-optimization. |
| Governance & Safety Layer | Implements policies, security measures, and compliance protocols. | Ensures ethical, transparent, and responsible AI operation within organizational standards. |
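As a rough illustration of how the components in the table could fit together, here is a minimal Python sketch of a single agent loop. Everything in it is an assumption made for illustration: `fake_llm_plan` is a stand-in for a real LLM call, and the tool names (`get_order_status`, `create_ticket`) and the `Agent` class are hypothetical, not part of any specific framework.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical tools: plain functions standing in for real API integrations.
def get_order_status(order_id: str) -> str:
    return f"Order {order_id} has shipped"


def create_ticket(summary: str) -> str:
    return f"Ticket created: {summary}"


TOOLS: dict[str, Callable[[str], str]] = {
    "get_order_status": get_order_status,
    "create_ticket": create_ticket,
}


def fake_llm_plan(goal: str) -> list[tuple[str, str]]:
    """Stand-in for the LLM planner: maps a goal to (tool, argument) steps."""
    if "order" in goal.lower():
        return [("get_order_status", "A-1001")]
    return [("create_ticket", goal)]


def is_allowed(tool_name: str) -> bool:
    """Governance layer (simplified): only registered tools may run."""
    return tool_name in TOOLS


@dataclass
class Agent:
    memory: list[dict] = field(default_factory=list)  # memory system

    def run(self, goal: str) -> list[str]:
        results = []
        for tool_name, arg in fake_llm_plan(goal):   # planning and reasoning
            if not is_allowed(tool_name):            # governance check
                results.append(f"Blocked: {tool_name}")
                continue
            output = TOOLS[tool_name](arg)           # action executor
            # Record the outcome so later steps (or a feedback loop) can use it.
            self.memory.append({"goal": goal, "tool": tool_name, "output": output})
            results.append(output)
        return results


agent = Agent()
print(agent.run("Where is my order?"))
```

In a real system the planner would call an LLM, and the memory would likely live in a database rather than a list, but the control flow (plan, check policy, act, record) stays similar.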

Why Are Businesses Moving Toward Agentic Systems?

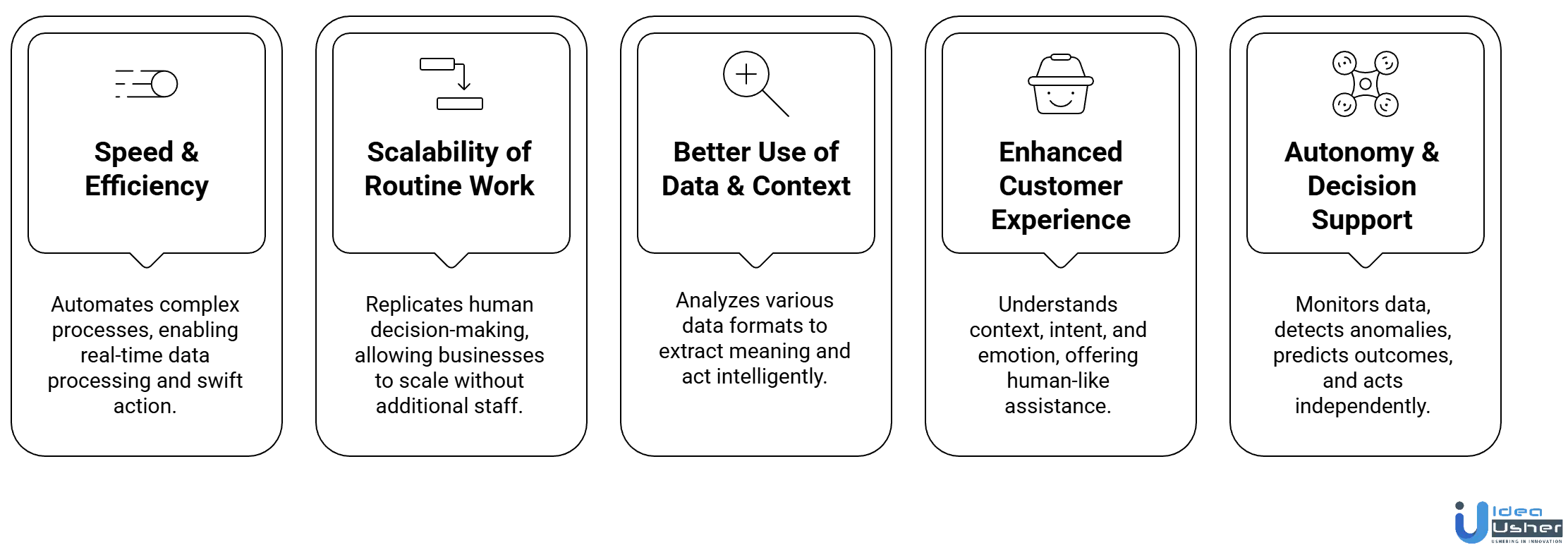

As businesses pursue digital transformation, agentic AI, meaning autonomous systems powered by Large Language Models (LLMs), is emerging as the next frontier. These systems interpret context, make decisions, and act independently, transforming operations. Here are the main reasons for adoption, with real-world examples.

1. Speed & Efficiency

LLM Enterprise AI enhances speed by automating complex multi-step processes, allowing real-time reading, interpretation, and action across systems. Using LLMs, businesses process data quickly, summarize insights, and act swiftly, freeing teams for strategic tasks.

Example: H&M employs an LLM virtual assistant for product queries, outfit recommendations, and customer requests. It handles 70% of inquiries independently, reducing response times threefold and increasing sales by 25%.

2. Scalability of Routine Work

As organizations grow, repetitive tasks like data entry, reporting, or customer support scale with business volume. Agentic AI uses intelligent agents to replicate human decision-making, allowing businesses to scale without extra staff, ensuring consistent performance, lower costs, and seamless global experiences.

Example: Bank of America’s “Erica” is an AI-powered virtual financial assistant that automates millions of daily customer interactions, from balance inquiries to transaction tracking, freeing up human agents for complex cases. The system has already handled over one billion customer engagements.

3. Better Use of Data & Context

Traditional automation systems struggle with unstructured data, but LLM Enterprise AI analyzes various data formats like documents, conversations, and logs to extract meaning and act intelligently. This enables organizations to turn fragmented data into actionable insights, making operations more responsive and data-driven.

Example: Ascendion’s AAVA platform utilizes LLM-driven agents to analyze project data, code documentation, and logs, automating software design, testing, and deployment, enhancing speed and reducing errors.

4. Enhanced Customer Experience

Customers expect real-time, personalized support. LLM Enterprise AI understands context, intent, and emotion, offering human-like assistance with accuracy and consistency. Businesses can scale engagement, maintain personalization, and improve satisfaction and retention.

Example: Walmart’s “AI Super Agents” integrate LLMs into shopping, supplier, and employee support channels to provide smarter recommendations, streamline purchasing, and enhance the retail experience for millions of customers globally.

5. Autonomy & Decision Support

Agentic systems represent a shift from reactive to proactive intelligence. These AIs monitor data, detect anomalies, predict outcomes, and act independently, providing real-time support and resilience. This autonomy lessens reliance on humans, enhancing organizational adaptability.

Example: HubSpot’s “Breeze Agents” work independently in its CRM to generate content, handle leads, and respond to inquiries. They learn from interactions, allowing faster responses and smarter decisions for marketers and support teams.

Use Cases of LLM-Driven Agentic AI in Modern Businesses

The next wave of AI transformation involves autonomous, LLM-driven enterprise agents that can plan, act, and execute tasks independently, reshaping how organizations work and innovate across industries. Let’s explore how businesses in different sectors are harnessing agentic AI to unlock real-world impact.

1. Enterprise Productivity & Operations

Large enterprises are moving beyond simple AI assistants to fully functional autonomous agents that integrate across platforms, automate workflows, and manage decision-making at scale.

Microsoft Copilot + Power Platform Agents: Microsoft’s ecosystem enables organizations to deploy task-specific AI agents via Copilot Studio and Power Automate. These agents reduce administrative workload by over 30% by handling repetitive tasks, summarizing meetings, and streamlining project management.

Notion & ClickUp AI Work Agents: These productivity platforms now feature AI agents that automatically plan projects, generate documentation, and update timelines, freeing up teams to focus on strategic execution instead of manual coordination.

2. Finance & Banking

In financial services, where accuracy, compliance, and speed are paramount, LLM-driven agents are proving invaluable for risk assessment, client advisory, and software optimization.

JPMorgan Chase – “LLM Suite”: JPMorgan’s proprietary agentic ecosystem supports fraud detection, compliance monitoring, and market research automation. It has been credited with major efficiency gains and earned recognition as Innovation of the Year (American Banker, 2025).

Morgan Stanley – WealthGPT: Built in partnership with OpenAI, this AI agent assists financial advisors with personalized portfolio insights and client communication, cutting research time dramatically while improving advisory accuracy.

3. Healthcare & Life Sciences

Agentic AI is helping healthcare organizations unlock the potential of vast, complex medical data to improve diagnostics, patient care, and clinical efficiency.

Mayo Clinic Platform: The Mayo Clinic uses AI agents to extract insights from unstructured medical records, aiding clinicians in diagnosis and treatment recommendations. Early programs have seen a 20% improvement in diagnostic accuracy.

Tempus One: This precision medicine company leverages LLM agents to query millions of genomic and clinical documents in seconds, identifying optimal cancer treatment options, a massive leap from traditional manual review processes.

4. Retail & E-Commerce

In retail, agentic AI is creating smarter, self-optimizing systems that handle pricing, marketing, and customer engagement, all without human intervention.

Amazon: Amazon uses AI-driven agents to optimize pricing, manage logistics, and generate marketing copy dynamically. This has helped reduce inventory waste and improve profit margins through smarter, real-time decisions.

Shopify: Shopify’s “Sidekick” acts as an autonomous business co-pilot, setting up online stores, crafting campaigns, and managing customer queries automatically. For small business owners, it’s like having a virtual operations manager 24/7.

5. Automotive & Manufacturing

Manufacturers are turning to LLM agents to power predictive maintenance, supply chain optimization, and workforce management, creating leaner and more responsive production systems.

BMW Group: BMW’s “AI Factory” initiative deploys LLM-driven agents for maintenance scheduling, supply coordination, and shift optimization, resulting in a 25% reduction in operational downtime.

Tesla: Tesla’s in-house AI agents continuously analyze performance data to propose and test improvements for Autopilot and manufacturing processes, accelerating innovation through constant feedback loops.

6. Software Development & Technology

Agentic AI is revolutionizing how code is written, tested, and deployed. Software companies are now embracing autonomous developer agents that can manage entire project lifecycles.

Cognition Labs – Devin: Dubbed the world’s first fully autonomous software engineer, Devin can plan, code, debug, and deploy applications independently. Startups using Devin report faster development cycles and reduced need for large engineering teams.

Replit – Ghostwriter Agents: Replit’s AI agents automatically fix bugs, refactor code, and generate pull requests, making collaborative development smoother and more efficient for distributed teams.

7. Travel & Hospitality

Travel platforms are embracing agentic AI to provide real-time personalization and dynamic itinerary management for travelers.

Expedia & Booking.com: Their AI travel concierges can now autonomously plan trips, adjust bookings, and handle customer service interactions, creating a seamless, intelligent travel experience from search to stay.

Why Are 60% of Businesses Adopting LLM-Based Agentic AI Platforms?

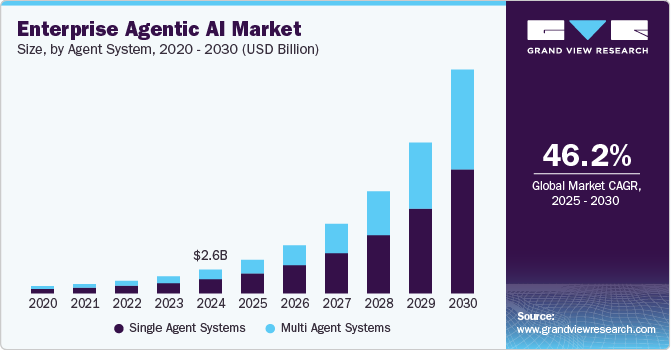

The global LLM Enterprise Agentic AI market was valued at USD 2.58 billion in 2024 and is projected to reach USD 24.50 billion by 2030, growing at a remarkable CAGR of 46.2% from 2025 to 2030. This is a clear indicator that agentic AI is rapidly becoming the next defining wave of enterprise transformation.

According to NVIDIA, more than 60% of enterprises are already using or expanding LLM-powered agents in different business areas, from internal operations to customer engagement. This shows that intelligent, autonomous systems are becoming a regular part of enterprise workflows.

Agentic AI Driving the Future of Enterprise Efficiency

Businesses are now recognizing the benefits of LLM-based agentic AI platforms. These systems understand context, reason autonomously, and act securely, helping organizations scale quickly while ensuring compliance and trust.

- Enterprise Efficiency: McKinsey reports that generative LLM solutions can improve customer-care productivity by 30 – 45%, freeing human teams to focus on higher-value tasks.

- Faster ROI: ServiceNow achieved an estimated USD 10 million in annualized benefits within just 120 days of implementing its internal LLM-powered “Now Assist” system, showing how quickly these platforms deliver measurable returns.

- Automation in Action: Grant Thornton, using “Aisera”, has reported a 75% auto-resolution rate and up to 90% faster resolution times, showing that agentic AI can augment human work at scale.

The Next Big Opportunity in Enterprise AI

While adoption is accelerating, the LLM-based agentic AI market is still in its early growth phase, leaving immense room for innovators and platform builders.

Enterprises are now seeking secure, compliant, and customizable agentic AI ecosystems that can integrate seamlessly into existing operations, especially in sectors like finance, healthcare, manufacturing, and logistics.

For new entrants, this represents a rare window of opportunity: to develop trustworthy agentic platforms that combine data privacy, governance, and industry-grade intelligence and capture a share of a market set to grow nearly 10× by 2030.

Key Features That Enable Business Transformation

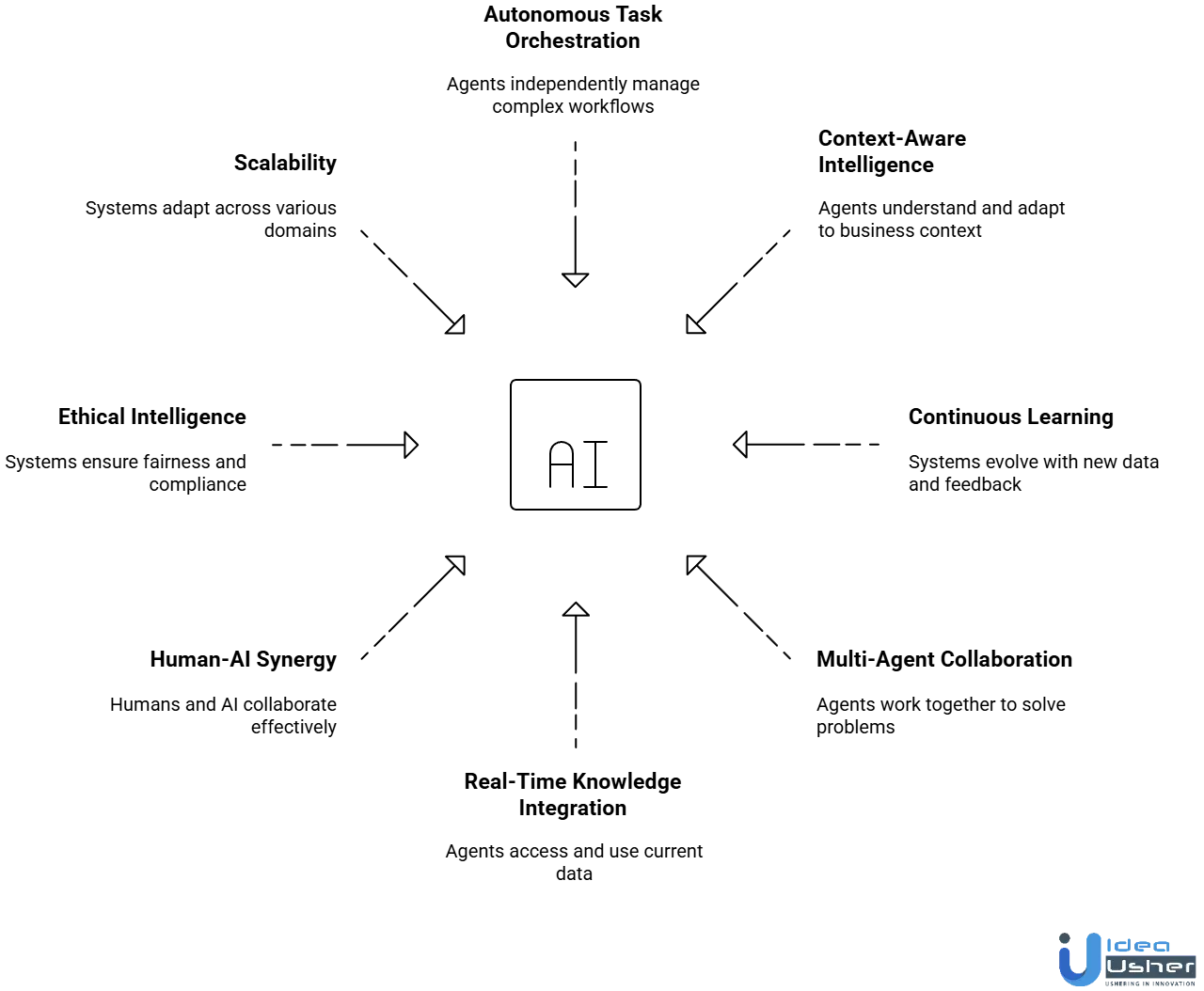

LLM Enterprise AI systems are transforming organizations by enabling adaptive decision-making through language intelligence and autonomous reasoning. These features show how agentic architectures foster significant business change across industries.

1. Autonomous Task Orchestration

Agentic systems can independently plan, prioritize, and execute complex workflows across multiple business functions. This autonomy reduces manual oversight, accelerates operations, and ensures consistency in decision-making while maintaining alignment with organizational objectives.

2. Context-Aware Intelligence

Through advanced language understanding and knowledge grounding, LLM-driven agents interpret business context, intent, and nuance. This allows them to adapt behavior dynamically, respond intelligently to evolving situations, and provide accurate insights rooted in real-world understanding.

3. Continuous Learning & Adaptation

Unlike static automation systems, agentic AI continuously learns from interactions, data updates, and feedback. This self-improving mechanism ensures that the system evolves alongside business environments, leading to sustained performance and innovation over time.

4. Multi-Agent Collaboration & Coordination

Agentic architectures often consist of interconnected agents that communicate, delegate tasks, and share knowledge. This collaborative structure enables distributed problem-solving and allows the system to manage complex, multi-step business operations efficiently and reliably.

5. Real-Time Knowledge Integration

LLM Enterprise AI integrates with real-time enterprise data streams, documents, and external sources. By retrieving and reasoning over the most current information, it enables faster, data-driven decisions and enhances organizational agility in rapidly changing markets.

6. Human-AI Synergy & Transparency

Agentic AI promotes effective collaboration between humans and intelligent systems. Transparent decision explanations, interactive feedback channels, and oversight controls allow human experts to guide, audit, and refine AI behavior, creating trust and accountability in every decision.

7. Ethical & Compliant Intelligence

Modern agentic systems are designed with governance and compliance frameworks that ensure fairness, privacy, and ethical integrity. This not only builds confidence but also safeguards organizations against regulatory risks and reputational harm.

8. Scalability Across Business Domains

The modular design of LLM-driven agentic architectures enables seamless scaling across departments, industries, and use cases. Once deployed in one domain, these systems can be rapidly adapted to new functions, unlocking cross-functional efficiency and enterprise-wide transformation.

Creating LLM-Driven Agentic AI for Business Transformation

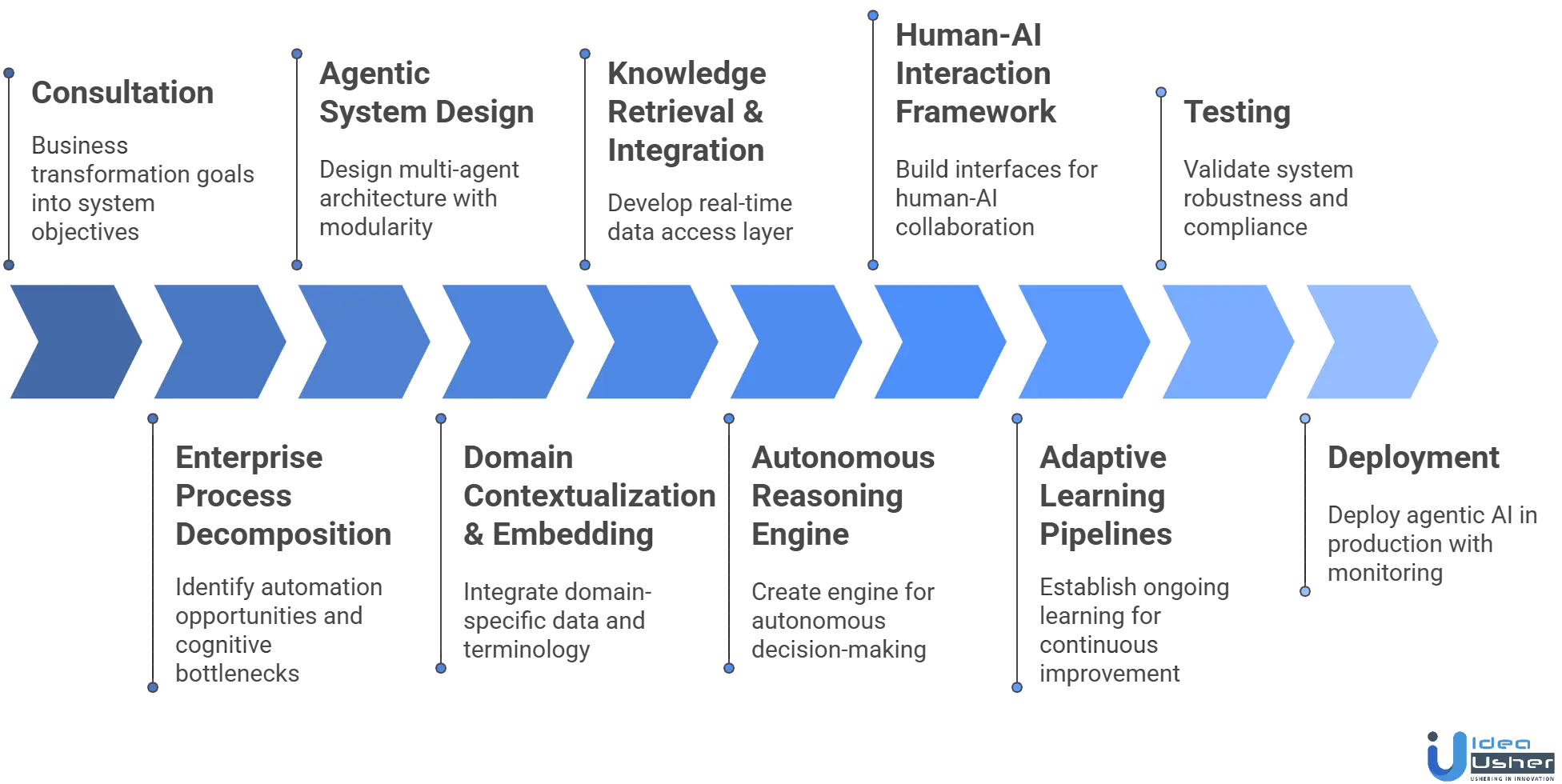

Creating an LLM Enterprise AI requires strategic design and domain expertise. Each phase aligns technical precision with business objectives, enabling task automation, context understanding, intelligent reasoning, and continuous improvement.

Below is the development process that our team follows to build scalable, intelligent agentic AI systems.

1. Consultation

We start by consulting with you to translate business transformation goals into system objectives. Our developers work with you to define measurable outcomes, such as optimizing decision-making, enhancing customer experience, or improving operational efficiency, so that all design and engineering decisions support strategic priorities.

2. Enterprise Process Decomposition

Our team performs process decomposition to find automation opportunities and cognitive bottlenecks. We audit workflows, decision structures, and knowledge sources to identify where agentic behavior adds value. This guides the architecture and data needs for intelligent autonomy.

3. Agentic System Design

We design a multi-agent architecture where each agent has specific roles like planning, coordination, or execution, connected through structured communication protocols. The design emphasizes modularity, enabling agents to collaborate dynamically while remaining independent and goal-oriented.
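A multi-agent design like this can be sketched in a few lines of Python. The example below is only an illustration under stated assumptions: the `Message` format, the `PlannerAgent` and `ExecutorAgent` classes, and the semicolon-separated goal syntax are all hypothetical, and the planner uses simple string splitting where a real system would call an LLM.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Message:
    """Structured message passed between agents (hypothetical protocol)."""
    sender: str
    recipient: str
    task: str


class PlannerAgent:
    name = "planner"

    def handle(self, msg: Message) -> list[Message]:
        # Split a goal of the form "a; b; c" into one message per step.
        steps = [s.strip() for s in msg.task.split(";") if s.strip()]
        return [Message(self.name, "executor", step) for step in steps]


class ExecutorAgent:
    name = "executor"

    def __init__(self) -> None:
        self.completed: list[str] = []

    def handle(self, msg: Message) -> list[Message]:
        # A real executor would call tools or APIs here.
        self.completed.append(msg.task)
        return []


def run_agents(goal: str, agents: dict) -> None:
    """Deliver messages until no agent has anything left to send."""
    queue = deque([Message("user", "planner", goal)])
    while queue:
        msg = queue.popleft()
        queue.extend(agents[msg.recipient].handle(msg))


planner, executor = PlannerAgent(), ExecutorAgent()
run_agents(
    "pull sales data; draft report; email summary",
    {"planner": planner, "executor": executor},
)
print(executor.completed)
```

Because agents only communicate through `Message` objects, each one can be replaced or extended without touching the others, which is the modularity the design aims for.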

4. Domain Contextualization & Embedding

To ensure accurate AI operation in a business domain, our developers perform domain contextualization by integrating proprietary datasets, ontologies, and terminology. This enhances the LLM’s ability to interpret domain-specific intent, respond precisely, and adhere to standards and regulations.

5. Knowledge Retrieval & Integration

We develop a knowledge retrieval layer that links the AI to structured and unstructured enterprise data. Using contextual indexing and semantic search pipelines, it retrieves relevant information in real time, so agents can reason and act on current organizational knowledge and make adaptive, informed decisions.
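To show the basic idea behind a retrieval layer, here is a small, self-contained sketch. It is deliberately simplified: it ranks documents by bag-of-words cosine similarity using only the standard library, where a production system would more likely use learned embeddings and a vector database. The documents, `DOCUMENTS`, and the `retrieve` function are hypothetical examples.

```python
import math
import re
from collections import Counter

# Hypothetical enterprise documents keyed by ID.
DOCUMENTS = {
    "refund_policy": "Refunds are issued within 14 days of a returned purchase.",
    "shipping_faq": "Standard shipping takes 3 to 5 business days.",
    "security_note": "All customer data is encrypted at rest and in transit.",
}


def vectorize(text: str) -> Counter:
    """Turn text into a word-count vector (a stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Index once, query many times.
INDEX = {doc_id: vectorize(text) for doc_id, text in DOCUMENTS.items()}


def retrieve(query: str, top_k: int = 1) -> list[str]:
    """Return the IDs of the top_k documents most similar to the query."""
    q = vectorize(query)
    ranked = sorted(INDEX, key=lambda d: cosine(q, INDEX[d]), reverse=True)
    return ranked[:top_k]


print(retrieve("How long does shipping take?"))
```

The retrieved text would then be passed to the LLM as context, so the agent answers from current organizational knowledge rather than from its training data alone.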

6. Autonomous Reasoning Engine

Our engineers create a reasoning engine that enables agents to think and act autonomously by implementing decision policies, inference mechanisms, and prioritization frameworks. This reasoning layer shifts AI from reactive to proactive decision-making.
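One simple way to picture a decision policy combined with a prioritization framework is a priority queue with a rule attached. The sketch below is an illustration only: the `Goal` fields, the `MAX_AUTONOMOUS_COST` threshold, and the "execute or escalate" rule are assumptions chosen for the example, not a description of a specific reasoning engine.

```python
import heapq
from dataclasses import dataclass, field

MAX_AUTONOMOUS_COST = 500.0  # hypothetical policy limit for unattended actions


@dataclass(order=True)
class Goal:
    priority: int                                    # lower number = more urgent
    name: str = field(compare=False)
    estimated_cost: float = field(compare=False, default=0.0)


def decide(goals: list[Goal]) -> list[tuple[str, str]]:
    """Process goals in priority order and apply the cost policy to each."""
    heap = list(goals)
    heapq.heapify(heap)
    decisions = []
    while heap:
        goal = heapq.heappop(heap)
        # Decision policy: act alone on cheap goals, escalate expensive ones.
        action = "execute" if goal.estimated_cost <= MAX_AUTONOMOUS_COST else "escalate"
        decisions.append((goal.name, action))
    return decisions


print(decide([
    Goal(2, "restock item", 200.0),
    Goal(1, "issue refund", 900.0),
    Goal(3, "send weekly report"),
]))
```

Keeping the policy as explicit code next to the LLM makes the agent's limits easy to audit and change.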

7. Human-AI Interaction Framework

We create robust interfaces and supervision tools for seamless collaboration between humans and AI. Emphasizing transparency, users can review decisions, offer corrections, and influence reasoning through structured feedback. This hybrid model balances autonomy with human oversight, ensuring reliability and ethical alignment.
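The review-and-correct flow described above could look roughly like the following. This is a minimal sketch, assuming a hypothetical `ReviewQueue` in which every agent proposal waits for a human to approve or correct it; the class, field names, and sample decisions are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    decision_id: int
    proposal: str               # what the agent wants to do
    rationale: str              # explanation shown to the human reviewer
    status: str = "pending"     # pending -> approved | corrected
    final_action: Optional[str] = None


class ReviewQueue:
    """Holds agent proposals until a human approves or corrects them."""

    def __init__(self) -> None:
        self._decisions: dict[int, Decision] = {}
        self.feedback_log: list[dict] = []   # structured feedback for later learning

    def submit(self, proposal: str, rationale: str) -> Decision:
        decision = Decision(len(self._decisions) + 1, proposal, rationale)
        self._decisions[decision.decision_id] = decision
        return decision

    def review(self, decision_id: int, correction: Optional[str] = None) -> Decision:
        decision = self._decisions[decision_id]
        decision.status = "corrected" if correction else "approved"
        decision.final_action = correction or decision.proposal
        self.feedback_log.append(
            {"id": decision_id, "proposal": decision.proposal, "final": decision.final_action}
        )
        return decision


queue = ReviewQueue()
first = queue.submit("Refund $40 to customer 881", "Item arrived damaged")
second = queue.submit("Close ticket 17", "No reply for 30 days")
queue.review(first.decision_id)                                   # human approves
queue.review(second.decision_id, correction="Send one reminder")  # human overrides
print([(d.status, d.final_action) for d in (first, second)])
```

The `feedback_log` is the piece that connects oversight to improvement: each correction is kept in a structured form that later training or evaluation can use.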

8. Adaptive Learning Pipelines

To sustain long-term performance, we establish ongoing learning pipelines where agents get feedback from interactions, system results, and user reviews. Through reinforcement learning and fine-tuning, the system gradually enhances reasoning, contextual awareness, and decision-making without manual retraining.
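As one possible shape for the data side of such a pipeline, the sketch below turns collected feedback into fine-tuning examples. The record fields (`prompt`, `response`, `rating`, `correction`), the rating threshold, and the prompt/completion JSONL layout are assumptions for illustration; the actual format depends on the model provider or training framework in use.

```python
import json


def build_finetune_examples(feedback: list[dict], min_rating: int = 4) -> list[dict]:
    """Keep human corrections and highly rated responses as training pairs."""
    examples = []
    for record in feedback:
        if record.get("correction"):
            target = record["correction"]      # a human fix is the best label
        elif record["rating"] >= min_rating:
            target = record["response"]        # good response, keep as-is
        else:
            continue                           # low rating with no fix: skip
        examples.append({"prompt": record["prompt"], "completion": target})
    return examples


def to_jsonl(examples: list[dict]) -> str:
    """Serialize examples as one JSON object per line."""
    return "\n".join(json.dumps(example) for example in examples)


feedback = [
    {"prompt": "Summarize ticket 12", "response": "Printer offline.", "rating": 5},
    {"prompt": "Draft reply to Ana", "response": "No.", "rating": 1,
     "correction": "Hi Ana, thanks for reaching out. We are looking into it."},
    {"prompt": "Classify invoice 7", "response": "Unknown", "rating": 2},
]
examples = build_finetune_examples(feedback)
print(to_jsonl(examples))
```

Filtering at this stage matters because low-quality examples fed back into training can degrade the system instead of improving it.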

9. Testing

Before deployment, our QA teams validate the system’s robustness, bias controls, and compliance by simulating operational scenarios to check fairness and resilience. Ethical review frameworks verify that agent behavior follows the applicable standards.

10. Deployment

Finally, we deploy the agentic AI to production through monitored rollouts. Observability tools track performance, errors, and reasoning traces in real time. Once stable, the system scales across departments, gains new capabilities, and is tuned toward business goals.
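A lightweight way to see what observability means in code is a decorator that records calls, errors, and latency for each agent action. This is a sketch using only the standard library; the `observed` decorator, the in-memory `METRICS` store, and `summarize_ticket` are hypothetical, and a production deployment would typically export such numbers to a dedicated monitoring system.

```python
import functools
import time
from collections import defaultdict

# In-memory metrics store: one counter set per observed function.
METRICS = defaultdict(lambda: {"calls": 0, "errors": 0, "total_seconds": 0.0})


def observed(func):
    """Record call count, error count, and cumulative latency for func."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        stats = METRICS[func.__name__]
        stats["calls"] += 1
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        except Exception:
            stats["errors"] += 1
            raise
        finally:
            stats["total_seconds"] += time.perf_counter() - start
    return wrapper


@observed
def summarize_ticket(text: str) -> str:
    """Hypothetical agent action; a real one would call an LLM."""
    if not text:
        raise ValueError("empty ticket")
    return text[:40]


summarize_ticket("Printer offline on floor 3")
try:
    summarize_ticket("")
except ValueError:
    pass  # the failure is still counted in METRICS

print(dict(METRICS["summarize_ticket"]))
```

Tracking error rates per action during a staged rollout gives a concrete signal for when it is safe to expand the agent to more departments.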

Cost to Create LLM-Based Agentic Enterprise AI

Creating an LLM Enterprise AI goes beyond simply utilizing a language model; it needs a scalable system that can act, reason, and integrate with business workflows. Below is a cost breakdown table highlighting key components and estimated expenses for development and deployment.

| Development Phase | Description | Estimated Cost |
| --- | --- | --- |
| Consultation | Business goal assessment, AI use case definition, and technical feasibility analysis. | $4,000 – $8,000 |
| Enterprise Process Decomposition | Workflow and data audit to identify automation areas and define agent roles. | $10,000 – $15,000 |
| Agentic System Design | Architectural planning for multi-agent structures and decision logic. | $13,000 – $19,000 |
| Domain Contextualization & Embedding | Incorporating domain data and terminology to enhance contextual understanding. | $13,000 – $22,000 |
| Knowledge Retrieval & Integration | Building data pipelines and retrieval frameworks for real-time knowledge access. | $10,000 – $16,000 |
| Autonomous Reasoning Engine | Implementing reasoning logic and goal evaluation for autonomous decision-making. | $17,000 – $30,000 |
| Human-AI Interaction Framework | Designing user interfaces and feedback systems for collaboration and oversight. | $10,000 – $14,000 |
| Adaptive Learning Pipelines | Setting up continuous learning and feedback loops for system improvement. | $11,000 – $18,000 |
| Testing | Functional, ethical, and compliance testing under simulated scenarios. | $10,000 – $16,000 |
| Deployment | Production launch, monitoring, and scalability implementation. | $11,000 – $17,000 |

Total Estimated Cost: $109,000 – $175,000

Note: The development cost of an LLM-driven agentic enterprise AI varies based on project scale, data diversity, integration complexity, and performance requirements, as well as customization and optimization needs.

Consult with IdeaUsher for a personalized estimate and end-to-end strategy to develop a secure, adaptive, and enterprise-grade agentic AI platform aligned with your business goals and industry standards.

Tech Stack Recommendations for LLM Enterprise AI

Building an LLM-driven Agentic AI requires a flexible, secure tech stack that enables natural language understanding, autonomous reasoning, data integration, and continuous learning while ensuring scalability, interoperability, and data privacy.

Below are the recommended components of the tech stack categorized by function.

1. Foundation Models (LLMs)

These serve as the cognitive core of the agentic AI, enabling understanding, reasoning, and communication.

- Recommended Options: OpenAI GPT series, Anthropic Claude, Meta LLaMA, Mistral, Google Gemini, or fine-tuned open-source models like Falcon or Zephyr.

- Purpose: Natural language processing, reasoning, and dialogue generation for intelligent decision-making.

2. Agent Frameworks and Orchestration

Frameworks that manage multiple agents, define roles, and enable collaboration across workflows.

- Recommended Options: LangChain, AutoGen, Semantic Kernel, CrewAI, or Haystack Agents.

- Purpose: Multi-agent orchestration, reasoning chains, and task automation pipelines.

3. Knowledge Management and Retrieval

Tools for integrating structured and unstructured enterprise data into the agentic ecosystem.

- Recommended Options:

  - Vector Databases: Pinecone, Weaviate, Milvus, or Chroma.

  - Knowledge Graphs: Neo4j, ArangoDB, or Stardog.

- Purpose: Contextual retrieval, data grounding, and semantic search capabilities.

4. Data Processing and Integration Layer

Infrastructure for managing, cleaning, and connecting enterprise data sources.

- Recommended Options: Apache Kafka, Airbyte, Databricks, or AWS Glue.

- Purpose: Real-time data streaming, transformation, and integration across systems.

5. Autonomous Reasoning and Decision Engine

The reasoning layer that powers adaptive and context-aware decision-making.

- Recommended Options: Custom rule-based engines, ReAct frameworks, or hybrid neural-symbolic reasoning systems.

- Purpose: Enables agents to evaluate context, prioritize goals, and make decisions autonomously.

6. Human-AI Interaction Layer

Interfaces that facilitate transparent collaboration between human users and AI agents.

- Recommended Options: Streamlit, Gradio, Next.js, or React for UI; FastAPI or Flask for backend APIs.

- Purpose: Provides interactive dashboards, conversation interfaces, and feedback systems.

Challenges & Solutions in LLM Enterprise AI Development

LLM-driven agentic AI offers businesses the ability to automate complex workflows, make intelligent decisions, and scale operations efficiently. However, building these systems comes with significant challenges that must be addressed to ensure reliability, security, and ethical use.

1. Data Privacy & Security

Challenge: Handling sensitive data is critical for LLM-driven agentic systems, and without proper safeguards, businesses risk data breaches, unauthorized access, or regulatory violations.

Solution: We solve this by implementing end-to-end encryption, access controls, anonymization, and continuous monitoring, ensuring the agentic system securely manages data while maintaining compliance with regulations like GDPR.
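As a small example of the anonymization step, the sketch below redacts email addresses and phone numbers from text before it would be sent to a model. The two regular expressions are intentionally simple assumptions for illustration; they will miss many real-world formats, and a production system would normally rely on a dedicated PII detection service alongside encryption and access controls.

```python
import re

# Simplified patterns for illustration only; real PII detection needs more care.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace emails and phone numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


message = "Contact jane.doe@example.com or +1 (555) 010-2030 about order 42."
print(redact(message))
```

Redacting before the prompt leaves your infrastructure means the sensitive values never reach the model or its logs, which simplifies compliance reviews.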

2. Reliability & Accuracy of Decisions

Challenge: LLM Enterprise AI systems may generate plausible but incorrect outputs, and acting autonomously on these can cause operational or financial errors.

Solution: We address this by incorporating human-in-the-loop oversight, continuous model training, and feedback loops, enabling the system to refine decisions and maintain high accuracy in all business operations.

3. Integration with Legacy Systems

Challenge: Many businesses rely on legacy infrastructure, which can make it difficult for LLM-driven agentic systems to integrate and operate smoothly across platforms.

Solution: We solve this challenge using API bridges, middleware, and modular integration strategies, allowing agentic AI systems to work seamlessly with both existing legacy systems and modern software solutions.
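One common form of an API bridge is the adapter pattern: a thin wrapper that exposes a legacy interface in the clean, structured shape an agent's tools expect. The sketch below assumes a hypothetical legacy inventory system that returns fixed-width text records; `LegacyInventorySystem`, `QRY_STOCK`, and the record layout are invented for the example.

```python
from typing import Protocol


class LegacyInventorySystem:
    """Hypothetical legacy system that returns fixed-width text records."""

    def QRY_STOCK(self, sku: str) -> str:
        # Columns: SKU (10 chars, left-aligned) + quantity (6 chars, right-aligned).
        return f"{sku:<10}{37:>6}"


class InventoryTool(Protocol):
    """The interface the agent expects from any inventory integration."""

    def stock_level(self, sku: str) -> dict: ...


class LegacyInventoryAdapter:
    """Adapter: translates the legacy record format into structured data."""

    def __init__(self, legacy: LegacyInventorySystem) -> None:
        self._legacy = legacy

    def stock_level(self, sku: str) -> dict:
        record = self._legacy.QRY_STOCK(sku)
        return {"sku": record[:10].strip(), "quantity": int(record[10:])}


tool: InventoryTool = LegacyInventoryAdapter(LegacyInventorySystem())
print(tool.stock_level("AB-123"))
```

Because the agent depends only on `InventoryTool`, the legacy system can later be replaced by a modern API without changing any agent logic.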

4. Scalability & Resource Constraints

Challenge: Training and running LLM-driven agentic AI can be resource-intensive, making scalability a challenge for growing businesses without overloading infrastructure.

Solution: We overcome this by leveraging cloud-based deployment, pre-trained models, optimized workflows, and modular design, ensuring scalable agentic AI systems that handle increasing demand efficiently.

5. Ethical & Governance Concerns

Challenge: LLM-driven agentic systems may produce biased or unethical outputs if not properly guided, risking reputational damage and regulatory scrutiny.

Solution: We address this with transparent governance frameworks, ethical guidelines, diverse training datasets, and override controls, ensuring agentic AI behaves responsibly and aligns with organizational values.

Conclusion

Adopting LLM Enterprise AI can significantly transform how businesses operate, innovate, and serve their customers. By integrating large language models into workflows, organizations can automate complex tasks, enhance decision-making, and unlock new opportunities for growth. Success lies in aligning LLM capabilities with business objectives while maintaining transparency, scalability, and data security. As enterprises embrace this shift, LLM-driven AI will play a central role in creating intelligent, adaptive systems that drive measurable efficiency and deliver long-term strategic advantages across industries.

Why Choose IdeaUsher for LLM-Driven Agentic Enterprise AI Development?

At IdeaUsher, we help enterprises unlock new opportunities through LLM Enterprise AI solutions that drive efficiency, intelligence, and scalability.

With expertise in integrating large language models into existing business ecosystems, we design AI agents that understand, learn, and act in real time to optimize operations and deliver measurable impact.

Why Work with Us?

- End-to-End LLM Integration: From data preparation to deployment, we ensure seamless AI adoption tailored to your business goals.

- Enterprise-Grade Security: Our frameworks prioritize data privacy and regulatory compliance across every layer of development.

- Custom Business Intelligence: We design agentic AI solutions that empower decision-making, automation, and customer engagement.

- Proven AI Expertise: With experience across multiple industries, our team delivers robust, adaptive, and future-ready LLM solutions.

Collaborate with IdeaUsher to transform your business using next-generation LLM-driven AI systems that combine intelligence, security, and scalability for lasting enterprise growth.


FAQs

LLM Enterprise AI automates repetitive tasks, improves data-driven decision-making, and personalizes customer experiences. It enables businesses to streamline operations, reduce costs, and achieve faster innovation across departments.

Integrating LLMs helps businesses enhance efficiency, improve communication, and optimize workflows. These models can analyze vast data, generate insights, and assist teams in making informed strategic decisions quickly.

Businesses must implement strong encryption, anonymization, and access controls. Secure data pipelines and regular compliance audits are vital to protect sensitive enterprise data during model training and deployment.

Industries like finance, healthcare, logistics, and customer service benefit significantly from LLM-driven AI. It enhances automation, speeds up data analysis, and enables more personalized interactions, leading to improved performance and customer satisfaction.