- Important Market Takeaways

- What is the Musicfy App?

- How Can Apps Like Musicfy be Useful?

- How Do AI Music Generators Operate?

- Other AI Music Generator Apps Like Musicfy

- Understanding the Basics of Music Generation

- Developing Your AI Music Generator

- Stack Flows to Consider to Develop AI Music Generator like Musicfy

- Conclusion

- FAQs

In the ever-evolving landscape of music creation, technology continues to push boundaries, offering new ways to compose, produce, and experience music. AI-driven music generation, which fuses artificial intelligence with musical creativity, has given rise to platforms like Musicfy. This blog explores what it takes to develop an AI music generator like Musicfy, covering the technical details, the possibilities, and the impact of applying machine learning to musical expression.

Discover how AI algorithms are reshaping the music industry, the process of developing an AI music generator like Musicfy, and the potential implications for the future of musical composition and innovation. Join us as we unravel the mechanics behind creating melodies, harmonies, and rhythms with artificial intelligence, paving the way for a new era of musical exploration and invention.

Important Market Takeaways

In the realm of music production, the integration of Artificial Intelligence (AI) tools has sparked a range of opinions among producers and creators. According to BedroomProducersBlog, a significant portion (47.9%) of producers hold a neutral stance toward AI in music production, while a substantial percentage (36.8%) have already incorporated AI tools into their workflow.

Additionally, around 30.1% express intentions to explore these tools soon. However, a notable segment (15.7%) reported disappointment with the quality of AI tools in their initial trials, highlighting concerns regarding the effectiveness and output of such technology.

Industry leaders like Spotify and Warner Music offer differing perspectives on AI’s role in music. Spotify’s CEO, Daniel Ek, emphasizes the ethical use of technology, cautioning against the replication of artists’ work without proper consent. Meanwhile, Warner Music’s Robert Kyncl acknowledges AI’s potential impact and advocates for regulations to govern its integration, ensuring a balanced approach to technological advancements in the industry.

Source: BedroomProducersBlog

Several AI-driven platforms, such as LANDR, Google’s Magenta, and AIVA, showcase practical applications of AI in music creation. LANDR, for instance, democratizes audio mastering, traditionally an expensive and time-consuming process, through its AI platform. Google’s Magenta provides tools for creating unique musical arrangements while maintaining the producer’s creative vision. AIVA offers an automated music creation tool with usage rights, easing concerns about licensing and royalties for music creators.

For those considering developing an AI music generator, these market insights offer valuable considerations. Understanding the spectrum of industry perceptions is vital, as concerns regarding originality and quality persist alongside growing acceptance. Incorporating ethical frameworks, as advocated by industry leaders, can shape the development process and foster trust among artists and producers. Prioritizing quality in AI-generated music is crucial to avoid the disappointments reported by some users, ensuring that the technology’s output meets professional standards. Furthermore, simplifying processes and providing usage rights, as demonstrated by existing platforms, can attract creators concerned about legal aspects.

What is the Musicfy App?

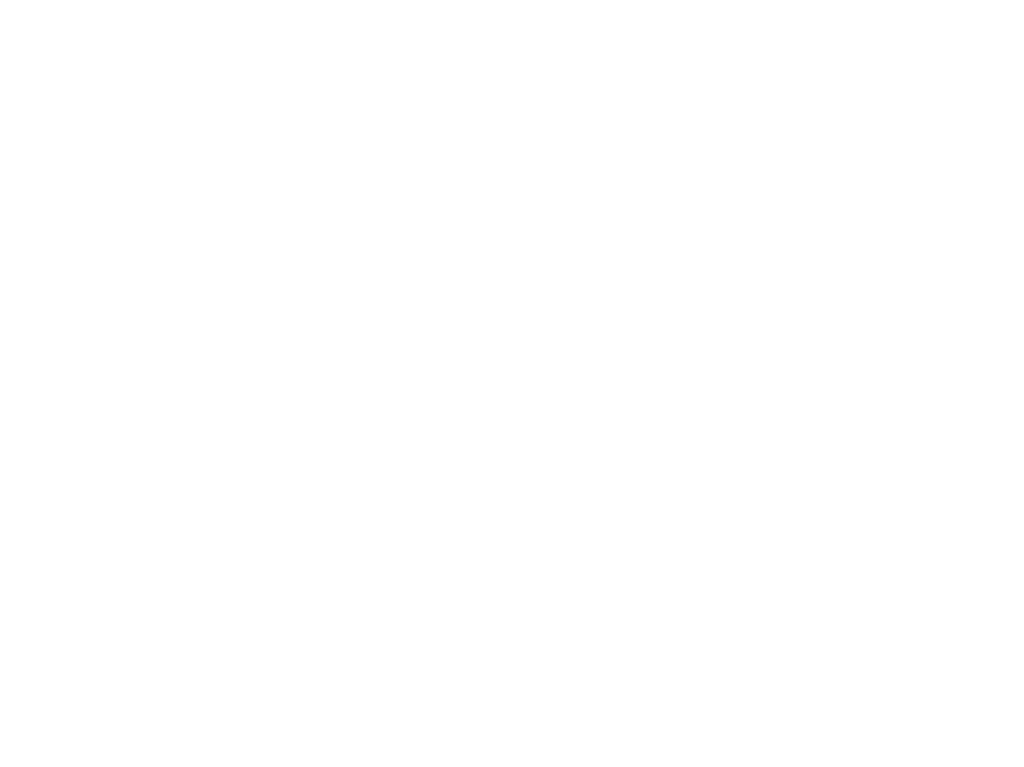

From a software development perspective, Musicfy is a sophisticated music application that combines core music player functionality with dynamic visualizations based on the currently playing track. It serves as a useful reference point for developers who want to develop an AI music generator like Musicfy, offering insights into various components and user-centric features that can be integrated into a similar application.

The app accommodates local music playback and streaming capabilities from SoundCloud, showcasing the importance of integrating diverse music sources into the platform. Developers can learn from this dual functionality to ensure seamless integration of music sources and a robust backend structure that supports multiple data streams.

A notable aspect of Musicfy’s software architecture is its emphasis on a clean, ad-free interface that prioritizes user experience. This underscores the significance of user-centric design in ensuring an intuitive and uninterrupted music browsing and playback experience.

The incorporation of a powerful equalizer within Musicfy illustrates the significance of audio customization features. Developers can glean insights into designing and implementing robust audio manipulation tools to enable users to tailor their listening experience according to individual preferences.

How Can Apps Like Musicfy be Useful?

Apps like Musicfy, driven by AI-powered music generation, have emerged as transformative tools, reshaping the landscape of music creation and consumption across various sectors. Their versatility and innovative features offer a multitude of benefits, catering to diverse needs in industries ranging from entertainment and content creation to education and wellness.

Understanding the utility of such applications is crucial in recognizing their impact and potential across different domains. Let’s explore how apps like Musicfy can prove to be immensely useful across various sectors, revolutionizing the way music is produced, consumed, and integrated into different facets of modern life.

1. Content Creation

Content creators, including bloggers, YouTubers, and podcasters, harness AI music generators to infuse their content with unique soundtracks that impeccably match the mood and tone of their narratives. These applications offer an extensive range of music styles and themes, enabling creators to seamlessly integrate background scores that enhance storytelling and captivate audiences. By leveraging AI-generated music, content creators can elevate the emotional impact of their content, establishing a more profound connection with their audience and augmenting the overall viewing or listening experience.

Tip for Developers

Learning how content creators use AI music generators to enhance user experiences by tailoring soundtracks to match specific tones and moods can guide developers in creating customizable features. Implementing a wide array of music styles and themes within the generator can offer content creators more options for seamless integration.

2. Music Production

In the realm of music production, AI music generators serve as invaluable aids for musicians and composers seeking efficient and time-saving methods for generating new musical compositions. These tools offer diverse musical templates and styles, allowing artists to explore and experiment with different sounds and arrangements. By harnessing AI’s capabilities, music producers can expedite the creative process, focusing more on fine-tuning and refining musical elements rather than investing extensive time in composing music from scratch. This streamlining of the creative process encourages greater experimentation and innovation within the music production landscape.

Tip for Developers

Insights from the music production industry emphasize the need for efficiency in generating new compositions. Developers can focus on providing a diverse set of musical templates and styles within the AI Music Generator to expedite the creative process for musicians and composers.

3. Advertising

Advertising professionals leverage AI music generators to craft catchy and memorable jingles or background music for commercials. These tools provide access to a vast repository of musical themes and styles, enabling advertisers to tailor soundtracks that resonate with target audiences. By integrating AI-generated music into advertisements, marketers can create a distinctive auditory identity that enhances brand recall and fosters a deeper connection with consumers. The ability to create customized and impactful musical accompaniments plays a pivotal role in making advertisements more engaging and memorable.

Tip for Developers

Understanding how advertisers use AI music generators to create memorable jingles or background music can guide developers in designing tools that allow for easy customization. Providing features that enable easy adaptation of music to suit different advertising campaigns can be a key focus.

4. Event Organizing

Event organizers utilize AI music generators to curate custom music playlists that set the desired ambiance and tone for diverse events. These applications offer a wide array of musical genres and moods, allowing organizers to tailor music to suit the specific themes or atmospheres of different occasions. By incorporating AI-generated music, event planners can create seamless transitions between tracks, ensuring a cohesive and immersive experience for attendees. The ability to craft personalized soundtracks enhances the overall atmosphere of events, contributing significantly to guest engagement and enjoyment.

Tip for Developers

Developers can take cues from event organizers who use AI music generators to curate custom playlists for events. This insight can influence the incorporation of features that facilitate playlist creation based on specific event themes or atmospheres.

5. Fitness Industry

Within the fitness industry, instructors rely on AI music generators to design workout playlists that synchronize seamlessly with the intensity and rhythm of their fitness classes. These tools provide an assortment of musical beats and tempos, allowing fitness professionals to create motivational and energizing playlists that elevate the exercise experience for participants. By aligning music with workout intensity, instructors can optimize motivation, increase energy levels, and synchronize movements, resulting in more engaging and effective workout sessions.

Tip for Developers

Insights from fitness instructors leveraging AI music generators to synchronize workout playlists with exercise intensity can influence the inclusion of tempo and beat customization tools within the generator, catering to different fitness routines.
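Tempo customization ultimately comes down to converting beats per minute (BPM) into time. Below is a minimal Python sketch; the function names and the linear mapping from a 0-to-1 workout intensity onto a 100 to 160 BPM range are illustrative assumptions, not taken from any existing app.

```python
from __future__ import annotations


def bpm_for_intensity(intensity: float, low: float = 100.0, high: float = 160.0) -> float:
    """Map a workout intensity in [0, 1] linearly onto a BPM range (hypothetical mapping)."""
    intensity = min(max(intensity, 0.0), 1.0)  # clamp out-of-range input
    return low + (high - low) * intensity


def beat_times(bpm: float, beats: int, start: float = 0.0) -> list[float]:
    """Return onset times, in seconds, for `beats` evenly spaced beats at `bpm`."""
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    seconds_per_beat = 60.0 / bpm  # one minute divided by beats per minute
    return [start + i * seconds_per_beat for i in range(beats)]
```

For example, `beat_times(bpm_for_intensity(1.0), 4)` gives the first four beat onsets for a 160 BPM high-intensity interval, spaced 0.375 seconds apart; a generator could align drum hits or cue changes to these timestamps.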

6. Video Games

AI music generators play a pivotal role in enhancing the gaming experience by dynamically creating soundtracks that adapt to the actions and events within video games. These applications employ algorithms to generate music that aligns with specific game scenarios, enhancing immersion and gameplay. By generating adaptive and contextually relevant soundtracks, game developers can elevate the overall gaming experience, eliciting emotional responses and intensifying player engagement. The use of AI-generated music contributes to creating a more immersive and captivating gaming environment.

Tip for Developers

Understanding how AI-generated music enhances gaming experiences by adapting to in-game actions and events can guide developers in creating dynamic soundtracks. Implementing algorithms that respond to gameplay cues could be a pivotal part of developing an AI music generator like Musicfy.
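One common way to make a soundtrack react to gameplay is vertical layering: stems (pre-rendered or generated) fade in as intensity rises. The sketch below assumes hypothetical stem names, thresholds, and two made-up gameplay cues (nearby enemies and player health); a real engine would crossfade stems rather than switch them instantly.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    threshold: float  # intensity at which this stem becomes audible


# Hypothetical stems, ordered from calmest to most intense.
LAYERS = [
    Layer("ambient_pad", 0.0),
    Layer("percussion", 0.3),
    Layer("bass", 0.5),
    Layer("brass_stabs", 0.8),
]


def game_intensity(enemies_nearby: int, player_health: float) -> float:
    """Blend two hypothetical gameplay cues into an intensity score in [0, 1]."""
    threat = min(enemies_nearby / 5, 1.0)              # saturates at 5 enemies
    danger = 1.0 - min(max(player_health, 0.0), 1.0)   # low health means high danger
    return min(1.0, 0.7 * threat + 0.3 * danger)


def active_layers(intensity: float) -> list[str]:
    """Return the names of the stems that should play at this intensity."""
    return [layer.name for layer in LAYERS if intensity >= layer.threshold]
```

Three nearby enemies at half health give an intensity of about 0.57, so `active_layers(game_intensity(3, 0.5))` keeps the pad, percussion, and bass playing while holding back the brass.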

7. Education

In the realm of music education, AI-generated music aids students in comprehending the intricate structures and composition techniques prevalent in music. These tools provide educators with resources to introduce students to diverse musical styles and elements, facilitating a deeper understanding of music theory and composition. By incorporating AI-generated music into educational curricula, instructors can engage students through interactive and practical applications, fostering a more intuitive grasp of musical concepts. This utilization of AI in music education contributes to a more dynamic and engaging learning experience for students.

Tip for Developers

Developers can leverage insights from music education to create features that help students comprehend music structures. Including educational tools that facilitate exploration of diverse musical styles and concepts can enhance the learning experience.

8. Personalized Listening Experience

Streaming platforms leverage AI-generated music to deliver personalized playlists tailored to individual user preferences and moods. These applications employ algorithms to analyze listening habits and preferences, curating customized music streams for users. By offering a continuous flow of music that aligns with users’ tastes, streaming platforms enhance user satisfaction and retention. The personalized listening experience fosters a deeper connection between users and the platform, leading to increased user engagement and loyalty.

Tip for Developers

The emphasis on personalized playlists in streaming platforms can influence developers to focus on algorithms that analyze user preferences and curate tailor-made music streams, fostering user engagement and satisfaction.
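As a rough illustration of preference analysis, the sketch below builds a user profile by averaging the feature vectors of liked tracks and ranks the rest of the catalog by cosine similarity. The three features (energy, valence, acousticness) and the track names are hypothetical; production recommenders combine many more signals.

```python
from __future__ import annotations

import math
from collections.abc import Sequence

# Hypothetical per-track features: (energy, valence, acousticness), each in [0, 1].
CATALOG = {
    "sunrise_run": (0.9, 0.8, 0.1),
    "late_night_piano": (0.2, 0.3, 0.9),
    "gym_anthem": (0.95, 0.7, 0.05),
    "rainy_cafe": (0.3, 0.5, 0.8),
}


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity: 1.0 means the two vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recommend(liked: list[str], catalog: dict[str, Sequence[float]], k: int = 2) -> list[str]:
    """Rank tracks the user hasn't liked by similarity to the mean of their liked tracks."""
    if not liked:
        raise ValueError("need at least one liked track to build a profile")
    dims = len(catalog[liked[0]])
    profile = [sum(catalog[t][i] for t in liked) / len(liked) for i in range(dims)]
    candidates = [t for t in catalog if t not in liked]
    candidates.sort(key=lambda t: cosine(profile, catalog[t]), reverse=True)
    return candidates[:k]
```

With this sample catalog, `recommend(["sunrise_run"], CATALOG)` returns `["gym_anthem", "rainy_cafe"]`: the high-energy track ranks first, and the calm piano piece falls to the bottom.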

9. Film and Television

In the domain of film and television production, AI music generators enable producers to swiftly create soundtracks that complement visual narratives. These tools offer a diverse array of musical themes and styles, allowing filmmakers to select or generate music that amplifies emotions and enhances storytelling. By incorporating AI-generated soundtracks, producers can streamline the soundtrack creation process, allocating more time and resources to other aspects of production. The use of AI-generated music in film and television contributes to creating impactful audiovisual experiences while optimizing production timelines and resources.

Tip for Developers

Insights from film and television production highlight the importance of streamlined soundtrack creation. This could prompt the integration of features that expedite music selection or generation for audiovisual productions.

10. Therapy and Wellness

AI-generated music finds applications in therapy and wellness settings by creating calming and therapeutic soundscapes. These applications offer soothing and ambient music that aids relaxation and stress reduction, serving as an accompaniment for meditation, yoga, or therapy sessions. By leveraging AI-generated music, therapists and wellness instructors can create environments conducive to healing and relaxation, facilitating emotional well-being and mental relaxation. The use of AI-generated music in therapy and wellness contexts complements various relaxation techniques, contributing to an enhanced sense of calmness and tranquility.

Tip for Developers

Understanding how AI-generated music contributes to relaxation and therapeutic environments can inspire the inclusion of ambient and soothing music options within the generator, catering to wellness and relaxation purposes.

How Do AI Music Generators Operate?

Many AI-driven music tools are built on machine learning, most commonly deep learning with neural networks.

Deep learning involves training a model on an extensive dataset of existing music. Through this process, the model learns the patterns and structures within the music and draws on them to generate novel compositions.

Neural networks, the layered models that deep learning trains, are loosely inspired by how neurons in the brain connect. They identify patterns within musical pieces, such as recurring melodic shapes or chord progressions, and use them as a basis for generating new compositions.

Once trained, these generative AI models can produce fresh compositions based on various inputs. These inputs might encompass parameters like tempo, key, and genre, or specific musical elements such as melodies, harmonies, and rhythms.
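A neural network is too large to show here, but the underlying idea (learn patterns from existing music, then generate from them) can be illustrated with a much simpler technique swapped in for clarity: a first-order Markov chain over MIDI note numbers. This is a minimal Python sketch, not how deep-learning generators work internally; the function names and toy melodies are hypothetical.

```python
from __future__ import annotations

import random
from collections import defaultdict


def train_markov(melodies: list[list[int]]) -> dict[int, list[int]]:
    """Record every observed note-to-note transition (MIDI note numbers)."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)  # duplicates preserve frequency
    return dict(transitions)


def generate(transitions: dict[int, list[int]], start: int, length: int,
             seed: int | None = None) -> list[int]:
    """Sample a melody by repeatedly picking a note that followed the current one in training."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # dead end: this note was never followed by anything
            break
        melody.append(rng.choice(options))
    return melody
```

Training on two short melodies with `t = train_markov([[60, 62, 64, 65, 67], [60, 62, 64, 62, 60]])` and calling `generate(t, 60, 8, seed=1)` yields a melody in which every step is a transition seen in training. Neural models go much further, learning longer-range structure that a one-note memory cannot capture.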

A typical AI music generator draws on a curated library of diverse sounds. Soundful, for example, uses a human-aided AI platform to compose and produce each track uniquely from its sound library, and lets users download and use any track royalty-free depending on their subscription tier, which gives users considerable flexibility.

Here are some things to keep in mind while developing an AI music generator:

1. Understanding User Needs

Before diving into development, comprehend the diverse needs of potential users. Research existing AI music generators to identify their strengths, weaknesses, and user feedback. This analysis will guide you in shaping your app’s features and functionalities to cater to various user preferences.

2. Designing the User Interface

Craft an intuitive and user-friendly interface. Enable users to input parameters effortlessly, such as tempo, key, genre, and specific musical elements like melodies and rhythms. The interface should provide clear options for customization while ensuring ease of navigation and interaction.

3. Implementing AI Algorithms

Integrate powerful AI algorithms into your app. Consider employing machine learning techniques like deep learning and neural networks to analyze and generate music based on the provided parameters. Ensure the algorithms can swiftly process data to produce compositions in real-time or within a reasonable timeframe.

4. Developing Export and Customization Features

Enable users to export compositions in various formats, such as MIDI, WAV, or MP3. Additionally, provide tools for further customization within the app. Features like real-time editing, track variations, or the ability to create similar compositions can enhance user experience and creativity.

5. Testing and Iteration

Conduct rigorous testing to identify and address any bugs, glitches, or usability issues. Gather feedback from beta testers to refine the app’s functionality, performance, and overall user experience. Iteratively improve the app based on user input to ensure a polished final product.

6. Ensuring Legal Compliance

Prioritize legal aspects, especially concerning copyright and royalty-free usage of generated music. Implement measures to ensure users can utilize the compositions without infringing on intellectual property rights.

Other AI Music Generator Apps Like Musicfy

Exploring existing AI music generator apps offers valuable lessons for anyone aspiring to develop their own AI-driven music composition software. Each of these platforms has distinctive features and functionality that can guide developers building their own AI music generation app.

1. Soundraw.io

Learning Focus: Customization and Parametric Selection

Soundraw.io stands out for its user-centric approach, allowing individuals to craft music by selecting specific parameters like genre, style, and preferred instruments. The crucial takeaway here lies in understanding the significance of providing users with customizable options, facilitating a tailored music-making experience. For developers, exploring methods to integrate such parameter selection features into their app becomes paramount. Additionally, the provision for fine-tuning generated music ensures alignment with users’ unique requirements, adding a layer of personalization that enhances user satisfaction and engagement.

Insights Gained: Incorporating customizable parameters into the app’s interface allows users to craft music aligned with their preferences. Enabling fine-tuning options empowers users to shape the generated music to fit their specific creative vision, highlighting the importance of user-centric customization in AI music composition software.

2. AIVA

Learning Focus: Emotional Soundtracks and Style Diversity

AIVA’s forte lies in composing emotionally resonant soundtracks across a myriad of musical styles. Developers can glean insights into the significance of offering diverse pre-defined musical styles within their app. Understanding and implementing AI models capable of generating music in various emotional and stylistic contexts add versatility and broaden the app’s appeal. Learning from AIVA’s approach emphasizes the importance of catering to diverse musical tastes and enabling the creation of compositions spanning multiple genres.

Insights Gained: Integrating AI models that can generate emotionally evocative music in diverse styles enriches the app’s repertoire. Embracing versatility and catering to a wide spectrum of musical preferences enhance the app’s attractiveness to users seeking varied musical expressions.

3. Amper Music

Learning Focus: Advanced Features and Versatility

Amper Music, despite its higher cost, offers an array of advanced features that allow users to create original music for diverse purposes. This underscores the significance of incorporating advanced functionalities within the app, even if it comes with certain pricing considerations. For developers, exploring and implementing AI algorithms capable of facilitating music composition for varied contexts and purposes becomes pivotal. This insight emphasizes the importance of versatility and catering to different user needs through advanced AI capabilities.

Insights Gained: Recognizing the value of incorporating advanced features and versatility in music generation software aids developers in conceptualizing an app that addresses diverse user requirements. Offering sophisticated AI capabilities that cater to various contexts enhances the app’s appeal to users seeking multifaceted music composition tools.

4. Ecrett Music

Learning Focus: Experimentation and User Manipulation

Ecrett Music’s AI-driven composition software empowers users to experiment and manipulate the outcomes of their compositions. This highlights the significance of providing users with tools that facilitate experimentation and customization. Developers can draw inspiration from this approach by exploring AI algorithms that allow users to have greater control over the generated compositions. Enabling user-controlled manipulation fosters a more interactive and engaging music creation experience within the app.

Insights Gained: Facilitating user experimentation and manipulation in the composition process amplifies user engagement. Implementing AI capabilities that allow for user-controlled customization offers a more immersive and interactive platform, aligning with users’ desires for creative exploration.

5. Google’s Magenta tools

Learning Focus: Open-Source Collaboration

Google’s Magenta is an open-source project that emphasizes collaboration and innovation within the AI music composition realm. The crucial lesson here revolves around the potential benefits of embracing open-source elements in app development. Developers can learn from Magenta’s approach by considering the integration of open-source functionalities, fostering a collaborative environment, and encouraging community involvement in the app’s creation. Leveraging open-source frameworks can amplify innovation and accelerate the development process through shared knowledge and contributions.

Insights Gained: Incorporating open-source elements into the app’s architecture encourages collaboration and innovation. Embracing open-source frameworks nurtures a community-driven environment that fosters collective progress and accelerated development.

6. Boomy

Learning Focus: Simplicity and User-Friendliness

Boomy’s AI-generated music tool emphasizes simplicity and ease of use, offering users a streamlined music-making experience. Developers can draw insights from Boomy’s approach by prioritizing user-friendly interfaces and simplified processes. Implementing intuitive design and straightforward interactions enhances accessibility and encourages a broader user base. Learning from Boomy underscores the significance of providing a hassle-free and user-centric music composition platform.

Insights Gained: Prioritizing simplicity in design and user experience fosters accessibility and widens the app’s user base. Incorporating user-friendly interfaces and streamlined processes ensures an intuitive and hassle-free music creation journey.

7. Soundful

Learning Focus: AI-Powered Customization

Soundful’s utilization of advanced AI algorithms to generate personalized music tracks based on user preferences provides a significant learning opportunity. Understanding and implementing similar AI models that cater to personalized music outputs aligned with diverse user preferences are crucial. This insight emphasizes the importance of offering tailored music creation experiences, enriching user engagement through AI-powered customization.

Insights Gained: Incorporating AI algorithms enabling customized music outputs aligned with user preferences enhances user engagement. Offering tailored music creation experiences through advanced AI-driven customization elevates the app’s attractiveness to users seeking personalized interactions.

8. Humtap

Learning Focus: Voice-Driven Composition

Humtap’s innovative approach allows users to create original music using their voice inputs, transforming hums and taps into melodies. The essential lesson here lies in exploring AI capabilities that interpret vocal inputs and convert them into musical foundations. Developers can learn from Humtap’s approach by delving into AI models that facilitate voice-driven composition, offering users a unique and interactive music-making experience.

Insights Gained: Implementing AI capabilities that interpret vocal inputs and transform them into musical elements enhances user engagement. Enabling voice-driven composition fosters a distinctive and interactive music creation platform.
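The source does not describe how Humtap works internally, so treat this as an assumption-laden sketch of one classic, non-neural building block for voice-driven composition: estimating the pitch of a hummed note with autocorrelation and snapping it to the nearest MIDI note. The function names and the 80 to 1000 Hz search range are illustrative choices.

```python
from __future__ import annotations

import math


def estimate_pitch_hz(samples: list[float], sample_rate: int,
                      fmin: float = 80.0, fmax: float = 1000.0) -> float:
    """Estimate the fundamental frequency as the autocorrelation lag of strongest self-similarity."""
    min_lag = max(1, int(sample_rate / fmax))                  # shortest period we accept
    max_lag = min(int(sample_rate / fmin), len(samples) - 1)   # longest period we accept
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # How well the signal lines up with a copy of itself shifted by `lag` samples.
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


def hz_to_midi(freq: float) -> int:
    """Round a frequency to the nearest equal-tempered MIDI note (A4 = 440 Hz = note 69)."""
    return round(69 + 12 * math.log2(freq / 440.0))
```

At an 8 kHz sample rate, a pure 440 Hz tone is estimated at about 444 Hz (the lag resolution is one sample), which still rounds to MIDI note 69 (A4). Real humming needs framing, voicing detection, and protection against octave errors, which is where learned models tend to help.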

9. Media.io

Learning Focus: Versatility and User-Friendliness

Media.io leverages AI technology to generate music tracks matching users’ vibes, moods, or styles. The lesson here emphasizes the importance of multifaceted AI tools with user-friendly interfaces. Exploring AI models accommodating diverse user preferences is pivotal. This insight underscores the significance of versatile AI functionalities and user-friendly interfaces to cater to a wide array of user preferences and enhance engagement.

Insights Gained: Incorporating AI tools with multifaceted functionalities and user-friendly interfaces ensures versatility and enhanced user engagement. Offering a platform adaptable to diverse user preferences enriches the app’s appeal.

10. Synthesizer V

Learning Focus: Unique Vocal Generation

Synthesizer V’s ability to generate sung vocals in different languages highlights a distinctive feature for developers to consider. Understanding and implementing AI models capable of generating vocals or offering unique editing interfaces add distinctive functionalities to the app. Learning from Synthesizer V emphasizes the importance of offering distinctive vocal generation capabilities within the music composition software.

Insights Gained: Implementing AI models capable of generating vocals in different languages or providing unique editing interfaces adds uniqueness and appeal. Offering distinctive vocal generation capabilities enriches the app’s feature set.

Understanding the Basics of Music Generation

Before delving into the intricacies of AI-driven music composition, it’s vital to grasp the fundamental elements that constitute music: notes, chords, and octaves. These building blocks form the backbone of melodies, harmonies, and rhythms, and AI models learn to combine them into original compositions. A note is a single pitch produced by an instrument or voice, while a chord is a combination of several notes played together to create harmony. An octave is the interval between one pitch and another at twice its frequency; notes an octave apart share the same name (for example, C4 and C5), which is why the pattern of keys on a piano repeats every twelve semitones.
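These relationships are easy to express numerically. The sketch below assumes MIDI note numbering (middle C = 60, A4 = 69 at 440 Hz) and twelve-tone equal temperament, under which each semitone multiplies frequency by the twelfth root of 2, so an octave (12 semitones) exactly doubles it. The helper names are illustrative.

```python
from __future__ import annotations

A4_HZ = 440.0
A4_MIDI = 69
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def midi_to_hz(note: int) -> float:
    """Equal temperament: each semitone away from A4 multiplies frequency by 2 ** (1 / 12)."""
    return A4_HZ * 2 ** ((note - A4_MIDI) / 12)


def note_name(note: int) -> str:
    """Name a MIDI note, using the common convention where 60 is C4 (middle C)."""
    return f"{NOTE_NAMES[note % 12]}{note // 12 - 1}"


def triad(root: int, quality: str = "major") -> list[int]:
    """Stack intervals (in semitones) on a root note to form a three-note chord."""
    intervals = {"major": (0, 4, 7), "minor": (0, 3, 7)}[quality]
    return [root + i for i in intervals]
```

`midi_to_hz(81)` is 880.0, one octave above A4, and `[note_name(n) for n in triad(57, "minor")]` gives `["A3", "C4", "E4"]`, an A minor chord.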

Different AI Approaches in Music Generation

WaveNet and LSTM models have emerged as powerful tools for AI-driven music generation, each leveraging distinct methodologies and capabilities. These architectures enable the development of systems that can analyze musical patterns, learn from existing compositions, and generate new pieces autonomously.

WaveNet, designed by Google DeepMind, specializes in synthesizing raw audio waveforms. Its autoregressive nature allows it to predict future audio samples based on preceding ones, effectively capturing the intricacies of sound and tone. In contrast, LSTM models, a variant of Recurrent Neural Networks, excel in recognizing and preserving long-term dependencies in sequential data. When applied to music generation, LSTMs process sequences of musical notes or chords, enabling the generation of coherent and structured compositions.

1. The WaveNet Approach

WaveNet represents a groundbreaking development in raw audio synthesis, functioning similarly to language models in Natural Language Processing (NLP). Its core objective is to generate raw audio waveforms, capturing the nuances of sound distribution in music. During the training phase, WaveNet segments audio waves into smaller chunks and predicts subsequent samples based on previous ones, maintaining an autoregressive structure.

This architecture relies on causal, dilated 1D convolution layers: causal so that each prediction depends only on past samples, and dilated so that stacking a few layers covers a long stretch of audio, which helps keep the generated sound coherent. In the inference phase, WaveNet generates audio one sample at a time: it predicts a probability distribution over the next sample’s value, draws a value from it, appends that value to its input, and repeats, producing a continuous audio output.
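To make "causal" and "dilated" concrete, here is a minimal pure-Python sketch of a causal dilated 1D convolution, a stack of them, and the receptive-field arithmetic. It deliberately omits WaveNet's gated activations, residual and skip connections, and output distribution; the function names are illustrative.

```python
from __future__ import annotations


def causal_dilated_conv1d(x: list[float], weights: list[float], dilation: int) -> list[float]:
    """y[t] = sum_k weights[k] * x[t - k * dilation]; taps reaching before t = 0 read as zero."""
    y = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation  # only the present and past samples: causal
            if idx >= 0:
                acc += w * x[idx]
        y.append(acc)
    return y


def conv_stack(x: list[float], layers: list[tuple[list[float], int]]) -> list[float]:
    """Apply several causal dilated convolutions in sequence (no nonlinearity, for clarity)."""
    for weights, dilation in layers:
        x = causal_dilated_conv1d(x, weights, dilation)
    return x


def receptive_field(kernel_size: int, dilations: list[int]) -> int:
    """How many input samples (including the current one) a single output depends on."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)
```

With kernel size 2 and dilations doubling from 1 to 512, `receptive_field(2, [2 ** i for i in range(10)])` is 1024 samples, whereas ten ordinary (undilated) layers of the same kernel size would see only 11. Feeding an impulse through `conv_stack` also shows causality: no output before the impulse ever changes.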

2. The LSTM Model Approach

In the realm of music generation, LSTM models stand out for their ability to capture long-term dependencies within sequential data. These models excel in understanding and preserving complex patterns inherent in musical compositions.

Similar to WaveNet, LSTM models operate by preparing input-output sequences and computing hidden vectors at each timestep, ensuring the retention of sequential information. Despite their longer training times due to sequential processing, LSTMs provide a robust framework for understanding musical structure and generating harmonious compositions.
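The sketch below steps one LSTM cell through a short sequence of one-hot encoded notes in pure Python, to illustrate how the input, forget, and output gates update the cell state and hidden vector at each timestep. The weights are random and untrained; in practice you would use a framework layer such as PyTorch's `torch.nn.LSTM` or TensorFlow's `tf.keras.layers.LSTM` rather than this hand-written version.

```python
from __future__ import annotations

import math
import random

GATES = ("input", "forget", "output", "candidate")


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LSTMCell:
    """Untrained LSTM cell in pure Python, showing how gates carry state across timesteps."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)

        def init(rows: int, cols: int) -> list[list[float]]:
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.hidden_size = hidden_size
        # Each gate has input weights W, recurrent weights U, and a bias b.
        self.W = {g: init(hidden_size, input_size) for g in GATES}
        self.U = {g: init(hidden_size, hidden_size) for g in GATES}
        self.b = {g: [0.0] * hidden_size for g in GATES}

    def step(self, x: list[float], h: list[float], c: list[float]) -> tuple[list[float], list[float]]:
        pre = {
            g: [wx + uh + bias for wx, uh, bias in
                zip(matvec(self.W[g], x), matvec(self.U[g], h), self.b[g])]
            for g in GATES
        }
        i = [sigmoid(z) for z in pre["input"]]           # how much new information to write
        f = [sigmoid(z) for z in pre["forget"]]          # how much old cell state to keep
        o = [sigmoid(z) for z in pre["output"]]          # how much cell state to expose
        cand = [math.tanh(z) for z in pre["candidate"]]  # proposed new content
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, cand)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new

    def run(self, sequence: list[list[float]]) -> tuple[list[float], list[float]]:
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in sequence:  # one timestep per note; each step needs the previous h and c
            h, c = self.step(x, h, c)
        return h, c
```

The loop in `run` is the source of the longer training times noted above: each timestep depends on the previous hidden state, so the steps of one sequence cannot be computed in parallel.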

Comparing and Implementing Approaches

The choice between WaveNet and LSTM models hinges on their specific strengths and applications. WaveNet’s prowess lies in its ability to comprehend raw audio waveforms and capture temporal dependencies accurately through dilated convolutions. This makes it an excellent choice for generating intricate audio compositions with detailed sound textures. On the other hand, LSTM models excel in recognizing complex sequential patterns, making them well-suited for capturing musical structures and creating compositions with coherent melodies and harmonies.

Developing Your AI Music Generator

Crafting an AI music generator akin to Musicfy demands a systematic approach and keen attention to detail. Here’s an in-depth exploration of each critical step tailored for developers:

1. Data Preparation

Embark on the development journey by meticulously curating and preprocessing musical data. This crucial phase necessitates structuring the dataset into sequences tailored explicitly for training the AI model. It’s imperative to compile an extensive and diverse dataset covering a wide spectrum of musical genres, styles, and intricate patterns. The dataset’s richness profoundly influences the AI model’s ability to produce versatile and dynamic musical compositions.
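For symbolic (note-based) data, structuring the dataset into sequences usually means sliding a fixed-length window over each melody and pairing it with the note that follows. A minimal sketch, assuming melodies are already lists of MIDI note numbers; the window length and function names are illustrative.

```python
from __future__ import annotations


def build_vocab(melodies: list[list[int]]) -> dict[int, int]:
    """Map each distinct note to a dense integer index for the model's input layer."""
    symbols = sorted({note for melody in melodies for note in melody})
    return {symbol: index for index, symbol in enumerate(symbols)}


def make_sequences(notes: list[int], window: int) -> tuple[list[list[int]], list[int]]:
    """Slide a fixed-size window over a note stream to get (context, next note) training pairs."""
    if window < 1:
        raise ValueError("window must be at least 1")
    count = len(notes) - window  # number of complete pairs; non-positive means none
    inputs = [notes[i:i + window] for i in range(count)]
    targets = [notes[i + window] for i in range(count)]
    return inputs, targets
```

A window of 3 over `[60, 62, 64, 65, 67]` yields the pairs `[60, 62, 64] → 65` and `[62, 64, 65] → 67`; the vocabulary then maps each note to an index before the pairs are fed to the model.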

2. Model Implementation

Select a model architecture, such as WaveNet or LSTM, that fits your data and goals, and implement it with a framework such as TensorFlow or PyTorch. Integrate the model cleanly into the rest of the pipeline and optimize it so it can process complex musical inputs efficiently.

3. Training and Optimization

During training, the prepared dataset is fed to the model repeatedly over many iterations. This stage involves tuning hyperparameters, such as learning rate, batch size, and sequence length, and refining the architecture to improve accuracy and performance. Track validation performance as you iterate, and keep refining the model until its output reaches the quality you are aiming for.
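
One simple way to organize hyperparameter tuning is an exhaustive grid search. In this sketch, `train_and_validate` is a caller-supplied function (hypothetical here) that trains a model with the given settings and returns its validation loss. The stand-in below uses a made-up loss surface purely so the example runs. Real projects often move to random search or a dedicated tuning library once the grid grows.

```python
import itertools
import math


def grid_search(train_and_validate, grid):
    """Evaluate every combination in `grid`; return (best_params, best_loss)."""
    names = sorted(grid)
    best_params, best_loss = None, math.inf
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        loss = train_and_validate(params)  # lower validation loss is better
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


def fake_train_and_validate(params):
    """Hypothetical stand-in for a real training run (made-up loss surface)."""
    lr_penalty = abs(math.log10(params["learning_rate"]) + 3)  # prefers 1e-3
    len_penalty = abs(params["seq_len"] - 64) / 64              # prefers 64
    return lr_penalty + len_penalty


grid = {"learning_rate": [1e-2, 1e-3, 1e-4], "seq_len": [32, 64, 128]}
best, loss = grid_search(fake_train_and_validate, grid)
print(best)  # {'learning_rate': 0.001, 'seq_len': 64}
```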

4. Inference and Output

Use the trained model to generate new compositions from a seed input or from constraints such as key, tempo, or length. Add interactive controls so users can steer and explore the generation process, for example by adjusting sampling randomness or supplying a starting melody.
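
Generation is typically autoregressive: the model scores every possible next token, one is sampled and appended, and the extended sequence is fed back in. The sketch below adds a temperature parameter, a common control for trading predictability against variety. `next_logits` is a hypothetical stand-in for the trained model’s forward pass, and the toy model at the end exists only to make the example runnable.

```python
import math
import random


def sample_with_temperature(logits, temperature, rng):
    """Draw one index from softmax(logits / temperature)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    top = max(scaled)
    weights = [math.exp(s - top) for s in scaled]  # subtract the max for numerical stability
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def generate(next_logits, seed, length, temperature=1.0, rng=None):
    """Extend `seed` by `length` tokens, feeding each sampled token back in."""
    if rng is None:
        rng = random.Random(0)  # fixed seed so runs are reproducible
    tokens = list(seed)
    for _ in range(length):
        logits = next_logits(tokens)
        tokens.append(sample_with_temperature(logits, temperature, rng))
    return tokens


def toy_next_logits(tokens, vocab_size=4):
    """Toy 'model': strongly prefers the token after the last one (mod vocab_size)."""
    favored = (tokens[-1] + 1) % vocab_size
    return [5.0 if i == favored else 0.0 for i in range(vocab_size)]


print(generate(toy_next_logits, [0], 6, temperature=0.01))  # [0, 1, 2, 3, 0, 1, 2]
```

Low temperatures make the output nearly deterministic; higher values flatten the distribution and produce more surprising, but less predictable, continuations.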

5. Evaluation and Validation

After training, rigorously evaluate the model’s performance and validate its musical output. Check the generated music for coherence, melody, harmony, and other musical qualities. Combine quantitative metrics with human listening tests to confirm the output meets your predefined quality benchmarks.
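
As one example of a quantitative check, you can compare the pitch-class distribution of generated melodies with that of a reference set. The sketch below uses total variation distance between 12-bin pitch-class histograms. This metric is our illustrative choice, one of many possible. It says nothing about rhythm or long-range structure, so it complements listening tests rather than replacing them.

```python
from collections import Counter


def pitch_class_histogram(notes):
    """Normalized 12-bin histogram of pitch classes (C = 0, C# = 1, ..., B = 11)."""
    if not notes:
        raise ValueError("need at least one note")
    counts = Counter(n % 12 for n in notes)
    return [counts[pc] / len(notes) for pc in range(12)]


def total_variation(p, q):
    """Total variation distance: 0 for identical distributions, 1 for disjoint ones."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


reference = [60, 62, 64, 65, 67, 69, 71, 72]    # C major scale (MIDI numbers)
in_key = [60, 64, 67, 72, 67, 64]               # C major arpeggio
out_of_key = [61, 63, 66, 68, 70]               # black keys only

ref_hist = pitch_class_histogram(reference)
print(total_variation(ref_hist, pitch_class_histogram(in_key)))      # about 0.5
print(total_variation(ref_hist, pitch_class_histogram(out_of_key)))  # about 1.0
```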

6. Feedback Loop and Iterative Improvement

Set up a feedback loop that gathers insights from evaluations and user feedback, then act on them. Fine-tune parameters, rework the dataset, or adjust the model architecture to improve music generation over successive iterations.

7. Deployment and User Interface Design

Design an intuitive, user-friendly interface that emphasizes ease of use, customization options, and clear presentation of the generated output. Plan the deployment strategy, whether that means integrating into existing platforms, building a standalone application, or exposing the model through an API, so the generator is easy to access and use.

Stack Flows to Consider to Develop AI Music Generator like Musicfy

Developing an AI music generator like Musicfy means combining a diverse range of technologies and techniques. Here are the key components of the technology stack for an AI-powered music generation application:

1. Machine Learning Frameworks

Machine learning frameworks such as TensorFlow, PyTorch, and Keras form the foundation for building and training models in AI music generation. They provide the tools needed to define, train, and deploy machine learning models, and the rest of the system is built around them.

2. Generative Models

At the core of the music generation process are generative approaches such as Recurrent Neural Networks (RNNs, including LSTMs) that predict musical sequences step by step, Generative Adversarial Networks (GANs), and Variational Autoencoders (VAEs). These models act as the creative engine, enabling the system to produce new musical material and compose melodies and harmonies dynamically.

3. Music Information Retrieval (MIR)

Music Information Retrieval (MIR) techniques are crucial for analyzing music files and extracting useful information from them, such as tempo, beat positions, key, pitch, and chord progressions. These techniques let the system analyze musical elements, identify underlying patterns, and structure music accordingly, making the generation process more informed and context-aware.
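
As a small taste of MIR, tempo can be roughly estimated from note onset times. This sketch assumes the onsets have already been detected (Librosa, for example, provides `librosa.onset.onset_detect` and `librosa.beat.beat_track` for that) and that each onset falls on a beat. It uses the median inter-onset interval so one slightly early or late note does not skew the result. Real beat trackers handle syncopation and subdivisions far more robustly.

```python
from statistics import median


def estimate_bpm(onset_times):
    """Rough tempo in BPM from sorted onset times in seconds (assumes one onset per beat)."""
    if len(onset_times) < 2:
        raise ValueError("need at least two onsets")
    intervals = [later - earlier for earlier, later in zip(onset_times, onset_times[1:])]
    return 60.0 / median(intervals)


steady = [0.0, 0.5, 1.0, 1.5, 2.0]
jittered = [0.0, 0.5, 1.0, 1.52, 2.0, 2.5]  # one slightly late note
print(estimate_bpm(steady))    # 120.0
print(estimate_bpm(jittered))  # 120.0
```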

4. Natural Language Processing (NLP)

Natural Language Processing (NLP) lets the system interpret text input from users. With NLP, the app can turn a request such as “an upbeat lo-fi track at 90 BPM” into concrete generation parameters, making the music generation process more interactive and responsive.
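
To show how free-text user input could be turned into generation settings, the sketch below uses a deliberately simple rule-based parser (regular expressions and keyword tables) instead of a trained language model. The keyword lists and parameter names (`tempo_bpm`, `genre`, `mode`) are hypothetical. A production app would more likely use a text-understanding model, but the contract, free text in and structured parameters out, would be similar.

```python
import re

# Hypothetical keyword tables; a real system would likely use a trained text model.
MOOD_TO_MODE = {"happy": "major", "upbeat": "major", "bright": "major",
                "sad": "minor", "dark": "minor", "melancholic": "minor"}
GENRES = ("lo-fi", "jazz", "rock", "ambient", "classical", "edm")


def parse_prompt(prompt):
    """Turn a free-text request into generation parameters (fields are optional)."""
    text = prompt.lower()
    params = {}
    tempo = re.search(r"\b(\d{2,3})\s*bpm\b", text)
    if tempo:
        params["tempo_bpm"] = int(tempo.group(1))
    for genre in GENRES:
        if re.search(rf"\b{re.escape(genre)}\b", text):
            params["genre"] = genre
            break
    for word, mode in MOOD_TO_MODE.items():
        if re.search(rf"\b{word}\b", text):
            params["mode"] = mode
            break
    return params


print(parse_prompt("An upbeat lo-fi track at 90 BPM"))
# {'tempo_bpm': 90, 'genre': 'lo-fi', 'mode': 'major'}
```

Word boundaries keep words like “sadness” from triggering the “sad” rule, but rules like these break down quickly on real phrasing, which is where a learned NLP model earns its place.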

5. Data

The foundation of an AI music generator rests upon a robust and diverse dataset encompassing songs, melodies, rhythms, and other musical components. This extensive dataset serves as the training ground for the AI model, allowing it to learn and emulate various musical styles and genres, ultimately contributing to the diversity and richness of the generated music.

6. Cloud Platforms

Cloud platforms such as AWS, Google Cloud, or Azure provide scalable infrastructure, including GPU instances, for model training, data storage, and application hosting. They help keep the music generation application responsive and available as demand grows.


7. APIs

API integration plays a pivotal role in enabling connectivity and interoperability with external applications or services. By integrating APIs, the AI music generator can seamlessly interact with and complement other systems, expanding its functionality and potential use cases.

8. Front-End Development

Utilizing technologies like HTML, CSS, JavaScript, or modern frameworks such as React or Angular is vital for crafting an engaging and user-centric interface. A visually appealing, intuitive, and user-friendly interface is paramount, enhancing the overall user experience and encouraging prolonged engagement.

9. Back-End Development

Server-side code written in languages and runtimes such as Python, Java, or Node.js, together with databases such as MySQL or MongoDB, handles the application logic and data. This back-end infrastructure provides the functionality, data management, and scalability a music generation application needs.

10. Audio Processing Libraries

Specialized audio processing libraries such as Librosa or Essentia handle signal processing tasks like computing spectrograms, detecting onsets and beats, and tracking pitch. They let the system analyze and manipulate musical data effectively, which contributes to the quality and accuracy of the generated music.
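
A typical building block in this area is converting a detected frequency into a note. Librosa exposes this as `librosa.hz_to_midi` and `librosa.midi_to_note`. The plain-Python sketch below reimplements the standard equal-temperament formula, MIDI note = 69 + 12 * log2(f / 440 Hz), so the arithmetic is visible, using the common convention that MIDI note 60 is C4.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def hz_to_midi(freq_hz, a4_hz=440.0):
    """Fractional MIDI note number for a frequency (12-tone equal temperament)."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return 69 + 12 * math.log2(freq_hz / a4_hz)  # MIDI 69 is A4


def midi_to_name(midi):
    """Name of the nearest note, using the convention MIDI 60 = C4."""
    n = round(midi)
    return f"{NOTE_NAMES[n % 12]}{n // 12 - 1}"


for f in (440.0, 261.63, 880.0):
    print(f, round(hz_to_midi(f), 2), midi_to_name(hz_to_midi(f)))
```

The fractional part of `hz_to_midi` is useful on its own: it measures how far a sung or played pitch is from the nearest equal-tempered note.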

Conclusion

Developing an AI music generator akin to Musicfy involves several key steps. Firstly, gather a substantial dataset of music tracks in various genres to train the AI model. Preprocess the data by converting audio files into a machine-readable format, extracting features like pitch, tempo, and melody.

Next, choose an appropriate machine learning or deep learning algorithm such as recurrent neural networks (RNNs) or generative adversarial networks (GANs) to train the model on the dataset. Implement techniques for generating music, which may involve sequence generation or reinforcement learning to produce coherent and original compositions. Lastly, evaluate the generated music for quality and adjust the model accordingly through continuous feedback and improvement.

If you’re intrigued by the idea of developing an AI music generator, IdeaUsher can be your go-to partner for turning this concept into reality. Whether it’s a mobile app or web app, our expertise in AI-driven development can assist you in crafting an innovative and immersive music generation experience, tailored to your unique vision and requirements.

FAQs

Q1: How do you make AI-generated music?

A1: AI-generated music is created using machine learning algorithms and neural networks trained on vast amounts of musical data. There are different methods, including using generative models like Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), or Recurrent Neural Networks (RNNs) trained on music datasets. These models can learn patterns, styles, and structures from existing music and generate new compositions.

Q2: Can you monetize AI-generated music?

A2: Yes, you can monetize AI-generated music, but you need to consider copyright law, ownership, and licensing, including the terms of service of the AI tool you use. To the extent a composition reflects your own creative contribution and is protectable in your jurisdiction, you can register it and potentially earn royalties through streaming services, licensing for commercials, films, or video games, or direct sales.

Q3: Is there an AI music maker?

A3: Yes, there are several AI-powered music-making tools and platforms, including AIVA and Musicfy itself, as well as earlier tools such as Amper Music (acquired by Shutterstock), Jukedeck (acquired by ByteDance), and OpenAI’s MuseNet research project. These tools use AI algorithms to generate music based on user inputs, such as style, mood, tempo, or specific musical elements.

Q4: Is AI-generated music copyrighted?

A4: It depends on the jurisdiction and on how much human creativity is involved. In the United States, the Copyright Office has taken the position that material generated by AI without meaningful human authorship cannot be registered, although human contributions such as selecting, arranging, or editing the output may be protected. The United Kingdom, by contrast, treats the author of a computer-generated work as the person who made the arrangements necessary for its creation. Because the law in this area is still developing, it’s essential to consult a legal professional to understand the copyright implications fully.