Providing students with meaningful feedback takes time, and growing class sizes and complex assignments make it harder for educators to keep up. Even with strong grading practices, returning work quickly, offering personalized comments, and maintaining consistency can be overwhelming. This is why institutions are exploring Gradescope-like AI feedback tools that automate routine evaluation and help teachers deliver faster, clearer, and more actionable feedback.

AI-powered feedback systems transform assessment by combining machine learning, rubric scoring, and pattern detection. They analyze answers at scale, identify common mistakes, group similar responses, and generate helpful comments with minimal effort. These tools support teachers, improving accuracy and freeing time for deeper instruction.

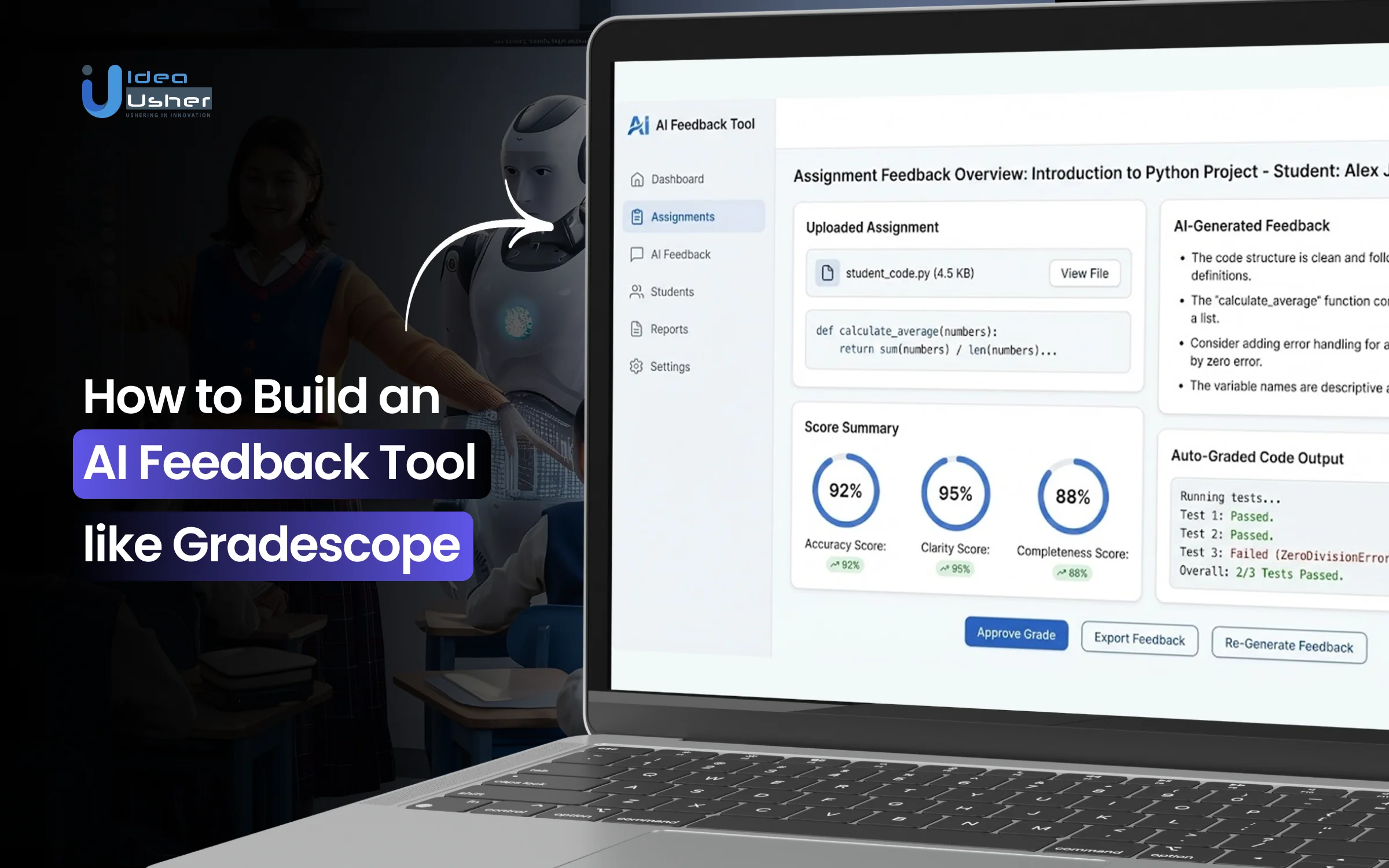

In this guide, we’ll explore how to build an AI feedback tool like Gradescope, covering the technology, key features, and classroom impact. With experience helping enterprises launch AI products, IdeaUsher can develop your AI feedback tool to outperform competitors and capture market growth.

What Is Gradescope, the AI Feedback Tool?

Gradescope is an AI-assisted assessment and grading platform by Turnitin that automates and standardizes the evaluation of handwritten, digital, and code-based assignments. It uses optical character recognition (OCR), answer-grouping algorithms, and auto-grading engines to speed up grading and provide timely, consistent feedback across large student populations.

By enabling flexible assessment types, from paper exams to programming projects and bubble-sheet quizzes, Gradescope delivers scalable feedback loops, detailed analytics, and rapid turnaround. Institutions can dramatically reduce grading overhead, increase grading consistency across courses, and leverage actionable insights about student performance at both individual and cohort levels.

- Rubric propagation that applies instructor-created rubric changes across hundreds of submissions instantly.

- Dynamic regrading workflows that update scores automatically when an instructor modifies criteria.

- Cross-course analytics that reveal performance patterns across departments or semesters.

- AI-based similarity detection that flags common error patterns, not just identical answers.

- Bulk submission ingestion that handles scanned PDFs, image uploads and mixed-format assessments with minimal manual preparation.

A. Business Model: How Gradescope Operates

Gradescope’s business model combines institutional licensing with scalable AI-driven assessment tools to streamline grading and improve learning outcomes.

- Institution- and organization-facing SaaS model: Gradescope is licensed to institutions like universities, colleges, and schools, not individual students. Once adopted, instructors and students access it for free through their institution.

- Flexible assessment support across assignment types: The platform supports handwritten exams, bubble-sheet tests, programming assignments, digital uploads, and mixed assessments, serving STEM, humanities, and interdisciplinary courses.

- Efficiency and scalability for large classes: Bulk grading, AI-assisted grouping, reusable rubrics, and automation cut grading time, adding value for large courses where traditional grading is slow and resource-heavy.

- Data and analytics for continuous improvement: Gradescope provides insights into student performance, common errors, learning trends, and assessment metrics, helping schools identify knowledge gaps and enhance curriculum design.

B. Revenue Model: How Gradescope Generates Income

Gradescope generates revenue through scalable, institution-focused licensing models designed to align pricing with usage, features, and the value delivered to educators.

- Per-student or per-course licensing fees: Gradescope charges based on student volume, usually per student or per course. Basic plans start around USD 1 per student per course, with advanced AI tiers costing more.

- Tiered plans based on features and scale: Pricing depends on features like AI grading, autograding, bubble-sheet grading, collaboration tools, LMS integrations, and dashboards. Higher plans earn more revenue because of expanded capabilities.

- Institutional and campus-wide licensing agreements: Many universities opt for campus-wide or institutional licenses, enabling broad adoption across departments. These deals create stable, recurring revenue through annual or term-based renewals.

- Value-driven pricing based on time and cost savings: Gradescope reduces grading time by 50% or more and improves consistency, delivering operational value. This boosts institutional investment, supporting steady revenue.

How Does an AI Feedback Tool Work?

An AI feedback tool analyzes student submissions using algorithms and AI models to generate accurate, consistent evaluations. It streamlines grading, identifies learning gaps, and delivers personalized feedback in real time.

1. Instructor Creates or Uploads an Assignment

The instructor begins by setting up an assessment. They upload a PDF or create questions, while template parsing logic and layout detection modules map answer regions automatically. This prepares the system for structured grading and smooth evaluation.

2. Students Submit Work Digitally or via Scans

Students upload handwritten scans or digital responses. Behind the scenes, OCR extraction pipelines and page alignment algorithms clean, segment and map responses to their respective questions. This ensures instructors receive well-organized digital copies without manual sorting.
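As a rough illustration of how responses might be mapped to questions, the sketch below assigns OCR words to template answer regions. It assumes an upstream OCR step already yields words with page numbers and centre coordinates; `Region`, `Word`, and `map_words_to_questions` are hypothetical names, not part of Gradescope or any specific OCR library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """Answer box for one question on the exam template (page coordinates)."""
    question_id: str
    page: int
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, page: int, x: float, y: float) -> bool:
        return page == self.page and self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


@dataclass(frozen=True)
class Word:
    """One OCR token, located by the centre of its bounding box (assumed input)."""
    text: str
    page: int
    x: float
    y: float


def map_words_to_questions(words, regions):
    """Return {question_id: answer text}; words outside every region are dropped."""
    buckets = {r.question_id: [] for r in regions}
    for word in words:
        for region in regions:
            if region.contains(word.page, word.x, word.y):
                buckets[region.question_id].append(word)
                break
    # Approximate reading order: top-to-bottom, then left-to-right.
    return {
        qid: " ".join(w.text for w in sorted(ws, key=lambda w: (w.y, w.x)))
        for qid, ws in buckets.items()
    }
```

Sorting by raw coordinates only works when words on the same line share a y value; a production pipeline would likely group words into lines first.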

3. AI Groups Similar Answers for Evaluation

The system runs clustering algorithms and semantic similarity models to group comparable student answers. Instructors see grouped reasoning patterns rather than hundreds of scattered submissions, enabling grading that is consistent, efficient and supported by machine-assisted pattern recognition.
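To make the grouping idea concrete, here is a minimal sketch that clusters short answers by word overlap. It swaps the semantic similarity models described above for plain Jaccard similarity on token sets, purely so the example stays dependency-free; the function names and the 0.6 threshold are illustrative assumptions.

```python
import re


def tokens(answer: str) -> frozenset:
    """Lowercase the answer and keep alphanumeric word tokens."""
    return frozenset(re.findall(r"[a-z0-9]+", answer.lower()))


def jaccard(a: frozenset, b: frozenset) -> float:
    """Share of tokens the two sets have in common (1.0 if both are empty)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def group_answers(answers: dict, threshold: float = 0.6) -> list:
    """Greedy single-pass grouping.

    Each answer joins the first group whose representative (its first member)
    is at least `threshold` similar; otherwise it starts a new group.
    """
    groups = []  # list of (representative_tokens, [student_ids])
    for student_id, text in answers.items():
        current = tokens(text)
        for representative, members in groups:
            if jaccard(representative, current) >= threshold:
                members.append(student_id)
                break
        else:
            groups.append((current, [student_id]))
    return [members for _, members in groups]
```

A real system would likely replace `jaccard` with an embedding-based similarity and let instructors merge or split groups by hand.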

4. Instructor Grades One Example & Applies to Group

The instructor grades a representative answer and the system replicates that decision across the cluster using score propagation logic and rubric mapping engines. This eliminates repetitive work and ensures identical grading standards across all similar responses.
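The propagation step could look roughly like the sketch below. It assumes each submission stores the IDs of the rubric items applied to it and that manually graded work should never be overwritten; `Submission` and `propagate` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Submission:
    student_id: str
    rubric_item_ids: set = field(default_factory=set)
    manually_graded: bool = False
    propagated_from: Optional[str] = None


def propagate(submissions: dict, group: list, representative_id: str) -> int:
    """Copy the representative's rubric selections to the rest of its group.

    Returns the number of submissions updated. Submissions a grader has
    already handled by hand are left untouched.
    """
    source = submissions[representative_id]
    updated = 0
    for student_id in group:
        if student_id == representative_id:
            continue
        target = submissions[student_id]
        if target.manually_graded:
            continue  # never overwrite a human decision
        target.rubric_item_ids = set(source.rubric_item_ids)
        target.propagated_from = representative_id
        updated += 1
    return updated
```

Recording `propagated_from` keeps an audit trail, so an instructor can later see which grades came from a group decision.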

5. AI Supports Auto-Grading & Error Pattern Insights

Objective questions and code tasks are handled by rule-driven evaluators and execution check systems. The platform also identifies recurring misunderstandings using error pattern analytics, giving instructors deeper insight into classwide learning gaps.
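A rule-driven evaluator for objective questions can be quite small. The sketch below grades multiple-choice and numeric responses and counts the most frequent wrong choices as a simple form of error pattern analytics; the function names and the tolerance values are assumptions chosen for the example.

```python
import math
from collections import Counter


def grade_choice(response: str, key: str) -> bool:
    """Multiple choice: compare ignoring case and surrounding whitespace."""
    return response.strip().upper() == key.strip().upper()


def grade_numeric(response: str, expected: float,
                  rel_tol: float = 1e-3, abs_tol: float = 1e-9) -> bool:
    """Numeric answer: accept values within a relative tolerance of the key."""
    try:
        value = float(response.strip())
    except ValueError:
        return False  # not a number at all
    return math.isclose(value, expected, rel_tol=rel_tol, abs_tol=abs_tol)


def common_wrong_choices(responses, key: str, top: int = 3):
    """Return the most frequent incorrect choices as (choice, count) pairs."""
    wrong = Counter(r.strip().upper() for r in responses if not grade_choice(r, key))
    return wrong.most_common(top)
```

If most wrong answers land on the same distractor, that usually points to a shared misconception rather than random guessing.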

6. Instructor Reviews & Adjusts Rubric Decisions

When instructors modify a rubric point, dynamic rubric syncing updates all linked submissions instantly. This ensures fair scoring, reduces manual revisions and maintains full consistency regardless of submission volume or grader involvement.
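One way to get this behavior is to store only which rubric items apply to each submission and compute scores on demand, so editing an item changes every linked score at once. The following is a minimal sketch under that assumption, using a deduction-style rubric; `RubricItem` and `Question` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class RubricItem:
    description: str
    points: float  # negative values are deductions


class Question:
    def __init__(self, max_points: float):
        self.max_points = max_points
        self.rubric = {}   # item_id -> RubricItem
        self.applied = {}  # student_id -> set of item_ids

    def apply(self, student_id: str, item_id: str) -> None:
        if item_id not in self.rubric:
            raise KeyError(item_id)
        self.applied.setdefault(student_id, set()).add(item_id)

    def score(self, student_id: str) -> float:
        """Derive the score from the current rubric; nothing is cached."""
        delta = sum(self.rubric[i].points for i in self.applied.get(student_id, ()))
        return max(0.0, min(self.max_points, self.max_points + delta))


q = Question(max_points=10)
q.rubric["sign"] = RubricItem("Sign error", -2)
q.apply("s1", "sign")
q.apply("s2", "sign")
before = q.score("s1")
q.rubric["sign"].points = -1  # instructor softens the penalty
after = q.score("s1")         # every linked submission now reflects the change
```

Because scores are derived rather than stored, there is no separate regrading pass to run after a rubric edit.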

7. Feedback Is Generated & Delivered to Students

The tool compiles scores, comments and micro feedback using feedback generation modules. Students receive structured insights tailored to their mistakes, providing clear reasoning and improvement paths without any extra effort from the instructor.
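As a simple illustration, feedback can be compiled directly from the rubric items applied to a submission. The sketch below uses fixed templates rather than a generative model, and the `build_feedback` function and its tuple layout are assumptions made for the example.

```python
def build_feedback(question_title: str, max_points: float, applied_items) -> str:
    """Compile a short feedback note for one question.

    applied_items: list of (description, points, hint) tuples, where points
    are deductions (negative) and hint may be an empty string.
    """
    score = max(0, max_points + sum(points for _, points, _ in applied_items))
    lines = [f"{question_title}: {score}/{max_points}"]
    for description, points, hint in applied_items:
        lines.append(f"- {description} ({points:+g})")
        if hint:
            lines.append(f"  Suggestion: {hint}")
    return "\n".join(lines)
```

A language model could later rephrase or expand these notes, but starting from rubric data keeps the feedback tied to what the instructor actually marked.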

8. Analytics Help Instructors Understand Performance

The analytics dashboard aggregates results using performance indexing models and data visualization layers. Instructors can view difficulty trends, error frequency and success patterns, allowing them to refine teaching strategies using real, measurable insights.
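The kind of aggregation behind such a dashboard can be sketched with a few lines of standard-library Python. The metric names below (`mean_fraction`, `full_credit_rate`) are illustrative choices rather than established terms, and the sketch assumes every question has at least one score.

```python
from statistics import mean


def question_stats(scores: dict, max_points: dict) -> dict:
    """scores maps question_id to the list of points each student earned."""
    stats = {}
    for qid, points in scores.items():
        fractions = [p / max_points[qid] for p in points]
        stats[qid] = {
            # Average share of available points earned (lower = harder).
            "mean_fraction": mean(fractions),
            # Share of students who earned full marks.
            "full_credit_rate": sum(1 for p in points if p == max_points[qid]) / len(points),
        }
    return stats


def hardest_questions(stats: dict, n: int = 3) -> list:
    """Question IDs ordered from hardest to easiest, limited to n entries."""
    return sorted(stats, key=lambda qid: stats[qid]["mean_fraction"])[:n]
```

Feeding these numbers into a chart gives instructors a quick view of which questions the class found hardest.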

Types of AI Used in an AI Feedback Tool

AI feedback tools leverage multiple types of artificial intelligence, including machine learning, natural language processing, and computer vision. Each AI type plays a crucial role in automating grading, analyzing responses, and providing actionable insights.

| Type of AI | Purpose | How It Supports an AI Feedback Tool |
| --- | --- | --- |
| Optical Character Recognition (OCR) AI | Digitizing handwritten or scanned submissions | Converts handwritten responses into digital text using structured extraction, enabling smooth online grading. |
| Answer Grouping and Clustering AI | Grouping similar responses | Uses pattern similarity modeling to batch answers for faster, consistent evaluation. |
| Auto-Grading AI | Scoring objective questions and code tasks | Applies rule-based logic to grade objective questions and coding outputs quickly. |
| Natural Language Understanding (NLU) | Interpreting written responses | Understands student phrasing and intent to support semi-automated scoring. |
| Natural Language Generation (NLG) | Creating feedback explanations | Generates context-aware feedback, hints and improvement notes. |
| Error Pattern Detection AI | Finding common misconceptions | Identifies repeated mistakes using response pattern analytics. |
| Similarity Detection AI | Monitoring conceptual overlap | Detects semantic similarity across answers for integrity checks. |
| Predictive Analytics AI | Forecasting trends and insights | Highlights scoring patterns and predicts learning gaps. |
| Rubric Optimization AI | Improving scoring criteria | Suggests consistent rubric adjustments based on grader behavior. |

How 72% Global School Adoption Proves AI Grading Tools Are Essential

The global AI Grading Assistants market was valued at USD 1.72 billion in 2024 and is projected to reach USD 12.24 billion by 2033, growing at a CAGR of 22.8%. This growth is driven by widespread adoption: as schools worldwide deploy AI grading systems, what was experimental technology is becoming core infrastructure for reducing teacher workload, improving grading accuracy, and raising student engagement.

In 2025, 72% of schools globally use AI for grading. In the US, AI tools auto-grade 48% of multiple-choice tests, and essay-scoring AI is in use at 63% of universities. AI grading has become standard across K-12 and higher education.

A. Efficiency Gains from AI-Driven Feedback Tool

Educational institutions adopt AI grading tools for significant efficiency and cost benefits, leading to fundamental resource and support shifts.

- Teachers using AI grading tools save an average of 5.9 hours per week, which adds up to 222 hours per school year. These reclaimed weeks of work time let educators focus more on instruction, curriculum planning, and student support instead of repetitive grading.

- AI-powered grading systems can reduce educator workload by up to 70 percent, according to UK research. This shift goes beyond time savings and helps address teacher burnout by reshaping how educators spend their workday.

- Schools report significant cost savings with AI-driven administrative tools, with 60 percent noting reduced expenses. Lower reliance on extra grading staff and more efficient resource allocation make AI assessment systems financially appealing even for budget-limited institutions.

- Global automation of educational content and assessment could save around 60 billion dollars, according to McKinsey. This shows that AI grading is part of a major worldwide transformation, not a niche efficiency improvement.

B. How AI Feedback Tool Upgrades Quality For Institutions

Beyond efficiency, AI grading tools are providing measurable gains in assessment quality, accuracy, and user satisfaction for all stakeholders from students seeking quicker feedback to institutions demanding consistent evaluation standards.

- Automated assessment systems significantly reduce grading errors, with a 40 percent accuracy improvement. This increases fairness, reduces bias, and lowers the number of grade disputes.

- Teachers report noticeable stress relief with automated grading, as 60 percent feel less pressure. AI assessment tools directly support educator well-being and retention.

- Students strongly prefer AI-graded multiple-choice tests, with 83 percent favoring faster results. Their expectation for immediate feedback pushes institutions toward automated assessment.

- Universities are rapidly adopting AI-based peer review, with 62 percent already using these systems. This demonstrates proven value in rigorous academic environments.

- AI tools improve alignment between learning outcomes and assessments, with a 35 percent increase in accuracy of alignment. This helps institutions strengthen accreditation and program evaluation.

- Educator adoption of AI tools is accelerating, rising from 30 percent to 60 percent in one year. Frequent users save several hours weekly, highlighting strong demand and practical benefits.

Key Features of a Gradescope-like AI Feedback Tool

A Gradescope-like AI feedback tool combines automation, analytics, and personalized insights to streamline grading and enhance learning. Its features improve accuracy, save educator time, and provide actionable feedback for students across diverse assessment types.

1. AI-Assisted Answer Grouping & Clustering

This feature uses pattern recognition models to automatically cluster similar student responses, allowing instructors to grade groups rather than individuals. It accelerates evaluation, improves consistency and reduces repetitive decision-making across large exam datasets.

2. Dynamic Rubric Management

A dynamic rubric builder lets instructors modify grading criteria at any moment. When a change is made, instant rubric propagation updates all linked submissions automatically. This ensures fair grading, minimizes manual rechecking and maintains consistent scoring logic across the entire class.

3. Automated Grading for Objective Questions & Code

The tool supports automated scoring for multiple-choice questions, numeric problems and programming tasks using rule-based validators and code execution checks. This reduces instructor workload, increases grading speed and delivers immediate feedback for structured assessment types.
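A code execution check can be sketched as running the student's program on known inputs and comparing its output. The example below assumes submissions are Python scripts that read from standard input; `run_test_case` and `grade_program` are hypothetical helpers, and a real deployment would need proper sandboxing (containers, resource limits) that this sketch does not provide.

```python
import subprocess
import sys


def run_test_case(source_path: str, stdin_text: str, expected_stdout: str,
                  timeout_s: float = 2.0) -> bool:
    """Run one submission on one input and compare trimmed stdout."""
    try:
        result = subprocess.run(
            [sys.executable, source_path],
            input=stdin_text,
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return False  # infinite loops and slow solutions fail the case
    return result.returncode == 0 and result.stdout.strip() == expected_stdout.strip()


def grade_program(source_path: str, cases, points_per_case: float = 1.0) -> float:
    """cases is a list of (stdin_text, expected_stdout) pairs."""
    return sum(
        points_per_case
        for stdin_text, expected in cases
        if run_test_case(source_path, stdin_text, expected)
    )
```

The timeout matters as much as the comparison: without it, a single looping submission could stall the whole grading queue.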

4. Handwritten & Scanned Submission Recognition

Users can upload handwritten exams or scanned PDFs that the system processes with structured OCR pipelines. The platform extracts answers, aligns templates and organizes pages, enabling digital grading without requiring instructors to modify their existing exam workflows.

5. Role-Based Grading

Multiple graders can work together using role-based permissions and synchronized grading queues. Every change stays in sync, preventing duplication of effort and ensuring that large teams maintain consistent evaluation standards during peak grading periods.
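A minimal sketch of role-based permissions combined with a shared grading queue might look like this. The role names, the `Action` enum, and `GradingQueue` are illustrative assumptions; the queue here lives in a single process, whereas a real platform would back it with a transactional database.

```python
from collections import deque
from enum import Enum, auto


class Action(Enum):
    VIEW_SUBMISSIONS = auto()
    GRADE = auto()
    EDIT_RUBRIC = auto()
    PUBLISH_GRADES = auto()


# Hypothetical role table: instructors can do everything, TAs can grade.
ROLE_PERMISSIONS = {
    "instructor": set(Action),
    "ta": {Action.VIEW_SUBMISSIONS, Action.GRADE},
    "reader": {Action.VIEW_SUBMISSIONS},
}


def require(role: str, action: Action) -> None:
    """Raise PermissionError unless the role includes the action."""
    if action not in ROLE_PERMISSIONS.get(role, set()):
        raise PermissionError(f"role {role!r} may not perform {action.name}")


class GradingQueue:
    """Hands each pending submission to exactly one grader."""

    def __init__(self, submission_ids):
        self._pending = deque(submission_ids)
        self.claimed = {}  # submission_id -> grader name

    def claim_next(self, grader: str, role: str):
        require(role, Action.GRADE)
        if not self._pending:
            return None
        submission_id = self._pending.popleft()
        self.claimed[submission_id] = grader
        return submission_id
```

Because a submission leaves the pending queue the moment it is claimed, two graders cannot pick up the same paper.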

6. AI-Powered Error Detection

The system identifies recurring student errors using subtle response pattern analytics. Instructors receive insights into misconceptions, weak concepts and common mistakes which supports targeted teaching adjustments and more effective learning interventions.

7. Real-Time Analytics & Performance Insights

Analytics dashboards summarize scoring trends, question difficulty and student performance distribution using data visualization layers. These insights help instructors measure assessment quality, refine question design and identify areas where learners struggle collectively.

8. AI-Based Plagiarism & Similarity Detection

Beyond answer grouping, the platform evaluates conceptual similarity using semantic similarity algorithms. It flags lightly paraphrased or conceptually identical answers, supporting academic integrity checks that are more advanced than string matching or keyword detection.

9. Flexible Multi-Format Assessment Support

The tool supports a wide range of assignment types including bubble sheets, digital submissions, handwritten responses and code files. A unified assessment engine organizes all formats under a single workflow which simplifies deployment for diverse academic departments.

10. Seamless Integration & Grade Export Tools

The platform connects with institutional systems using secure synchronization protocols that automate roster import and grade export. This minimizes administrative overhead and ensures that instructors manage grading and reporting through a unified workflow.
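Grade export is often the simplest integration to start with. The sketch below writes a roster and scores to CSV using Python's standard `csv` module; the column names are hypothetical, since each LMS defines its own import format.

```python
import csv
import io


def export_grades_csv(roster, scores: dict, max_points: float) -> str:
    """Build a CSV string with one row per rostered student.

    roster: list of (student_id, name) pairs.
    scores: {student_id: score}; ungraded students get an empty score cell.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["student_id", "name", "score", "max_points"])
    for student_id, name in roster:
        score = scores.get(student_id)
        writer.writerow([student_id, name, "" if score is None else score, max_points])
    return buffer.getvalue()
```

Iterating over the roster rather than the scores means students with missing submissions still appear in the export, which makes gaps easy to spot.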

How to Build an AI Feedback Tool like Gradescope?

Building a Gradescope-like AI feedback tool involves integrating machine learning, optical recognition, and automated grading workflows. A well-designed system ensures accurate assessments, scalable operations, and personalized feedback for students.

1. Consultation

We begin with a detailed consultation to understand institutional needs, grading workflows and target assessment formats. Our team aligns on project goals, identifies unique value opportunities and defines a clear development roadmap supported by structured discovery sessions.

2. Requirement Analysis & Product Blueprinting

We translate initial insights into a precise requirements blueprint. This includes defining assignment types, grading flows, feedback automation rules and compliance needs. Our developers map a feature-prioritized MVP structure that ensures efficiency without sacrificing essential functionality.

3. UI/UX Design & Workflow Modeling

Our designers craft intuitive grading and feedback workflows using interaction flow modeling. We ensure instructors can navigate assignments effortlessly while students receive clear feedback. This stage sets the foundation for a tool that reduces friction and enhances grading clarity.

4. AI Logic Planning and Model Behavior Structuring

We outline the AI behavior strategies for answer grouping, feedback generation and rubric enhancement. This involves designing decision layers, clustering logic and evaluation pathways to ensure the AI supports instructors without losing accuracy or control over assessments.

5. Platform Development & Assessment Engine

Our developers build the platform core including submission handling, grading modules, rubric systems and feedback generation tools. We use modular logic patterns that support scalable assessment workflows, enabling instructors to manage diverse assignment formats seamlessly.

6. AI Feature Development & Training Pipeline Setup

We develop AI capabilities for answer clustering, auto-scoring and error pattern detection. This includes building training workflows, calibration routines and behavior refinement loops which ensure dependable performance and meaningful instructor-level insights.

7. OCR Integration & Submission Structuring

We enhance the system with OCR capabilities that digitize handwritten or scanned submissions. Using structured extraction pipelines, we align answers with templates, organize pages and prepare documents for seamless digital evaluation.

8. Collaborative Grading Tools and Permission Framework

Our team builds role-based access structures, shared grading queues and synchronized evaluation tools. These features allow instructors and assistants to collaborate efficiently while maintaining grading consistency across large courses.

9. QA Testing & Assessment Validation

We conduct extensive testing across grading flows, AI outputs and submission formats. Our QA team validates accuracy, stability and workflow integrity, ensuring each feature performs reliably under real academic use cases before moving toward launch.

10. Deployment & Continuous Improvement

After validation, we deploy the platform and begin ongoing optimization. Our team monitors usage patterns, grading metrics and AI performance to introduce improvements and maintain a continuously evolving feedback ecosystem aligned with academic needs.

Cost to Build an AI Feedback Tool like Gradescope

The cost to build a Gradescope-like AI feedback tool depends on features, AI complexity, and platform scalability. Understanding these factors helps estimate development budgets and plan for long-term maintenance and updates.

| Development Phase | Description | Estimated Cost |
| --- | --- | --- |
| Consultation | Defines product goals, assessment needs and core grading workflows. | $3,000 – $6,000 |
| UI/UX Design | Designs intuitive instructor and student flows with structured grading paths. | $6,000 – $12,000 |
| AI Logic & System Architecture | Outlines answer grouping logic, evaluation layers and scalable system structure. | $12,000 – $18,000 |
| Core Platform Development | Builds grading modules, submission handlers and rubric engines. | $26,000 – $34,000 |
| AI Feature Development | Develops clustering, auto-scoring and pattern detection features. | $18,000 – $30,000 |
| OCR Integration & Submission Structuring | Enables the digitization of handwritten or scanned assignments. | $14,000 – $20,000 |
| Collaborative Grading Tools | Implements role-based access, shared queues and synchronized grading. | $8,000 – $14,000 |
| Testing | Tests grading accuracy, AI behavior and workflow stability. | $6,000 – $12,000 |
| Deployment & Post Launch Support | Ensures stable rollout and early lifecycle improvements. | $2,000 – $4,000 |

Total Estimated Cost: $95,000 – $150,000

Note: Actual development costs vary with AI sophistication, rubric complexity, assessment volume, and scalability, with advanced analytics or automation adding to the estimate.

Consult with IdeaUsher to receive a personalized project estimate and a detailed roadmap for building a high-performing AI feedback tool tailored to your institution’s grading workflows and learning goals.

Cost-Affecting Factors to Consider in AI Feedback Tool Development

Several factors, including AI complexity, assessment types, scalability, and feature set, influence the cost of developing a Gradescope-like AI feedback tool, helping institutions plan budgets and prioritize functionality effectively.

1. AI Features & Automation Depth

The cost increases as you add more advanced features such as answer clustering, rubric automation and pattern detection. Higher accuracy requires deeper model training, refinement cycles and greater data preparation efforts.

2. Volume & Variety of Assessment Formats

Supporting multiple formats like handwritten exams, code files, digital quizzes and bubble sheets requires additional processing layers. The need for OCR pipelines and flexible evaluation logic significantly influences development hours and overall cost.

3. UI/UX & Workflow Design

Intuitive grading flows, clean dashboards and streamlined feedback screens require thoughtful design. Building frictionless instructor workflows adds design complexity and increases UI and usability engineering costs.

4. Collaboration & Multi-Grader Support

If the platform must support teaching assistants, role-based access and synchronized grading queues, you need additional permission frameworks and grading coordination logic, adding to both complexity and budget.

5. Analytics & Reporting Features

Advanced insights such as performance trends, question difficulty patterns or mastery analytics require data modeling. Implementing custom dashboards and analytical layers impacts cost depending on reporting depth.

Challenges & How Our Developers Will Solve Them

Developing a Gradescope-like AI feedback tool comes with challenges like data accuracy, system scalability, and user adoption. Identifying these issues early and implementing effective solutions ensures a reliable, efficient, and user-friendly platform.

1. AI Accuracy & Reliable Answer Grouping

Challenge: Ensuring the AI correctly groups similar answers is difficult because student responses vary widely in phrasing, handwriting, structure and clarity.

Solution: We solve this by training refined clustering models, adding instructor feedback loops and calibrating grouping boundaries. This ensures accurate clustering over time and keeps instructors in control of final grading decisions.

2. Handling Handwritten & Scanned Submissions

Challenge: Digitizing handwritten responses is challenging due to uneven scans, low image clarity and inconsistent writing styles among students.

Solution: Our developers implement structured OCR pipelines, noise reduction steps and template alignment. This transforms handwritten pages into clean digital units that instructors can grade smoothly with minimal manual correction.

3. Maintaining Grading Consistency Across Instructors

Challenge: Large grading teams often produce inconsistent scoring even when using the same rubric, leading to fairness issues.

Solution: We use synchronized rubric logic, instant rubric propagation and shared grading queues. These features align grader decisions, reduce subjective variation and maintain consistent evaluation across all student submissions.

4. Trustworthy AI Assistance for Subjective Answers

Challenge: Subjective questions require nuanced judgment, and instructors often hesitate to rely fully on automated scoring.

Solution: We design human-in-the-loop scoring, where AI suggests likely scores and instructors confirm or adjust. This keeps grading fast while ensuring instructors maintain full control over subjective evaluation.
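The human-in-the-loop flow can be sketched as follows. It assumes a model that returns a suggested score with a confidence value between 0 and 1; `Suggestion`, `Decision`, `review_order`, and `decide` are hypothetical names, and no score becomes final until an instructor acts on it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Suggestion:
    submission_id: str
    suggested_score: float
    confidence: float  # 0..1, as reported by a hypothetical scoring model


@dataclass
class Decision:
    submission_id: str
    final_score: float
    source: str  # "confirmed" or "overridden"


def review_order(suggestions):
    """Show the least confident suggestions to the instructor first."""
    return sorted(suggestions, key=lambda s: s.confidence)


def decide(suggestion: Suggestion, instructor_score: Optional[float] = None) -> Decision:
    """Record the instructor's decision; None means the suggestion is accepted."""
    if instructor_score is None or instructor_score == suggestion.suggested_score:
        return Decision(suggestion.submission_id, suggestion.suggested_score, "confirmed")
    return Decision(suggestion.submission_id, instructor_score, "overridden")
```

Tracking how often suggestions are overridden also gives a practical signal for when the model needs recalibration.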

Conclusion

Creating a Gradescope-like AI Feedback Tool can transform the way educators assess and provide feedback, making the process faster, more consistent, and scalable. By leveraging AI technologies, such a platform enhances learning outcomes while reducing manual grading workloads. Implementing features like automated grading, detailed analytics, and personalized feedback ensures that both teachers and students gain maximum value. Investing in a well-designed tool not only streamlines academic workflows but also fosters a more engaging and effective learning environment for students across subjects.

Why Choose IdeaUsher for Your AI Feedback Tool Development?

IdeaUsher has extensive experience developing AI-driven evaluation tools that automate grading, reduce instructor workload, and improve feedback accuracy. We help institutions and edtech companies build reliable platforms that support multiple assignment formats and scale efficiently.

Why Work with Us?

- AI Grading Expertise: Our team builds ML models that detect patterns, evaluate student responses, and deliver consistent and objective feedback.

- Robust Integrations: We integrate seamlessly with LMS platforms, cloud storage systems, and education infrastructure to streamline workflows.

- Security Focused: With advanced encryption practices and compliance-driven development, we ensure safe handling of academic data.

- Scalable Architecture: We design grading tools capable of processing thousands of submissions quickly while maintaining precision and performance.

Explore our portfolio to see how we have helped clients launch AI-powered solutions in the market.

Connect with us for a free consultation and bring your vision for a Gradescope-like AI feedback tool to life with a secure and scalable foundation.


FAQs

A Gradescope-like AI Feedback Tool should include automated grading, plagiarism detection, real-time analytics, rubric-based scoring, personalized feedback generation, and integration with LMS platforms to enhance teacher productivity and student engagement.

Developing a Gradescope-like AI Feedback Tool requires AI and machine learning for automated grading, natural language processing for feedback generation, cloud infrastructure for scalability, and web or mobile frameworks for a seamless multi-platform experience.

An AI Feedback Tool like Gradescope streamlines grading by providing consistent and fast evaluations, identifying errors, suggesting personalized feedback, and generating performance insights. This reduces manual effort while improving accuracy and learning outcomes for students.

AI in a Gradescope-like AI Feedback Tool is trained using large datasets of graded assignments, historical feedback, and rubric-based rules. Continuous learning allows the system to improve its accuracy and consistency and to provide more insightful suggestions for students.