Security teams today are buried under alerts that demand attention but rarely deliver clarity. A single weak signal can quickly turn into hours of investigation spread across tools that were never designed to work together. Over time, this pressure creates gaps through which serious threats can quietly slip.

An attack intel tool like Recorded Future changes that dynamic by continuously gathering data from open sources, dark web forums, and technical telemetry. Machine learning correlates infrastructure activity, exploit behavior, and attacker intent into risk-scored intelligence. Automation then surfaces early warning signals linked to vulnerabilities, brands, or regions so teams can act with focus and speed.

Over the years, we’ve developed numerous cyber threat monitoring solutions powered by machine learning and graph-based intelligence systems. Drawing on that expertise, this blog walks through the steps to develop an attack intel tool like Recorded Future. Let’s start!

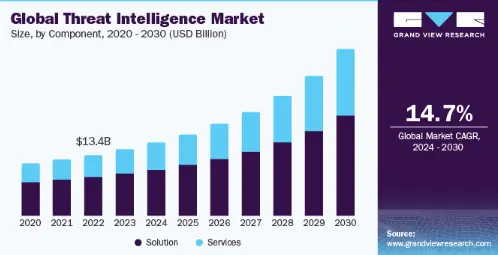

Key Market Takeaways for Attack Intel Tools

According to GrandViewResearch, the attack intelligence tools market is growing rapidly as organizations face more frequent and sophisticated cyber threats. The global threat intelligence market reached USD 14.59 billion in 2023 and is expected to grow to USD 36.53 billion by 2030, at a 14.7% CAGR from 2024 to 2030. This reflects rising demand for early threat visibility in an environment dominated by ransomware, phishing, and AI-enabled attacks.

Source: GrandViewResearch

Attack intel tools are gaining adoption because they enable proactive defense rather than reactive response. By monitoring the dark web, tracking adversary activity, and automating threat analysis, these platforms help security teams prioritize risks and respond faster. Regulatory pressure, cloud adoption, and shortages of skilled analysts are further accelerating demand for intelligence-driven security operations.

Leading examples highlight this shift. CrowdStrike Falcon Intelligence Recon monitors criminal forums and marketplaces to identify brand-specific threats, while its Recon+ managed service adds expert-led triage. Recorded Future uses AI to analyze millions of sources and deliver actionable intelligence at speed.

Additionally, Intel and Check Point have expanded their collaboration to embed AI-driven threat detection directly into chipsets, enabling earlier ransomware detection through preemptive, hardware-level analysis.

What is the Recorded Future Platform?

Recorded Future is an AI-driven cyber threat intelligence platform that helps organizations identify, analyze, and prioritize cyber threats in real time. It continuously collects and correlates intelligence from millions of sources across the open web, technical feeds, the dark web, and internal telemetry to support faster, more informed security decisions.

The platform is designed not only to aggregate threat data but to connect and contextualize it. By using advanced analytics and machine learning, Recorded Future transforms fragmented signals into actionable intelligence that integrates directly into existing security workflows and tools.

Here are some of its standout features:

1. Real-Time Threat Intelligence Dashboard

Users can search, explore, and visualize active threat data such as threat actors, vulnerabilities, malware, and infrastructure from a single interface. This eliminates the need to review multiple feeds and sources manually.

2. Intelligence Graph Contextualization

Instead of presenting flat IOC lists, the platform connects entities such as IPs, domains, malware families, vulnerabilities, and threat actors through its Intelligence Graph. Users can instantly see how threats relate to each other and to their organization’s risk exposure.

3. AI-Powered Insights and Summaries

Recorded Future uses AI and natural language processing to summarize complex threat information automatically. Users can interact with the intelligence via natural language queries, view dynamically calculated risk scores, and generate reports without manual research.

4. Malware and Threat Hunting Intelligence

Security teams can analyze malware behavior, track malware evolution, and generate detection rules such as YARA or Sigma. This allows users to move from passive intelligence consumption to proactive threat hunting.

5. Security Tool Integrations

The platform integrates with SIEM, SOAR, EDR, vulnerability management tools, and ticketing systems. Users can push intelligence directly into their existing security stack to automate alerting, investigation, and response workflows.

6. Third-Party and Supply Chain Intelligence

Users can monitor vendors, partners, and third-party entities for breaches, dark web exposure, and emerging threats. This helps organizations manage supply chain risk and exposure to external attack surfaces.

7. Continuous Monitoring and Alerts

Recorded Future continuously monitors relevant sources and delivers alerts when new or high-risk intelligence emerges. Alerts are prioritized based on context and risk scores, reducing alert fatigue for security teams.

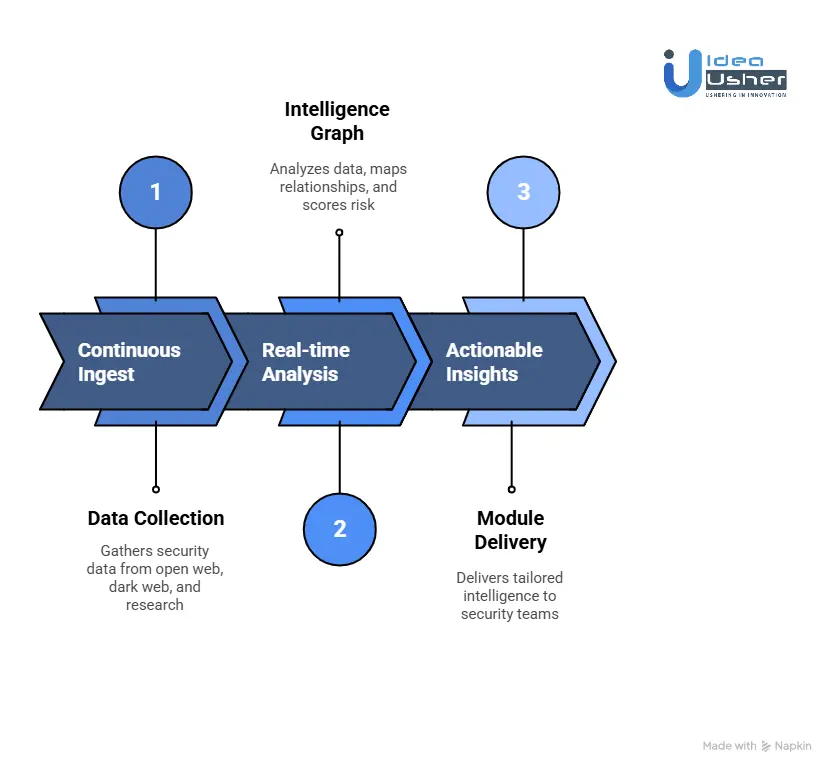

How Does the Recorded Future Tool Work?

Recorded Future works by continuously collecting security data from open sources, the dark web, and technical feeds so you can see threats as they emerge. It then analyzes that data using an Intelligence Graph that links infrastructure, vulnerabilities, and threat actors to assess and predict risk.

Stage 1: Ingest

Everything begins with data. Recorded Future continuously gathers security-relevant information from an unmatched range of sources across the open web, underground communities, and internal research. This ingestion occurs in near-real-time and spans hundreds of thousands of locations worldwide.

Key data sources include:

- Open and Technical Sources: These include news outlets, blogs, social media, developer forums, code repositories, and public vulnerability databases.

- Dark Web and Criminal Ecosystems: Using secure collection methods, Recorded Future monitors underground forums, illicit marketplaces, and encrypted communication channels.

- Human-Driven Research: The Insikt Group®, Recorded Future’s in-house research team, adds a critical human layer by validating signals and uncovering attacker motivations.

The result of this stage is a constant stream of raw intelligence inputs from across the global digital environment.

Stage 2: Analyze

Raw data has little value without context. This is where Recorded Future’s Security Intelligence Graph becomes central. Rather than treating indicators as isolated data points, the platform maps relationships among billions of entities, including IP addresses, domains, malware families, vulnerabilities, threat actors, and organizations.

Several advanced capabilities drive this analysis:

Natural Language Processing

The platform reads and understands unstructured text from articles, forum posts, and research reports. It extracts key entities, events, and relationships, capturing not just what happened, but how and why it matters.

Predictive and Behavioral Analytics

Recorded Future looks for patterns that indicate future risk. For example, it analyzes newly registered domains, hosting behavior, and certificate data to identify infrastructure likely to be used for phishing or malware campaigns, often before attacks begin.

Dynamic Risk Scoring

Every entity in the Intelligence Graph receives a continuously updated risk score. Vulnerabilities, for instance, are scored not only by technical severity, but by real-world factors such as active exploitation, attacker discussion, and relevance to specific industries.

Instead of producing static lists of “bad” indicators, this stage creates a living, interconnected view of the threat landscape.

Stage 3: Activate

The final stage is where intelligence becomes operational value. Recorded Future delivers insights from the Intelligence Graph through specialized modules designed to support different security roles and workflows.

Core Intelligence Modules

| Module | Description |
| --- | --- |
| Threat Intelligence | Identifies active threats, attacker behavior, and relevant indicators targeting the organization. |
| Vulnerability Intelligence | Prioritizes vulnerabilities based on real-world exploitation risk. |
| Brand Intelligence | Detects phishing, impersonation, and misuse of brand assets. |
| Security Operations and Third-Party Intelligence | Enriches SOC workflows and highlights risks from vendors and partners. |

What is the Business Model of the Recorded Future Platform?

Recorded Future operates a subscription-based SaaS business model built around its Intelligence Cloud platform. The company delivers real-time cyber threat intelligence by continuously analyzing data from more than 1 million global sources, serving 1,900+ customers worldwide.

Its customer base includes 45+ sovereign governments and over 50% of Fortune 100 companies, spanning industries such as IT (19% of customers), financial services (7%), and critical infrastructure. The model emphasizes recurring revenue, long-term contracts, and expansion through additional intelligence use cases.

Subscription-Based Intelligence Access

The majority of Recorded Future’s revenue comes from annual and multi-year subscriptions to its intelligence modules. Pricing is tiered based on usage, data depth, number of users, and integrations.

Key characteristics of this model include:

- Modular subscriptions that allow customers to expand over time

- Continuous intelligence delivery rather than static reports

- High renewal rates driven by deep operational integration

Core modules include Cyber Threat Intelligence, Vulnerability Intelligence, Brand Intelligence, Third-Party Intelligence, and Malware Intelligence (launched April 2025).

Professional Services, Licensing, and Partnerships

Secondary revenue streams include professional services, data licensing, and technology partnerships. These accounted for approximately 15% of total revenue in 2024 and are projected to grow to around 20% in 2025.

Customers report strong financial returns from these services, including:

- An average of $290,000 in annual value from operational efficiency gains

- More than 100 analyst hours saved per week through automation and intelligence enrichment

Specialized Intelligence Offerings

Additional revenue is generated through specialized solutions such as Brand Intelligence and Third-Party Intelligence. Brand protection customers report measurable ROI, including an estimated $2,602 in monthly value from reduced impersonation and fraud risk.

Financial Performance and Growth

Recorded Future has shown consistent revenue growth over time:

- $140M ARR in 2020

- Surpassed $250M ARR in 2022, reflecting roughly 50% year-over-year growth during that period

- Market estimates place annual revenue between $100M and $1B, with one report citing approximately $750M as of July 2025

Industry data shows that 76% of enterprises use threat intelligence weekly to inform security decisions. Recorded Future customers reportedly detect 22× more threats and resolve incidents 63% faster compared to organizations without advanced intelligence platforms.

Funding History and Acquisition

Before its acquisition, Recorded Future raised between $72.3M and $79M across multiple funding rounds, including:

- Series A: $2.2M (2009)

- Series B: $6.7M (2010)

- Later venture rounds totaling $21.1M by 2022

Key investors included Insight Partners, Balderton Capital, and Google Ventures.

In December 2024, Recorded Future was acquired by Mastercard for $2.65 billion, delivering an estimated 3× return for Insight Partners. Post-acquisition, the platform was integrated into Mastercard’s cyber and fraud prevention ecosystem.

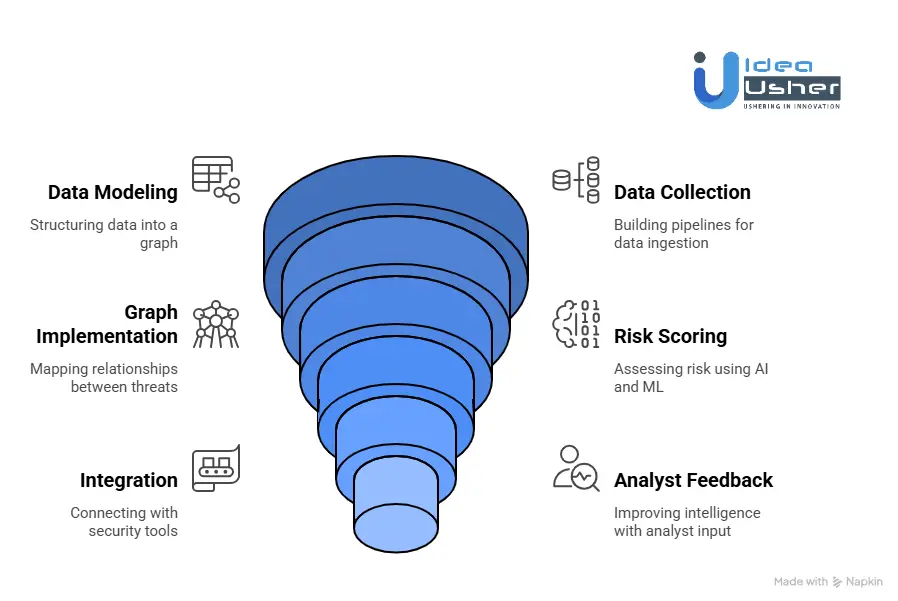

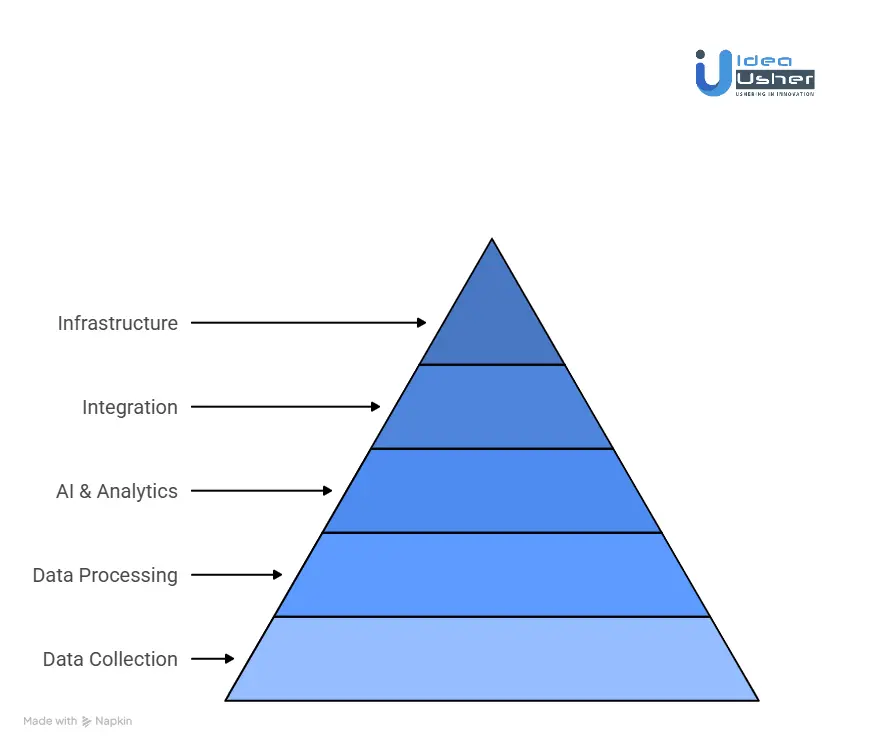

How to Develop an Attack Intel Tool Like Recorded Future?

To build an attack intelligence tool like Recorded Future, start by collecting threat data from multiple sources and modeling it as a graph that shows how infrastructure, actors, and tactics connect. Then apply machine learning and NLP to score risk over time and summarize what matters most to defenders. We have built numerous attack intelligence tools similar to Recorded Future for our clients. Here is how we do it.

1. Intelligence Data Model

We define a structured threat intelligence model covering actors, infrastructure, vulnerabilities, and campaigns. All entities and relationships are aligned with MITRE ATT&CK and industry standards. The model is designed for graph-based intelligence so context, relationships, and timelines are captured natively.
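To make the data model concrete, here is a minimal Python sketch, assuming entity type names borrowed from STIX 2.1 and a 0-100 confidence scale. The class and field names are illustrative, not a standard schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Entity types borrowed from STIX 2.1 object names (an assumption; adapt to your own schema).
ENTITY_TYPES = {"threat-actor", "infrastructure", "malware", "vulnerability", "campaign", "indicator"}


@dataclass
class Entity:
    id: str                      # e.g. "threat-actor--example-1"
    type: str                    # must be one of ENTITY_TYPES
    name: str
    aliases: set[str] = field(default_factory=set)
    attack_techniques: set[str] = field(default_factory=set)  # MITRE ATT&CK IDs such as "T1566"
    first_seen: Optional[datetime] = None
    last_seen: Optional[datetime] = None

    def __post_init__(self) -> None:
        if self.type not in ENTITY_TYPES:
            raise ValueError(f"unknown entity type: {self.type}")


@dataclass
class Relationship:
    source_id: str
    relationship_type: str       # e.g. "uses", "targets", "attributed-to"
    target_id: str
    confidence: int              # 0-100, same scale as the STIX confidence property
    observed_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self) -> None:
        if not 0 <= self.confidence <= 100:
            raise ValueError("confidence must be between 0 and 100")


# Usage: a hypothetical actor, one of its campaigns, and the link between them.
actor = Entity("threat-actor--example-1", "threat-actor", "ExampleGroup",
               aliases={"EG-1"}, attack_techniques={"T1566"})  # T1566 = Phishing
campaign = Entity("campaign--example-1", "campaign", "Example phishing wave")
link = Relationship(campaign.id, "attributed-to", actor.id, confidence=70)
```

Validating types and confidence at construction time keeps malformed objects out of the graph before any correlation runs.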

2. Intelligence Collection

We build scalable ingestion pipelines that collect data from the open web, dark web, technical feeds, and APIs. Secure identity rotation, proxy infrastructure, and access controls ensure reliable collection. Collected data is normalized into structured intelligence objects ready for analysis.
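As an illustration of the normalization step, the sketch below assumes each collector emits a raw record with hypothetical `indicator`, `kind`, and epoch `ts` fields. It undoes common defanging conventions, canonicalizes case where that is safe, and merges duplicates reported by different sources into one object.

```python
import hashlib
from datetime import datetime, timezone


def refang(value: str) -> str:
    """Undo common defanging conventions in threat reports, e.g. hxxp:// and [.]."""
    return (value.replace("hxxp", "http")
                 .replace("[.]", ".")
                 .replace("(.)", ".")
                 .replace("[:]", ":"))


def normalize(raw: dict, source: str) -> dict:
    """Map one raw collector record onto a common intelligence-object shape.

    Assumes hypothetical 'indicator', 'kind' and epoch 'ts' fields; real collectors
    need per-source field mappings.
    """
    kind = raw["kind"].lower()
    value = refang(raw["indicator"].strip())
    if kind in {"domain", "email"}:
        value = value.lower()  # treated as case-insensitive here; URLs keep their case
    # A stable ID lets the same indicator from many sources collapse into one object.
    obj_id = hashlib.sha256(f"{kind}:{value}".encode()).hexdigest()[:16]
    return {"id": obj_id, "type": kind, "value": value, "source": source,
            "first_seen": datetime.fromtimestamp(raw["ts"], tz=timezone.utc)}


def dedupe(objects: list[dict]) -> dict[str, dict]:
    """Merge objects that share an ID, keeping every source and the earliest sighting."""
    merged: dict[str, dict] = {}
    for obj in objects:
        if obj["id"] in merged:
            existing = merged[obj["id"]]
            existing["sources"].add(obj["source"])
            existing["first_seen"] = min(existing["first_seen"], obj["first_seen"])
        else:
            record = dict(obj)
            record["sources"] = {record.pop("source")}
            merged[obj["id"]] = record
    return merged


merged = dedupe([
    normalize({"indicator": "malicious[.]example", "kind": "Domain", "ts": 1_700_000_000}, "forum-a"),
    normalize({"indicator": "MALICIOUS.EXAMPLE", "kind": "domain", "ts": 1_690_000_000}, "feed-b"),
])
```

Both records describe the same domain, so they collapse into a single object that remembers both sources and the earlier first-seen time.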

3. Intelligence Graph

We implement a graph database to map relationships between threats, infrastructure, and activity. NLP is used to extract entities, identify aliases, and cluster related intelligence. The graph updates continuously, with relationship confidence and relevance scored in real time.
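A production system would use a graph database, but the core ideas (alias resolution, confidence-weighted edges, and multi-hop pivoting) can be sketched in plain Python. The `IntelGraph` class below is a hypothetical in-memory stand-in, and the entity names use reserved example domains and documentation IP ranges.

```python
from collections import defaultdict, deque


class IntelGraph:
    """Hypothetical in-memory stand-in for a graph database such as Neo4j."""

    def __init__(self) -> None:
        self.alias_to_id: dict[str, str] = {}
        self.edges: dict[str, dict[str, float]] = defaultdict(dict)

    def add_entity(self, entity_id: str, aliases: list[str]) -> None:
        # Alias resolution: every known name maps to one canonical ID.
        for name in [entity_id, *aliases]:
            self.alias_to_id[name.lower()] = entity_id

    def resolve(self, name: str) -> str:
        # Unknown names are used as-is, so keep their spelling consistent.
        return self.alias_to_id.get(name.lower(), name)

    def link(self, a: str, b: str, confidence: float) -> None:
        a, b = self.resolve(a), self.resolve(b)
        # Keep the strongest evidence per edge; store both directions for pivoting.
        best = max(confidence, self.edges[a].get(b, 0.0))
        self.edges[a][b] = best
        self.edges[b][a] = best

    def pivot(self, start: str, max_hops: int = 2, min_confidence: float = 0.5) -> set[str]:
        """Breadth-first search for entities reachable through confident-enough edges."""
        origin = self.resolve(start)
        seen = {origin}
        queue = deque([(origin, 0)])
        while queue:
            node, hops = queue.popleft()
            if hops == max_hops:
                continue
            for neighbor, conf in self.edges[node].items():
                if conf >= min_confidence and neighbor not in seen:
                    seen.add(neighbor)
                    queue.append((neighbor, hops + 1))
        seen.discard(origin)
        return seen


g = IntelGraph()
g.add_entity("actor-1", ["ExampleBear", "EB-Group"])
g.link("eb-group", "domain:login-update.example", 0.9)        # alias resolves to actor-1
g.link("domain:login-update.example", "ip:203.0.113.10", 0.8)
g.link("ip:203.0.113.10", "domain:cdn-mirror.example", 0.3)   # weak link, filtered out
related = g.pivot("ExampleBear")
```

Pivoting from any alias of the actor reaches its phishing domain and the IP behind it, while the low-confidence link to the CDN mirror is ignored.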

4. Risk Scoring and AI

We develop machine learning models to assess risk based on time, confidence, and impact. Scoring adapts as new intelligence emerges. Large language models are integrated to support analyst queries, generate summaries, and automate intelligence reporting.
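One simple way to make scores adapt over time, assuming scores on a 0-100 scale and an illustrative 14-day half-life, is to decay old evidence exponentially and fold new sightings in with a noisy-OR combination. This is a swapped-in heuristic for illustration, not a trained model; in practice the half-life and combination rule would be learned or tuned per indicator type.

```python
from datetime import datetime, timedelta, timezone

HALF_LIFE_DAYS = 14.0  # illustrative assumption; tune per indicator type


def decayed_score(base: float, last_seen: datetime, now: datetime,
                  half_life_days: float = HALF_LIFE_DAYS) -> float:
    """Halve a 0-100 score for every half-life that passes without new evidence."""
    age_days = max((now - last_seen).total_seconds() / 86_400, 0.0)
    return base * 0.5 ** (age_days / half_life_days)


def combine(current: float, new_evidence: float) -> float:
    """Noisy-OR: treat both 0-100 scores as independent probabilities of maliciousness."""
    return 100 * (1 - (1 - current / 100) * (1 - new_evidence / 100))


now = datetime(2025, 1, 29, tzinfo=timezone.utc)
score = decayed_score(80, last_seen=now - timedelta(days=14), now=now)  # about 40 after one half-life
score = combine(score, 50)  # a fresh sighting scored 50 lifts it to about 70
```

Noisy-OR never lets two moderate signals exceed 100, and it rises faster when independent sources agree than when one source repeats itself.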

5. Integrations and Correlation

We integrate the platform with SIEM, EDR, SOAR, and vulnerability management tools. External intelligence is correlated with internal telemetry and asset exposure. This enables automated alert prioritization and faster remediation workflows.
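The correlation step can be sketched as a join between external vulnerability intelligence and an internal asset inventory. The `Asset` fields, the 1-3 criticality scale, the doubling for internet-facing hosts, and the placeholder CVE IDs are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    hostname: str
    cves: set[str]            # from your vulnerability scanner (assumed input)
    internet_facing: bool
    criticality: int          # 1 (low) to 3 (business critical), an assumed internal scale


def prioritize(assets: list[Asset], exploited: dict[str, int]) -> list[tuple[str, str, int]]:
    """Join external exploitation intel with internal assets and rank the overlap.

    `exploited` maps CVE IDs to an external risk score (0-100) from threat intelligence.
    CVEs with no evidence of exploitation never make the list.
    """
    findings = []
    for asset in assets:
        for cve in sorted(asset.cves.intersection(exploited)):
            priority = exploited[cve] * asset.criticality
            if asset.internet_facing:
                priority *= 2  # directly reachable by attackers
            findings.append((asset.hostname, cve, priority))
    return sorted(findings, key=lambda f: f[2], reverse=True)


# Placeholder CVE IDs, not real advisories.
assets = [
    Asset("web-01", {"CVE-0000-0001", "CVE-0000-0002"}, internet_facing=True, criticality=2),
    Asset("hr-db", {"CVE-0000-0002"}, internet_facing=False, criticality=3),
]
queue = prioritize(assets, {"CVE-0000-0002": 90})
```

The exposed web server outranks the more critical but internal database, which is the kind of context a raw CVSS list cannot express.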

6. Analyst Feedback

We design workflows that allow analysts to validate and enrich intelligence. Analyst feedback continuously improves scoring and model accuracy. Validated intelligence is converted into deployable detections, advisories, and response guidance for security teams.
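A minimal sketch of the feedback loop, assuming analysts mark each alert as confirmed or rejected, is to keep per-source counts and estimate reliability with Laplace smoothing (a Beta(1, 1) prior). The class name and source labels are hypothetical; the resulting value could then serve as a source-reliability weight in risk scoring.

```python
from collections import defaultdict


class SourceReliability:
    """Turn analyst verdicts per source into a smoothed reliability estimate.

    With a Beta(1, 1) prior, a source with no history starts at 0.5 and moves
    toward its observed true-positive rate as verdicts accumulate.
    """

    def __init__(self) -> None:
        self.confirmed: defaultdict[str, int] = defaultdict(int)
        self.rejected: defaultdict[str, int] = defaultdict(int)

    def record(self, source: str, analyst_confirmed: bool) -> None:
        if analyst_confirmed:
            self.confirmed[source] += 1
        else:
            self.rejected[source] += 1

    def reliability(self, source: str) -> float:
        c, r = self.confirmed[source], self.rejected[source]
        return (c + 1) / (c + r + 2)


tracker = SourceReliability()
for verdict in [True, True, True, False]:
    tracker.record("paste-site-monitor", verdict)
estimate = tracker.reliability("paste-site-monitor")  # (3 + 1) / (4 + 2)
```

Smoothing keeps a single early verdict from pushing a new source to 0 or 1, which would otherwise make scoring swing wildly.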

How Much Revenue Can an Attack Intel Tool Generate?

Attack intelligence sits at the high-value end of the cybersecurity market, where buyers are willing to pay premium prices for timely, actionable insight.

Most successful players monetize through enterprise SaaS subscriptions, with pricing tied to company size, number of analysts, data access, or intelligence modules. Public disclosures and market signals give us a useful benchmark.

What the Market Is Already Paying

Recorded Future

One of the category leaders, with estimated annual revenue in the $400M to $500M+ range. Entry-level enterprise contracts often start around $50K per year, while global organizations routinely spend seven figures annually across multiple teams and regions.

CrowdStrike Falcon Intelligence

Not sold standalone, but as a premium add-on to CrowdStrike’s endpoint platform. CrowdStrike reported approximately $3.4B in ARR for FY 2024, and intelligence is a key expansion lever. Falcon Intelligence typically adds $50 to $100 per user per year on top of the core platform.

Mandiant (now part of Google Cloud)

Prior to acquisition, Mandiant generated $483M in revenue, with intelligence tightly bundled into high-value incident response and consulting engagements. Enterprise intelligence contracts often reached high six- or seven-figure values.

A Realistic Revenue Model for a New Entrant

A new attack intelligence company will not displace incumbents overnight. The winning path is focused entry followed by disciplined expansion.

Core Assumptions

- Average Contract Value: $50K initially, expanding over time

- Target Customers: Mid-market and enterprise security teams

- Sales Cycle: 6 to 9 months early, shortening with brand credibility

- Market Entry Strategy: Start with a narrow, painful use case such as vulnerability exploitation intelligence or dark web monitoring for a regulated industry

Five-Year Revenue Projection

| Year | Stage | Focus | Customers (Cumulative) | ARR |
| --- | --- | --- | --- | --- |
| Y1 | Launch | Product-market fit, early design partners | 15 | $750K |
| Y2 | Early Scale | Dedicated sales, deeper penetration | 50 | $2.5M |
| Y3 | Growth | New modules, larger enterprises | 125 | $6.25M |
| Y4 | Expansion | Premium features, higher ACV | 250 | $18.75M |
| Y5 | Category Contender | Global reach, partnerships | 450 | $40.5M |
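The ARR column is cumulative customers multiplied by an average contract value. Working backwards in Python shows that the implied ACV holds at the $50K starting point for three years, then rises to $75K in Y4 and $90K in Y5. Because it multiplies cumulative customers, the table also implicitly assumes that no customers churn.

```python
# Customers and ARR copied from the projection table; implied ACV = ARR / customers.
projection = {
    "Y1": (15, 750_000),
    "Y2": (50, 2_500_000),
    "Y3": (125, 6_250_000),
    "Y4": (250, 18_750_000),
    "Y5": (450, 40_500_000),
}

implied_acv = {year: arr / customers for year, (customers, arr) in projection.items()}
for year, acv in implied_acv.items():
    print(f"{year}: ${acv:,.0f} average contract value")
```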

Why This Model Works

- Customer growth follows a typical enterprise SaaS curve once trust is established.

- Additional modules, including automation, AI-driven analytics, third-party risk, and integrations, drive ACV expansion.

- Retention matters more than new logos. This model assumes a strong net revenue retention above 120 percent, indicating that customers increase usage over time.

What It Takes to Reach $100M+ ARR

Reaching the top tier of this market is possible, but not easy. The companies that get there share a few traits.

1. Clear Differentiation

The product must deliver something materially better than existing tools, such as faster signal-to-noise reduction, unique data sources, or automation that genuinely replaces analyst effort.

2. Strong Go-To-Market Execution

This is not a self-serve product. Success requires enterprise-grade sales talent, credibility with CISOs and security leaders, and strong channel and platform partnerships.

3. Capital and Discipline

Building and scaling an attack intelligence platform is expensive. Between R&D, data acquisition, and sales, $20M to $50M in funding is a realistic requirement to reach meaningful scale.

4. Becoming a Platform, Not Just a Tool

The highest-revenue companies expand beyond subscriptions by offering APIs for high-volume intelligence consumption, ecosystem partnerships with revenue sharing, and managed intelligence services such as analyst-on-demand, which can double contract value.

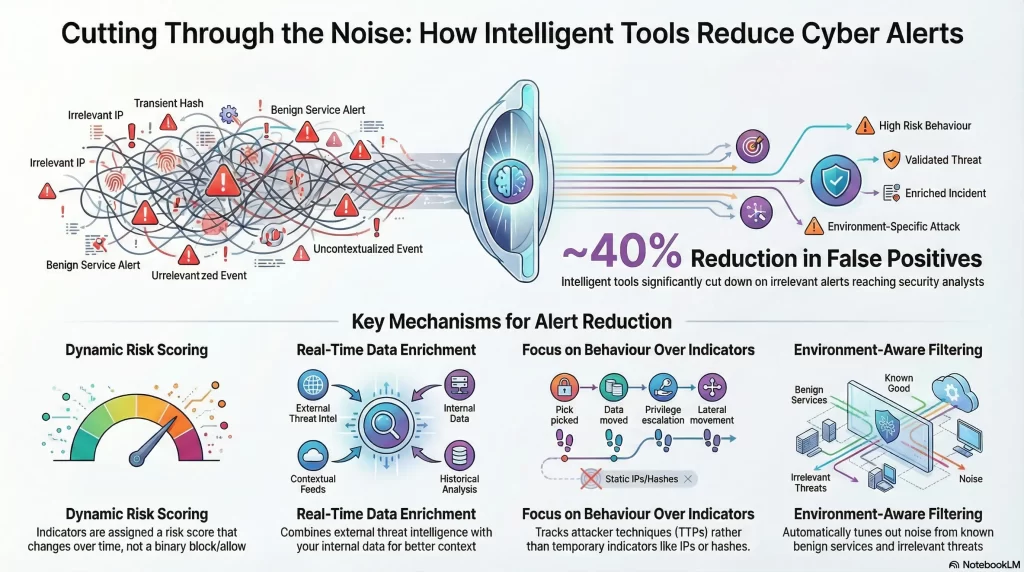

How Do Attack Intel Tools Cut False Positives by 40%?

Attack intelligence tools reduce false positives by adding context before alerts reach the analyst. Instead of reacting to raw indicators, the system dynamically scores risk and filters noise so the alerts that matter surface faster. According to reports, a 40 percent reduction in false positives is achievable when threat intelligence feeds are properly tuned and filtered, allowing SOC analysts to focus on real threats with more confidence.

From Raw Indicators to Actionable Context

Traditional security tools rely heavily on static logic.

“If this IP, domain, or hash appears, generate an alert.”

While simple, this approach fails in real-world environments for several reasons.

- Attackers often use shared or recycled infrastructure, such as cloud IPs, that is later reassigned to legitimate owners.

- Generic threat feeds lack relevance to individual organizations.

- Normal user or system behavior can resemble malicious activity when viewed in isolation.

Attack intelligence tools solve this by adding context before alerts reach analysts. Instead of asking “Is this indicator known?”, they ask more relevant questions.

- Is this indicator currently active?

- Is it being used in a real attack campaign?

- Does that campaign matter to us?

The result is a decision-making layer that filters noise before it becomes work.

1. Dynamic Risk Scoring Instead of Binary Alerts

Modern intelligence platforms no longer treat indicators as simply good or bad. Every data point is assigned a dynamic risk score that changes over time.

For example, an IP that actively supports a phishing campaign targeting your industry may receive a score of 95-100. The same IP, flagged months ago but now hosting legitimate services, may drop to single digits.

These scores are calculated using multiple signals.

- Time sensitivity, whether the activity is current or historical

- Source reliability, whether the intelligence comes from trusted researchers, closed-source telemetry, or open forums

- Target relevance, whether your industry, geography, or technology stack is part of the threat actor’s focus

Only high-confidence, high-relevance indicators trigger action. Everything else is deprioritized or logged silently.
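The scoring logic above can be sketched as a product of the three signals, so a score stays high only when activity is current, the source is trustworthy, and the target profile matches. The factor values, the 70-point threshold, and the `Evidence` fields are illustrative assumptions, not a published formula.

```python
from dataclasses import dataclass

ACTION_THRESHOLD = 70  # assumed cut-off; tune against your own alert history


@dataclass
class Evidence:
    days_since_last_activity: int
    source_reliability: float   # 0.0-1.0, e.g. vetted research vs. an anonymous forum post
    targets_our_sector: bool
    targets_our_region: bool


def time_factor(days: int) -> float:
    if days <= 7:
        return 1.0   # current activity
    if days <= 30:
        return 0.5   # recent
    return 0.1       # historical only


def relevance_factor(e: Evidence) -> float:
    return 0.4 + 0.4 * int(e.targets_our_sector) + 0.2 * int(e.targets_our_region)


def risk_score(e: Evidence) -> int:
    # Multiplying (rather than adding) means one weak signal drags the whole score down.
    return round(100 * time_factor(e.days_since_last_activity)
                 * e.source_reliability * relevance_factor(e))


def triage(e: Evidence) -> str:
    return "alert" if risk_score(e) >= ACTION_THRESHOLD else "log-silently"


fresh = Evidence(days_since_last_activity=2, source_reliability=0.9,
                 targets_our_sector=True, targets_our_region=True)
stale = Evidence(days_since_last_activity=120, source_reliability=0.9,
                 targets_our_sector=True, targets_our_region=True)
```

The same indicator from the same trusted source scores 90 while active but drops to single digits once its activity is months old.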

2. Automated Enrichment with Internal Security Data

False positives drop sharply when threat intelligence is combined with internal telemetry. When a firewall, SIEM, or EDR detects suspicious activity, the intelligence platform automatically enriches the event in real time with additional context.

- Infrastructure ownership

- Historical usage patterns

- Known threat actor associations

- Malware or campaign links

Example:

A blocked outbound connection triggers a firewall alert. Before escalating, the system identifies the destination IP as shared cloud infrastructure used by thousands of legitimate organizations. No active malicious campaigns are linked, so the alert is automatically suppressed.

If the same IP were tied to a live ransomware operation, the alert would be escalated with full context already attached. Analysts investigate confirmed risk instead of chasing false alarms.
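The example above can be sketched as a small routing function. The `INTEL` dictionary is a hypothetical stand-in for a lookup against the intelligence platform, and the IP addresses come from the reserved documentation ranges.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class IntelRecord:
    owner: str                     # e.g. ASN or hosting-provider name
    shared_hosting: bool           # shared cloud/CDN space used by many tenants
    active_campaigns: list[str] = field(default_factory=list)


# Hypothetical lookup table standing in for the intelligence platform's API.
INTEL = {
    "198.51.100.20": IntelRecord("ExampleCloud", shared_hosting=True),
    "203.0.113.66": IntelRecord("ExampleBulletproofHost", shared_hosting=False,
                                active_campaigns=["ransomware-op-x"]),
}


def enrich_and_route(event: dict) -> dict:
    """Attach intel context to a firewall event and decide whether an analyst sees it."""
    intel: Optional[IntelRecord] = INTEL.get(event["dest_ip"])
    enriched = {**event, "intel": intel}
    if intel is not None and intel.active_campaigns:
        enriched["route"] = "escalate"   # live activity: context travels with the alert
    elif intel is not None and intel.shared_hosting:
        enriched["route"] = "suppress"   # shared infrastructure, no malicious campaign linked
    else:
        enriched["route"] = "queue"      # unknown or ambiguous: normal triage
    return enriched


benign = enrich_and_route({"dest_ip": "198.51.100.20", "action": "blocked"})
hostile = enrich_and_route({"dest_ip": "203.0.113.66", "action": "blocked"})
```

Checking campaign links before shared-hosting status ensures that malicious activity on shared infrastructure is still escalated rather than suppressed.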

3. Detecting Adversary Behavior, Not Just Indicators

Static indicators expire quickly. Attack techniques do not.

Advanced attack intelligence tools prioritize tactics, techniques, and procedures. This approach focuses on how adversaries operate rather than relying on single-use artifacts such as IP addresses or file hashes.

This allows SOCs to reduce noise from outdated indicators, detect new attacks that reuse known techniques, and write more durable, higher-confidence detection logic.

A rule that flags suspicious PowerShell execution patterns used for lateral movement remains effective long after a specific malware hash becomes obsolete. Behavioral intelligence is harder to evade and far less prone to false positives.
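A behavior-focused rule for the PowerShell case might look like the sketch below. It keys on how PowerShell is invoked (encoded commands, remote sessions, download cradles) rather than on any file hash, and it only alerts when at least two signals co-occur. The patterns are illustrative and deliberately incomplete; production detections are usually written in a format such as Sigma and tested against real telemetry.

```python
import re

# Behavioral signals: each pattern describes *how* PowerShell is used, not a specific file.
# Attackers abbreviate parameters, so these patterns are illustrative, not exhaustive.
ENCODED_COMMAND = re.compile(r"\s-(?:e|ec|enc|encodedcommand)\s", re.IGNORECASE)
REMOTE_EXECUTION = re.compile(
    r"invoke-command\b.*-computername|enter-pssession|new-pssession", re.IGNORECASE)
DOWNLOAD_CRADLE = re.compile(r"downloadstring|invoke-webrequest|\biwr\b", re.IGNORECASE)


def evaluate_powershell(command_line: str) -> dict:
    """Score one PowerShell command line against behavioral signals.

    Maps to MITRE ATT&CK T1059.001 (Command and Scripting Interpreter: PowerShell).
    Requiring two co-occurring signals trades some recall for far fewer false positives.
    """
    signals = {
        "encoded_command": bool(ENCODED_COMMAND.search(command_line)),
        "remote_execution": bool(REMOTE_EXECUTION.search(command_line)),
        "download_cradle": bool(DOWNLOAD_CRADLE.search(command_line)),
    }
    return {"technique": "T1059.001", "signals": signals, "alert": sum(signals.values()) >= 2}


suspicious = evaluate_powershell(
    "powershell.exe -NoP -enc SQBFAFgA Invoke-Command -ComputerName hr-db -ScriptBlock {whoami}")
routine = evaluate_powershell("powershell.exe -File backup.ps1")
```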

4. Environment-Aware Suppression and Filtering

Every organization has unique infrastructure, partners, and business constraints. Attack intelligence platforms account for this by enabling automated, environment-specific tuning.

Common filters include:

- Suppressing alerts tied to known benign services such as CDNs, SaaS providers, and update servers

- Ignoring threat activity aimed at industries in which the organization does not operate

- Deprioritizing attacks from regions where the organization has no users or assets

Instead of analysts manually tuning rules for months, the system adapts automatically. Predictable noise is removed without weakening the security posture.
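These filters can be expressed as a short, ordered rule set evaluated against an organization profile. The sketch below assumes hypothetical `OrgProfile` and `ThreatAlert` types, reads the regional filter as campaigns aimed at regions where the organization has no presence, and treats empty targeting data as unknown rather than irrelevant.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class OrgProfile:
    benign_domains: frozenset[str]   # CDNs, SaaS providers, update servers you rely on
    industries: frozenset[str]
    regions: frozenset[str]          # where the organization has users or assets


@dataclass
class ThreatAlert:
    domain: str
    targeted_industries: set[str] = field(default_factory=set)  # empty means "unknown"
    targeted_regions: set[str] = field(default_factory=set)


def is_within(domain: str, parents: frozenset[str]) -> bool:
    """True if `domain` equals, or is a subdomain of, any entry in `parents`."""
    domain = domain.lower().rstrip(".")
    return any(domain == p or domain.endswith("." + p) for p in parents)


def disposition(alert: ThreatAlert, org: OrgProfile) -> str:
    if is_within(alert.domain, org.benign_domains):
        return "suppress"       # known benign service
    if alert.targeted_industries and not alert.targeted_industries & org.industries:
        return "suppress"       # campaign aimed at industries we do not operate in
    if alert.targeted_regions and not alert.targeted_regions & org.regions:
        return "deprioritize"   # no users or assets in the targeted regions
    return "review"


org = OrgProfile(frozenset({"cdn.example", "updates.example"}),
                 frozenset({"healthcare"}), frozenset({"EU"}))
```

Matching on domain suffixes rather than exact names lets one allowlist entry cover every subdomain of a trusted CDN.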

Common Challenges in Creating an Attack Intel Tool

Building a proprietary attack intelligence platform is an attractive idea. The promise is clear: tailored insights, direct ownership of data, and intelligence that aligns precisely with business risk. In practice, however, transforming that vision into a reliable and defensible intelligence engine is far more difficult than most teams expect.

After delivering these platforms for multiple organizations, we see the same obstacles appear again and again. Below are the three most common challenges and the proven approaches that help teams move past them.

1. Scaling Without Losing Control of Costs

Many attack intelligence initiatives begin with an ambitious goal like “monitoring millions of sources.” What quickly follows is an explosion in infrastructure complexity. Collecting, processing, and storing vast volumes of unstructured content, telemetry, and artifacts demands constant scaling. Cloud compute, storage, and data transfer costs can grow faster than the platform itself, turning a technical project into a budget crisis.

The Practical Approach

Successful platforms are designed for efficiency from the first day.

Event-Driven, Cloud-Native Architecture

Serverless components and managed services scale automatically and eliminate costs tied to idle capacity. This allows the system to expand during peak ingestion and contract when activity drops.

Distributed Processing on Demand

Large analytical jobs are executed only when needed using distributed frameworks. This prevents continuous resource consumption while still supporting massive data workloads.

Tiered Data Storage

Recent, high-value data is kept in fast storage for analysis, while older material is progressively moved to lower-cost tiers or archival storage. This approach can cut long-term storage costs by more than half.
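A tiering policy can be as simple as an age-based lookup. The thresholds and tier names below are assumptions; managed object stores typically let you express the same transitions declaratively (for example, Amazon S3 Lifecycle rules), so code like this is mainly useful for deciding the policy or for data kept in self-managed stores.

```python
from datetime import datetime, timedelta, timezone

# Assumed age thresholds; real values depend on query patterns and retention policy.
TIERS = [
    (timedelta(days=30), "hot"),     # fast storage / search index for active analysis
    (timedelta(days=180), "warm"),   # cheaper storage, still queryable
    (timedelta(days=730), "cold"),   # infrequent-access class, slower retrieval
]


def storage_tier(collected_at: datetime, now: datetime,
                 referenced_by_open_case: bool = False) -> str:
    """Pick a storage tier by age, keeping evidence for open investigations hot."""
    if referenced_by_open_case:
        return "hot"
    age = now - collected_at
    for limit, tier in TIERS:
        if age <= limit:
            return tier
    return "archive"


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
```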

2. Legal & Ethical Barriers to Intelligence Collection

Meaningful attack intelligence often requires visibility into closed forums, underground marketplaces, and restricted communities. Accessing these environments introduces serious legal and compliance risks, including violations of platform terms, privacy regulations, and jurisdiction-specific laws. One misstep can result in blocked access, legal action, or reputational harm.

The Practical Approach

Legal and ethical safeguards must be operational, not theoretical.

- Formal Source Approval Processes: Every data source is reviewed before collection begins, with clear documentation and legal sign-off for restricted or sensitive environments.

- Jurisdiction-Aware Collection Controls: Collection mechanisms respect rate limits, platform rules, and geographic boundaries to avoid regulatory exposure.

- Privacy-First Data Handling: Personally identifiable information is never stored in raw form. Data is anonymized, tokenized, or aggregated at ingestion, with full provenance tracking to demonstrate responsible sourcing.
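Tokenization at ingestion can be sketched with a keyed hash (HMAC-SHA256): the same email address always yields the same token, so analysts can still correlate a leaked address across posts, but a token cannot feasibly be linked back to an address without the key. The regex, token format, and record layout are illustrative assumptions, and in practice the key would come from a secrets manager.

```python
import hashlib
import hmac
import re

EMAIL = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")


def tokenize_emails(text: str, key: bytes) -> tuple[str, list[str]]:
    """Replace each email address with a keyed token before anything is stored."""
    tokens: list[str] = []

    def _replace(match: re.Match) -> str:
        normalized = match.group(0).lower().encode()
        digest = hmac.new(key, normalized, hashlib.sha256).hexdigest()[:16]
        token = f"<email:{digest}>"
        tokens.append(token)
        return token

    return EMAIL.sub(_replace, text), tokens


def ingest(post: str, source: str, collected_at: str, key: bytes) -> dict:
    clean, tokens = tokenize_emails(post, key)
    # Provenance travels with the record so responsible sourcing can be shown later.
    return {"text": clean, "pii_tokens": tokens,
            "provenance": {"source": source, "collected_at": collected_at}}


KEY = b"load-me-from-a-secrets-manager"  # placeholder; never hard-code a real key
record = ingest("Dump lists alice@corp.example and ALICE@corp.example",
                source="forum-x/thread-123", collected_at="2025-01-29T12:00:00Z", key=KEY)
```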

3. Assembling the Right Expertise

Attack intelligence platforms sit at the intersection of multiple specialized disciplines. They require expertise in threat analysis, large-scale data engineering, machine learning, and graph-based modeling. Building and retaining a team with this combined skill set is difficult, expensive, and time-consuming.

The Practical Approach

Teams that succeed rarely build everything alone.

- Strategic Engineering Partnerships: Working with specialized development partners provides immediate access to experienced engineers who have built similar systems before, without the long hiring cycle.

- Managed Infrastructure Services: Offloading complex infrastructure components to managed platforms reduces dependency on niche in-house expertise.

- Focused Internal Ownership: Internal teams concentrate on core intellectual property such as intelligence logic, prioritization models, and customer-facing insights rather than foundational plumbing.

Tools & APIs to Create an Attack Intel Tool

Building an attack intelligence platform comparable to Recorded Future is a large-scale systems engineering effort that spans data collection, data processing, AI-driven analysis, and deep security integrations. Success depends less on individual tools and more on how these technologies are composed into a reliable, continuously learning system across every architectural layer.

1. Data Collection and Ingestion Layer

This layer exists to continuously collect data from a wide range of open, technical, and adversarial sources. It must operate around the clock and remain resilient against source instability and evasion tactics.

Web Scraping and Automation

Scrapy: A proven framework for large-scale, structured scraping of open and deep web sources such as news sites, blogs, vulnerability disclosures, and technical forums.

Playwright and Selenium: Needed for modern, JavaScript-heavy websites and for environments behind authentication. These tools are especially important for accessing forums, marketplaces, and social platforms where attackers operate. Playwright’s multi-browser support helps emulate real user behavior and reduce detection.

Streaming and Data Pipelines

- Apache Kafka: Serves as the backbone of real-time ingestion. It buffers millions of events from scrapers, feeds, and APIs while decoupling data collection from downstream processing.

- Apache NiFi: Used to design and manage ingestion workflows. It is well-suited for pulling from REST APIs, scheduled feeds, and file-based sources while maintaining data lineage and secure transfers.

2. Data Processing, Storage, and the Intelligence Graph

Once collected, raw data must be normalized, structured, and connected to produce actionable insights.

Relationship and Entity Storage (Neo4j or ArangoDB)

Graph databases are critical for representing how threat actors, infrastructure, malware, and targets connect to each other. They enable analysts and automation to pivot across relationships, uncover hidden links, and analyze campaigns in ways that traditional relational databases cannot support.

Search and Investigation (Elasticsearch)

Elasticsearch is used to store and search large volumes of unstructured and semi-structured data such as forum posts, articles, and intelligence reports. Its fast indexing and aggregation capabilities allow investigators to quickly explore and correlate data across massive datasets.

Large-Scale Processing (Apache Spark)

Apache Spark supports batch processing and large-scale analytics across historical data. It is commonly used for NLP processing, entity enrichment, model training preparation, and identifying long-running or recurring threat campaigns.

3. AI, Machine Learning, and Analytics Layer

This layer turns structured data into insights, predictions, and prioritization.

Core Machine Learning Stack

Python with PyTorch and TensorFlow: Forms the foundation for custom model development. These frameworks support tasks such as:

- Entity extraction from unstructured text

- Classification of malware and infrastructure

- Anomaly detection across behavioral datasets

- Risk scoring for domains, IPs, and vulnerabilities
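Entity extraction is usually where these models start, and a rule-based extractor is a common baseline (and a source of bootstrap training labels) before any PyTorch or TensorFlow model is trained. The sketch below is that simpler, swapped-in technique, not a neural model. Its patterns are illustrative, and the domain pattern in particular will also match file names such as `update.exe` unless matches are validated against a public-suffix list.

```python
import re

# Illustrative patterns; a production extractor validates matches and handles
# defanged text such as "example[.]com" before running them.
PATTERNS = {
    "cve": re.compile(r"\bCVE-\d{4}-\d{4,}\b", re.IGNORECASE),
    "ipv4": re.compile(
        r"\b(?:(?:25[0-5]|2[0-4]\d|1?\d?\d)\.){3}(?:25[0-5]|2[0-4]\d|1?\d?\d)\b"),
    "sha256": re.compile(r"\b[a-f0-9]{64}\b", re.IGNORECASE),
    "domain": re.compile(r"\b(?:[a-z0-9-]+\.)+[a-z]{2,}\b", re.IGNORECASE),
}


def extract_entities(text: str) -> dict[str, set[str]]:
    """Return every match per entity type, normalized to a canonical case."""
    found: dict[str, set[str]] = {}
    for kind, pattern in PATTERNS.items():
        matches = {m.group(0) for m in pattern.finditer(text)}
        found[kind] = {m.upper() for m in matches} if kind == "cve" else {m.lower() for m in matches}
    return found


report = ("The actor exploited cve-0000-1234, called back to 203.0.113.10, "
          "and staged payloads on update-check.example.")
entities = extract_entities(report)
```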

Language Models and Analyst Assistance

Commercial or Open-Source LLMs: APIs such as OpenAI or open-source models like Llama and Mistral can power analyst-facing features. Common use cases include summarizing reports, generating investigation narratives, and enabling natural-language queries over intelligence data.

4. Integration and Actionability Layer

Intelligence only delivers value when it integrates directly into security workflows.

Threat Intelligence Standards

- MISP: Enables structured sharing and ingestion of threat intelligence with external communities and partners.

- STIX and TAXII: Required for interoperability. The platform must publish intelligence as STIX objects and expose TAXII services so downstream security tools can consume it seamlessly.
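For illustration, the sketch below emits a STIX 2.1 Indicator inside a bundle using only the standard library. It includes the properties STIX 2.1 requires for indicators (`type`, `spec_version`, `id`, `created`, `modified`, `pattern`, `pattern_type`, `valid_from`). The helper names are hypothetical, and a real platform would more likely use the OASIS `stix2` Python library for validation and serve bundles through a TAXII 2.1 collection.

```python
import json
import uuid
from datetime import datetime, timezone


def stix_timestamp(dt: datetime) -> str:
    # STIX 2.1 timestamps are RFC 3339 in UTC with a trailing "Z" (millisecond precision here).
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"


def ipv4_indicator(ip: str, name: str, confidence: int, now: datetime) -> dict:
    ts = stix_timestamp(now)
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": ts,
        "modified": ts,
        "name": name,
        "pattern": f"[ipv4-addr:value = '{ip}']",
        "pattern_type": "stix",
        "valid_from": ts,
        "confidence": confidence,
    }


def bundle(objects: list[dict]) -> str:
    return json.dumps({"type": "bundle", "id": f"bundle--{uuid.uuid4()}", "objects": objects},
                      indent=2)


now = datetime(2025, 1, 29, 12, 0, tzinfo=timezone.utc)
indicator = ipv4_indicator("203.0.113.10", "Example C2 server", 85, now)
payload = bundle([indicator])
```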

Security Tool Integrations

- SIEM and SOAR APIs: Platforms such as Splunk, Microsoft Sentinel, and Cortex XSOAR allow intelligence to enrich alerts and automate response workflows.

- EDR and Network Security APIs: Integrations with tools like CrowdStrike and Palo Alto Networks enable direct enforcement actions such as blocking indicators or isolating compromised hosts.

5. Cloud Infrastructure and DevOps Foundation

The platform must scale globally while remaining reliable and secure.

Cloud Infrastructure

AWS, GCP, or Azure: Provide the storage, compute, and networking needed for large data lakes, distributed processing, and ML training workloads.

Orchestration and Infrastructure Management

Kubernetes: Manages containerized services across ingestion, processing, AI inference, and user-facing applications. It enables horizontal scaling and fault isolation.

Terraform: Used to define infrastructure as code. This ensures environments are reproducible, auditable, and consistent across development, staging, and production.

Conclusion

Building an attack intelligence tool like Recorded Future is not a simple software effort. It is a living intelligence system that must continuously ingest data, correlate signals, and apply AI models with human judgment. For enterprises, the opportunity can be significant, but execution will require strong architecture, deep security expertise, and a long-term platform mindset. You should plan for intelligence graphs, automation pipelines, and scalable integrations from day one. IdeaUsher can help you design, build, and scale such platforms so you can move faster without starting from scratch.

Looking to Develop an Attack-Intel Tool Like Recorded Future?

IdeaUsher can help you design an attack intelligence tool that mirrors how analysts actually think and work. We will architect graph-based correlation pipelines and dark web ingestion that can operate securely and reliably at scale.

With over 500,000 hours of hands-on engineering experience and a team that includes former FAANG and MAANG engineers, we specialize in building intelligence platforms that do more than aggregate data.

Why Build with IdeaUsher

- Intelligence Graph Engine: We design graph-driven systems that resolve entities, map relationships in real time, and assign dynamic risk scores so signals don’t live in silos.

- Dark Web and Underground Source Ingestion: Secure, scalable pipelines for collecting data from volatile and hard-to-reach sources, built to persist where others break.

- AI-Assisted Analysis Layer: Custom NLP pipelines, automated briefings, and predictive threat scoring that are trained on your data and aligned to your threat model.

- Security Stack Integrations: API-first architecture that connects cleanly with SIEMs, SOAR tools, ticketing systems, and existing workflows.

- Analyst-Centric Workflows: Interfaces designed for real analysts, including validation loops, enrichment controls, and alerting that support judgment instead of replacing it.

Work with ex-MAANG developers to build next-gen apps. Schedule your consultation now.

FAQs

A1: To create an attack intelligence tool, start by defining the threat scope and the users who will rely on it. Collect data from logs, sensors, and external feeds, and normalize it early. Then design a processing layer that can correlate events and score risk consistently. Over time, refine detection models and validate their outputs continuously.

A2: An attack intel tool should include real-time data ingestion and structured threat classification. It must support enrichment with IP, domain, and behavioral context. It should provide prioritization so analysts can act quickly and confidently. Reporting dashboards should be clear and actionable while remaining technically precise.

A3: An attack intelligence tool ingests signals from multiple sources and analyzes them programmatically. It may apply rules, heuristics or models to detect malicious patterns. The system then intelligently correlates indicators across time and infrastructure. Finally, it should surface alerts that analysts can trust and investigate effectively.

A4: Attack intelligence tools can generate revenue through subscription-based access for enterprises and security teams. They may offer tiered features based on data depth and response speed. Some providers license APIs to platforms that need embedded intelligence. Consulting, integration services, and premium threat reports can also provide steady revenue.