Artificial intelligence (AI) has emerged as a driving force behind innovation and efficiency across diverse fields, revolutionizing traditional processes and introducing software models capable of accomplishing a myriad of tasks.

Conversational AI, one of the many branches of AI, is a particularly interesting area that aims to improve human-machine communication. One well-known example of conversational AI technology is ChatGPT, which has won praise from many for making computer assistance feel like a natural, human conversation.

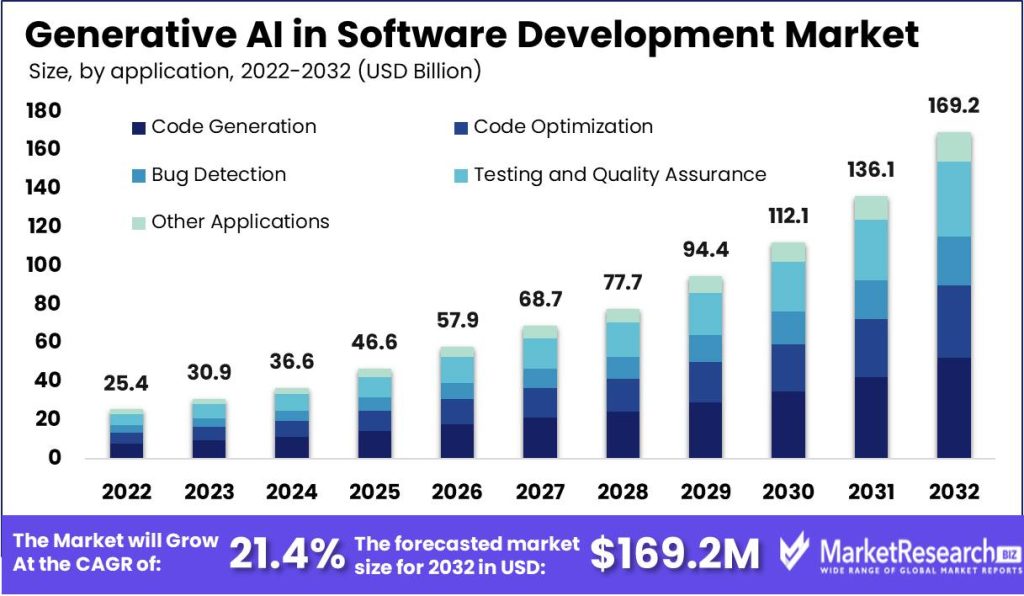

Source: MarketResearch.Biz

With its advanced coding capabilities and adept handling of diverse tasks, ChatGPT empowers developers to streamline workflows, save valuable time, and foster innovation in application development. From its ability to enhance user engagement to its efficiency in handling complex tasks, ChatGPT emerges as a game-changer in the realm of application development, paving the way for a more interactive and efficient future.

In this article, we will explore how this powerful AI-based chatbot can be harnessed for building applications and the transformative impact it brings to the development process.

What Is ChatGPT?

ChatGPT, an AI language model developed by OpenAI, is a cutting-edge technology built upon the foundation of Generative Pre-trained Transformer (GPT) architecture. This model is adept at generating human-like text by leveraging its generative capabilities, meaning it can create contextually relevant content based on the input it receives. The pre-training aspect involves exposing the model to an extensive and diverse dataset, allowing it to grasp intricate linguistic patterns and nuances from various sources. The Transformer architecture, which forms the backbone of ChatGPT, facilitates parallel computation and utilizes self-attention mechanisms, enabling the model to handle complex language tasks effectively.

The evolution of ChatGPT began with the introduction of GPT-1 in 2018, followed by substantial improvements with GPT-2 in 2019 and a paradigm-shifting advancement with GPT-3 in 2020. ChatGPT, specifically based on the GPT-3.5 version, was officially launched in November 2022. This version marked a significant milestone in natural language processing, showcasing the model’s prowess in delivering contextually accurate responses, making it a valuable tool across various industries.

ChatGPT’s versatility is evident in its wide range of applications, from answering queries to aiding in content creation, language translation, and programming assistance. Its training involves a meticulous fine-tuning process by human AI trainers, incorporating reinforcement learning and feedback loops to continually enhance its performance. The recent release of GPT-4 represents a further leap forward, with improvements in performance, scalability, and overall capabilities, underscoring the rapid progress in generative AI research.

The impact of ChatGPT extends beyond its technological advancements, highlighting the transformative potential of AI in reshaping communication, problem-solving, and information processing in both everyday life and professional domains. As the capabilities of language models like ChatGPT continue to evolve, they open new possibilities for innovation and efficiency in diverse fields.

The core of ChatGPT is captured in its acronym: GPT stands for Generative Pre-trained Transformer, and each word names one of its fundamental characteristics:

I. Generative

This fundamental attribute underscores the innate ability of GPT models to produce novel, contextually relevant content. With a remarkable capacity for creativity, these models excel at crafting text that closely mirrors human conversation. Their proficiency extends to generating responses that not only align with the given context but also exhibit a coherence reminiscent of natural language, contributing to a more human-like interaction.
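To make the generative step more concrete: GPT models produce text one token at a time, turning a score (logit) for every candidate token into probabilities and then sampling the next token. The Kotlin sketch below shows only that final step, under simplifying assumptions: the vocabulary and logits are hand-picked, hypothetical values rather than real model output, and `temperature` plays the same role as the sampling parameter of that name in the OpenAI API (lower values make the output more predictable).

```kotlin
import kotlin.math.exp
import kotlin.random.Random

// Converts raw scores (logits) into probabilities. Dividing by the temperature
// first sharpens the distribution (T < 1) or flattens it (T > 1).
fun softmax(logits: DoubleArray, temperature: Double = 1.0): DoubleArray {
    require(temperature > 0.0) { "temperature must be positive" }
    val scaled = logits.map { it / temperature }
    val maxLogit = scaled.maxOrNull() ?: return DoubleArray(0)
    // Subtracting the largest value keeps exp() from overflowing.
    val exps = scaled.map { exp(it - maxLogit) }
    val total = exps.sum()
    return exps.map { it / total }.toDoubleArray()
}

// Draws one index at random, weighted by the given probabilities.
fun sampleIndex(probs: DoubleArray, random: Random): Int {
    var remaining = random.nextDouble()
    for (i in probs.indices) {
        remaining -= probs[i]
        if (remaining < 0.0) return i
    }
    return probs.lastIndex // guards against floating-point rounding
}

// Hypothetical vocabulary and logits; a real model scores tens of thousands of tokens.
fun sampleNextWord(random: Random, temperature: Double): String {
    val vocabulary = listOf("news", "weather", "sports", "music")
    val logits = doubleArrayOf(2.0, 1.0, 0.5, 0.1)
    return vocabulary[sampleIndex(softmax(logits, temperature), random)]
}
```

Repeating this step, appending each sampled token to the input, is what lets the model generate whole sentences.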

II. Pre-trained

The “Pre-trained” aspect signifies a pivotal phase in the development of GPT models. Prior to their deployment, these models undergo training using an extensive and diverse corpus of text data sourced from a myriad of channels. This inclusive linguistic training equips them with a nuanced understanding of intricate patterns, diverse contexts, and a wealth of factual information. This pre-training serves as the bedrock, laying a robust foundation that empowers the models to engage in high-quality text generation across a spectrum of applications.

III. Transformer

At the architectural core of GPT models lies the Transformer, a sophisticated framework that underpins their functionality. Drawing on Transformer architecture, these models leverage self-attention mechanisms and parallel computation to efficiently navigate complex language tasks. The Transformer architecture is pivotal in enabling GPT models to generate text with an exceptional level of contextual accuracy. This strategic combination of self-attention and parallel computation enhances the models’ ability to capture and understand intricate linguistic nuances, contributing to the coherent and contextually relevant text they produce.
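The self-attention mechanism itself is compact enough to sketch. The function below is a minimal, single-head version of scaled dot-product attention, softmax(QKᵀ/√d)·V, with the causal mask that GPT-style models use so that each position only attends to itself and earlier positions. It assumes the queries, keys, and values are already computed; real models derive them from learned projections, run many attention heads in parallel, and work with far larger matrices.

```kotlin
import kotlin.math.exp
import kotlin.math.sqrt

// Dot product of two equal-length vectors.
fun dot(a: DoubleArray, b: DoubleArray): Double {
    var sum = 0.0
    for (i in a.indices) sum += a[i] * b[i]
    return sum
}

/**
 * Single-head scaled dot-product attention:
 * output[i] = sum over j of softmax_j(q_i · k_j / sqrt(d)) * v_j.
 * With causal = true, position i only attends to positions j <= i.
 */
fun attention(
    queries: List<DoubleArray>,
    keys: List<DoubleArray>,
    values: List<DoubleArray>,
    causal: Boolean = true,
): List<DoubleArray> {
    val scale = 1.0 / sqrt(queries.first().size.toDouble())
    return queries.mapIndexed { i, q ->
        val visible = if (causal) 0..i else keys.indices
        // Attention scores for every visible position, then a stable softmax.
        val scores = visible.map { j -> dot(q, keys[j]) * scale }
        val maxScore = scores.maxOrNull() ?: 0.0
        val weights = scores.map { exp(it - maxScore) }
        val total = weights.sum()
        // Weighted sum of the value vectors.
        val output = DoubleArray(values.first().size)
        visible.forEachIndexed { n, j ->
            val w = weights[n] / total
            for (c in output.indices) output[c] += w * values[j][c]
        }
        output
    }
}
```

Because every row of scores can be computed independently, this is also where the Transformer's parallelism comes from.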

How Does ChatGPT Work?

ChatGPT is an advanced Large Language Model (LLM) that operates in the field of Natural Language Processing (NLP). It builds upon the GPT-3 model and addresses some of its limitations. LLMs like GPT-3 are designed to understand and generate human-like text based on the patterns and relationships learned from vast amounts of textual data.

However, GPT-3 may produce outputs that are unhelpful, hallucinatory, lack interpretability, or contain biased content. ChatGPT improves upon this by employing techniques such as fine-tuning, Reinforcement Learning from Human Feedback (RLHF), embeddings, reward modeling, and Proximal Policy Optimization (PPO). These methods enhance the model’s ability to generate contextually relevant and accurate responses while mitigating issues associated with standard LLMs.

I. Embeddings

Embeddings are a crucial component of ChatGPT’s functioning, playing a vital role in both its pre-training and response generation phases. These embeddings serve as vector representations of words, phrases, or sentences, capturing the semantic and syntactic relationships within language. In the pre-training phase, ChatGPT learns embeddings from an extensive text corpus, allowing it to grasp contextual information and relationships in a high-dimensional space.

When given a prompt, ChatGPT converts its tokens into these learned embeddings, and its Transformer layers use them to comprehend the context and connections between words. Building on these representations, the model generates a response that is contextually relevant and coherent, one token at a time. The use of embeddings enables ChatGPT to produce appropriate responses across a wide range of prompts, showcasing its ability to generalize knowledge to new, unseen inputs. This is possible because the embeddings encapsulate the inherent structure and patterns in language, helping the model adapt to diverse linguistic contexts.
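Closeness between embeddings is usually measured with cosine similarity. This sketch uses hypothetical three-dimensional vectors invented for illustration (real embeddings have hundreds or thousands of dimensions) to show how words with related meanings end up near each other in the vector space.

```kotlin
import kotlin.math.sqrt

// Cosine similarity: 1.0 for vectors pointing the same way, 0.0 for orthogonal ones.
fun cosineSimilarity(a: DoubleArray, b: DoubleArray): Double {
    require(a.size == b.size) { "vectors must have the same dimension" }
    var dot = 0.0
    var normA = 0.0
    var normB = 0.0
    for (i in a.indices) {
        dot += a[i] * b[i]
        normA += a[i] * a[i]
        normB += b[i] * b[i]
    }
    return dot / (sqrt(normA) * sqrt(normB))
}

// Hypothetical, hand-made embeddings for illustration only.
val toyEmbeddings = mapOf(
    "king" to doubleArrayOf(0.9, 0.8, 0.1),
    "queen" to doubleArrayOf(0.85, 0.82, 0.15),
    "banana" to doubleArrayOf(0.1, 0.05, 0.95),
)

// Returns the other word whose embedding is most similar to the given word's.
fun nearestNeighbor(word: String): String? {
    val target = toyEmbeddings[word] ?: return null
    return toyEmbeddings.filterKeys { it != word }
        .maxByOrNull { (_, vector) -> cosineSimilarity(target, vector) }
        ?.key
}
```

With these toy values, `nearestNeighbor("king")` picks "queen" rather than "banana".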

II. Fine Tuning

During fine-tuning, ChatGPT is trained on a curated dataset consisting of input-output pairs generated by human labelers. These pairs serve as examples of desired model behavior. The model’s parameters are adjusted to align more closely with the task at hand. Reinforcement Learning from Human Feedback (RLHF) is employed during fine-tuning to minimize harmful or biased outputs. RLHF involves supervised fine-tuning, reward modeling, and Proximal Policy Optimization (PPO) to optimize the model based on human preferences.

The detailed stages of fine-tuning include:

1. Supervised Fine Tuning (SFT)

The first step involves training the GPT-3 model on a specific task using a curated dataset. This dataset is created by human labelers who provide input-output pairs based on real user queries from the OpenAI API. The goal is to teach the model to generate responses that align with user expectations.

The dataset is designed for diversity, with a cap on prompts per user ID and exclusion of prompts containing Personally Identifiable Information (PII) for privacy. Contractors also generate sample prompts to augment categories lacking substantial real data. The prompts cover various types, including plain queries, few-shot instructions, and user-based prompts.

The key challenge for labelers is to decipher the user’s intended instruction when formulating responses. This phase results in the creation of a Supervised Fine Tuning (SFT) model, also known as GPT-3.5, which forms the basis for subsequent refinement.

2. Reward Model

After the SFT model is trained, it starts generating responses that are more aligned with user prompts. The reward model is introduced to further refine the model’s behavior using Reinforcement Learning (RL). This model takes sequences of prompts and responses as input and outputs a scalar reward value.

Labelers are presented with multiple outputs from the SFT model for a single input prompt and are tasked with ranking them in order of quality. This ranking is used to train the reward model. To prevent overfitting, each group of rankings is treated as a single batch data point when building the model, enhancing generalization.
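The ranking step can be expressed as a loss function. In OpenAI's InstructGPT paper, which describes this approach, every pair of responses from one ranking contributes a term −log σ(r_better − r_worse), and all pairs from the same prompt are averaged together as one data point. The sketch below assumes the reward scores have already been produced by the reward model; any scores passed to it are illustrative.

```kotlin
import kotlin.math.exp
import kotlin.math.ln

fun sigmoid(z: Double): Double = 1.0 / (1.0 + exp(-z))

/**
 * Pairwise ranking loss for one prompt.
 * rewardsInRankOrder holds the reward model's scores for K responses, sorted
 * from the labelers' best-ranked to worst-ranked response. All K*(K-1)/2 pairs
 * are averaged, so the whole ranking counts as a single data point.
 */
fun rankingLoss(rewardsInRankOrder: List<Double>): Double {
    val k = rewardsInRankOrder.size
    require(k >= 2) { "need at least two ranked responses" }
    var total = 0.0
    var pairs = 0
    for (better in 0 until k) {
        for (worse in better + 1 until k) {
            val margin = rewardsInRankOrder[better] - rewardsInRankOrder[worse]
            // Small when the better response already scores higher.
            total += -ln(sigmoid(margin))
            pairs++
        }
    }
    return total / pairs
}
```

Minimizing this loss pushes the reward model to score the responses labelers preferred above the ones they ranked lower.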

3. Reinforcement Learning (RL) Model

The final phase involves applying Reinforcement Learning to continue refining the model. In this step, the model is given a random prompt, and it responds based on the policy learned during the SFT step. The reward model, developed in the previous stage, assigns a reward to the prompt-response pair.

The model uses this reward to adjust its policy, optimizing it to generate responses that maximize rewards. Although steps two and three can be repeated iteratively for further refinement, extensive iteration has not been widely implemented in practice. The goal is to ensure that the model produces outputs that not only align with user prompts but also meet the desired quality criteria based on human preferences.
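The policy update in this step typically uses Proximal Policy Optimization (PPO). The sketch below shows two ingredients in simplified form: a reward that subtracts a KL-style penalty so the policy does not drift too far from the SFT model, and PPO's clipped surrogate objective for a single sample. Estimating the advantage (usually with a learned value function), batching, and gradient updates are omitted, and the `beta` and `epsilon` values are assumptions for illustration.

```kotlin
import kotlin.math.exp
import kotlin.math.min

/**
 * Reward used during RL fine-tuning: the reward model's score minus a penalty
 * proportional to how much more likely the current policy makes the response
 * than the SFT model does (both given as log-probabilities).
 */
fun penalizedReward(rewardModelScore: Double, logProbPolicy: Double, logProbSft: Double, beta: Double): Double =
    rewardModelScore - beta * (logProbPolicy - logProbSft)

/**
 * PPO's clipped surrogate objective for one sample (to be maximized).
 * ratio = pi_new / pi_old; clipping it to [1 - epsilon, 1 + epsilon]
 * keeps any single update from changing the policy too much.
 */
fun clippedSurrogate(logProbNew: Double, logProbOld: Double, advantage: Double, epsilon: Double = 0.2): Double {
    val ratio = exp(logProbNew - logProbOld)
    val clippedRatio = ratio.coerceIn(1.0 - epsilon, 1.0 + epsilon)
    return min(ratio * advantage, clippedRatio * advantage)
}
```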

This fine-tuning process enables ChatGPT to adapt its general language skills acquired during pretraining to specific tasks or domains, providing more accurate and contextually appropriate responses.

ChatGPT Key Features

ChatGPT possesses several key features that distinguish it as a potent language model, setting it apart from its predecessors and enhancing its efficiency across various applications.

1. Understanding Human Language

ChatGPT stands out for its sophisticated natural language understanding, surpassing mere word recognition. Its foundation as a large language model enables it to comprehend complex language structures, including grammar, syntax, and semantics. This proficiency extends to grasping contextual nuances, cultural references, metaphors, analogies, and even humor. This deep understanding allows ChatGPT to interpret a wide range of inputs, responding in a manner that mirrors human interaction, making it a versatile tool for effective communication.

2. Contextual Inquiries and Clarifications

In scenarios demanding clarity, ChatGPT exhibits an interactive and inquisitive approach by seeking contextual clarifications. Similar to how humans naturally seek more details for better understanding, the model prompts users for additional information when queries lack clarity, contributing to more meaningful and accurate responses.

ChatGPT not only comprehends directives but also provides guidance on executing specific tasks. This practical utility is especially valuable in scenarios where users seek step-by-step instructions or assistance in completing actions, positioning the model as a helpful and interactive tool for task-oriented conversations.

3. Context Management Capability

A pivotal feature of ChatGPT is its ability to maintain context from previous exchanges. This capability ensures that responses remain relevant and coherent within the ongoing conversation. GPT-3 had a context window of 2,048 tokens, while GPT-4, which is available in ChatGPT, was released in 8,192- and 32,768-token versions; OpenAI described the larger version as able to handle over 25,000 words of text. This expanded contextual awareness enables ChatGPT to excel in prolonged conversations, maintaining a coherent narrative and enhancing the overall user experience.
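For developers calling the model through an API, context limits mean long conversations have to be trimmed before each request. A minimal sketch, assuming an OpenAI-style list of role/content messages and a rough heuristic of about four characters per token (a real app should count tokens with the model's own tokenizer): it keeps the system message and drops the oldest turns until the history fits the budget. `ChatMessage`, `estimateTokens`, and `trimHistory` are hypothetical names.

```kotlin
data class ChatMessage(val role: String, val content: String)

// Rough heuristic: about 4 characters of English text per token.
fun estimateTokens(text: String): Int = (text.length + 3) / 4

/**
 * Keeps the leading system message (if any) plus as many of the most recent
 * messages as fit in maxTokens, dropping the oldest turns first.
 */
fun trimHistory(messages: List<ChatMessage>, maxTokens: Int): List<ChatMessage> {
    val system = messages.firstOrNull()?.takeIf { it.role == "system" }
    var budget = maxTokens - (system?.let { estimateTokens(it.content) } ?: 0)
    val rest = if (system != null) messages.drop(1) else messages
    val kept = ArrayDeque<ChatMessage>()
    // Walk backwards from the newest message until the budget runs out.
    for (message in rest.asReversed()) {
        val cost = estimateTokens(message.content)
        if (cost > budget) break
        kept.addFirst(message)
        budget -= cost
    }
    return listOfNotNull(system) + kept
}
```

Dropping whole turns from the oldest end keeps the most recent exchanges intact, which is usually what matters most for coherence.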

4. Comprehensive Knowledge Across Domains

ChatGPT’s extensive training on a diverse dataset gives it broad domain knowledge. It can engage in conversations on a wide array of topics, ranging from science and technology to arts, humanities, social sciences, and beyond. While the depth of its understanding varies with the complexity of the subject, its range can make talking to ChatGPT feel akin to conversing with a knowledgeable expert.

5. Scalability and Adaptability

ChatGPT exhibits remarkable scalability and versatility, attributes derived from its architecture and training methodologies. The large scale of GPT-4-class models helps ChatGPT capture intricate language patterns (OpenAI has not disclosed GPT-4’s parameter count), while extensive training data, efficient management of computational resources, and fine-tuning contribute to its adaptability across diverse use cases. Its scalability also depends on infrastructure and deployment optimizations: with the appropriate hardware and software setup, it can serve a varying volume of user interactions, which makes it a highly valuable and versatile language model.

6. Dynamic Multi-Turn Conversations

ChatGPT excels in dynamic multi-turn conversations by retaining context and coherence across multiple exchanges. Its seamless navigation through complex dialogues showcases its proficiency in understanding and responding contextually. This adaptability proves invaluable in applications like customer support, where sustained interaction is vital for user satisfaction. ChatGPT’s ability to draw on earlier turns within the same conversation enables it to engage users in meaningful and coherent exchanges, contributing to a more satisfying user experience.

7. User Intent Recognition

A standout feature of ChatGPT is its exceptional capability to discern user intent. It goes beyond merely understanding the surface-level meaning of queries or directives, delving into the underlying purpose behind them. This advanced comprehension allows ChatGPT to provide more accurate and contextually appropriate responses, enhancing the overall quality of user interactions. Whether in casual conversations or more complex scenarios, the model’s ability to recognize user intent contributes significantly to its effectiveness and versatility.

8. Controlled Content Generation

Addressing concerns about content generation, ChatGPT incorporates mechanisms for controlled text generation. This feature allows users to set specific guidelines or constraints, ensuring that the generated content aligns with ethical and user-defined standards. By providing users with the ability to control the output, ChatGPT becomes a versatile tool applicable in professional and sensitive contexts. This emphasis on controlled content generation enhances the model’s utility, making it a reliable solution for diverse content requirements and ensuring responsible AI use.

9. Multilingual Support

ChatGPT showcases proficiency in multiple languages, enabling seamless engagement and response generation in diverse linguistic contexts. Its versatility in understanding and generating content across languages makes it a globally applicable conversational agent, breaking down language barriers for a more inclusive user experience.

10. Summarization Skills

With effective summarization skills, ChatGPT excels in condensing complex information and distilling key points from lengthy passages. This capability enhances the model’s utility in tasks requiring information synthesis and presentation, offering users concise and relevant summaries for better understanding.

Factors To Consider While Building An App With ChatGPT

When developing an application with ChatGPT or similar generative AI models, it’s crucial to navigate carefully, considering certain limitations inherent in these technologies. Here are key factors to consider:

1. Mitigating Bias and Toxicity

As the use of generative AI models like ChatGPT becomes more prevalent, addressing biases and toxic content is paramount. Training data often reflects the biases present on the internet, and without careful curation, these biases may manifest in the AI-generated content. Responsible AI practices involve proactive measures, such as filtering training datasets to remove harmful content and implementing watchdog models for real-time monitoring. Enterprises can contribute to ethical AI development by leveraging first-party data to fine-tune models, tailoring outputs to meet specific use cases while minimizing biases. Emphasizing responsible AI practices ensures that the potential risks associated with biased and toxic content are mitigated, allowing the full benefits of generative AI to be realized.

2. Enhancing Factual Accuracy

The phenomenon of hallucination in AI models, where generated content may be factually inaccurate, poses challenges to reliability. OpenAI and other developers are actively working on addressing this issue through techniques like data augmentation, adversarial training, improved model architectures, and human evaluation. For app developers using ChatGPT, adopting similar measures is crucial to enhance the accuracy and reliability of model outputs. Ensuring factual correctness not only builds user trust in the application but also promotes responsible AI deployment by minimizing the dissemination of misinformation.

3. Safeguarding Against Data Leakage

Safeguarding against the inadvertent disclosure of sensitive information is a critical aspect of deploying AI models. Establishing clear policies that prohibit developers from entering confidential data into ChatGPT helps prevent the incorporation of such information into the model, reducing the risk of later public disclosure. Vigilance in protecting against potential risks associated with AI model usage, combined with proactive measures, is essential for upholding individual and organizational privacy and security.
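One practical safeguard is to redact obvious identifiers before a prompt leaves your app. This sketch masks email addresses and phone numbers using two simplified regular expressions; the patterns are assumptions for illustration, will miss many kinds of sensitive data, and can occasionally flag harmless number sequences, so treat them as a first line of defense rather than a complete PII filter.

```kotlin
// Simplified email pattern, for illustration only.
val EMAIL_PATTERN = Regex("[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\\.[A-Za-z]{2,}")

// Optional "+", then at least 9 characters of digits, spaces, or hyphens,
// starting and ending with a digit.
val PHONE_PATTERN = Regex("\\+?\\d[\\d -]{7,}\\d")

// Replaces detected emails and phone numbers with placeholders before the
// text is sent to an external model.
fun redactPii(prompt: String): String =
    prompt.replace(EMAIL_PATTERN, "[EMAIL]").replace(PHONE_PATTERN, "[PHONE]")
```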

4. Enabling External Connectivity

The evolution of generative models includes the exciting potential for integration with external systems and information sources. Future models are expected to go beyond static responses by identifying the need to consult external databases or trigger actions in external systems. This advancement transforms generative models into fully connected conversational interfaces, unlocking a new realm of possibilities for dynamic and seamless user experiences. The integration of AI with external systems promises real-time, relevant information, and insights, paving the way for a new generation of powerful and impactful applications that cater to diverse user needs.
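This kind of connectivity is typically built as a dispatch step: the model indicates which tool it wants and with what arguments, the app runs the matching function, and the result is sent back to the model. The sketch below covers only the app-side dispatch; `ToolCall`, `ToolRegistry`, and any tool names are hypothetical, and parsing the model's actual output format is left out.

```kotlin
// A request from the model to run an external action. In a real integration
// this would be parsed from the model's structured output.
data class ToolCall(val name: String, val arguments: Map<String, String>)

// Hypothetical registry mapping tool names to local functions.
class ToolRegistry {
    private val tools = mutableMapOf<String, (Map<String, String>) -> String>()

    fun register(name: String, handler: (Map<String, String>) -> String) {
        tools[name] = handler
    }

    // Runs the requested tool, or returns an error string that the app can
    // pass back to the model instead of crashing on an unknown tool name.
    fun dispatch(call: ToolCall): String =
        tools[call.name]?.invoke(call.arguments) ?: "Error: unknown tool '${call.name}'"
}
```

Returning errors as text rather than throwing lets the model see what went wrong and try a different request.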

5. Handling Ethical Considerations

Beyond addressing bias and toxicity, navigating ethical considerations is crucial. Developers should prioritize transparency in AI applications, making users aware of the technology’s limitations and potential biases. Additionally, incorporating user feedback loops can help refine models over time, ensuring ongoing ethical standards are met. Ethical guidelines and governance frameworks should be established to guide the responsible development and deployment of AI applications.

6. Ensuring User Safety

Ensuring the safety of users interacting with AI applications is paramount. Implementing robust user verification mechanisms can prevent malicious use, safeguarding against potential harm. Developers must also consider potential misuse scenarios and incorporate features that allow users to report inappropriate content or behavior, fostering a secure and trustworthy user experience.

7. Optimizing for Resource Efficiency

Generative AI models can be computationally intensive. Developers need to consider resource efficiency, especially in resource-constrained environments such as mobile applications. Implementing model optimization techniques, such as model quantization and efficient inference strategies, can help strike a balance between performance and resource consumption, ensuring a smoother user experience.
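Quantization is one of the simpler optimizations to illustrate. This sketch applies symmetric, per-tensor 8-bit quantization to a small weight array: each value is divided by a shared scale (the largest absolute value divided by 127) and rounded to a byte, cutting storage to a quarter of 32-bit floats at the cost of a small rounding error. Production toolchains use more elaborate schemes (per-channel scales, calibration data), so treat this as a conceptual example only.

```kotlin
import kotlin.math.abs
import kotlin.math.roundToInt

// Quantized tensor: 8-bit values plus one shared scale factor.
class QuantizedTensor(val values: ByteArray, val scale: Float)

/**
 * Symmetric per-tensor int8 quantization: q = round(x / scale),
 * with scale = max|x| / 127, so values map into [-127, 127].
 */
fun quantize(weights: FloatArray): QuantizedTensor {
    val maxAbs = weights.maxOfOrNull { abs(it) } ?: 0f
    val scale = if (maxAbs == 0f) 1f else maxAbs / 127f
    val q = ByteArray(weights.size) { i ->
        (weights[i] / scale).roundToInt().coerceIn(-127, 127).toByte()
    }
    return QuantizedTensor(q, scale)
}

// Approximate reconstruction: x ≈ q * scale.
fun dequantize(t: QuantizedTensor): FloatArray =
    FloatArray(t.values.size) { i -> t.values[i] * t.scale }
```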

8. Providing Explainability

The lack of transparency in AI models can lead to challenges in understanding how decisions are made. Developers should focus on incorporating explainability features, enabling users to comprehend the rationale behind the AI-generated responses. This not only enhances user trust but also facilitates accountability in AI applications, aligning with the principles of responsible and transparent AI development.

Advantages Of Using ChatGPT For App Development

Artificial intelligence and its applications in development tools have become pivotal in advancing contemporary app development. These tools provide a range of capabilities, allowing businesses across various industries to achieve their objectives more efficiently. Here are the benefits worth noting:

1. Efficient Code Writing

ChatGPT can significantly expedite the code writing process for app development. Developers can interact with the model to generate code snippets, functions, or even entire modules in various programming languages. This is particularly advantageous for both experienced developers looking to speed up their workflow and beginners who can learn from the generated code. By leveraging ChatGPT’s natural language processing capabilities, developers can articulate their requirements and quickly receive relevant code, reducing the time spent on manual coding.

2. Enhanced Efficiency and Productivity

The efficiency gains from using ChatGPT in app development extend beyond code generation. The tool can assist in automating repetitive tasks, generating documentation, and providing quick solutions to common problems. Developers can focus more on high-level design and critical problem-solving, leading to increased productivity. The streamlined workflow allows teams to allocate resources effectively, ensuring that time is spent on tasks that contribute most to the overall success of the project.

3. Code Debugging and Optimization

One of the standout features of ChatGPT is its ability to assist in debugging code. Developers can present their code to the model, have it identify errors, and receive suggestions for fixes. This not only accelerates the debugging process but also helps in writing cleaner and more efficient code. ChatGPT’s recommendations may include best practices, performance optimizations, and adherence to coding standards, resulting in a more robust and reliable application.

4. Valuable Educational Resource

ChatGPT can act as a valuable educational resource for developers. It can provide explanations, examples, and clarifications on various programming concepts, helping both beginners and experienced developers expand their knowledge base. This knowledge transfer aspect is particularly beneficial in a collaborative development environment where team members can share insights, ask questions, and learn from each other through interactions with ChatGPT.

5. Versatility Across Development Stages

ChatGPT’s adaptability makes it a versatile tool across different stages of app development. From conceptualizing and planning to coding and debugging, developers can integrate ChatGPT into various phases of their workflow. Whether it’s brainstorming ideas, drafting pseudocode, or refining the final implementation, the model can offer valuable assistance. This versatility makes ChatGPT a comprehensive solution for developers throughout the entire app development lifecycle.

How To Build An App With ChatGPT?

In this experiment, we aim to explore the capabilities of ChatGPT in the context of app development, adhering to specific guidelines for a comprehensive examination. Our approach involves a hands-off strategy, with no manual coding involved; instead, we will act as intermediaries, translating ChatGPT’s instructions into practical implementation by solely relying on the model’s generated code.

The entire coding process will consist of copying and pasting the code provided by ChatGPT, ensuring that the development journey strictly follows the AI model’s guidance. From configuring settings in Android Studio to obtaining API keys, every step will be executed based on ChatGPT’s instructions.

In the event of encountering bugs or errors during development, our bug-handling strategy will involve seeking solutions directly from ChatGPT. We will rely on the chatbot to provide guidance and implement the suggested fixes.

The project goals are clear: the code generated by ChatGPT should compile without errors and result in a functional application that runs on an Android device.

Now, let’s explore the possibilities of OpenAI’s ChatGPT for application development. The task at hand is to build, purely through prompts, a simple yet effective Android application that displays the latest news using an appropriate news API.

I. Prompt:

– I want to write you a code where I am interested in developing an Android application that displays the latest news, utilizing the news API. Could you guide me ?

ChatGPT Response:

Certainly! Developing an Android application that displays the latest news using a news API involves several steps. I’ll guide you through the process, and provide a simple example using Kotlin and the Retrofit library for making API requests.

Step 1: Set up your project

Open Android Studio and create a new project.

Choose an empty activity template.

Set up your project with a suitable package name, language (Kotlin), and other necessary configurations.

Step 2: Add dependencies

Open your app-level build.gradle file and add the following dependencies:

```groovy
// Add these lines inside the existing dependencies { } block
implementation 'com.squareup.retrofit2:retrofit:2.9.0'
implementation 'com.squareup.retrofit2:converter-gson:2.9.0'
implementation 'com.google.code.gson:gson:2.8.8'
implementation 'com.squareup.okhttp3:okhttp:4.9.0'
```

Sync your project to make sure the dependencies are downloaded.

Step 3: Create a data model

Create a data class to represent a news item. For example:

```kotlin
data class NewsItem(
    val title: String,
    val description: String?, // News API can return articles without a description
    val url: String,
    // Add other necessary fields
)
```

Step 4: Create a Retrofit interface

Create an interface to define the API requests. For example:

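```kotlin
import retrofit2.Call
import retrofit2.http.GET

interface NewsApiService {
    // The top-headlines endpoint needs a filter (here, country) and an API key.
    // Replace YOUR_NEWS_API_KEY with your own key; hard-coding it is only for this demo.
    @GET("v2/top-headlines?country=us&apiKey=YOUR_NEWS_API_KEY")
    fun getTopHeadlines(): Call<NewsResponse>
}
```

Step 5: Create a response model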

Create a response model to handle the JSON response from the API. For example:

```kotlin
data class NewsResponse(
    val articles: List<NewsItem>
)
```

Step 6: Set up Retrofit

Create a Retrofit instance in your application class or a dedicated class:

```kotlin
import retrofit2.Retrofit
import retrofit2.converter.gson.GsonConverterFactory

object RetrofitClient {
    private const val BASE_URL = "https://newsapi.org/"

    val retrofit: Retrofit by lazy {
        Retrofit.Builder()
            .baseUrl(BASE_URL)
            .addConverterFactory(GsonConverterFactory.create())
            .build()
    }

    val newsApiService: NewsApiService by lazy {
        retrofit.create(NewsApiService::class.java)
    }
}
```

Step 7: Make API requests

Now, you can make API requests from your activity or fragment. For example:

```kotlin
import android.os.Bundle
import androidx.appcompat.app.AppCompatActivity
import retrofit2.Call
import retrofit2.Callback
import retrofit2.Response

// Declare NewsActivity in AndroidManifest.xml (or place this code in the template's
// MainActivity), and add <uses-permission android:name="android.permission.INTERNET" />
// to the manifest, or every network request will fail.
class NewsActivity : AppCompatActivity() {
    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_main)

        val call = RetrofitClient.newsApiService.getTopHeadlines()
        call.enqueue(object : Callback<NewsResponse> {
            override fun onResponse(call: Call<NewsResponse>, response: Response<NewsResponse>) {
                if (response.isSuccessful) {
                    val newsList = response.body()?.articles
                    // Handle the list of news items
                } else {
                    // Handle API error
                }
            }

            override fun onFailure(call: Call<NewsResponse>, t: Throwable) {
                // Handle network failure
            }
        })
    }
}
```

Step 8: Display the news

Finally, use the retrieved list of news items to display them in your app’s UI using RecyclerView or any other UI component.

Make sure to replace the placeholder URLs and API keys with your actual News API endpoint and key. Note that you need to sign up for a News API key on their website: https://newsapi.org/.

This is a basic example, and you may need to handle things like pagination, error handling, and UI enhancements based on your application’s requirements.
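As a supplement to this response (written outside the copy-and-paste experiment), here is a minimal sketch of the pagination arithmetic. It assumes News API’s documented `page` and `pageSize` query parameters and the `totalResults` field in its responses. In the Retrofit interface these values would normally be passed as `@Query` parameters; this standalone version just builds the URL string so it can run without Android, and `pageCount` and `topHeadlinesUrl` are hypothetical helper names.

```kotlin
import java.net.URLEncoder

// Number of pages needed to show totalResults items, pageSize at a time.
fun pageCount(totalResults: Int, pageSize: Int): Int {
    require(pageSize > 0) { "pageSize must be positive" }
    return (totalResults + pageSize - 1) / pageSize
}

// Builds a top-headlines URL for one page, URL-encoding each value.
fun topHeadlinesUrl(country: String, page: Int, pageSize: Int, apiKey: String): String {
    val params = linkedMapOf(
        "country" to country,
        "page" to page.toString(),
        "pageSize" to pageSize.toString(),
        "apiKey" to apiKey,
    )
    val query = params.entries.joinToString("&") { (key, value) ->
        "$key=${URLEncoder.encode(value, "UTF-8")}"
    }
    return "https://newsapi.org/v2/top-headlines?$query"
}
```

An app would typically request page 1 first, read `totalResults`, and load further pages as the user scrolls until `pageCount` is reached.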

II. Prompt:

– Provide me complete class with error handling

ChatGPT Response:

Certainly! Here’s a more complete example with error handling in the NewsActivity class:

```kotlin
import android.os.Bundle
import android.util.Log
import androidx.appcompat.app.AppCompatActivity
import androidx.recyclerview.widget.LinearLayoutManager
import androidx.recyclerview.widget.RecyclerView
import retrofit2.Call
import retrofit2.Callback
import retrofit2.Response

class NewsActivity : AppCompatActivity() {

    private lateinit var recyclerView: RecyclerView
    private lateinit var newsAdapter: NewsAdapter

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_main)

        recyclerView = findViewById(R.id.recyclerView)
        recyclerView.layoutManager = LinearLayoutManager(this)
        newsAdapter = NewsAdapter()
        recyclerView.adapter = newsAdapter

        fetchData()
    }

    private fun fetchData() {
        val call = RetrofitClient.newsApiService.getTopHeadlines()
        call.enqueue(object : Callback<NewsResponse> {
            override fun onResponse(call: Call<NewsResponse>, response: Response<NewsResponse>) {
                if (response.isSuccessful) {
                    val newsList = response.body()?.articles
                    newsList?.let {
                        newsAdapter.setData(it)
                    }
                } else {
                    handleApiError(response.code())
                }
            }

            override fun onFailure(call: Call<NewsResponse>, t: Throwable) {
                handleNetworkFailure(t)
            }
        })
    }

    private fun handleApiError(responseCode: Int) {
        Log.e(TAG, "API Error: $responseCode")
        // Handle different response codes accordingly
    }

    private fun handleNetworkFailure(t: Throwable) {
        Log.e(TAG, "Network Failure", t)
        // Handle network failure
    }

    companion object {
        private const val TAG = "NewsActivity"
    }
}
```

In this example, I’ve added a RecyclerView to display the news items. Also, I created a NewsAdapter class to handle the RecyclerView’s data.

Create a new Kotlin file named NewsAdapter:

```kotlin
import android.view.LayoutInflater
import android.view.View
import android.view.ViewGroup
import android.widget.TextView
import androidx.recyclerview.widget.RecyclerView
import com.yourpackage.model.NewsItem

class NewsAdapter : RecyclerView.Adapter<NewsAdapter.NewsViewHolder>() {

    private var newsList: List<NewsItem> = ArrayList()

    override fun onCreateViewHolder(parent: ViewGroup, viewType: Int): NewsViewHolder {
        val view = LayoutInflater.from(parent.context).inflate(R.layout.news_item, parent, false)
        return NewsViewHolder(view)
    }

    override fun onBindViewHolder(holder: NewsViewHolder, position: Int) {
        val newsItem = newsList[position]
        holder.titleTextView.text = newsItem.title
        holder.descriptionTextView.text = newsItem.description
        // Set other views accordingly
    }

    override fun getItemCount(): Int {
        return newsList.size
    }

    fun setData(newsList: List<NewsItem>) {
        this.newsList = newsList
        notifyDataSetChanged()
    }

    class NewsViewHolder(itemView: View) : RecyclerView.ViewHolder(itemView) {
        val titleTextView: TextView = itemView.findViewById(R.id.titleTextView)
        val descriptionTextView: TextView = itemView.findViewById(R.id.descriptionTextView)
        // Add other views accordingly
    }
}
```

Make sure to create a layout file named news_item.xml in your res/layout directory for the RecyclerView item layout.

Now, this example includes a simple RecyclerView setup, and it handles API response and network failure errors. Customize the error handling based on your application’s requirements.
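The `handleApiError` and `handleNetworkFailure` methods above only log errors. As a supplement outside the experiment, one way to turn failures into user-facing messages is sketched here. The status-code meanings follow the codes News API documents (400, 401, 429, and 500), while the message wording and the helper names are hypothetical; in the activity, these strings could be shown in a Toast or an error view.

```kotlin
import java.net.SocketTimeoutException
import java.net.UnknownHostException

// Maps HTTP status codes from onResponse to messages suitable for the UI.
fun apiErrorMessage(responseCode: Int): String = when (responseCode) {
    400 -> "The request was invalid. Please try again."
    401 -> "The News API key is missing or invalid."
    429 -> "Too many requests. Please wait and try again."
    in 500..599 -> "The news service is having problems. Please try again later."
    else -> "Unexpected error (HTTP $responseCode)."
}

// Network-level failures (no connection, timeouts) arrive in onFailure instead.
fun networkErrorMessage(t: Throwable): String = when (t) {
    is UnknownHostException -> "No internet connection."
    is SocketTimeoutException -> "The request timed out."
    else -> "Network error: ${t.message ?: t::class.java.simpleName}"
}
```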

Note:

If you face any challenges with the outlined steps, feel free to seek assistance from ChatGPT. For instance, if you’re unsure about how to start a new Android project in Android Studio, simply ask ChatGPT for detailed, step-by-step instructions, and it will guide you through the process.

Conclusion

Harnessing the capabilities of ChatGPT for application development represents an innovative and potent approach to software engineering. As illustrated in this article, the process is viable and can be remarkably efficient. With ChatGPT’s advanced natural language understanding, extensive domain knowledge, and contextual awareness, the potential for creating engaging, user-friendly, and highly functional applications is substantial.

What sets ChatGPT apart is its capacity to seamlessly guide developers through every stage, from initial planning to error handling, making it an invaluable tool for both experienced developers and newcomers. Regardless of your programming expertise, ChatGPT serves as a supportive companion, streamlining the development process and democratizing app building accessibility.

ChatGPT’s strength lies not only in helping construct applications but also in reshaping our approach to software development. By tapping into this artificial intelligence tool, developers can shorten their timelines, optimize code, and ultimately produce better applications.

Looking to Get an App Developed? We Can Help

Idea Usher offers valuable assistance for businesses seeking to incorporate ChatGPT-like solutions into their operations. With a rich portfolio, Idea Usher has successfully collaborated with over 500 clients, including large brands such as Gold’s Gym and Honda, as well as indie brands. The company specializes in developing hybrid apps, mobile applications, and custom websites, contributing significantly to the growth and success of its clients. Leveraging their experience with diverse projects, Idea Usher is well-equipped to understand the unique requirements of different businesses and deliver tailored solutions that align with their goals.


Contact Idea Usher and open the door to a world of possibilities, where the integration of AI solutions becomes a seamless and transformative experience for your business.


FAQ

Q. How do you create an app using ChatGPT?

A. Creating an app with ChatGPT involves integrating its capabilities through the OpenAI API. To begin, create an account on the OpenAI platform and generate your API key. Choose a programming language and set up your development environment, ensuring you have the necessary libraries or SDKs for making HTTP requests. Use your API key to authenticate requests, sending user inputs as prompts to the OpenAI API and receiving generated text as responses. Customize the integration based on your app’s requirements, experimenting with parameters like temperature and max tokens to control the model’s output. Implement security measures to protect API keys and ensure compliance with privacy regulations.
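As a rough illustration of these steps on the JVM, the sketch below builds (but does not send) a request to OpenAI’s Chat Completions endpoint using `java.net.http` (Java 11+). The model name, prompt, and parameter values are example assumptions, and the hand-written JSON escaping is deliberately minimal; a real project would use a JSON library and keep the API key on a server rather than inside a mobile app.

```kotlin
import java.net.URI
import java.net.http.HttpRequest

// Minimal JSON string encoding: quotes, backslashes, and control characters.
fun jsonString(s: String): String = buildString {
    append('"')
    for (c in s) {
        when {
            c == '"' -> append("\\\"")
            c == '\\' -> append("\\\\")
            c == '\n' -> append("\\n")
            c < ' ' -> append("\\u%04x".format(c.code))
            else -> append(c)
        }
    }
    append('"')
}

// Request body with one user message plus the temperature and max_tokens parameters.
fun chatRequestBody(prompt: String, model: String, temperature: Double, maxTokens: Int): String =
    "{\"model\":${jsonString(model)}," +
        "\"messages\":[{\"role\":\"user\",\"content\":${jsonString(prompt)}}]," +
        "\"temperature\":$temperature,\"max_tokens\":$maxTokens}"

// Builds an authenticated POST request; sending it is left to the caller.
fun buildChatRequest(apiKey: String, body: String): HttpRequest =
    HttpRequest.newBuilder(URI.create("https://api.openai.com/v1/chat/completions"))
        .header("Authorization", "Bearer $apiKey")
        .header("Content-Type", "application/json")
        .POST(HttpRequest.BodyPublishers.ofString(body))
        .build()
```

To send it, pass the request to `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`, reading the key from a secure source such as an environment variable rather than from source code.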

Q. What programming languages are compatible with building an app using ChatGPT?

A. Any programming language that can make HTTP requests will work. Common languages such as Python, JavaScript, Java, and others can be used to interact with the API and integrate ChatGPT into your application.